
Scientific Rigor and the Quest for Truth

- By Cheryl Sisk, PhD

- Featured in:

- Rigor and Reproducibility

Scientific rigor means implementing the highest standards and best practices of the scientific method and applying those to one’s research. It is all about discovering the truth.

Scientific rigor involves minimizing bias in subject selection and data analysis. It is about determining the appropriate sample size for your study so that you have sufficient statistical power to be confident that you are neither generating false positives nor missing real effects (false negatives). It’s about conducting research that has a good chance of being replicated in your own lab and in other laboratories.
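To make the link between sample size and power concrete, here is a minimal sketch of an a priori sample-size calculation for comparing two independent groups. It is not the author's procedure. It assumes a two-sided test, equal group sizes, and a standardized effect size (Cohen's d) chosen before the study begins. It uses the normal approximation n = 2 × (z_alpha + z_beta)² / d² rather than an exact t-test calculation, so dedicated power software may return a slightly larger number. The function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects needed per group for a two-sided,
    two-sample comparison of means (normal approximation).

    effect_size: assumed standardized difference between groups (Cohen's d).
    alpha: acceptable false-positive (Type I error) rate.
    power: desired probability of detecting a real effect
           (1 minus the false-negative rate).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    n = 2 * ((z_alpha + z_beta) / effect_size) ** 2
    return math.ceil(n)                 # round up: no fractional subjects


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):
        print(f"d = {d}: {n_per_group(d)} per group")
```

Under these assumptions, a medium effect (d = 0.5) needs about 63 subjects per group at 80% power. Halving the assumed effect size roughly quadruples the required sample, which is why an honest estimate of the expected effect matters at the planning stage.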

The idea is to figure out under what range of conditions a particular outcome is generated: the wider the range, the higher the likelihood that the outcome reflects a scientific truth. If results can be replicated in one’s own lab but cannot be reproduced in another lab, do not generalize well to other situations, or do not stand the test of time, the outcome might not be that important.

What’s at Stake

We like to think that our research is generalizable and robust, and we want to know that it is. If the research is not conducted with scientific rigor, it will be neither.

And if neuroscience research is not conducted with scientific rigor, then we waste a great deal of time, money, and energy pursuing outcomes that are not real. We also risk missing great insights because we didn’t conduct the research in a way that would allow us to detect them.

Blind analysis and randomization of subjects are particularly important for clinical applications because lives may depend on the outcomes.

We also want science to be objective and empirical. But we’ve known for a long time that when experimenters know which treatment group a patient, rat, or mouse is in, that knowledge can influence the way they look at or analyze the data. It’s not intentional, but it is experimenter bias. The same is true for patients: if they know they are in one group and not the other, bias is introduced, which is why it is critical to know how large the placebo effect is. Ultimately we are after the truth. If either the experimenter or the subject is not blind to the treatment group, that introduces bias that will cloud the truth.
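One common way to keep the experimenter blind is to assign subjects to groups at random and give the person collecting or scoring data only coded labels. Someone not involved in data collection holds the key that links codes to treatments. The sketch below illustrates that idea. The function name `blind_assign`, the group names, the subject IDs, and the use of a seeded shuffle with equal-sized groups are all illustrative assumptions, not a description of any particular lab's protocol.

```python
import random


def blind_assign(subject_ids, groups=("treatment", "control"), seed=None):
    """Randomly assign subjects to equal-sized groups.

    Returns (blinded_labels, key):
      blinded_labels maps each subject to an uninformative code ("A", "B", ...)
      that can be shown to the experimenter;
      key maps each code to the real group and should be held by someone
      not involved in data collection until analysis is complete.
    """
    subjects = list(subject_ids)
    if len(subjects) % len(groups) != 0:
        raise ValueError("number of subjects must divide evenly among groups")
    rng = random.Random(seed)  # seeded here only to make the example reproducible

    # Pair codes with real groups at random, so the codes reveal nothing.
    codes = [chr(ord("A") + i) for i in range(len(groups))]
    shuffled_groups = list(groups)
    rng.shuffle(shuffled_groups)
    key = dict(zip(codes, shuffled_groups))

    # Build a balanced list of codes, then shuffle it to randomize assignment.
    per_group = len(subjects) // len(groups)
    slots = [code for code in codes for _ in range(per_group)]
    rng.shuffle(slots)
    blinded_labels = dict(zip(subjects, slots))
    return blinded_labels, key


if __name__ == "__main__":
    labels, key = blind_assign([f"rat{i:02d}" for i in range(1, 9)], seed=42)
    print(labels)  # what the experimenter sees
    # `key` stays sealed until the data have been collected and scored
```

Separating the label list from the key is what keeps the experimenter blind. Shuffling a balanced list of codes, rather than flipping a coin for each subject, guarantees equal group sizes.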

The challenges are mainly around the time it takes to be careful and rigorous in research. PIs face many responsibilities, from teaching and administrative/committee work to administering grants, keeping the lab funded, and publishing papers. They are pushed and pulled in all directions. Ensuring scientific rigor takes time, and implementing its best practices will almost certainly increase the time to publication of one’s results.

My Lab’s Approach

Before any experiment is conducted, the student or postdoc has to write a plan of study so they think purposefully about their study in advance. The plan of study includes, among other points: rationale for the study, an answer to whether it is discovery science or hypothesis-driven (both have their place), sample size, determination of statistical power, detailed methods and procedures, and how data will be analyzed.

We also periodically read and discuss three papers that span more than a century of science. One is John Platt’s 1964 Science paper, “Strong Inference,” which discusses hypothesis testing and the importance of having alternative hypotheses and an experimental design intended to falsify (not support) your hypothesis. The second, written in 1897 by Thomas Chrowder Chamberlin, discusses the method of multiple working hypotheses. The third, a 2014 paper by Douglas Fudge, “50 Years of Platt’s Strong Inference,” revisits the principles outlined in Platt’s paper.

Students find it a challenging exercise to design experiments that would falsify their hypothesis given a particular outcome, and so do I. We have a lot of fun doing this.

Forward Look

Through the Training Modules to Enhance Data Reproducibility grant (I am a co-PI), SfN seeks to raise awareness in the neuroscience community about the importance of scientific rigor and its application to our discipline. If we are going to make strides that stand the test of time, it is essential.

The next generation of neuroscientists needs to be aware of these important points. Of course, awareness will make the discipline stronger. We also need to provide necessary training to conduct research in a rigorous way.

In addition, the relationship between young investigators and trainees and their mentors is really important in learning about scientific rigor. It is my responsibility as a mentor and trainer to raise young neuroscientists in a way that makes scientific rigor and strong inference habits of mind and practice.

It is also important for young researchers just learning the ropes to seek training in this area. This can teach them to question both their own assumptions and those of others in their lab, and to do what they can as budding scientists to enhance the scientific rigor and credibility of their research.


Copyright © 2019 Society for Neuroscience


Chapter 3. Psychological Science

3.1 Psychologists Use the Scientific Method to Guide Their Research

Learning Objectives

- Describe the principles of the scientific method and explain its importance in conducting and interpreting research.

- Differentiate laws from theories and explain how research hypotheses are developed and tested.

- Discuss the procedures that researchers use to ensure that their research with humans and with animals is ethical.

Psychologists aren’t the only people who seek to understand human behaviour and solve social problems. Philosophers, religious leaders, and politicians, among others, also strive to provide explanations for human behaviour. But psychologists believe that research is the best tool for understanding human beings and their relationships with others. Rather than accepting the claim of a philosopher that people do (or do not) have free will, a psychologist would collect data to empirically test whether or not people are able to actively control their own behaviour. Rather than accepting a politician’s contention that creating (or abandoning) a new centre for mental health will improve the lives of individuals in the inner city, a psychologist would empirically assess the effects of receiving mental health treatment on the quality of life of the recipients. The statements made by psychologists are empirical, which means they are based on systematic collection and analysis of data.

The Scientific Method

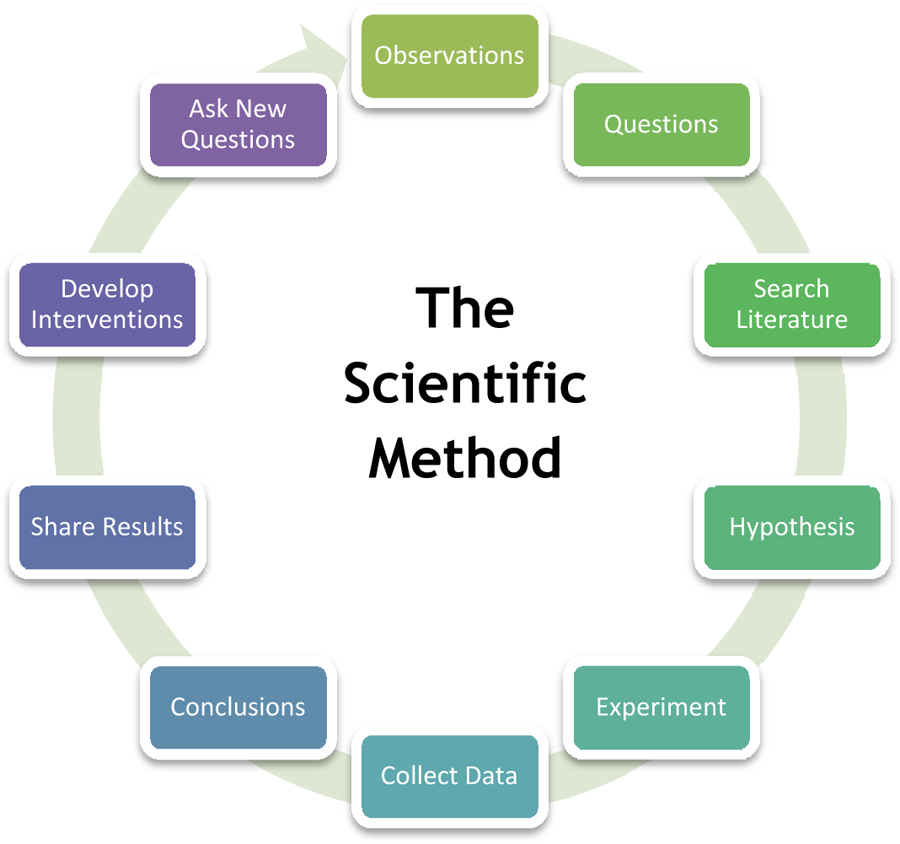

All scientists (whether they are physicists, chemists, biologists, sociologists, or psychologists) are engaged in the basic processes of collecting data and drawing conclusions about those data. The methods used by scientists have developed over many years and provide a common framework for developing, organizing, and sharing information. The scientific method is the set of assumptions, rules, and procedures scientists use to conduct research.

In addition to requiring that science be empirical, the scientific method demands that the procedures used be objective, or free from the personal bias or emotions of the scientist. The scientific method prescribes how scientists collect and analyze data, how they draw conclusions from data, and how they share data with others. These rules increase objectivity by placing data under the scrutiny of other scientists and even the public at large. Because data are reported objectively, other scientists know exactly how the scientist collected and analyzed the data. This means that they do not have to rely only on the scientist’s own interpretation of the data; they may draw their own, potentially different, conclusions.

Most new research is designed to replicate — that is, to repeat, add to, or modify — previous research findings. The scientific method therefore results in an accumulation of scientific knowledge through the reporting of research and the addition to and modification of these reported findings by other scientists.

Laws and Theories as Organizing Principles

One goal of research is to organize information into meaningful statements that can be applied in many situations. Principles that are so general as to apply to all situations in a given domain of inquiry are known as laws. There are well-known laws in the physical sciences, such as the law of gravity and the laws of thermodynamics, and there are some universally accepted laws in psychology, such as the law of effect and Weber’s law. But because laws are very general principles and their validity has already been well established, they are themselves rarely directly subjected to scientific test.

The next step down from laws in the hierarchy of organizing principles is theory. A theory is an integrated set of principles that explains and predicts many, but not all, observed relationships within a given domain of inquiry. One example of an important theory in psychology is the stage theory of cognitive development proposed by the Swiss psychologist Jean Piaget. The theory states that children pass through a series of cognitive stages as they grow, each of which must be mastered in succession before movement to the next cognitive stage can occur. This is an extremely useful theory in human development because it can be applied to many different content areas and can be tested in many different ways.

Good theories have four important characteristics. First, good theories are general, meaning they summarize many different outcomes. Second, they are parsimonious, meaning they provide the simplest possible account of those outcomes. The stage theory of cognitive development meets both of these requirements. It can account for developmental changes in behaviour across a wide variety of domains, and yet it does so parsimoniously, by hypothesizing a simple set of cognitive stages. Third, good theories provide ideas for future research. The stage theory of cognitive development has been applied not only to learning about cognitive skills, but also to the study of children’s moral (Kohlberg, 1966) and gender (Ruble & Martin, 1998) development.

Finally, good theories are falsifiable (Popper, 1959), which means the variables of interest can be adequately measured and the relationships between the variables that are predicted by the theory can be shown through research to be incorrect. The stage theory of cognitive development is falsifiable because the stages of cognitive reasoning can be measured and because if research discovers, for instance, that children learn new tasks before they have reached the cognitive stage hypothesized to be required for that task, then the theory will be shown to be incorrect.

No single theory is able to account for all behaviour in all cases. Rather, theories are each limited in that they make accurate predictions in some situations or for some people but not in other situations or for other people. As a result, there is a constant exchange between theory and data: existing theories are modified on the basis of collected data, and the new modified theories then make new predictions that are tested by new data, and so forth. When a better theory is found, it will replace the old one. This is part of the accumulation of scientific knowledge.

The Research Hypothesis

Theories are usually framed too broadly to be tested in a single experiment. Therefore, scientists use a more precise statement of the presumed relationship between specific parts of a theory, a research hypothesis, as the basis for their research. A research hypothesis is a specific and falsifiable prediction about the relationship between or among two or more variables, where a variable is any attribute that can assume different values among different people or across different times or places. The research hypothesis states the existence of a relationship between the variables of interest and the specific direction of that relationship. For instance, the research hypothesis “Using marijuana will reduce learning” predicts that there is a relationship between one variable, “using marijuana,” and another variable called “learning.” Similarly, in the research hypothesis “Participating in psychotherapy will reduce anxiety,” the variables that are expected to be related are “participating in psychotherapy” and “level of anxiety.”

When stated in an abstract manner, the ideas that form the basis of a research hypothesis are known as conceptual variables. Sometimes the conceptual variables are rather simple, such as age, gender, or weight. In other cases the conceptual variables represent more complex ideas, such as anxiety, cognitive development, learning, self-esteem, or sexism.

The first step in testing a research hypothesis involves turning the conceptual variables into measured variables, which are variables consisting of numbers that represent the conceptual variables. For instance, the conceptual variable “participating in psychotherapy” could be represented as the measured variable “number of psychotherapy hours the patient has accrued,” and the conceptual variable “using marijuana” could be assessed by having the research participants rate, on a scale from 1 to 10, how often they use marijuana or by administering a blood test that measures the presence of the chemicals in marijuana.

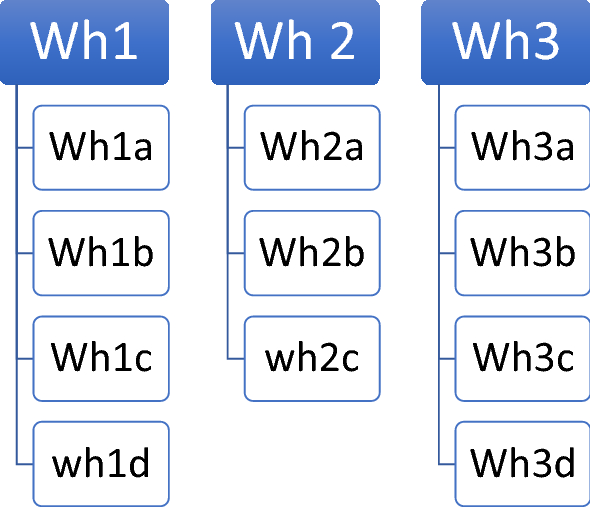

Psychologists use the term operational definition to refer to a precise statement of how a conceptual variable is turned into a measured variable. The relationship between conceptual and measured variables in a research hypothesis is diagrammed in Figure 3.1. The conceptual variables are represented in circles at the top of the figure (psychotherapy and anxiety), and the measured variables are represented in squares at the bottom (number of hours the patient has spent in psychotherapy and anxiety concerns as reported by the patient). The two vertical arrows, which lead from the conceptual variables to the measured variables, represent the operational definitions of the two variables. The arrows indicate the expectation that changes in the conceptual variables (psychotherapy and anxiety) will cause changes in the corresponding measured variables (number of hours in psychotherapy and reported anxiety concerns). The measured variables are then used to draw inferences about the conceptual variables.

Table 3.1 lists some potential operational definitions of conceptual variables that have been used in psychological research. As you read through this list, note that in contrast to the abstract conceptual variables, the measured variables are very specific. This specificity is important for two reasons. First, more specific definitions mean that there is less danger that the collected data will be misunderstood by others. Second, specific definitions will enable future researchers to replicate the research.

| Conceptual variable | Operational definitions |
|---|---|
| Aggression | |
| Interpersonal attraction | |
| Employee satisfaction | … to 9 (…) |
| Decision-making skills | |
| Depression | |

Conducting Ethical Research

One of the questions that all scientists must address concerns the ethics of their research. Physicists are concerned about the potentially harmful outcomes of their experiments with nuclear materials. Biologists worry about the potential outcomes of creating genetically engineered human babies. Medical researchers agonize over the ethics of withholding potentially beneficial drugs from control groups in clinical trials. Likewise, psychologists are continually considering the ethics of their research.

Research in psychology may cause some stress, harm, or inconvenience for the people who participate in that research. For instance, researchers may require introductory psychology students to participate in research projects and then deceive these students, at least temporarily, about the nature of the research. Psychologists may induce stress, anxiety, or negative moods in their participants, expose them to weak electrical shocks, or convince them to behave in ways that violate their moral standards. And researchers may sometimes use animals in their research, potentially harming them in the process.

Decisions about whether research is ethical are made using established ethical codes developed by scientific organizations, such as the Canadian Psychological Association, and by federal governments. In Canada, federal agencies such as Health Canada and the Canadian Institutes of Health Research provide the guidelines for ethical standards in research. Some research, such as the research conducted by the Nazis on prisoners during World War II, is perceived as immoral by almost everyone. Other procedures, such as the use of animals in research testing the effectiveness of drugs, are more controversial.

Scientific research has provided information that has improved the lives of many people. Therefore, it is unreasonable to argue that because scientific research has costs, no research should be conducted. This argument fails to consider the fact that there are significant costs to not doing research and that these costs may be greater than the potential costs of conducting the research (Rosenthal, 1994). In each case, before beginning to conduct the research, scientists have attempted to determine the potential risks and benefits of the research and have come to the conclusion that the potential benefits of conducting the research outweigh the potential costs to the research participants.

Characteristics of an Ethical Research Project Using Human Participants

- Trust and positive rapport are created between the researcher and the participant.

- The rights of both the experimenter and participant are considered, and the relationship between them is mutually beneficial.

- The experimenter treats the participant with concern and respect and attempts to make the research experience a pleasant and informative one.

- Before the research begins, the participant is given all information relevant to his or her decision to participate, including any possibilities of physical danger or psychological stress.

- The participant is given a chance to have questions about the procedure answered, thus guaranteeing his or her free choice about participating.

- After the experiment is over, any deception that has been used is made public, and the necessity for it is explained.

- The experimenter carefully debriefs the participant, explaining the underlying research hypothesis and the purpose of the experimental procedure in detail and answering any questions.

- The experimenter provides information about how he or she can be contacted and offers to provide information about the results of the research if the participant is interested in receiving it. (Stangor, 2011)

This list presents some of the most important factors that psychologists take into consideration when designing their research. The most direct ethical concern of the scientist is to prevent harm to the research participants. One example is the well-known research of Stanley Milgram (1974) investigating obedience to authority. In these studies, participants were induced by an experimenter to administer electric shocks to another person so that Milgram could study the extent to which they would obey the demands of an authority figure. Most participants evidenced high levels of stress resulting from the psychological conflict they experienced between engaging in aggressive and dangerous behaviour and following the instructions of the experimenter. Studies such as those by Milgram are no longer conducted because the scientific community is now much more sensitized to the potential of such procedures to create emotional discomfort or harm.

Another goal of ethical research is to guarantee that participants have free choice regarding whether they wish to participate in research. Students in psychology classes may be allowed, or even required, to participate in research, but they are also always given an option to choose a different study to be in, or to perform other activities instead. And once an experiment begins, the research participant is always free to leave the experiment if he or she wishes to. Concerns with free choice also occur in institutional settings, such as in schools, hospitals, corporations, and prisons, when individuals are required by the institutions to take certain tests, or when employees are told or asked to participate in research.

Researchers must also protect the privacy of the research participants. In some cases data can be kept anonymous by not having the respondents put any identifying information on their questionnaires. In other cases the data cannot be anonymous because the researcher needs to keep track of which respondent contributed the data. In this case, one technique is to have each participant use a unique code number to identify his or her data, such as the last four digits of the student ID number. In this way the researcher can keep track of which person completed which questionnaire, but no one will be able to connect the data with the individual who contributed them.

Perhaps the most widespread ethical concern to the participants in behavioural research is the extent to which researchers employ deception. Deception occurs whenever research participants are not completely and fully informed about the nature of the research project before participating in it. Deception may occur in an active way, such as when the researcher tells the participants that he or she is studying learning when in fact the experiment really concerns obedience to authority. In other cases the deception is more passive, such as when participants are not told about the hypothesis being studied or the potential use of the data being collected.

Some researchers have argued that no deception should ever be used in any research (Baumrind, 1985). They argue that participants should always be told the complete truth about the nature of the research they are in, and that when participants are deceived there will be negative consequences, such as the possibility that participants may arrive at other studies already expecting to be deceived. Other psychologists defend the use of deception on the grounds that it is needed to get participants to act naturally and to enable the study of psychological phenomena that might not otherwise get investigated. They argue that it would be impossible to study topics such as altruism, aggression, obedience, and stereotyping without using deception because if participants were informed ahead of time what the study involved, this knowledge would certainly change their behaviour. The codes of ethics of the Canadian Psychological Association and the Tri-Council Policy Statement of Canada’s three federal research agencies (the Canadian Institutes of Health Research [CIHR], the Natural Sciences and Engineering Research Council of Canada [NSERC], and the Social Sciences and Humanities Research Council of Canada [SSHRC], or “the Agencies”) allow researchers to use deception, but these codes also require them to explicitly consider how their research might be conducted without the use of deception.

Ensuring that Research Is Ethical

Making decisions about the ethics of research involves weighing the costs and benefits of conducting versus not conducting a given research project. The costs involve potential harm to the research participants and to the field, whereas the benefits include the potential for advancing knowledge about human behaviour and offering various advantages, some educational, to the individual participants. Most generally, the ethics of a given research project are determined through a cost-benefit analysis, in which the costs are compared with the benefits. If the potential costs of the research appear to outweigh any potential benefits that might come from it, then the research should not proceed.

Arriving at a cost-benefit ratio is not simple. For one thing, there is no way to know ahead of time what the effects of a given procedure will be on every person or animal who participates or what benefit to society the research is likely to produce. In addition, what is ethical is defined by the current state of thinking within society, and thus perceived costs and benefits change over time. In Canada, the Tri-Council regulations require that all universities receiving funds from the Agencies set up a Research Ethics Board (REB) to determine whether proposed research meets these regulations. The REB is a committee of at least five members whose goal is to determine the cost-benefit ratio of research conducted within an institution. The REB must approve the procedures of all the research conducted at the institution before the research can begin. The board may suggest modifications to the procedures, or (in rare cases) it may inform the scientist that the research violates the Tri-Council Policy Statement and thus cannot be conducted at all.

One important tool for ensuring that research is ethical is the use of informed consent. A sample informed consent form is shown in Figure 3.2. Informed consent, conducted before a participant begins a research session, is designed to explain the research procedures and inform the participant of his or her rights during the investigation. The informed consent explains as much as possible about the true nature of the study, particularly everything that might be expected to influence willingness to participate, but in some cases it may withhold information whose disclosure would prevent the study from working.

Informed consent should address the following issues:

- A very general statement about the purpose of the study

- A brief description of what the participants will be asked to do

- A brief description of the risks, if any, and what the researcher will do to restore the participant

- A statement informing participants that they may refuse to participate or withdraw at any time without being penalized

- A statement regarding how the participant’s confidentiality will be protected

- Encouragement to ask questions about participation

- Instructions regarding whom to contact if there are concerns

- Information regarding where the subjects may be informed about the study’s findings

Because participating in research has the potential for producing long-term changes in the research participants, all participants should be fully debriefed immediately after their participation. The debriefing is a procedure designed to fully explain the purposes and procedures of the research and remove any harmful after-effects of participation.

Research with Animals

Because animals make up an important part of the natural world, and because some research cannot be conducted using humans, animals are also participants in psychological research (Figure 3.3). Most psychological research using animals is now conducted with rats, mice, and birds, and the use of other animals in research is declining (Thomas & Blackman, 1992). As with ethical decisions involving human participants, a set of basic principles has been developed that helps researchers make informed decisions about such research; a summary is shown below.

Canadian Psychological Association Guidelines on Humane Care and Use of Animals in Research

The following are some of the most important ethical principles from the Canadian Psychological Association’s (CPA) guidelines on research with animals. Under these principles, psychologists should:

- II.45 Not use animals in their research unless there is a reasonable expectation that the research will increase understanding of the structures and processes underlying behaviour, or increase understanding of the particular animal species used in the study, or result eventually in benefits to the health and welfare of humans or other animals.

- II.46 Use a procedure subjecting animals to pain, stress, or privation only if an alternative procedure is unavailable and the goal is justified by its prospective scientific, educational, or applied value.

- II.47 Make every effort to minimize the discomfort, illness, and pain of animals. This would include performing surgical procedures only under appropriate anaesthesia, using techniques to avoid infection and minimize pain during and after surgery and, if disposing of experimental animals is carried out at the termination of the study, doing so in a humane way. (Canadian Code of Ethics for Psychologists)

- II.48 Use animals in classroom demonstrations only if the instructional objectives cannot be achieved through the use of video-tapes, films, or other methods, and if the type of demonstration is warranted by the anticipated instructional gain (Canadian Psychological Association, 2000).

Because the use of animals in research involves personal values, people naturally disagree about this practice. Although many people accept the value of such research (Plous, 1996), a minority of people, including animal-rights activists, believe that it is ethically wrong to conduct research on animals. This argument is based on the assumption that because animals are living creatures just as humans are, no harm should ever be done to them.

Most scientists, however, reject this view. They argue that such beliefs ignore the potential benefits that have come, and continue to come, from research with animals. For instance, drugs that can reduce the incidence of cancer or AIDS may first be tested on animals, and surgery that can save human lives may first be practised on animals. Research on animals has also led to a better understanding of the physiological causes of depression, phobias, and stress, among other illnesses. In contrast to animal-rights activists, then, scientists believe that because there are many benefits that accrue from animal research, such research can and should continue as long as the humane treatment of the animals used in the research is guaranteed.

Key Takeaways

- Psychologists use the scientific method to generate, accumulate, and report scientific knowledge.

- Basic research, which answers questions about behaviour, and applied research, which finds solutions to everyday problems, inform each other and work together to advance science.

- Research reports describing scientific studies are published in scientific journals so that other scientists and laypersons may review the empirical findings.

- Organizing principles, including laws, theories, and research hypotheses, give structure and uniformity to scientific methods.

- Concerns for conducting ethical research are paramount. Researchers ensure that participants are given free choice to participate and that their privacy is protected. Informed consent and debriefing help provide humane treatment of participants.

- A cost-benefit analysis is used to determine what research should and should not be allowed to proceed.

Exercises and Critical Thinking

- Give an example from personal experience of how you or someone you know has benefited from the results of scientific research.

- Find and discuss a research project that in your opinion has ethical concerns. Explain why you find these concerns to be troubling.

- Indicate your personal feelings about the use of animals in research. When should animals be used in research, and when should they not be? What principles did you use to reach these conclusions?

Image Attributions

Figure 3.3: “Wistar rat” by Janet Stephens (http://en.wikipedia.org/wiki/File:Wistar_rat.jpg) is in the public domain.

References

Baumrind, D. (1985). Research using intentional deception: Ethical issues revisited. American Psychologist, 40, 165–174.

Canadian Psychological Association. (2000). Canadian code of ethics for psychologists (3rd ed.). Retrieved July 2014 from http://www.cpa.ca/cpasite/userfiles/Documents/Practice_Page/Ethics_Code_Psych.pdf

Kohlberg, L. (1966). A cognitive-developmental analysis of children’s sex-role concepts and attitudes. In E. E. Maccoby (Ed.), The development of sex differences. Stanford, CA: Stanford University Press.

Milgram, S. (1974). Obedience to authority: An experimental view. New York, NY: Harper and Row.

Plous, S. (1996). Attitudes toward the use of animals in psychological research and education. Psychological Science, 7, 352–358.

Popper, K. R. (1959). The logic of scientific discovery. New York, NY: Basic Books.

Rosenthal, R. (1994). Science and ethics in conducting, analyzing, and reporting psychological research. Psychological Science, 5, 127–134.

Ruble, D., & Martin, C. (1998). Gender development. In W. Damon (Ed.), Handbook of child psychology (5th ed., pp. 933–1016). New York, NY: John Wiley & Sons.

Stangor, C. (2011). Research methods for the behavioral sciences (4th ed.). Mountain View, CA: Cengage.

Thomas, G., & Blackman, D. (1992). The future of animal studies in psychology. American Psychologist, 47, 1678.

Long Descriptions

Figure 3.2 long description: Sample research consent form.

My name is [insert your name], and this research project is part of the requirement for a [insert your degree program] at [blank] University. My credentials with [blank] university can be established by telephoning [insert name and number of supervisor].

This document constitutes an agreement to participate in my research project, the objective of which is to [insert research objectives and the sponsoring organization here].

The research will consist of [insert your methodology] and is expected to last [insert amount of time]. The anticipated questions will refer to [insert summary of foreseen questions]. In addition to submitting my final report to [blank] University in partial fulfillment of the requirements for a [insert your degree program], I will also share my research findings with [insert your sponsoring organization]. [Disclose all the purposes for which the research data will be used, e.g., journal articles, books, etc.]

Information will be recorded in handwritten format (or taped, videotaped, etc.) and, where appropriate, summarized in anonymous format in the body of the final report. At no time will any specific comments be attributed to any individual unless specific agreement has been obtained beforehand. All documentation will be kept strictly confidential.

A copy of the final report will be published. A copy will be housed at [blank] university, available online through [blank] and will be publicly accessible. Access and distribution will be unrestricted.

[Disclose any and all conflicts of interest and how those will be managed.]

You are not compelled to participate in this research project. If you do choose to participate, you are free to withdraw at any time without prejudice. Similarly, if you choose not to participate in this research project, this information will also be maintained in confidence.

By signing this letter, you give free and informed consent to participate in this project.

Name (please print): Signed: Date:

Introduction to Psychology - 1st Canadian Edition Copyright © 2014 by Jennifer Walinga and Charles Stangor is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

What is Research?: The Truth about Research

Research isn't what you think it is. It's not just dusty books written by men long since gone from this world. No, research is something we all do almost every day of our lives. You do it when you ask your friend if the movie they saw was any good. You do it when you go on Yelp to find a place to eat. You even do it when you read the comments section of an article on your favorite site. The key is knowing how to do it well. Doing it well doesn't mean putting the same amount of research into every problem; it means knowing the level of research needed, as well as how to reach that level.

Different Topics (and Different Needs) Require Different Expertise

For example, Wikipedia is fine if I want to know what happened on my favorite TV show last season, but I can't cite Wikipedia in my term paper. I also wouldn't go to a lawyer to see if I need to have my appendix taken out. So when doing research, it is always important to take the author's experience and credentials into consideration. Just because I trust Rachael Ray to provide good recipes for game day doesn't mean I should take financial advice from her. So always consider the source.

All Information is Created for a Reason

The person creating the information you're reading, hearing, watching, etc., is doing it for a reason. Maybe it's to inform or educate you; maybe it's to entertain or persuade you; maybe it's to get back at his roommate for not washing the dishes. The point is that every piece of information you read was created with a specific purpose.

Knowledge is Power

All information has value. The amount of that value depends on the person who created that information and on the person who is receiving it. For instance, if your 8-year-old nephew tells you that a meteorite is about to hit New Orleans, you are not going to value that information the same way you would if Neil deGrasse Tyson said it. Likewise, you probably wouldn't value details of the latest kids' movie as much as your nephew would.

Research is Answering a Question

Any time you do research, you are simply trying to answer a question. In the process of answering that question, you may find that even more questions pop up. For instance, you may wonder why the sky is blue. You learn that it has to do with the way sunlight is scattered by the air. You may wonder how light gets scattered, which could lead you to discover that light behaves like both a wave and a particle. And it goes on and on.

Articles and Books are Just Really Public Conversations

Most scholarly articles and books started as questions that the author had about a topic. From there, the author either agreed or disagreed with what they read. Tons of research later, the author published their argument. Think of it as a really public, really slow, really long conversation between scholars on a topic.

Searching for Information is an Exploration You Should Plan for

It's easy to get lost in the din of information. Have a plan. It's okay to stray from the plan from time to time, but having a plan makes it easier to find your way back to what you're trying to find out. As you go along, you may decide that you'd prefer to go in a slightly (or totally) different direction with your research. That's fine. That's normal. Just don't go off in a direction because you got distracted. Think Wikipedia: you start out looking up the plot of Game of Thrones and end up reading about cheetahs in the Serengeti.

- Last Updated: Jul 20, 2017 9:23 PM

- URL: https://libguides.uno.edu/whatisresearch

Science News

Is redoing scientific research the best way to find truth?

During replication attempts, too many studies fail to pass muster

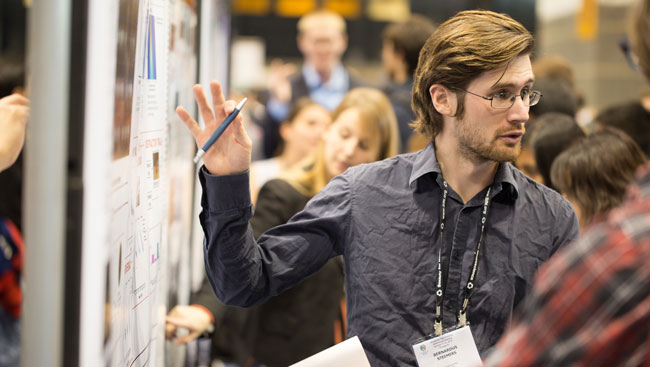

REPEAT PERFORMANCE By some accounts, science is facing a crisis of confidence because the results of many studies aren’t confirmed when researchers attempt to replicate the experiments.

Harry Campbell

By Tina Hesman Saey

January 13, 2015 at 2:23 pm

R. Allan Mufson remembers the alarming letters from physicians. They were testing a drug intended to help cancer patients by boosting levels of oxygen-carrying hemoglobin in their blood.

In animal studies and early clinical trials, the drug known as Epo (for erythropoietin) appeared to counteract anemia caused by radiation and chemotherapy. It had the potential to spare patients from the need for blood transfusions. Researchers also had evidence that Epo might increase radiation’s tumor-killing power.

But when doctors started giving Epo or related drugs, called erythropoiesis-stimulating agents, to large numbers of cancer patients in clinical trials, it looked like deaths increased. Physicians were concerned, and some stopped their studies early.

At the same time, laboratory researchers were collecting evidence that Epo might be feeding rather than fighting tumors. When other scientists, particularly researchers who worked for the company that made the drug, tried to replicate the original findings, they couldn’t.

Next issue, February 7: “Big Data, Big Challenges”

This article is part one of a two-part series. In the Feb. 7 issue, writer Tina Hesman Saey will explore how a move toward massive datasets creates opportunities for chaos and errors to multiply.

Scientists should be able to say whether Epo is good or bad for cancer patients, but seven years later, they still can’t. The Epo debate highlights deeper trouble in the life sciences and social sciences, two fields where it appears particularly hard to replicate research findings. Replicability is a cornerstone of science, but too many studies are failing the test.

“There’s a community sense that this is a growing problem,” says Lawrence Tabak, deputy director of the National Institutes of Health. Early last year, NIH joined the chorus of researchers drawing attention to the problem, and the agency issued a plan and a call to action.

Unprecedented funding challenges have put scientists under extreme pressure to publish quickly and often. Those pressures may lead researchers to publish results before proper vetting or to keep hush about experiments that didn’t pan out. At the same time, journals have pared down the section in a published paper devoted to describing a study’s methods: “In some journals it’s really a methods tweet,” Tabak says. Scientists are less certain than ever that what they read in journals is true.

Many people say one solution to the problem is to have independent labs replicate key studies to validate their findings. The hope is to identify where and why things go wrong. Armed with that knowledge, the replicators think they can improve the reliability of published reports.

Others call that quest futile, saying it’s difficult — if not impossible — to redo a study exactly, especially when working with highly variable subjects, such as people, animals or cells. Repeating published work wastes time and money, the critics say, and it does nothing to advance knowledge. They’d prefer to see questions approached with a variety of different methods. It’s the general patterns and basic principles — the reproducibility of a finding, not the precise replication of a specific experiment — that really matter.

It seems that everyone has an opinion about the underlying causes leading to irreproducibility, and many have offered solutions. But no one really knows entirely what is wrong or if any of the proffered fixes will work.

Much of the controversy has centered on the types of statistical analyses used in most scientific studies, and hardly anyone disputes that the math is a major tripping point. An influential 2005 paper looking at the statistical weakness of scientific studies generated much of the self-reflection taking place within the medical community over the last decade. While those issues still exist, especially as more complex analyses are applied to big data studies, there remain deeper problems that may be harder to fix.

Taking sides

Epo researchers weren’t the first to find discrepancies in their results, but their experience set the stage for much of the current controversy.

Wrong answers

The Bayer pharmaceutical company tried to repeat studies in three research fields, mostly cancer studies. Almost two-thirds of the redos produced results inconsistent with the original findings.

Source: F. Prinz et al., Nature Reviews Drug Discovery, 2011

Mufson, head of the National Cancer Institute’s Cancer Immunology and Hematology Etiology Branch, organized a two-day workshop in 2007 where academic, government and pharmaceutical company scientists, clinicians and patient advocates discussed the Epo findings.

A divide quickly emerged between pharmaceutical researchers and scientists from academic labs, says Charles Bennett, an oncologist at the University of South Carolina.

Bennett was part of a team that had reported in 2005 that erythropoietin reduced the need for blood transfusions and possibly improved survival among cancer patients. But he came to the meeting armed with very different data. He and colleagues found that erythropoietin and darbepoetin used to treat anemia in cancer patients raised the risk of blood clots by 57 percent and the risk of dying by about 10 percent. Others found that people with breast or head and neck cancers died sooner than other cancer patients if they took Epo.

Those who argued that Epo was harmful to patients cited cellular mechanisms: tumor cells make more Epo receptors than other cells. More receptors, the researchers feared, meant the drug was stimulating growth of the cancer cells, a finding that might explain why patients were dying.

Company scientists from Amgen, which makes Epo drugs, charged that they had tried and could not replicate the results published by the academic researchers. After listening to the researchers hash through data for two days, Bennett could see why there was conflict. The company and academic scientists couldn’t even agree on what constituted growth of tumor cells, or on the correct tools for detecting Epo receptors on tumor cells, he says. That disconnect meant neither side would be able to confirm the other’s findings, nor could they completely discount the results. The meeting ended with a list of concerns and direction for future studies, but little consensus.

“I went in thinking it was black and white,” Bennett says. “Now, I’m very much convinced it’s a gray answer and everybody’s right.”

From there, pressure continued to build. In 2012, Amgen caused shock waves by reporting that it could independently confirm only six of 53 “landmark” papers on preclinical cancer studies. Replicating results is one of the first steps companies take before investing in further development of a drug. Amgen will not disclose how it conducted the replication experiments or even which studies it tried to replicate. Bennett suspects the controversial Epo experiments were among the chosen studies, perhaps tinting the results.

We’re always in a gray area between perfect truth and complete falsehood.

— Giovanni Parmigiani

Amgen’s revelation came on the heels of a similar report from the pharmaceutical company Bayer. In 2011, three Bayer researchers reported in Nature Reviews Drug Discovery that company scientists could fully replicate only about 20 to 25 percent of published preclinical cancer, cardiovascular and women’s health studies. Like Amgen, Bayer did not say which studies it attempted to replicate. But those inconsistencies could mean the company would have to drop projects or expend more resources to validate the original reports.

Scientists were already uneasy because of a well-known 2005 essay by epidemiologist John Ioannidis, now at Stanford University. He had used statistical arguments to contend that most research findings are false. Faulty statistics often indicate a finding is true when it is not. Those falsely positive results usually don’t replicate.
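The core of Ioannidis’s statistical argument can be made concrete with the positive predictive value (PPV) of a statistically significant result, meaning the chance that a “significant” finding is actually true. The sketch below is a simplified version that leaves out the bias term of his full model. It assumes R is the pre-study odds that a tested relationship is real, `power` is 1 − β (the chance a study detects a real effect), and `alpha` is the significance threshold. Under those assumptions, PPV = power·R / (power·R + α). The function name and the scenario numbers are illustrative choices, not values taken from the article.

```python
"""Simplified positive predictive value (PPV) of a significant finding.

Illustrative sketch only: it omits the bias term in Ioannidis's full model.
"""


def positive_predictive_value(prior_odds: float, power: float, alpha: float = 0.05) -> float:
    """Return the probability that a statistically significant result is true.

    prior_odds: R, the ratio of true to null relationships among those tested.
    power: 1 - beta, the chance a study detects a relationship that is real.
    alpha: the chance a study flags a relationship that is not real.
    """
    if prior_odds < 0 or not 0 <= power <= 1 or not 0 < alpha <= 1:
        raise ValueError("need prior_odds >= 0, 0 <= power <= 1, 0 < alpha <= 1")
    # Both counts below are expected values per null relationship tested.
    true_positives = power * prior_odds
    false_positives = alpha
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # Hypothetical scenarios: (pre-study odds R, statistical power)
    for r, pw in [(1.0, 0.8), (0.1, 0.8), (0.1, 0.2)]:
        ppv = positive_predictive_value(r, pw)
        print(f"R={r:<4} power={pw:<4} PPV={ppv:.2f}")
```

With even odds and 80 percent power, about 94 percent of significant results would be true. When only one in ten tested relationships is real and studies have 20 percent power, that figure drops to about 29 percent, so most “positive” findings would be false and would be expected to fail replication.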

Academic scientists have had no easier time than drug companies in replicating others’ results. Researchers at MD Anderson Cancer Center in Houston surveyed their colleagues about whether they had ever had difficulty replicating findings from published papers. More than half, 54.6 percent, of the 434 respondents said that they had, the survey team reported in PLOS ONE in 2013. Only a third of those people were able to correct the discrepancy or explain why they got different answers.

“Those kinds of studies are sort of shocking and worrying,” says Elizabeth Iorns, a biologist at the University of Miami in Florida and chief executive officer for Science Exchange, a network of labs that attempt to independently validate research results.

Over the long term, science is a self-correcting process and will sort itself out, Tabak and NIH director Francis Collins wrote last January in Nature. “In the shorter term, however, the checks and balances that once ensured scientific fidelity have been hobbled. This has compromised the ability of today’s researchers to reproduce others’ findings,” Tabak and Collins wrote. Myriad reasons for the failure to reproduce have been given, many involving the culture of science. Fixing the problem is going to require a more sophisticated understanding of what’s actually wrong, Ioannidis and others argue.

Two schools of thought

Researchers don’t even agree on whether it is necessary to duplicate studies exactly, or to validate the underlying principles, says Giovanni Parmigiani, a statistician at the Dana-Farber Cancer Institute in Boston. Scientists have two schools of thought about verifying someone else’s results: replication and reproducibility. The replication school teaches that researchers should retrace all of the steps in a study from data generation through the final analysis to see if the same answer emerges. If a study is true and right, it should.

Proponents of the other school, reproducibility, contend that complete duplication only demonstrates whether a phenomenon occurs under the exact conditions of the experiment. Obtaining consistent results across studies using different methods or groups of people or animals is a more reliable gauge of biological meaningfulness, the reproducibility school teaches. To add to the confusion, some scientists reverse the labels.

Timothy Wilson, a social psychologist at the University of Virginia in Charlottesville, is in the reproducibility camp. He would prefer that studies extend the original findings, perhaps modifying variables to learn more about the underlying principles. “Let’s try to discover something,” he says. “This is the way science marches forward. It’s slow and messy, but it works.”

But Iorns and Brian Nosek, a psychologist and one of Wilson’s colleagues at the University of Virginia, are among those who think exact duplication can move research in the right direction.

In 2013, Nosek and his former student Jeffrey Spies cofounded the Center for Open Science, with the lofty goal “to increase openness, integrity and reproducibility of scientific research.” Their approach was twofold: provide infrastructure to allow scientists to more easily and openly share data and conduct research projects to repeat studies in various disciplines in science.

Soon, Nosek and Iorns’ Science Exchange teamed up to replicate 50 of the most important (defined as highly cited) cancer studies published between 2010 and 2012. On December 10, 2014, the Reproducibility Project: Cancer Biology kicked off when three groups announced in the journal eLife their intention to replicate key experiments from previous studies and shared their plans for how to do it.

Iorns, Nosek and their collaborators hope the effort will give scientists a better idea of the reliability of these studies. If the replication efforts fail, the researchers want to know why. It’s possible that the underlying biology is sound, but that some technical glitch prevents successful replication of the results. Or the researchers may have been barking up the wrong tree. Most likely the real answer is somewhere in the middle.

Neuroscience researchers realized the value of duplicating studies with therapeutic potential early on. In 2003, the National Institute of Neurological Disorders and Stroke contracted labs to redo some important spinal cord injury studies that showed promise for helping patients. Neuroscientist Oswald Steward of the University of California, Irvine School of Medicine heads one of the contract labs.

Survey says …

Academic researchers have trouble duplicating other researchers’ published results, a survey from MD Anderson Cancer Center suggests. Researchers, especially junior faculty members and trainees, often don’t resolve discrepancies and tend not to publish conflicting reports.

Source: A. Mobley et al., PLOS ONE, 2013

Of the 12 studies Steward and colleagues tried to copy, they could fully replicate only one, and only after the researchers determined that the ability of a drug to limit hemorrhaging and nerve degeneration near an injury depended on the exact mechanism that produced the injury. Half the studies could not be replicated at all, and the rest were partially replicated or produced mixed or less robust results than the originals, according to a 2012 report in Experimental Neurology.

Notably, the researchers cited 11 reasons that might account for why previous studies failed to replicate; only one was that the original study was wrong. Exact duplications of original studies are impossible, Steward and his colleagues contend.

Acts of aggression

Before looking at cancer studies, Nosek investigated his own field with a large collaborative research project. In a special issue of Social Psychology published last April, he and other researchers reported results of 15 replication studies testing 26 psychological phenomena. Of the 26 original observations tested, they could replicate only 10. That doesn’t mean the rest failed entirely; several of the replication studies got results that were similar to the original or mixed, but they couldn’t qualify as a success because they didn’t pass statistical tests.

Simone Schnall conducted one of the studies that other researchers claimed they could not replicate. Schnall, a social psychologist at the University of Cambridge, studies how emotions affect judgment.

She has found that making people sit at a sticky, filthy desk or showing them revolting movie scenes not only disgusts them, it makes their moral judgment harsher. In 2008, Schnall and colleagues examined disgust’s flip side, cleanliness, and found that hand washing made people’s moral judgments less harsh.

M. Brent Donnellan, one of the researchers who attempted to replicate Schnall’s 2008 findings, blogged before the replication study was published that his group made two unsuccessful attempts to duplicate Schnall’s original findings. “We gave it our best shot and pretty much encountered an epic fail as my 10-year-old would say,” he wrote. When Schnall and others complained that the comments were unprofessional and pointed out several possible reasons the study failed to replicate, Donnellan, a psychologist at Texas A&M University in College Station, apologized for the remark, calling it “ill-advised.”

Schnall’s criticism set off a flurry of negative remarks from some researchers, while others leapt to her defense. The most vociferous of her champions have called replicators “bullies,” “second-stringers” and worse. The experience, Schnall said, has damaged her reputation and affected her ability to get funding; when decision makers hear about the failed replication they suspect she did something wrong.

“Somehow failure to replicate is viewed as more informative than the original studies,” says Wilson. In Schnall’s case, “For all we know it was an epic fail on the replicators’ part.”

The scientific community needs to realize that it is difficult to replicate a study, says Ioannidis. “People should not be shamed,” he says. Every geneticist, himself included, has published studies purporting to find genetic causes of disease that turned out to be wrong, he says.

Iorns is not out to stigmatize anyone, she says. “We don’t want people to feel like we’re policing them or coming after them.” She aims to improve the quality of science and scientists. Researchers should be rewarded for producing consistently reproducible results, she says. “Ultimately it should be the major criteria by which scientists are assessed. What could be more important?”

Variable soup

Much of the discussion of replicability has centered on social and cultural factors that contribute to publication of irreplicable results, but no one has really been discussing the mechanisms that may lead replication efforts to fail, says Benjamin Djulbegovic, a clinical researcher at the University of South Florida in Tampa. He and long-time collaborator mathematician Iztok Hozo of Indiana University Northwest in Gary have been mulling over the question for years, Djulbegovic says.

They were inspired by the “butterfly effect,” an illustration of chaos theory that one small action can have major repercussions later. The classic example holds that a butterfly flapping its wings in Brazil can brew a tornado in Texas. Djulbegovic hit on the idea that there’s chaos at work in most biology and psychology studies as well.
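The butterfly effect can be illustrated with a standard textbook example of chaos, the logistic map x → r·x·(1 − x) with r = 4. This is a generic sketch of sensitive dependence on initial conditions, not Djulbegovic and Hozo’s model, which, as described, works with combinations of categorical starting conditions. The helper names, the starting value of 0.2, the nudge of 1e-10 and the 0.1 divergence threshold are arbitrary choices for illustration.

```python
"""Sensitive dependence on initial conditions in the logistic map (illustration only)."""
from typing import List, Optional


def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 100) -> List[float]:
    """Iterate x -> r * x * (1 - x) from x0, returning every visited value."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(r * x * (1 - x))
    return xs


def first_divergence(a: List[float], b: List[float], threshold: float = 0.1) -> Optional[int]:
    """Return the first step at which two trajectories differ by more than threshold."""
    for i, (x, y) in enumerate(zip(a, b)):
        if abs(x - y) > threshold:
            return i
    return None


if __name__ == "__main__":
    base = logistic_trajectory(0.2)
    nudged = logistic_trajectory(0.2 + 1e-10)  # a one-in-ten-billion change to the start
    step = first_divergence(base, nudged)
    print(f"Runs agree to within 0.1 until step {step}")
    print("Final values:", round(base[-1], 3), round(nudged[-1], 3))
```

At r = 4 the map roughly doubles small differences at each step on average, so the two runs track each other for a few dozen iterations and then become unrelated. Shrinking the nudge only delays the split; it never prevents it.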

Changing even a few of the original conditions of an experiment can have a butterfly effect on the outcome of replication attempts, he and Hozo reported in June in Acta Informatica Medica. The two researchers considered a simplified case in which 12 factors may affect a doctor’s decision on how to treat a patient. The researchers focused on clinical decision making, but the concept is applicable to other areas of science, Djulbegovic says. Most of the factors, such as the decision maker’s time pressure (yes or no) or cultural factors (present or not important), have two possible starting places. The doctor’s individual characteristics (age, old or young; gender, male or female) could have four combinations, and the decision maker’s personality had five different modes. All together, those dozen initial factors make up 20,480 combinations that could represent the initial conditions of the experiment.

That didn’t even include variables about where exams took place, the conditions affecting study participants (Were they tired? Had they recently fought with a loved one or indulged in alcohol?), or the handling of biological samples that might affect the diagnostic test results. Researchers have good and bad days too. “You may interview people in the morning, but nobody controls how well you slept last night or how long it took you to drive to work,” Djulbegovic says. Those invisible variables may very well influence the medical decisions made that day and therefore affect the study’s outcome.

Djulbegovic and Hozo varied some of the initial conditions in computer simulations. If initial conditions varied between experiments by 2.5 conditions or less, the results were highly consistent, or replicable. But changing 3.5 to four initial factors gave answers all over the map, indicating that very slight changes in initial conditions can render experiments irreproducible.

The study is not rigorous mathematical proof, Djulbegovic says. “We just sort of put some thoughts in writing.”

Balancing act

Failed replications may offer scientists some valuable insights, Steward says. “We need to recognize that many results won’t make it through the translational grist mill.” In other words, a therapy that shows promise under specific conditions, but can’t be replicated in other labs, is not ready to be tried in humans.

“In many cases there’s a biological story there, but it’s a fragile one,” Steward says. “If it’s fragile, it’s not translatable.”

That doesn’t negate the original findings, he says. “It changes our perspective on what it takes to get a translatable result.”

Nosek, proponent of replication that he is, admits that scientists need room for error. Requiring absolute replicability could discourage researchers from ever taking a chance, producing only the tiniest of incremental advances, he says. Science needs both crazy ideas and careful research to succeed.

“It’s totally OK that you have this outrageous attempt that fails,” Nosek says. After all, “Einstein was wrong about a lot of stuff. Newton. Thomas Edison. They had plenty of failures, too.” But science can’t survive on bold audacity alone, either. “We need a balance of innovation and verification,” Nosek says.

How best to achieve that balance is anybody’s guess. In their January 2014 paper, Collins and Tabak reviewed NIH’s plan, which includes training modules for teaching early-career scientists the proper way to do research, standards for committees reviewing research proposals and an emphasis on data sharing. But the funding agency can’t change things alone.

In November, in response to the NIH call to action, more than 30 major journals announced that they had adopted a set of guidelines for reporting results of preclinical studies. Those guidelines include calls for more rigorous statistical analyses, detailed reporting on how the studies were done, and a strong recommendation that all datasets be made available upon request.

Ioannidis offered his own suggestions in the October issue of PLOS Medicine. “We need better science on the way science is done,” he says. He helped start the Meta-Research Innovation Center at Stanford to conduct research on research and figure out how to improve it.

In the decade since he published his assertion of the wrongness of research, Ioannidis has seen change. “We’re doing better, but the challenges are even bigger than they were 10 years ago,” he says.

He is reluctant to put a number on science’s reliability as a whole, though. “If I said 55 to 65 percent [of results] are not replicable, it would not do justice to the fact that some types of scientific results are 99 percent likely to be true.”

Science is not irrevocably broken, he asserts. It just needs some improvements.

“Despite the fact that I’ve published papers with pretty depressive titles, I’m actually an optimist,” Ioannidis says. “I find no other investment of a society that is better placed than science.”

This article appeared in the January 24, 2015 issue of Science News with the headline “Repeat Performance.”

What is Research? – Purpose of Research

- By DiscoverPhDs

- September 10, 2020

The purpose of research is to enhance society by advancing knowledge through the development of scientific theories, concepts and ideas. A research purpose is met through forming hypotheses, collecting data, analysing results, forming conclusions, implementing findings into real-life applications and forming new research questions.

What is Research

Simply put, research is the process of discovering new knowledge. This knowledge can be either the development of new concepts or the advancement of existing knowledge and theories, leading to a new understanding that was not previously known.

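As a more formal definition of research, the following has been extracted from the Code of Federal Regulations (45 CFR 46.102):

“Research means a systematic investigation, including research development, testing, and evaluation, designed to develop or contribute to generalizable knowledge.”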

While research can be carried out by anyone and in any field, most research is usually done to broaden knowledge in the physical, biological, and social worlds. This can range from learning why certain materials behave the way they do, to asking why certain people are more resilient than others when faced with the same challenges.

The use of ‘systematic investigation’ in the formal definition reflects how research is normally conducted: a hypothesis is formed, appropriate research methods are designed, data is collected and analysed, and the results are summarised into one or more ‘research conclusions’. These conclusions are then shared with the rest of the scientific community, adding to existing knowledge and serving as evidence from which further questions can be investigated. It is this cyclical process that enables scientific research to make continuous progress over the years, and that is the true purpose of research.

What is the Purpose of Research

From weather forecasts to the discovery of antibiotics, researchers are constantly trying to find new ways to understand the world and how things work – with the ultimate goal of improving our lives.

The purpose of research is therefore to find out what is known, what is not and what we can develop further. In this way, scientists can develop new theories, ideas and products that shape our society and our everyday lives.

Although research can take many forms, there are three main purposes of research:

- Exploratory: Exploratory research is the first research conducted on a problem that has not yet been clearly defined. It therefore aims to gain a better understanding of the exact nature of the problem rather than to provide a conclusive answer to it. This lays the groundwork for more in-depth research later on.

- Descriptive: Descriptive research expands knowledge of a research problem or phenomenon by describing it according to its characteristics and population. Descriptive research focuses on the ‘how’ and ‘what’, but not on the ‘why’.

- Explanatory: Explanatory research, also referred to as causal research, is conducted to determine how variables interact, i.e. to identify cause-and-effect relationships. Explanatory research deals with the ‘why’ of research questions and is therefore often based on experiments.

Characteristics of Research

There are 8 core characteristics that all research projects should have. These are:

- Empirical – based on proven scientific methods derived from real-life observations and experiments.

- Logical – follows sequential procedures based on valid principles.

- Cyclic – research begins with a question and ends with a question, i.e. research should lead to a new line of questioning.

- Controlled – rigorous measures are put in place to keep all variables constant, except those under investigation.

- Hypothesis-based – the research design generates data that sufficiently meets the research objectives and can support or refute the hypothesis. This makes the research study repeatable and gives credibility to the results.

- Analytical – data is generated, recorded and analysed using proven techniques to ensure high accuracy and repeatability while minimising potential errors and anomalies.

- Objective – sound judgement is used by the researcher to ensure that the research findings are valid.

- Statistical treatment – statistical treatment is used to transform the available data into something more meaningful from which knowledge can be gained.
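As a rough illustration of statistical treatment, the sketch below reduces a set of raw measurements to a few summary statistics. The measurements, their units and the helper name `summarise` are hypothetical, chosen only for this example; a real study would choose statistics suited to its own design.

```python
import math
import statistics

# Hypothetical raw data: reaction times in milliseconds from eight
# participants. These numbers are invented for illustration only.
measurements = [512.0, 498.0, 530.0, 505.0, 521.0, 489.0, 517.0, 508.0]


def summarise(values):
    """Reduce raw observations to a few descriptive statistics."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    sem = sd / math.sqrt(n)        # standard error of the mean
    return {"n": n, "mean": mean, "sd": sd, "sem": sem}


summary = summarise(measurements)
print(f"n = {summary['n']}")
print(f"mean = {summary['mean']:.1f} ms")
print(f"sd = {summary['sd']:.1f} ms, sem = {summary['sem']:.1f} ms")
```

The standard error shrinks as the sample grows, which is one reason sample size matters when drawing conclusions from data like these.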


Types of Research

Research can be divided into two main types: basic research (also known as pure research) and applied research.

Basic Research

Basic research, also known as pure research, is an original investigation into the reasons behind a process, phenomenon or particular event. It focuses on generating knowledge around existing basic principles.

Basic research is generally considered ‘non-commercial research’ because it does not focus on solving practical problems and has no immediate benefit or application.

While basic research may not have direct applications, it usually provides new insights that can later be used in applied research.

Applied Research

Applied research investigates well-known theories and principles in order to enhance knowledge around a practical aim. Because of this, applied research focuses on solving real-life problems by deriving knowledge which has an immediate application.

Methods of Research

Research methods for data collection fall into one of two categories: inductive methods or deductive methods.

Inductive research methods focus on the analysis of an observation and are usually associated with qualitative research. Deductive research methods focus on the verification of an observation and are typically associated with quantitative research.

Qualitative Research

Qualitative research is a method that enables non-numerical data collection through open-ended methods such as interviews, case studies and focus groups.

It enables researchers to collect data on personal experiences, feelings or behaviours, as well as the reasons behind them. Because of this, qualitative research is often used in fields such as social science, psychology and philosophy, and in other areas where it is useful to know the connection between what has occurred and why it has occurred.

Quantitative Research

Quantitative research is a method that collects and analyses numerical data through statistical analysis.

It allows us to quantify variables, uncover relationships, and make generalisations across a larger population. As a result, quantitative research is often used in the natural and physical sciences, such as biology, chemistry and physics, and in fields such as engineering, computer science, finance and medical research.

What does Research Involve?

Research often follows a systematic approach known as the scientific method, which is carried out using an hourglass model.

A research project first starts with a problem statement, or rather, the research purpose for engaging in the study. This can take the form of the ‘scope of the study’ or the ‘aims and objectives’ of your research topic.

Subsequently, a literature review is carried out and a hypothesis is formed. The researcher then creates a research methodology and collects the data.

The data is then analysed using various statistical methods, and the null hypothesis is either rejected or not rejected.

In both cases, the study and its conclusion are officially written up as a report or research paper, and the researcher may also recommend lines of further questioning. The report or research paper is then shared with the wider research community, and the cycle begins all over again.

Although these steps outline the overall research process, keep in mind that research projects are highly dynamic and are therefore considered an iterative process with continued refinements and not a series of fixed stages.
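The step in which the data is analysed and the null hypothesis is tested can be made concrete with a small example. The sketch below uses a permutation test, which is only one of many possible methods and is not one prescribed by this post, on invented scores for two hypothetical groups. The function name `permutation_test`, the data and the 0.05 threshold are illustrative assumptions.

```python
import random
import statistics


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Under the null hypothesis the group labels are interchangeable, so the
    pooled data are shuffled repeatedly and we count how often a difference
    at least as large as the observed one appears by chance alone.
    """
    rng = random.Random(seed)  # seeded so the result is reproducible
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Adding one to numerator and denominator keeps the p-value above zero.
    return (extreme + 1) / (n_permutations + 1)


ALPHA = 0.05  # conventional significance level; an assumption, not a rule

# Hypothetical scores for a control group and a treatment group (invented data).
control = [12, 14, 11, 13, 15, 12, 14, 13]
treatment = [18, 17, 19, 16, 20, 18, 17, 19]

p_value = permutation_test(control, treatment)
if p_value < ALPHA:
    decision = "reject the null hypothesis"
else:
    decision = "fail to reject the null hypothesis"
print(f"p = {p_value:.4f}: {decision}")
```

A p-value above the threshold means only that the null hypothesis is not rejected; it does not show that the null hypothesis is true.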


Module 1: Introduction: What is Research?

Learning Objectives

By the end of this module, you will be able to:

- Explain how the scientific method is used to develop new knowledge

- Describe why it is important to follow a research plan

The Scientific Method consists of observing the world around you and creating a hypothesis about relationships in the world. A hypothesis is an informed and educated prediction or explanation about something. Part of the research process involves testing the hypothesis, and then examining the results of these tests as they relate to both the hypothesis and the world around you. When a researcher forms a hypothesis, this acts like a map through the research study. It tells the researcher which factors are important to study and how they might be related to each other or caused by a manipulation that the researcher introduces (e.g. a program, treatment or change in the environment). With this map, the researcher can interpret the information they collect and can draw sound conclusions about the results.

Research can be done with human beings, animals, plants, other organisms and inorganic matter. When research is done with human beings and animals, it must follow specific rules about the treatment of humans and animals that have been created by the U.S. Federal Government. This ensures that humans and animals are treated with dignity and respect, and that the research causes minimal harm.

No matter what topic is being studied, the value of the research depends on how well it is designed and done. Therefore, one of the most important considerations in doing good research is to follow the design or plan that is developed by an experienced researcher who is called the Principal Investigator (PI). The PI is in charge of all aspects of the research and creates what is called a protocol (the research plan) that all people doing the research must follow. By doing so, the PI and the public can be sure that the results of the research are real and useful to other scientists.

Module 1: Discussion Questions

- How is a hypothesis like a road map?

- Who is ultimately responsible for the design and conduct of a research study?

- How does following the research protocol contribute to informing public health practices?


Scientific Method Steps in Psychology Research

Steps, Uses, and Key Terms


How do researchers investigate psychological phenomena? They utilize a process known as the scientific method to study different aspects of how people think and behave.

When conducting research, the scientific method steps to follow are:

- Observe what you want to investigate

- Ask a research question and make predictions

- Test the hypothesis and collect data

- Examine the results and draw conclusions

- Report and share the results

This process not only allows scientists to investigate and understand different psychological phenomena but also provides researchers and others a way to share and discuss the results of their studies.

Generally, there are five main steps in the scientific method, although some may break down this process into six or seven steps. An additional step in the process can also include developing new research questions based on your findings.

What Is the Scientific Method?

What is the scientific method and how is it used in psychology?

In its most common form, the scientific method consists of five steps. It is essentially a step-by-step process that researchers can follow to determine if there is some type of relationship between two or more variables.

By knowing the steps of the scientific method, you can better understand the process researchers go through to arrive at conclusions about human behavior.

Scientific Method Steps

While research studies can vary, these are the basic steps that psychologists and scientists use when investigating human behavior.

The following are the scientific method steps:

Step 1. Make an Observation

Before a researcher can begin, they must choose a topic to study. Once an area of interest has been chosen, the researcher must then conduct a thorough review of the existing literature on the subject. This review will provide valuable information about what has already been learned about the topic and what questions remain to be answered.

A literature review might involve looking at a considerable amount of written material from both books and academic journals dating back decades.

The relevant information collected by the researcher will be presented in the introduction section of the final published study results. This background material will also help the researcher with the first major step in conducting a psychology study: formulating a hypothesis.