Exploring Experimental Research: Methodologies, Designs, and Applications Across Disciplines

- SSRN Electronic Journal

- The National University of Cheasim Kamchaymear


Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions

Step 1: Consider your aims and approach

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.


Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.

Step 2: Choose a type of research design

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

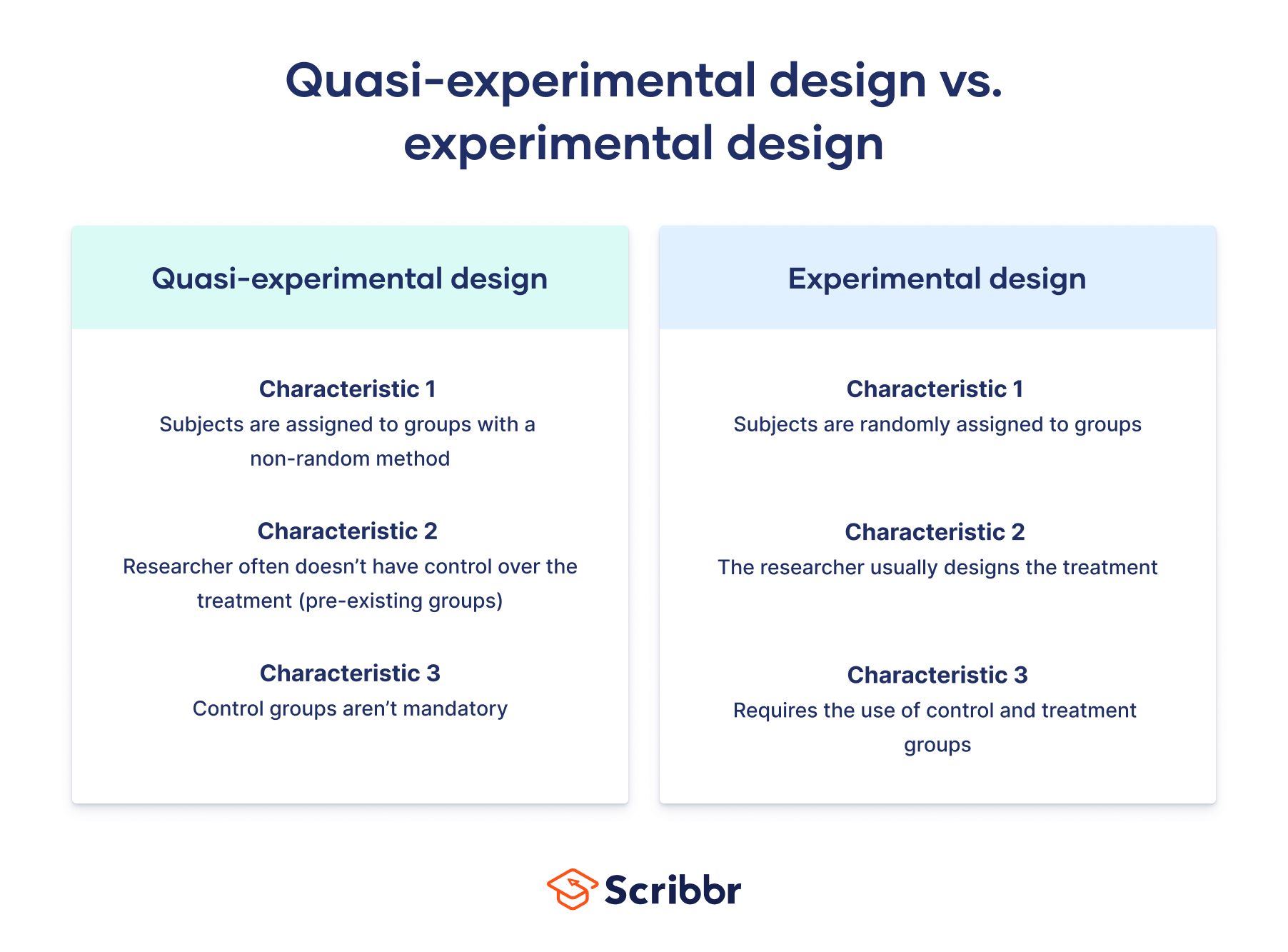

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

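| Type of design | Purpose and characteristics |
|---|---|
| Experimental | Tests cause-and-effect relationships; the researcher manipulates a variable and randomly assigns subjects to groups |
| Quasi-experimental | Tests cause-and-effect relationships, but subjects are assigned to groups by non-random criteria |
| Correlational | Measures variables and describes the relationships between them; can't establish cause and effect |
| Descriptive | Measures variables to describe characteristics and trends; can't establish cause and effect |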

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships while minimising the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

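| Type of design | Purpose and characteristics |
|---|---|
| Grounded theory | Develops a new theory inductively, by systematically collecting and analysing data |
| Phenomenology | Investigates a phenomenon through the lived experiences of the people involved |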

Step 3: Identify your population and sampling method

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.


Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
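The following is a minimal Python sketch of the two approaches. It assumes you have a complete list of the population (a sampling frame) to draw from, which is often the hardest part in practice. The student IDs and function names are hypothetical. The convenience sample takes the first 100 names to stand in for "whoever is easiest to reach".

```python
import random

def simple_random_sample(population, n, seed=None):
    """Probability sampling: every member has an equal chance of selection."""
    rng = random.Random(seed)  # seeded generator so the draw can be reproduced
    return rng.sample(population, n)

def convenience_sample(population, n):
    """Non-probability sampling: take whoever is easiest to reach (here, the first n)."""
    return population[:n]

# Hypothetical sampling frame of 1,000 student IDs
students = [f"S{i:04d}" for i in range(1000)]
random_group = simple_random_sample(students, 100, seed=42)
easy_group = convenience_sample(students, 100)
print(len(random_group), len(set(random_group)))  # 100 100
```

If the first 100 IDs differ systematically from the rest, the convenience sample inherits that bias. Random draws avoid this on average.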

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Step 4: Choose your data collection methods

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.


Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.


Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

| Field | Examples of data collection methods |
|---|---|
| Media & communication | Collecting a sample of texts (e.g., speeches, articles, or social media posts) for data on cultural norms and narratives |
| Psychology | Using technologies like neuroimaging, eye-tracking, or computer-based tasks to collect data on things like attention, emotional response, or reaction time |
| Education | Using tests or assignments to collect data on knowledge and skills |
| Physical sciences | Using scientific instruments to collect data on things like weight, blood pressure, or chemical composition |

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

Step 5: Plan your data collection procedures

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.
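For surveys, operationalisation often ends in a scoring rule. The sketch below assumes a hypothetical five-item questionnaire answered on a scale of 1 to 5. Some items are worded negatively, so they must be reverse-coded before summing. The item positions and scale range are illustrative and are not taken from any established instrument.

```python
def scale_score(responses, reverse_items, scale_min=1, scale_max=5):
    """Sum Likert-type responses into one score, flipping reverse-worded items."""
    total = 0
    for i, answer in enumerate(responses):
        if not scale_min <= answer <= scale_max:
            raise ValueError(f"item {i}: response {answer} is outside {scale_min}-{scale_max}")
        if i in reverse_items:
            # On a 1-5 scale, 1 becomes 5, 2 becomes 4, and so on
            answer = scale_min + scale_max - answer
        total += answer
    return total

# Hypothetical respondent; items 2 and 4 (0-based) are negatively worded
score = scale_score([5, 4, 1, 5, 2], reverse_items={2, 4})
print(score)  # 23
```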

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.


For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.
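Cronbach's alpha is one common way to check the reliability of a multi-item questionnaire during a pilot study. It measures internal consistency, meaning how closely the items agree with each other. The sketch below uses hypothetical pilot data. Alpha says nothing about validity, which needs separate evidence.

```python
import statistics

def cronbach_alpha(rows):
    """Internal-consistency reliability of a multi-item scale.

    rows: one list of item scores per respondent (all the same length).
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_var_sum = sum(statistics.variance(item) for item in items)
    total_var = statistics.variance([sum(r) for r in rows])  # variance of total scores
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical pilot data: 5 respondents x 3 items
pilot = [[4, 5, 4], [2, 2, 3], [3, 3, 3], [5, 4, 5], [1, 2, 1]]
print(round(cronbach_alpha(pilot), 2))
```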

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?
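A power analysis is one common way to answer the sample-size question. The sketch below uses the normal approximation for comparing two group means. It assumes you can state the smallest standardized difference (Cohen's d) you want to detect. The default significance level and power are conventional choices, not requirements.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-group comparison of means.

    Normal approximation: n = 2 * ((z_(1-alpha/2) + z_power) / d) ** 2,
    where d is the standardized difference you expect to detect.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

print(n_per_group(0.5))  # 63
```

Exact calculations based on the t distribution give a slightly larger answer (64 per group for d = 0.5), so treat this result as a lower bound. Also allow for people who decline or drop out.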

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.

Step 6: Decide on your data analysis strategies

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
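Python's standard library can compute the three kinds of summary listed above. The scores below are hypothetical, and these calculations assume interval- or ratio-level data. `statistics.stdev` gives the sample standard deviation (dividing by n − 1).

```python
from collections import Counter
import statistics

scores = [70, 80, 80, 90, 100]  # hypothetical test scores

distribution = Counter(scores)   # frequency of each score
mean = statistics.mean(scores)   # central tendency
sd = statistics.stdev(scores)    # variability (sample standard deviation)

print(sorted(distribution.items()))  # [(70, 1), (80, 2), (90, 1), (100, 1)]
print(mean)                          # 84
print(round(sd, 2))                  # 11.4
```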

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
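As an illustration of a comparison test, this sketch computes Welch's t statistic for two independent groups. Welch's test is a variant of the t test that does not assume equal variances. The data are hypothetical. Turning t into a p value requires the t distribution, which Python's standard library lacks. A library such as SciPy runs the full test with `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
import statistics

def welch_t(a, b):
    """Welch's t statistic and degrees of freedom for two independent groups."""
    va = statistics.variance(a) / len(a)  # squared standard error of group a's mean
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

control = [1, 2, 3, 4, 5]    # hypothetical outcome scores
treatment = [2, 3, 4, 5, 6]
t, df = welch_t(control, treatment)
print(t, df)  # -1.0 8.0
```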

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

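| Approach | Characteristics |
|---|---|
| Thematic analysis | Identifies, analyses, and interprets recurring patterns (themes) in the data |
| Discourse analysis | Studies how language is used in its social context and what it communicates |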

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

Frequently asked questions

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Cite this Scribbr article


McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 9 September 2024, from https://www.scribbr.co.uk/research-methods/research-design/


Experimental design: Guide, steps, examples

Last updated: 27 April 2023

Reviewed by: Miroslav Damyanov


Experimental research design is a scientific framework that allows you to manipulate one or more variables while controlling the test environment.

When testing a theory or new product, it can be helpful to have a certain level of control and manipulate variables to discover different outcomes. You can use these experiments to determine cause and effect or study variable associations.

This guide explores the types of experimental design, the steps in designing an experiment, and the advantages and limitations of experimental design.


What is experimental research design?

You can determine the relationship between variables by:

- Manipulating one or more independent variables (i.e., stimuli or treatments)

- Measuring the effects on one or more dependent variables (i.e., outcomes)

Analyzing the relationship between variables with measurable data increases the accuracy of your results.

What is a good experimental design?

A good experimental design requires:

- Significant planning to ensure control over the testing environment

- Sound experimental treatments

- Properly assigning subjects to treatment groups

Without proper planning, unexpected external variables can alter an experiment's outcome.

To meet your research goals, your experimental design should include these characteristics:

- Provide unbiased estimates of the effects of inputs and their associated uncertainties

- Enable the researcher to detect differences caused by independent variables

- Include a plan for analysis and reporting of the results

- Provide easily interpretable results with specific conclusions

What's the difference between experimental and quasi-experimental design?

The major difference between experimental and quasi-experimental design is the random assignment of subjects to groups.

A true experiment relies on certain controls. Typically, the researcher designs the treatment and randomly assigns subjects to control and treatment groups.

However, these conditions are unethical or impossible to achieve in some situations.

When it's unethical or impractical to assign participants randomly, that’s when a quasi-experimental design comes in.

This design allows researchers to conduct a similar experiment by assigning subjects to groups based on non-random criteria.

Another type of quasi-experimental design might occur when the researcher doesn't have control over the treatment but studies pre-existing groups after they receive different treatments.

When can a researcher conduct experimental research?

Researchers in many settings and professions use experimental research to gather information and observe behavior under controlled conditions.

In general, a researcher can conduct experimental research whenever they want to test a theory by manipulating an independent variable and measuring its effect on a dependent variable while controlling other variables.

Experimental research is an option when the project includes an independent variable and the goal is to understand a cause-and-effect relationship.

The importance of experimental research design

Experimental research enables researchers to conduct studies that provide specific, clear answers to questions and hypotheses.

Researchers can test independent variables in controlled settings to:

- Test the effectiveness of a new medication

- Design better products for consumers

- Answer questions about human health and behavior

A well-designed research plan helps a researcher answer vital research questions accurately and with minimal error. The resulting conclusions can then inform decisions about the independent variable, such as whether to adopt a new medication or product design.

Types of experimental research designs

There are three main types of experimental research design. The research type you use will depend on the criteria of your experiment, your research budget, and environmental limitations.

Pre-experimental research design

A pre-experimental research study is a basic observational study that monitors independent variables’ effects.

During research, you observe one or more groups after applying a treatment to test whether the treatment causes any change.

The three subtypes of pre-experimental research design are:

One-shot case study research design

This design exposes a single test group to a single stimulus and measures the results after the application.

Because there is no pretest or control group, researchers can only presume that the stimulus or treatment caused any changes they observe in the test subjects.

One-group pretest-posttest design

This method uses a single test group but includes a pretest study as a benchmark. The researcher applies a test before and after the group’s exposure to a specific stimulus.

Static group comparison design

This method includes two or more groups, enabling the researcher to use one group as a control. They apply a stimulus to one group and leave the other group untreated. The groups are not formed by random assignment.

A posttest study compares the results among groups.

True experimental research design

A true experiment is the most rigorous of the three designs. It uses statistical analysis to support or refute a specific hypothesis.

Under fully controlled conditions, researchers randomly assign participants to two or more groups and expose each group to a different stimulus.

Random assignment reduces the potential for bias, providing more reliable results.

These are the three main subtypes of true experimental research design:

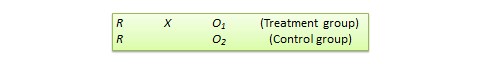

Posttest-only control group design

This structure requires the researcher to randomly divide participants into two groups. One group receives no stimulus and acts as a control while the other group is exposed to the stimulus.

Researchers perform a test at the end of the experiment to measure the effects of exposure to the stimulus.

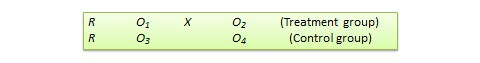

Pretest-posttest control group design

This design also requires two randomly assigned groups. It adds a pretest as a benchmark before introducing the stimulus.

The pretest lets researchers compare each group's change from pretest to posttest, not just the final scores. For instance, if the control group also changes, taking the test twice (or some other factor affecting both groups) may have changed the results.
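One simple way to estimate the treatment effect in a pretest-posttest control group design is to subtract the control group's average gain from the treatment group's average gain. Changes caused by retesting, or by anything else that affects both groups, then cancel out. The sketch below uses hypothetical scores. Other analyses, such as ANCOVA with the pretest as a covariate, are also common.

```python
import statistics

def mean_gain(pre, post):
    """Average change from pretest to posttest for one group."""
    return statistics.mean(after - before for before, after in zip(pre, post))

def gain_difference(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Treatment group's mean gain minus the control group's mean gain."""
    return mean_gain(treat_pre, treat_post) - mean_gain(ctrl_pre, ctrl_post)

# Hypothetical scores: both groups improve, but the treatment group improves more
ctrl_pre, ctrl_post = [10, 12, 11], [11, 13, 12]
treat_pre, treat_post = [10, 12, 11], [15, 17, 16]
print(mean_gain(ctrl_pre, ctrl_post))  # 1 (change seen even without the stimulus)
print(gain_difference(treat_pre, treat_post, ctrl_pre, ctrl_post))  # 4
```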

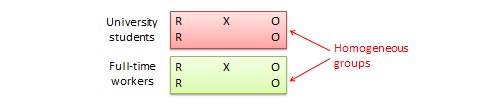

Solomon four-group design

This structure divides subjects into four groups, two of which are control groups. Researchers give the first control group a posttest only and the second control group a pretest and a posttest.

The two experimental groups mirror the control groups, but researchers expose them to the stimulus. Comparing the groups in several ways lets researchers separate the effect of the stimulus from the effect of taking the pretest.
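The four Solomon groups form a 2 × 2 arrangement: treatment or no treatment, crossed with pretest or no pretest. The sketch below uses hypothetical posttest means to show how comparing the groups separates the treatment effect from the effect of taking a pretest. A full analysis would test these effects statistically, for example with a two-way ANOVA.

```python
def solomon_effects(posttest_means):
    """Estimate effects from the posttest means of the four Solomon groups.

    posttest_means maps (treated, pretested) -> mean posttest score.
    """
    m = posttest_means
    treated = (m[(True, True)] + m[(True, False)]) / 2
    untreated = (m[(False, True)] + m[(False, False)]) / 2
    pretested = (m[(True, True)] + m[(False, True)]) / 2
    not_pretested = (m[(True, False)] + m[(False, False)]) / 2
    treatment_effect = treated - untreated      # average effect of the stimulus
    pretest_effect = pretested - not_pretested  # average effect of taking a pretest
    # Interaction: does the stimulus work differently for pretested groups?
    interaction = (m[(True, True)] - m[(False, True)]) - (m[(True, False)] - m[(False, False)])
    return treatment_effect, pretest_effect, interaction

# Hypothetical posttest means for the four groups
means = {
    (True, True): 20,    # experimental group, pretest + posttest
    (True, False): 18,   # experimental group, posttest only
    (False, True): 12,   # control group, pretest + posttest
    (False, False): 10,  # control group, posttest only
}
print(solomon_effects(means))  # (8.0, 2.0, 0)
```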

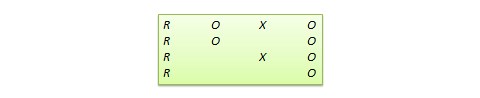

Quasi-experimental research design

Although closely related to a true experiment, quasi-experimental research design differs in approach and scope.

Quasi-experimental research design doesn’t randomly assign participants to groups. Instead, researchers typically form groups based on pre-existing differences.

Quasi-experimental research is more common in educational studies, nursing, or other research projects where it's not ethical or practical to use randomized subject groups.

5 steps for designing an experiment

Experimental research requires a clearly defined plan to outline the research parameters and expected goals.

Here are five key steps in designing a successful experiment:

Step 1: Define variables and their relationship

Your experiment should begin with a question: What are you hoping to learn through your experiment?

The relationship between variables in your study will determine your answer.

Define the independent variable (the intended stimulus) and the dependent variable (the expected effect of the stimulus). After identifying these variables, consider how you might control them in your experiment.

Could natural variations affect your research? If so, your experiment should include a pretest and posttest.

Step 2: Develop a specific, testable hypothesis

With a firm understanding of the system you intend to study, you can write a specific, testable hypothesis.

What is the expected outcome of your study?

Develop a prediction about how the independent variable will affect the dependent variable.

How will the stimuli in your experiment affect your test subjects?

Your hypothesis should provide a prediction of the answer to your research question.

Step 3: Design experimental treatments to manipulate your independent variable

Depending on your experiment, your variable may be a fixed stimulus (like a medical treatment) or a variable stimulus (like a period during which an activity occurs).

Determine which type of stimulus meets your experiment’s needs and how widely or finely to vary your stimuli.

Step 4: Assign subjects to groups

When you have a clear idea of how to carry out your experiment, you can determine how to assemble test groups for an accurate study.

When choosing your study groups, consider:

- The size of your experiment

- Whether you can select groups randomly

- Your target audience for the outcome of the study

You should be able to create groups with an equal number of subjects and include subjects that match your target audience. Remember, you should assign one group as a control and use one or more groups to study the effects of variables.
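One simple way to assign subjects randomly while keeping group sizes equal is to shuffle the full list once and then deal subjects out to the groups in turn. In the minimal sketch below, the participant IDs and group names are hypothetical.

```python
import random

def assign_groups(subjects, groups, seed=None):
    """Randomly assign subjects to groups of (near-)equal size.

    Shuffle once, then deal subjects out in turn like cards,
    so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(subjects)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    assignment = {g: [] for g in groups}
    for i, subject in enumerate(shuffled):
        assignment[groups[i % len(groups)]].append(subject)
    return assignment

participants = [f"P{i:02d}" for i in range(1, 41)]  # 40 hypothetical participants
result = assign_groups(participants, ["control", "treatment"], seed=7)
print({g: len(members) for g, members in result.items()})  # {'control': 20, 'treatment': 20}
```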

Step 5: Plan how to measure your dependent variable

This step determines how you'll collect data to determine the study's outcome. You should seek reliable and valid measurements that minimize research bias or error.

You can measure some data with scientific tools, while you’ll need to operationalize other forms to turn them into measurable observations.

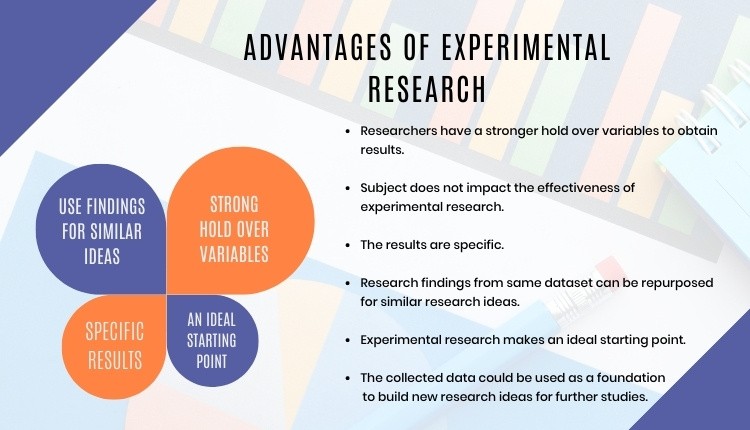

Advantages of experimental research

Experimental research is widely used across fields. It allows researchers to conduct experiments that answer specific questions.

While researchers use many methods to conduct different experiments, experimental research offers these distinct benefits:

- Researchers can determine cause and effect by manipulating variables.

- It gives researchers a high level of control.

- Researchers can test multiple variables within a single experiment.

- Many industries and fields of knowledge can use it.

- Researchers can replicate results to support the validity of the study.

- Natural settings can be replicated quickly, so research can begin without delay.

- Researchers can combine it with other research methods.

- It provides specific conclusions about the validity of a product, theory, or idea.

Disadvantages (or limitations) of experimental research

Unfortunately, no research type yields ideal conditions or perfect results.

While experimental research might be the right choice for some studies, certain conditions could render experiments useless or even dangerous.

Before conducting experimental research, consider these disadvantages and limitations:

Required professional qualification

Rigorous experimental research generally requires researchers with an academic background and specific training. This expertise helps ensure results are unbiased and valid.

Limited scope

Experimental research may not capture the complexity of some phenomena, such as social interactions or cultural norms. These are difficult to control in a laboratory setting.

Resource-intensive

Experimental research can be expensive, time-consuming, and require significant resources, such as specialized equipment or trained personnel.

Limited generalizability

The controlled nature means the research findings may not fully apply to real-world situations or people outside the experimental setting.

Practical or ethical concerns

Some experiments may involve manipulating variables that could harm participants or violate ethical guidelines .

Researchers must ensure their experiments do not cause harm or discomfort to participants.

Sometimes, recruiting a sample of people to randomly assign may be difficult.

Experimental research design example

Experiments across all industries and research realms provide scientists, developers, and other researchers with clear answers. These experiments can help solve problems, create inventions, and treat illnesses.

Product design testing is an excellent example of experimental research.

A company in the product development phase creates multiple prototypes for testing. Researchers randomly assign participants to test groups and introduce each group to a different prototype.

When groups experience different product designs, the company can assess which option most appeals to potential customers.
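If each group sees only one prototype, the company might compare the share of participants in each group who say they would buy it. The sketch below runs a two-proportion z test for that comparison. The counts are hypothetical, and the normal approximation assumes reasonably large groups.

```python
import math
from statistics import NormalDist

def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Two-sided z test comparing the proportion of 'yes' responses in two groups."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)  # proportion assuming no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical: 60 of 100 shown prototype A would buy it, versus 40 of 100 shown prototype B
z, p = two_proportion_z(60, 100, 40, 100)
print(round(z, 2), p)  # 2.83 and a p value below 0.01
```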

Experimental research design provides researchers with a controlled environment to conduct experiments that evaluate cause and effect.

Using the five steps to develop a research plan helps you anticipate and control external variables while answering important research questions.


6.2 Experimental Design

Learning objectives.

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it.

- Define what a control condition is, explain its purpose in research on treatment effectiveness, and describe some alternative types of control conditions.

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

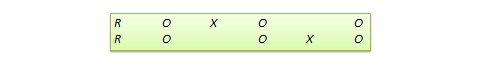

Between-Subjects Experiments

In a between-subjects experiment, each participant is tested in only one condition. For example, a researcher with a sample of 100 college students might assign half of them to write about a traumatic event and the other half to write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assign participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average intelligence quotients (IQs), similar average levels of motivation, similar average numbers of health problems, and so on. This is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment

The primary way that researchers accomplish this kind of control of extraneous variables across conditions is called random assignment, which means using a random process to decide which participants are tested in which conditions. Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and it is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other fields too.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands heads, the participant is assigned to Condition A, and if it lands tails, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions—one for each participant expected to be in the experiment—is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as he or she is tested. When the procedure is computerized, the computer program often handles the random assignment.
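As a minimal Python sketch of the strict procedure, assuming a seeded pseudorandom generator is an acceptable stand-in for a coin or a die, the following draws one condition independently and with equal probability for each participant, and builds the whole sequence in advance. The helper name `strict_random_assignment` and the seed value are illustrative, not taken from the text.

```python
import random

def strict_random_assignment(n_participants, conditions, seed=None):
    """Assign each participant independently, with equal probability, to one condition.

    Equivalent in spirit to flipping a coin (two conditions) or drawing a
    random integer from 1 to k (k conditions) separately for each participant.
    """
    rng = random.Random(seed)  # seeded so the sequence can be reproduced
    # One independent, uniform draw per participant.
    return [rng.choice(conditions) for _ in range(n_participants)]

# Create the full sequence ahead of time; each new participant
# receives the next entry in the list.
sequence = strict_random_assignment(9, ["A", "B", "C"], seed=42)
for participant, condition in enumerate(sequence, start=1):
    print(participant, condition)
```

Because each draw is independent, nothing prevents the groups from ending up unequal in size.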

One problem with coin flipping and other strict procedures for random assignment is that they are likely to result in unequal sample sizes in the different conditions. Unequal sample sizes are generally not a serious problem, and you should never throw away data you have already collected to achieve equal sample sizes. However, for a fixed number of participants, it is statistically most efficient to divide them into equal-sized groups. It is standard practice, therefore, to use a kind of modified random assignment that keeps the number of participants in each group as similar as possible. One approach is block randomization. In block randomization, all the conditions occur once in the sequence before any of them is repeated. Then they all occur again before any of them is repeated again. Within each of these “blocks,” the conditions occur in a random order. Again, the sequence of conditions is usually generated before any participants are tested, and each new participant is assigned to the next condition in the sequence. Table 6.2 “Block Randomization Sequence for Assigning Nine Participants to Three Conditions” shows such a sequence for assigning nine participants to three conditions. The Research Randomizer website (http://www.randomizer.org) will generate block randomization sequences for any number of participants and conditions. Again, when the procedure is computerized, the computer program often handles the block randomization.

Table 6.2 Block Randomization Sequence for Assigning Nine Participants to Three Conditions

| Participant | Condition |
|---|---|
| 4 | B |
| 5 | C |
| 6 | A |
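The block procedure can also be written as a short Python sketch. It assumes a seeded pseudorandom generator and uses a hypothetical helper name, `block_randomization`; it is not the algorithm used by the Research Randomizer website.

```python
import random

def block_randomization(n_participants, conditions, seed=None):
    """Return a condition sequence in which every condition appears once per block.

    Conditions are shuffled within each block, so all conditions occur once
    before any is repeated. If n_participants is not a multiple of the number
    of conditions, the final block is cut short.
    """
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions)  # copy so the caller's list is not shuffled
        rng.shuffle(block)        # random order within this block
        sequence.extend(block)
    return sequence[:n_participants]

sequence = block_randomization(9, ["A", "B", "C"], seed=3)
for participant, condition in enumerate(sequence, start=1):
    print(participant, condition)
```

With nine participants and three conditions, this yields three complete blocks, so each condition is used exactly three times.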

Random assignment is not guaranteed to control all extraneous variables across conditions. It is always possible that just by chance, the participants in one condition might turn out to be substantially older, less tired, more motivated, or less depressed on average than the participants in another condition. However, there are some reasons that this is not a major concern. One is that random assignment works better than one might expect, especially for large samples. Another is that the inferential statistics that researchers use to decide whether a difference between groups reflects a difference in the population take the “fallibility” of random assignment into account. Yet another reason is that even if random assignment does result in a confounding variable and therefore produces misleading results, this is likely to be detected when the experiment is replicated. The upshot is that random assignment to conditions, although not infallible in terms of controlling extraneous variables, is always considered a strength of a research design.
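To get a feel for why chance imbalances matter less in large samples, the following Python simulation (an illustration added here, not part of the original study material) randomly assigns simulated participants to two groups and measures how far apart the groups' mean ages land. The age distribution (mean 40, SD 12) and the number of repetitions are arbitrary assumptions.

```python
import random
import statistics

def mean_imbalance(n_per_group, n_reps=1000, seed=0):
    """Average absolute gap in mean age between two randomly assigned groups.

    Ages are drawn from a normal distribution with mean 40 and SD 12,
    an arbitrary assumption used only for illustration.
    """
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_reps):
        ages = [rng.gauss(40, 12) for _ in range(2 * n_per_group)]
        rng.shuffle(ages)  # random assignment: first half -> condition A, rest -> B
        group_a, group_b = ages[:n_per_group], ages[n_per_group:]
        gaps.append(abs(statistics.fmean(group_a) - statistics.fmean(group_b)))
    return statistics.fmean(gaps)

for n in (10, 50, 250):
    print(f"{n:>4} per group: average age gap {mean_imbalance(n):.2f} years")
```

Under these assumptions the typical gap shrinks roughly in proportion to 1/√n, in line with the claim that random assignment works especially well for large samples.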

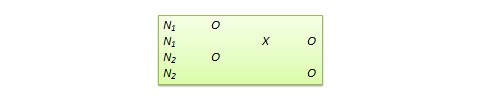

Treatment and Control Conditions

Between-subjects experiments are often used to determine whether a treatment works. In psychological research, a treatment is any intervention meant to change people’s behavior for the better. This includes psychotherapies and medical treatments for psychological disorders but also interventions designed to improve learning, promote conservation, reduce prejudice, and so on. To determine whether a treatment works, participants are randomly assigned to either a treatment condition, in which they receive the treatment, or a control condition, in which they do not receive the treatment. If participants in the treatment condition end up better off than participants in the control condition (for example, they are less depressed, learn faster, conserve more, or express less prejudice), then the researcher can conclude that the treatment works. In research on the effectiveness of psychotherapies and medical treatments, this type of experiment is often called a randomized clinical trial.

There are different types of control conditions. In a no-treatment control condition, participants receive no treatment whatsoever. One problem with this approach, however, is the existence of placebo effects. A placebo is a simulated treatment that lacks any active ingredient or element that should make it effective, and a placebo effect is a positive effect of such a treatment. Many folk remedies that seem to work, such as eating chicken soup for a cold or placing soap under the bedsheets to stop nighttime leg cramps, are probably nothing more than placebos. Although placebo effects are not well understood, they are probably driven primarily by people’s expectations that they will improve. Having the expectation to improve can result in reduced stress, anxiety, and depression, which can alter perceptions and even improve immune system functioning (Price, Finniss, & Benedetti, 2008).

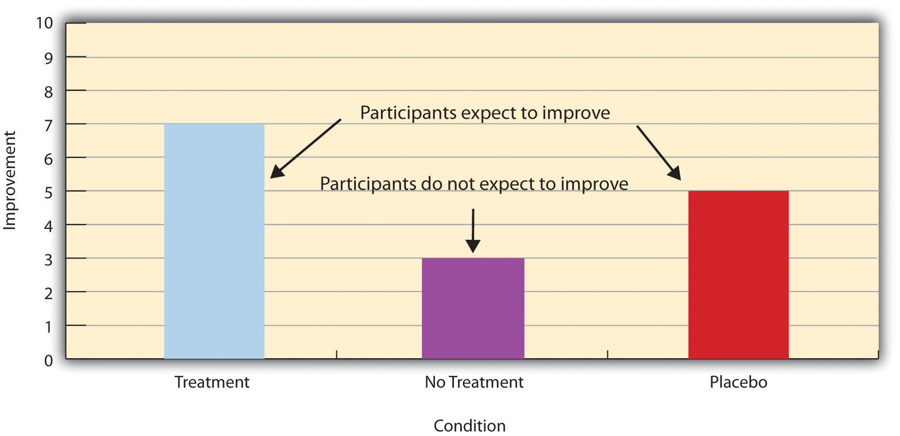

Placebo effects are interesting in their own right (see Note 6.28 “The Powerful Placebo”), but they also pose a serious problem for researchers who want to determine whether a treatment works. Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions” shows some hypothetical results in which participants in a treatment condition improved more on average than participants in a no-treatment control condition. If these conditions (the two leftmost bars in Figure 6.2) were the only conditions in this experiment, however, one could not conclude that the treatment worked. It could be instead that participants in the treatment group improved more because they expected to improve, while those in the no-treatment control condition did not.

Figure 6.2 Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions

Fortunately, there are several solutions to this problem. One is to include a placebo control condition, in which participants receive a placebo that looks much like the treatment but lacks the active ingredient or element thought to be responsible for the treatment’s effectiveness. When participants in a treatment condition take a pill, for example, then those in a placebo control condition would take an identical-looking pill that lacks the active ingredient in the treatment (a “sugar pill”). In research on psychotherapy effectiveness, the placebo might involve going to a psychotherapist and talking in an unstructured way about one’s problems. The idea is that if participants in both the treatment and the placebo control groups expect to improve, then any improvement in the treatment group over and above that in the placebo control group must have been caused by the treatment and not by participants’ expectations. This is what is shown by a comparison of the two outer bars in Figure 6.2.

Of course, the principle of informed consent requires that participants be told that they will be assigned to either a treatment or a placebo control condition, even though they cannot be told which until the experiment ends. In many cases the participants who had been in the control condition are then offered an opportunity to have the real treatment. An alternative approach is to use a waitlist control condition, in which participants are told that they will receive the treatment but must wait until the participants in the treatment condition have already received it. This allows researchers to compare participants who have received the treatment with participants who are not currently receiving it but who still expect to improve (eventually). A final solution to the problem of placebo effects is to leave out the control condition completely and compare any new treatment with the best available alternative treatment. For example, a new treatment for simple phobia could be compared with standard exposure therapy. Because participants in both conditions receive a treatment, their expectations about improvement should be similar. This approach also makes sense because once there is an effective treatment, the interesting question about a new treatment is not simply “Does it work?” but “Does it work better than what is already available?”

The Powerful Placebo

Many people are not surprised that placebos can have a positive effect on disorders that seem fundamentally psychological, including depression, anxiety, and insomnia. However, placebos can also have a positive effect on disorders that most people think of as fundamentally physiological. These include asthma, ulcers, and warts (Shapiro & Shapiro, 1999). There is even evidence that placebo surgery—also called “sham surgery”—can be as effective as actual surgery.

Medical researcher J. Bruce Moseley and his colleagues conducted a study on the effectiveness of two arthroscopic surgery procedures for osteoarthritis of the knee (Moseley et al., 2002). The control participants in this study were prepped for surgery, received a tranquilizer, and even received three small incisions in their knees. But they did not receive the actual arthroscopic surgical procedure. The surprising result was that all participants improved in terms of both knee pain and function, and the sham surgery group improved just as much as the treatment groups. According to the researchers, “This study provides strong evidence that arthroscopic lavage with or without débridement [the surgical procedures used] is not better than and appears to be equivalent to a placebo procedure in improving knee pain and self-reported function” (p. 85).

Research has shown that patients with osteoarthritis of the knee who receive a “sham surgery” experience reductions in pain and improvement in knee function similar to those of patients who receive a real surgery.

Army Medicine – Surgery – CC BY 2.0.

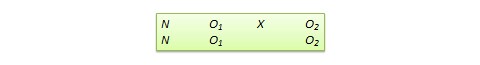

Within-Subjects Experiments

In a within-subjects experiment, each participant is tested under all conditions. Consider an experiment on the effect of a defendant’s physical attractiveness on judgments of his guilt. Again, in a between-subjects experiment, one group of participants would be shown an attractive defendant and asked to judge his guilt, and another group of participants would be shown an unattractive defendant and asked to judge his guilt. In a within-subjects experiment, however, the same group of participants would judge the guilt of both an attractive and an unattractive defendant.

The primary advantage of this approach is that it provides maximum control of extraneous participant variables. Participants in all conditions have the same mean IQ, same socioeconomic status, same number of siblings, and so on—because they are the very same people. Within-subjects experiments also make it possible to use statistical procedures that remove the effect of these extraneous participant variables on the dependent variable and therefore make the data less “noisy” and the effect of the independent variable easier to detect. We will look more closely at this idea later in the book.
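A rough Python illustration of this idea follows, built on invented assumptions: each person has a baseline score with SD 10, measurement noise has SD 2, and the true effect is 2 points. Subtracting a participant's score in one condition from the same participant's score in the other cancels that person's baseline, so the difference scores vary much less than the raw scores do. This is the general logic behind paired analyses, sketched here rather than taken from the text.

```python
import random
import statistics

def simulate_within_subjects(n=40, effect=2.0, seed=1):
    """Simulate one score per participant in each of two conditions.

    Each participant's personal baseline (an extraneous participant
    variable) is added to both of their scores. All numbers are
    illustrative assumptions.
    """
    rng = random.Random(seed)
    cond_a, cond_b = [], []
    for _ in range(n):
        baseline = rng.gauss(50, 10)  # large person-to-person variation
        cond_a.append(baseline + rng.gauss(0, 2))
        cond_b.append(baseline + effect + rng.gauss(0, 2))
    return cond_a, cond_b

a, b = simulate_within_subjects()
# The baseline appears in both scores, so it cancels out of each difference.
differences = [score_b - score_a for score_a, score_b in zip(a, b)]

print("SD of raw scores in condition B:", round(statistics.stdev(b), 2))
print("SD of within-person differences:", round(statistics.stdev(differences), 2))
print("Mean difference (estimated effect):", round(statistics.fmean(differences), 2))
```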

Carryover Effects and Counterbalancing

The primary disadvantage of within-subjects designs is that they can result in carryover effects. A carryover effect is an effect of being tested in one condition on participants’ behavior in later conditions. One type of carryover effect is a practice effect, where participants perform a task better in later conditions because they have had a chance to practice it. Another type is a fatigue effect, where participants perform a task worse in later conditions because they become tired or bored. Being tested in one condition can also change how participants perceive stimuli or interpret their task in later conditions. This is called a context effect. For example, an average-looking defendant might be judged more harshly when participants have just judged an attractive defendant than when they have just judged an unattractive defendant. Within-subjects experiments also make it easier for participants to guess the hypothesis. For example, a participant who is asked to judge the guilt of an attractive defendant and then is asked to judge the guilt of an unattractive defendant is likely to guess that the hypothesis is that defendant attractiveness affects judgments of guilt. This could lead the participant to judge the unattractive defendant more harshly because he thinks this is what he is expected to do. Or it could make participants judge the two defendants similarly in an effort to be “fair.”

Carryover effects can be interesting in their own right. (Does the attractiveness of one person depend on the attractiveness of other people that we have seen recently?) But when they are not the focus of the research, carryover effects can be problematic. Imagine, for example, that participants judge the guilt of an attractive defendant and then judge the guilt of an unattractive defendant. If they judge the unattractive defendant more harshly, this might be because of his unattractiveness. But it could be instead that they judge him more harshly because they are becoming bored or tired. In other words, the order of the conditions is a confounding variable. The attractive condition is always the first condition and the unattractive condition the second. Thus any difference between the conditions in terms of the dependent variable could be caused by the order of the conditions and not the independent variable itself.

There is a solution to the problem of order effects, however, that can be used in many situations. It is counterbalancing, which means testing different participants in different orders. For example, some participants would be tested in the attractive defendant condition followed by the unattractive defendant condition, and others would be tested in the unattractive condition followed by the attractive condition. With three conditions, there would be six different orders (ABC, ACB, BAC, BCA, CAB, and CBA), so some participants would be tested in each of the six orders. With counterbalancing, participants are assigned to orders randomly, using the techniques we have already discussed. Thus random assignment plays an important role in within-subjects designs just as in between-subjects designs. Here, instead of being randomly assigned to conditions, participants are randomly assigned to different orders of conditions. In fact, it can safely be said that if a study does not involve random assignment in one form or another, it is not an experiment.
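Generating every order and handing the orders out at random can be sketched in Python with `itertools.permutations`. The helper below is a hypothetical illustration that applies block randomization to orders instead of conditions, so that each order is used about equally often; it is one reasonable way to implement counterbalancing, not the only one.

```python
import itertools
import random

def counterbalanced_orders(conditions, n_participants, seed=None):
    """Randomly assign participants to every possible order of the conditions.

    All orders are handed out once, in shuffled blocks, before any order
    is reused (block randomization over orders).
    """
    orders = list(itertools.permutations(conditions))  # 6 orders for A, B, C
    rng = random.Random(seed)
    assignments = []
    while len(assignments) < n_participants:
        block = orders[:]  # copy the full list of orders
        rng.shuffle(block)
        assignments.extend(block)
    return assignments[:n_participants]

for participant, order in enumerate(counterbalanced_orders("ABC", 12, seed=2), start=1):
    print(participant, "".join(order))
```

With 12 participants and three conditions, each of the six orders (ABC, ACB, BAC, BCA, CAB, CBA) is used exactly twice.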

There are two ways to think about what counterbalancing accomplishes. One is that it controls the order of conditions so that it is no longer a confounding variable. Instead of the attractive condition always being first and the unattractive condition always being second, the attractive condition comes first for some participants and second for others. Likewise, the unattractive condition comes first for some participants and second for others. Thus any overall difference in the dependent variable between the two conditions cannot have been caused by the order of conditions. A second way to think about what counterbalancing accomplishes is that if there are carryover effects, it makes it possible to detect them. One can analyze the data separately for each order to see whether it had an effect.
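Analyzing the data separately for each order can be as simple as computing the within-person difference between conditions within each order group. The Python function below is a hypothetical helper, and the four records in the usage lines are made-up numbers for illustration only.

```python
import statistics

def effect_by_order(records):
    """Mean within-person difference (condition B minus condition A), split by order.

    `records` is a list of (order, score_a, score_b) tuples, where order is
    "AB" or "BA". Similar values across orders suggest the order of
    conditions had little effect; a large gap between them suggests that
    order (for example, a carryover effect) influenced the results.
    """
    diffs_by_order = {}
    for order, score_a, score_b in records:
        diffs_by_order.setdefault(order, []).append(score_b - score_a)
    return {order: statistics.fmean(diffs) for order, diffs in diffs_by_order.items()}

# Made-up data: (order, score in condition A, score in condition B)
example = [("AB", 5, 7), ("AB", 6, 8), ("BA", 4, 4), ("BA", 6, 5)]
print(effect_by_order(example))  # {'AB': 2.0, 'BA': -0.5}
```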

When 9 Is “Larger” Than 221

Researcher Michael Birnbaum has argued that the lack of context provided by between-subjects designs is often a bigger problem than the context effects created by within-subjects designs. To demonstrate this, he asked one group of participants to rate how large the number 9 was on a 1-to-10 rating scale and another group to rate how large the number 221 was on the same 1-to-10 rating scale (Birnbaum, 1999). Participants in this between-subjects design gave the number 9 a mean rating of 5.13 and the number 221 a mean rating of 3.10. In other words, they rated 9 as larger than 221! According to Birnbaum, this is because participants spontaneously compared 9 with other one-digit numbers (in which case it is relatively large) and compared 221 with other three-digit numbers (in which case it is relatively small).

Simultaneous Within-Subjects Designs

So far, we have discussed an approach to within-subjects designs in which participants are tested in one condition at a time. There is another approach, however, that is often used when participants make multiple responses in each condition. Imagine, for example, that participants judge the guilt of 10 attractive defendants and 10 unattractive defendants. Instead of having people make judgments about all 10 defendants of one type followed by all 10 defendants of the other type, the researcher could present all 20 defendants in a sequence that mixed the two types. The researcher could then compute each participant’s mean rating for each type of defendant. Or imagine an experiment designed to see whether people with social anxiety disorder remember negative adjectives (e.g., “stupid,” “incompetent”) better than positive ones (e.g., “happy,” “productive”). The researcher could have participants study a single list that includes both kinds of words and then have them try to recall as many words as possible. The researcher could then count the number of each type of word that was recalled. There are many ways to determine the order in which the stimuli are presented, but one common way is to generate a different random order for each participant.
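The random-order procedure for a simultaneous design can be sketched in Python as below. The stimulus labels, the helper names `mixed_presentation_order` and `mean_by_type`, and the seed are all hypothetical; the point is only that each participant receives a freshly shuffled, mixed sequence and that each participant's ratings are then averaged by stimulus type.

```python
import random
import statistics

def mixed_presentation_order(stimuli, rng):
    """Return a new random order of all stimuli, mixing the two types together."""
    order = list(stimuli)  # copy so the master list is left unchanged
    rng.shuffle(order)
    return order

def mean_by_type(responses):
    """Average one participant's ratings separately for each stimulus type.

    `responses` is a list of ((stimulus_type, stimulus_id), rating) pairs.
    """
    ratings_by_type = {}
    for (stim_type, _stim_id), rating in responses:
        ratings_by_type.setdefault(stim_type, []).append(rating)
    return {t: statistics.fmean(r) for t, r in ratings_by_type.items()}

# Hypothetical stimulus set: 10 attractive ("A") and 10 unattractive ("U") defendants.
stimuli = [("A", i) for i in range(10)] + [("U", i) for i in range(10)]

rng = random.Random(2024)  # one generator; each call yields a fresh order
orders = {participant: mixed_presentation_order(stimuli, rng) for participant in range(1, 4)}
print(orders[1][:5])  # first five defendants shown to participant 1
```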

Between-Subjects or Within-Subjects?

Almost every experiment can be conducted using either a between-subjects design or a within-subjects design. This means that researchers must choose between the two approaches based on their relative merits for the particular situation.

Between-subjects experiments have the advantage of being conceptually simpler and requiring less testing time per participant. They also avoid carryover effects without the need for counterbalancing. Within-subjects experiments have the advantage of controlling extraneous participant variables, which generally reduces noise in the data and makes it easier to detect a relationship between the independent and dependent variables.

A good rule of thumb, then, is that if it is possible to conduct a within-subjects experiment (with proper counterbalancing) in the time that is available per participant—and you have no serious concerns about carryover effects—this is probably the best option. If a within-subjects design would be difficult or impossible to carry out, then you should consider a between-subjects design instead. For example, if you were testing participants in a doctor’s waiting room or shoppers in line at a grocery store, you might not have enough time to test each participant in all conditions and therefore would opt for a between-subjects design. Or imagine you were trying to reduce people’s level of prejudice by having them interact with someone of another race. A within-subjects design with counterbalancing would require testing some participants in the treatment condition first and then in a control condition. But if the treatment works and reduces people’s level of prejudice, then they would no longer be suitable for testing in the control condition. This is true for many designs that involve a treatment meant to produce long-term change in participants’ behavior (e.g., studies testing the effectiveness of psychotherapy). Clearly, a between-subjects design would be necessary here.

Remember also that using one type of design does not preclude using the other type in a different study. There is no reason that a researcher could not use both a between-subjects design and a within-subjects design to answer the same research question. In fact, professional researchers often do exactly this.

Key Takeaways

- Experiments can be conducted using either between-subjects or within-subjects designs. Deciding which to use in a particular situation requires careful consideration of the pros and cons of each approach.

- Random assignment to conditions in between-subjects experiments or to orders of conditions in within-subjects experiments is a fundamental element of experimental research. Its purpose is to control extraneous variables so that they do not become confounding variables.

- Experimental research on the effectiveness of a treatment requires both a treatment condition and a control condition, which can be a no-treatment control condition, a placebo control condition, or a waitlist control condition. Experimental treatments can also be compared with the best available alternative.

Discussion: For each of the following topics, list the pros and cons of a between-subjects and within-subjects design and decide which would be better.

- You want to test the relative effectiveness of two training programs for running a marathon.

- Using photographs of people as stimuli, you want to see if smiling people are perceived as more intelligent than people who are not smiling.

- In a field experiment, you want to see if the way a panhandler is dressed (neatly vs. sloppily) affects whether or not passersby give him any money.

- You want to see if concrete nouns (e.g., dog) are recalled better than abstract nouns (e.g., truth).

Discussion: Imagine that an experiment shows that participants who receive psychodynamic therapy for a dog phobia improve more than participants in a no-treatment control group. Explain a fundamental problem with this research design and at least two ways that it might be corrected.

References

Birnbaum, M. H. (1999). How to show that 9 > 221: Collect judgments in a between-subjects design. Psychological Methods, 4, 243–249.

Moseley, J. B., O’Malley, K., Petersen, N. J., Menke, T. J., Brody, B. A., Kuykendall, D. H., … Wray, N. P. (2002). A controlled trial of arthroscopic surgery for osteoarthritis of the knee. The New England Journal of Medicine, 347, 81–88.

Price, D. D., Finniss, D. G., & Benedetti, F. (2008). A comprehensive review of the placebo effect: Recent advances and current thought. Annual Review of Psychology, 59, 565–590.

Shapiro, A. K., & Shapiro, E. (1999). The powerful placebo: From ancient priest to modern physician. Baltimore, MD: Johns Hopkins University Press.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Experimental Methods in Survey Research: Techniques that Combine Random Sampling with Random Assignment

ISBN: 978-1-119-08374-0

October 2019

Paul J. Lavrakas, Michael W. Traugott, Courtney Kennedy, Allyson L. Holbrook, Edith D. de Leeuw, Brady T. West

A thorough and comprehensive guide to the theoretical, practical, and methodological approaches used in survey experiments across disciplines such as political science, health sciences, sociology, economics, psychology, and marketing

This book explores and explains the broad range of experimental designs embedded in surveys that use both probability and non-probability samples. It approaches the usage of survey-based experiments from a Total Survey Error (TSE) perspective, which provides insight into the strengths and weaknesses of the techniques used.

Experimental Methods in Survey Research: Techniques that Combine Random Sampling with Random Assignment addresses experiments on within-unit coverage, reducing nonresponse, question and questionnaire design, minimizing interview measurement bias, using adaptive design, trend data, vignettes, the analysis of data from survey experiments, and other topics, across social, behavioral, and marketing science domains.

Each chapter begins with a description of the experimental method or application and its importance, followed by reference to relevant literature. At least one detailed original experimental case study then follows to illustrate the experimental method’s deployment, implementation, and analysis from a TSE perspective. The chapters conclude with theoretical and practical implications on the usage of the experimental method addressed. In summary, this book:

- Fills a gap in the current literature by successfully combining the subjects of survey methodology and experimental methodology in an effort to maximize both internal validity and external validity

- Offers a wide range of types of experimentation in survey research with in-depth attention to their various methodologies and applications

- Is edited by internationally recognized experts in the field of survey research/methodology and in the usage of survey-based experimentation, featuring contributions from across a variety of disciplines in the social and behavioral sciences

- Presents advances in the field of survey experiments, as well as relevant references in each chapter for further study

- Includes more than 20 types of original experiments carried out within probability sample surveys

- Addresses myriad practical and operational aspects for designing, implementing, and analyzing survey-based experiments by using a Total Survey Error perspective to address the strengths and weaknesses of each experimental technique and method

Experimental Methods in Survey Research: Techniques that Combine Random Sampling with Random Assignment is an ideal reference for survey researchers and practitioners in areas such as political science, health sciences, sociology, economics, psychology, public policy, data collection, data science, and marketing. It is also a very useful textbook for graduate-level courses on survey experiments and survey methodology.

Paul J. Lavrakas, PhD, is Senior Fellow at NORC at the University of Chicago, Adjunct Professor at the University of Illinois-Chicago, and Senior Methodologist at the Social Research Centre of Australian National University and at the Office for Survey Research at Michigan State University.

Michael W. Traugott, PhD, is Research Professor in the Institute for Social Research at the University of Michigan.

Courtney Kennedy, PhD, is Director of Survey Research at Pew Research Center in Washington, DC.

Allyson L. Holbrook, PhD, is Professor of Public Administration and Psychology at the University of Illinois-Chicago.

Edith D. de Leeuw, PhD, is Professor of Survey Methodology in the Department of Methodology and Statistics at Utrecht University.

Brady T. West, PhD, is Research Associate Professor in the Survey Research Center at the University of Michigan-Ann Arbor.

Understanding Survey Research Designs: Experimental vs Descriptive


Have you ever wondered how researchers gather data to explore trends, opinions, or behaviors among large groups of people? Survey research designs are a critical tool in the arsenal of social scientists, marketers, and policy makers. But not all surveys are created equal; they come in different formats with varying purposes. Today, let’s demystify two primary types of survey research designs: experimental and descriptive. By understanding their unique characteristics and applications, you’ll gain insights into how conclusions about our world are drawn from carefully collected data.

What is survey research design?

Before diving into the specific types, let’s clarify what we mean by survey research design. It’s a framework that guides the collection, analysis, and interpretation of data gathered through questionnaires or interviews. This design determines how a survey is conducted, the target population, the sampling method, and how results are analyzed to ensure that the information collected is relevant, reliable, and can support or refute a research hypothesis.

Experimental survey research design

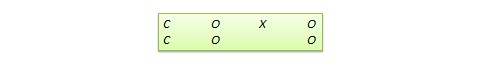

In experimental survey research designs, the researcher manipulates one or more variables to observe their effect on another variable. This method is often used to establish cause-and-effect relationships. Here’s what defines an experimental design:

- Controlled manipulation of variables: The researcher introduces changes to the independent variable(s) to see the effects on the dependent variable(s).

- Random assignment: Participants are randomly assigned to different groups (e.g., control and experimental) so that, on average, the groups are comparable before the manipulation.

- Comparison of groups: By comparing data from different groups, researchers can infer the impact of the manipulated variable.
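The two ideas above, random assignment and comparison of groups, can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed procedure: the function names `randomly_assign` and `difference_in_means`, the even two-group split, and the participant IDs are all hypothetical choices made for the example.

```python
import random
from statistics import mean

def randomly_assign(participants, seed=None):
    """Shuffle a copy of the participant list and split it into two groups.
    The first half becomes the control group, the rest the treatment group."""
    rng = random.Random(seed)  # a seeded generator makes the allocation reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}

def difference_in_means(outcomes, groups):
    """Mean outcome of the treatment group minus mean outcome of the control group."""
    treated = [outcomes[p] for p in groups["treatment"]]
    control = [outcomes[p] for p in groups["control"]]
    return mean(treated) - mean(control)

# Hypothetical participant IDs; outcomes would be measured after the manipulation.
participants = [f"P{i:02d}" for i in range(1, 11)]
groups = randomly_assign(participants, seed=42)
print(groups["control"], groups["treatment"])
```

Fixing the seed makes the allocation reproducible and auditable; passing `seed=None` gives a fresh random allocation each time.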

Types of experimental designs

Within experimental designs, there are several subtypes, including true experiments, quasi-experiments, and pre-experimental designs. True experiments have strict control over variables and use random assignment, while quasi-experiments lack random assignment. Pre-experimental designs are the least rigorous, often lacking both a control group and randomization.
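One way to make these distinctions concrete is a small helper that labels a study from three yes/no features. The `StudyDesign` fields, the `classify_design` function, and the labels are assumptions that simply encode the definitions in this section; real designs involve more nuance, such as how tightly other variables are controlled.

```python
from dataclasses import dataclass

@dataclass
class StudyDesign:
    manipulates_variable: bool  # does the researcher change the independent variable?
    has_comparison_group: bool  # is there a control or comparison group?
    random_assignment: bool     # are participants randomly assigned to groups?

def classify_design(design: StudyDesign) -> str:
    """Label a study using the distinctions described in this section (a simplification)."""
    if not design.manipulates_variable:
        return "non-experimental (e.g., descriptive)"
    if design.has_comparison_group and design.random_assignment:
        return "true experiment"
    if design.has_comparison_group:
        return "quasi-experiment"  # groups are compared but not randomly formed
    return "pre-experimental"      # e.g., a single group measured before and after

print(classify_design(StudyDesign(True, True, False)))
```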

Descriptive survey research design

Unlike experimental designs, descriptive survey research designs do not involve manipulation or control of variables. Instead, they aim to describe characteristics of a population or phenomenon as they naturally occur. Attributes of descriptive design include:

- No manipulation: The researcher observes without intervening in the natural setting.

- Focus on current status: Descriptive surveys often aim to provide a snapshot of the current state of affairs.

- Wide range of data: They can collect a vast array of data, from opinions to demographic information.

Applications of descriptive survey designs

Descriptive surveys are widely used in various fields for different purposes. They can track consumer preferences, measure employee satisfaction, or gauge public opinion on social issues. The key is that they seek to paint a picture of what exists or what people believe at a given moment in time.
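At its simplest, a descriptive survey summarizes how responses are distributed at one point in time, without any manipulation. The sketch below assumes hypothetical answers to a single opinion question and reports each answer's share of the total; `response_distribution` is an illustrative name, not an established API.

```python
from collections import Counter

def response_distribution(responses):
    """Return each answer's share of all responses, as a proportion between 0 and 1."""
    if not responses:
        return {}
    counts = Counter(responses)
    total = len(responses)
    return {answer: count / total for answer, count in counts.items()}

# Hypothetical answers to a single question such as "Do you support the proposed policy?"
answers = ["support", "oppose", "support", "undecided", "support",
           "oppose", "support", "support", "undecided", "oppose"]
for answer, share in sorted(response_distribution(answers).items(), key=lambda kv: -kv[1]):
    print(f"{answer:<10} {share:.0%}")
```

With these invented answers, the output lists support at 50%, oppose at 30%, and undecided at 20%: a snapshot of opinion, not an explanation of why people hold it.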

Choosing the right survey design

Deciding whether to use an experimental or descriptive survey design hinges on the research question. If the goal is to determine causality, experimental designs are the go-to choice. However, if the objective is to describe or explore a phenomenon without altering the environment, descriptive designs are more appropriate. Considerations include:

- Research objectives: What are you trying to find out? Do you want to test a hypothesis or simply describe a situation?

- Resources available: Experimental designs often require more resources in terms of time, money, and expertise.

- Ethical considerations: Some questions cannot be tested experimentally because the required manipulation would be unethical, such as deliberately exposing participants to a potential harm.

Challenges and limitations

Both experimental and descriptive survey research designs come with their own challenges and limitations. In experimental designs, truly random assignment can be difficult to achieve, and external variables may still influence outcomes. Descriptive designs may suffer from self-reporting biases and cannot establish causal relationships.
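Because random assignment can still leave groups unbalanced by chance, researchers often compare background characteristics across groups after assignment. A minimal sketch, assuming hypothetical age data, computes the standardized mean difference (the difference in means divided by the pooled standard deviation). The function names are illustrative, and the 0.1 cutoff in `is_balanced` is a commonly used rule of thumb rather than a universal standard.

```python
from math import sqrt
from statistics import mean, variance

def standardized_mean_difference(treatment, control):
    """Difference in group means divided by the pooled standard deviation,
    where pooled SD = sqrt((variance_treatment + variance_control) / 2)."""
    pooled_sd = sqrt((variance(treatment) + variance(control)) / 2)
    if pooled_sd == 0:
        raise ValueError("both groups have zero variance; the SMD is undefined")
    return (mean(treatment) - mean(control)) / pooled_sd

def is_balanced(smd, threshold=0.1):
    """Rule-of-thumb check (assumed threshold): |SMD| below 0.1 is often read as good balance."""
    return abs(smd) < threshold

# Hypothetical ages recorded after random assignment.
treatment_ages = [34, 29, 41, 38, 30, 45, 27, 36]
control_ages = [33, 35, 28, 40, 31, 44, 29, 37]
smd = standardized_mean_difference(treatment_ages, control_ages)
print(f"SMD for age: {smd:.3f}, balanced: {is_balanced(smd)}")
```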

Best practices in survey research design

To maximize the effectiveness of a survey research design, whether experimental or descriptive, researchers should adhere to best practices:

- Clear and concise questionnaire: Questions should be easily understandable and focused on the research objectives.

- Representative sampling: The sample should accurately reflect the population being studied.

- Rigorous analysis: Statistical methods should be appropriate for the data and research questions.

- Transparency: Researchers should be transparent about methodologies, challenges, and potential biases in their work.
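Representative sampling usually starts from a probability sample. The sketch below shows simple random sampling under stated assumptions: a hypothetical sampling frame of 1,000 people in which 40% are aged 65 or older, and illustrative helper names (`simple_random_sample`, `share`). It draws units without replacement and compares the sample share with the frame share; in any single draw the two will differ somewhat because of sampling error.

```python
import random

def simple_random_sample(frame, n, seed=None):
    """Draw n units without replacement so every unit in the frame has the same chance of selection."""
    if not 0 < n <= len(frame):
        raise ValueError("n must be between 1 and the size of the sampling frame")
    return random.Random(seed).sample(frame, n)

def share(units, has_trait):
    """Proportion of units for which has_trait(unit) is True."""
    return sum(1 for unit in units if has_trait(unit)) / len(units)

def is_older(person):
    """Hypothetical trait used to check how well the sample mirrors the frame."""
    return person["age_65_plus"]

# Hypothetical sampling frame of 1,000 people; 40% are aged 65 or older.
frame = [{"id": i, "age_65_plus": i % 5 < 2} for i in range(1000)]
sample = simple_random_sample(frame, 200, seed=7)
print(f"frame: {share(frame, is_older):.1%}, sample: {share(sample, is_older):.1%}")
```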

Survey research designs are powerful tools that, when used correctly, provide valuable insights into human behavior and preferences. Whether experimental or descriptive, each design has its rightful place depending on the research question. By considering goals, resources, and ethical implications, researchers can select the design that best fits their needs, leading to more accurate and impactful findings.

What do you think? How might understanding these research designs change the way you view poll results or studies shared in the media? Can you think of a situation where one design may be more beneficial than the other?


Research Methodologies & Methods

1 Logic of Inquiry in Social Research

- A Science of Society

- Comte’s Ideas on the Nature of Sociology

- Observation in Social Sciences

- Logical Understanding of Social Reality

2 Empirical Approach

- Empirical Approach

- Rules of Data Collection

- Cultural Relativism

- Problems Encountered in Data Collection

- Difference between Common Sense and Science

- What is Ethical?

- What is Normal?

- Understanding the Data Collected

- Managing Diversities in Social Research

- Problematising the Object of Study

- Conclusion: Return to Good Old Empirical Approach

3 Diverse Logic of Theory Building

- Concern with Theory in Sociology

- Concepts: Basic Elements of Theories

- Why Do We Need Theory?

- Hypothesis Description and Experimentation

- Controlled Experiment

- Designing an Experiment

- How to Test a Hypothesis

- Sensitivity to Alternative Explanations

- Rival Hypothesis Construction

- The Use and Scope of Social Science Theory

- Theory Building and Researcher’s Values

4 Theoretical Analysis

- Premises of Evolutionary and Functional Theories

- Critique of Evolutionary and Functional Theories

- Turning away from Functionalism

- What after Functionalism

- Post-modernism

- Trends other than Post-modernism

5 Issues of Epistemology

- Some Major Concerns of Epistemology

- Rationalism

- Phenomenology: Bracketing Experience

6 Philosophy of Social Science

- Foundations of Science

- Science, Modernity, and Sociology

- Rethinking Science

- Crisis in Foundation

7 Positivism and its Critique

- Heroic Science and Origin of Positivism

- Early Positivism

- Consolidation of Positivism

- Critiques of Positivism

8 Hermeneutics

- Methodological Disputes in the Social Sciences

- Tracing the History of Hermeneutics

- Hermeneutics and Sociology

- Philosophical Hermeneutics

- The Hermeneutics of Suspicion

- Phenomenology and Hermeneutics

9 Comparative Method

- Relationship with Common Sense; Interrogating Ideological Location

- The Historical Context

- Elements of the Comparative Approach

10 Feminist Approach

- Features of the Feminist Method

- Feminist Methods adopt the Reflexive Stance

- Feminist Discourse in India

11 Participatory Method

- Delineation of Key Features

12 Types of Research

- Basic and Applied Research

- Descriptive and Analytical Research

- Empirical and Exploratory Research

- Quantitative and Qualitative Research

- Explanatory (Causal) and Longitudinal Research

- Experimental and Evaluative Research

- Participatory Action Research

13 Methods of Research

- Evolutionary Method

- Comparative Method

- Historical Method

- Personal Documents

14 Elements of Research Design

- Structuring the Research Process

15 Sampling Methods and Estimation of Sample Size

- Classification of Sampling Methods

- Sample Size

16 Measures of Central Tendency

- Relationship between Mean, Mode, and Median

- Choosing a Measure of Central Tendency

17 Measures of Dispersion and Variability

- The Variance

- The Standard Deviation

- Coefficient of Variation

18 Statistical Inference: Tests of Hypotheses

- Statistical Inference

- Tests of Significance

19 Correlation and Regression

- Correlation

- Method of Calculating Correlation of Ungrouped Data

- Method of Calculating Correlation of Grouped Data

20 Survey Method

- Rationale of Survey Research Method

- History of Survey Research

- Defining Survey Research

- Sampling and Survey Techniques

- Operationalising Survey Research Tools

- Advantages and Weaknesses of Survey Research

21 Survey Design

- Preliminary Considerations

- Stages / Phases in Survey Research

- Formulation of Research Question

- Survey Research Designs

- Sampling Design

22 Survey Instrumentation

- Techniques/Instruments for Data Collection

- Questionnaire Construction

- Issues in Designing a Survey Instrument

23 Survey Execution and Data Analysis

- Problems and Issues in Executing Survey Research

- Data Analysis

- Ethical Issues in Survey Research

24 Field Research – I

- History of Field Research

- Ethnography

- Theme Selection

- Gaining Entry in the Field

- Key Informants

- Participant Observation

25 Field Research – II

- Interviews: Types and Process

- Feminist and Postmodernist Perspectives on Interviewing

- Narrative Analysis

- Interpretation

- Case Study and its Types

- Life Histories

- Oral History

- PRA and RRA Techniques

26 Reliability, Validity and Triangulation

- Concepts of Reliability and Validity

- Three Types of “Reliability”

- Working Towards Reliability

- Procedural Validity

- Field Research as a Validity Check

- Method Appropriate Criteria

- Triangulation

- Ethical Considerations in Qualitative Research

27 Qualitative Data Formatting and Processing

- Qualitative Data Processing and Analysis

- Description

- Classification

- Making Connections

- Theoretical Coding

- Qualitative Content Analysis

28 Writing up Qualitative Data

- Problems of Writing Up

- Grasp and Then Render

- “Writing Down” and “Writing Up”

- Write Early

- Writing Styles

- First Draft

29 Using Internet and Word Processor

- What Is the Internet and How Does It Work?

- Internet Services

- Searching on the Web: Search Engines

- Accessing and Using Online Information

- Online Journals and Texts

- Statistical Reference Sites

- Data Sources

- Uses of E-mail Services in Research

30 Using SPSS for Data Analysis

- Introduction

- Starting and Exiting SPSS

- Creating a Data File

- Univariate Analysis

- Bivariate Analysis

31 Using SPSS in Report Writing

- Why Use SPSS

- Working with SPSS Output

- Copying SPSS Output to MS Word Document

32 Tabulation and Graphic Presentation: Case Studies

- Structure for Presentation of Research Findings

- Data Presentation: Editing, Coding, and Transcribing

- Case Studies

- Qualitative Data Analysis and Presentation through Software

- Types of ICT used for Research

33 Guidelines to Research Project Assignment

- Overview of Research Methodologies and Methods (MSO 002)

- Research Project Objectives

- Preparation for Research Project

- Stages of the Research Project

- Supervision During the Research Project

- Submission of Research Project

- Methodology for Evaluating Research Project


Statistics By Jim

Making statistics intuitive

Experimental Design: Definition and Types

By Jim Frost

What is Experimental Design?

An experimental design is a detailed plan for collecting and using data to identify causal relationships. Through careful planning, the design of experiments allows your data collection efforts to have a reasonable chance of detecting effects and testing hypotheses that answer your research questions.

An experiment is a data collection procedure that occurs in controlled conditions to identify and understand causal relationships between variables. Researchers can choose from many possible designs; the final choice depends on their research question, resources, goals, and constraints. In some fields, experimental design is called the design of experiments (DOE); the two terms are synonymous.

Ultimately, the design of experiments helps ensure that your procedures and data will evaluate your research question effectively. Without an experimental design, you might waste your efforts in a process that, for many potential reasons, can’t answer your research question. In short, it helps you trust your results.
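Giving an experiment "a reasonable chance of detecting effects" is usually made concrete with a power analysis before data collection. The sketch below uses one standard approximation, not a method taken from this article: the normal approximation for comparing two group means with equal group sizes, where d is the standardized effect size (difference in means divided by the standard deviation). The function name and the defaults (α = 0.05, power = 0.80) are assumptions for illustration.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided comparison of two means:
    n = 2 * (z_{1 - alpha/2} + z_{power})**2 / d**2, rounded up."""
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_power = z.inv_cdf(power)          # quantile that yields the desired power
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size_d ** 2)

for d in (0.2, 0.5, 0.8):  # conventional small, medium, and large effects
    print(d, sample_size_per_group(d))
```

Because the normal approximation ignores the t distribution, exact t-test calculations give a slightly larger answer (for d = 0.5, about 64 per group instead of 63). The main lesson holds either way: smaller effects require much larger samples.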


Design of Experiments: Goals & Settings

Experiments occur in many settings, including psychology, the social sciences, medicine, physics, engineering, and the industrial and service sectors. Typically, experimental goals are to discover a previously unknown effect, confirm a known effect, or test a hypothesis.

Effects represent causal relationships between variables. For example, in a medical experiment, does the new medicine cause an improvement in health outcomes? If so, the medicine has a causal effect on the outcome.
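Once a randomized experiment like the medicine example has been run, one way to judge whether an observed difference could plausibly be due to chance alone is a randomization (permutation) test. This is a minimal sketch under stated assumptions, not the article's method: `permutation_test` is a hypothetical helper, the health scores are invented, and the test statistic is the difference in group means.

```python
import random
from statistics import mean

def permutation_test(treatment, control, n_permutations=10_000, seed=0):
    """Two-sided randomization test for a difference in group means.
    Returns the observed difference and an estimated p-value."""
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random, as if the medicine had no effect
        diff = mean(pooled[:n_t]) - mean(pooled[n_t:])
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for floating-point ties
            extreme += 1
    # The add-one correction keeps the estimated p-value above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Invented health-outcome scores for patients randomly assigned to each group.
medicine = [72, 75, 78, 71, 80, 77, 74, 79]
placebo = [70, 68, 73, 69, 72, 71, 67, 70]
effect, p = permutation_test(medicine, placebo)
print(f"estimated effect: {effect:.2f} points, p = {p:.4f}")
```

The logic mirrors random assignment itself: if the medicine had no effect, the group labels would be arbitrary, so reshuffling them shows how large a difference chance alone tends to produce.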

An experimental design’s focus depends on the subject area and can include the following goals: