Copyright © 2024 AudiologyOnline - All Rights Reserved

- Back to Basics: Speech Audiometry

Janet R. Schoepflin, PhD

- Hearing Evaluation - Adults

Editor's Note: This is a transcript of an AudiologyOnline live seminar. Please download supplemental course materials . Speech is the auditory stimulus through which we communicate. The recognition of speech is therefore of great interest to all of us in the fields of speech and hearing. Speech audiometry developed originally out of the work conducted at Bell Labs in the 1920s and 1930s where they were looking into the efficiency of communication systems, and really gained momentum post World War II as returning veterans presented with hearing loss. The methods and materials for testing speech intelligibility were of interest then, and are still of interest today. It is due to this ongoing interest as seen in the questions that students ask during classes, by questions new audiologists raise as they begin their practice, and by the comments and questions we see on various audiology listservs about the most efficient and effective ways to test speech in the clinical setting, that AudiologyOnline proposed this webinar as part of their Back to Basics series. I am delighted to participate. I am presenting a review of the array of speech tests that we use in clinical evaluation with a summary of some of the old and new research that has come about to support the recommended practices. The topics that I will address today are an overview of speech threshold testing, suprathreshold speech recognition testing, the most comfortable listening level testing, uncomfortable listening level, and a brief mention of some new directions that speech testing is taking. In the context of testing speech, I will assume that the environment in which you are testing meets the ANSI permissible noise criteria and that the audiometer transducers that are being used to perform speech testing are all calibrated to the ANSI standards for speech. I will not be talking about those standards, but it's of course important to keep those in mind.

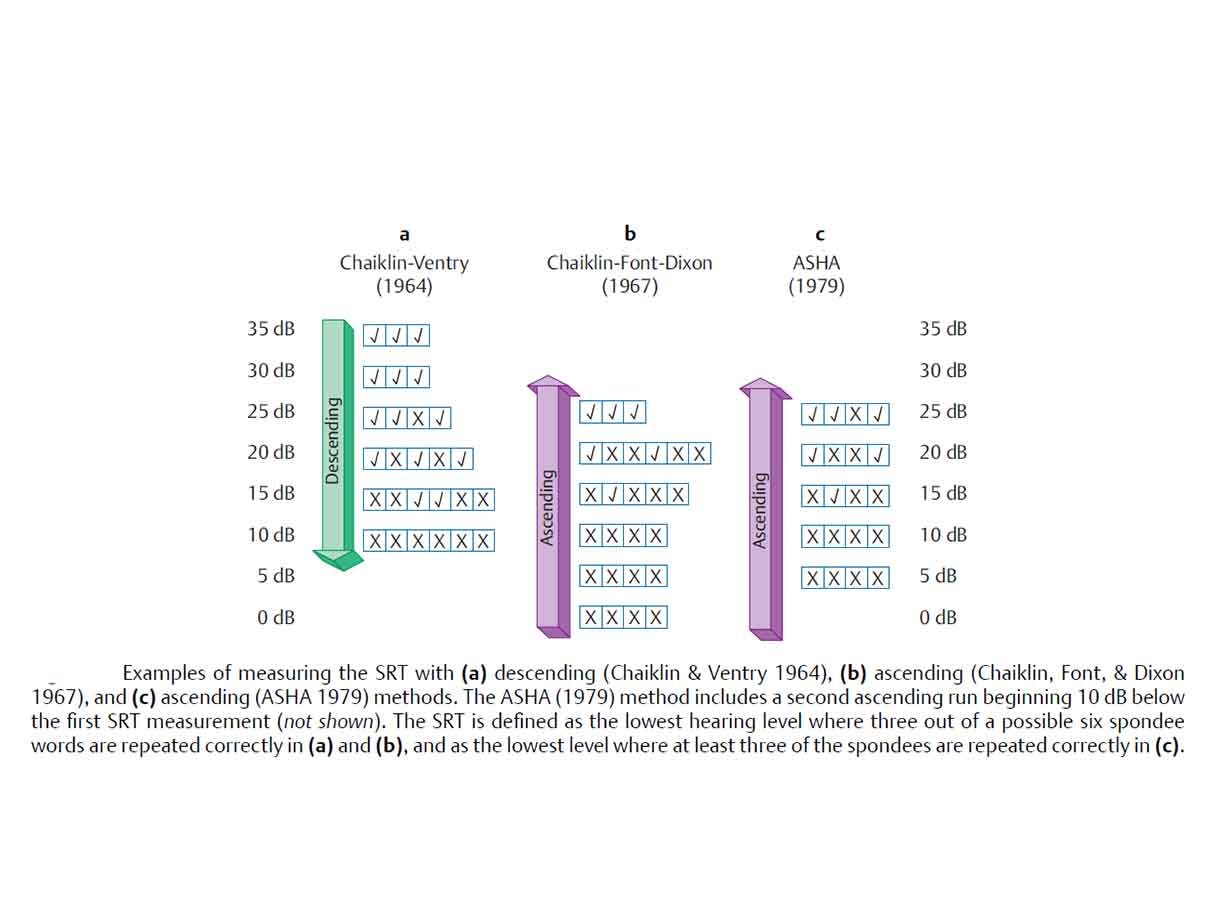

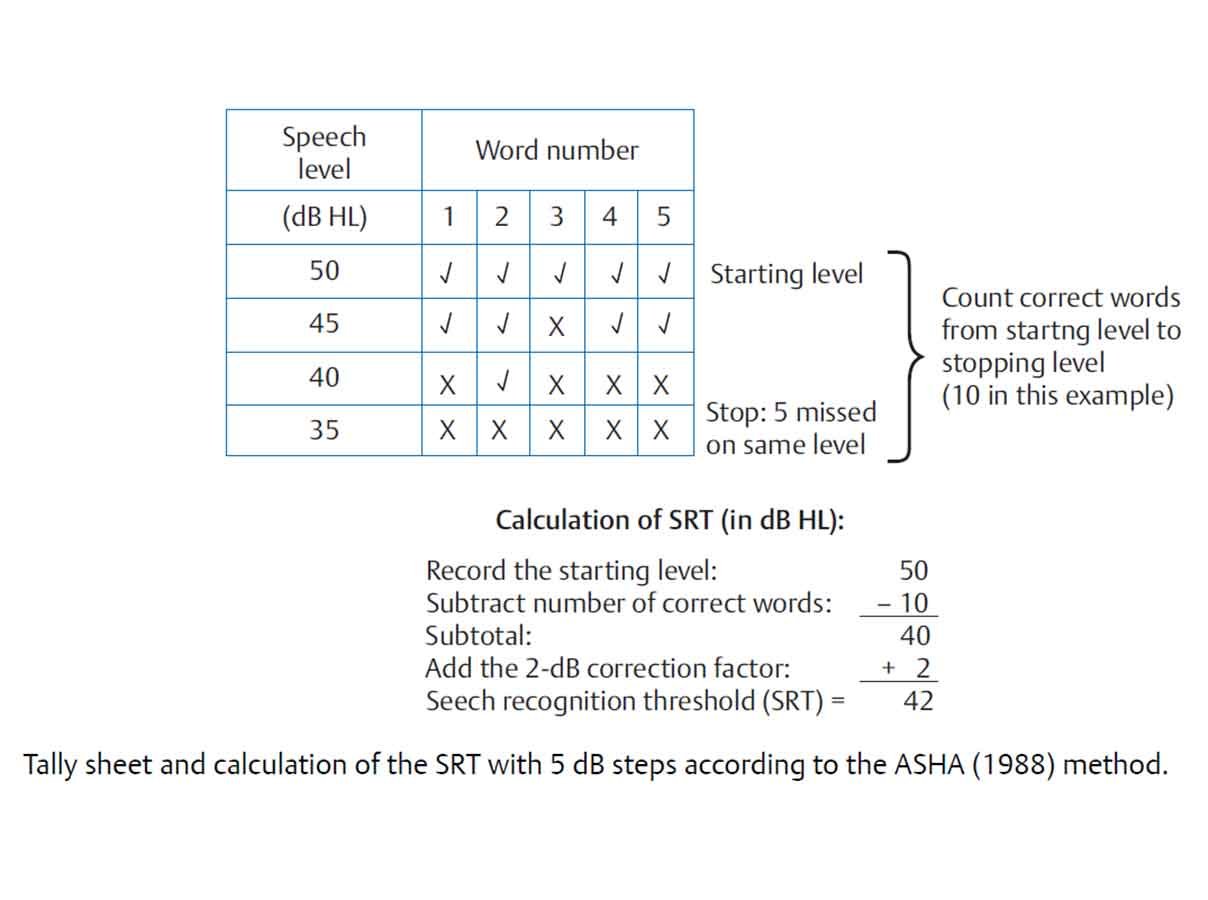

Speech Threshold testing involves several considerations. They include the purposes of the test or the reasons for performing the test, the materials that should be used in testing, and the method or procedure for testing. Purposes of Speech Threshold Testing A number of purposes have been given for speech threshold testing. In the past, speech thresholds were used as a means to cross-check the validity of pure tone thresholds. This purpose lacks some validity because we have other physiologic and electrophysiologic procedures like OAEs and imittance test results to help us in that cross-check. However, the speech threshold measure is a test of hearing. It is not entirely invalid to be performed as a cross-check for pure tone hearing. I think sometimes we are anxious to get rid of things because we feel we have a better handle from other tests, but in this case, it may not be the wisest thing to toss out. Also in past years, speech thresholds were used to determine the level for suprathreshold speech recognition testing. That also lacks validity, because the level at which suprathreshold testing is conducted depends on the reason you are doing the test itself. It is necessary to test speech thresholds if you are going to bill 92557. Aside from that, the current purpose for speech threshold testing is in the evaluation of pediatric and difficult to test patients. Clinical practice surveys tell us that the majority of clinicians do test speech thresholds for all their patients whether it is for billing purposes or not. It is always important that testing is done in the recommended, standardized manner. The accepted measures for speech thresholds are the Speech Recognition Threshold (SRT) and the Speech Detection Threshold (SDT). Those terms are used because they specify the material or stimulus, i.e. speech, as well as the task that the listener is required to do, which is recognition or identification in the case of the SRT, and detection or noticing of presence versus absence of the stimulus in the case of SDT. The terms also specify the criterion for performance which is threshold or generally 50%. The SDT is most commonly performed on those individuals who have been unable to complete an SRT, such as very young children. Because recognition is not required in the speech detection task, it is expected that the SDT will be about 5 to 10 dB better than the SRT, which requires recognition of the material. Materials for Speech Threshold Testing The materials that are used in speech threshold testing are spondees, which are familiar two-syllable words that have a fairly steep psychometric function. Cold running speech or connected discourse is an alternative for speech detection testing since recognition is not required in that task. Whatever material is used, it should be noted on the audiogram. It is important to make notations on the audiogram about the protocols and the materials we are using, although in common practice many of us are lax in doing so. Methods for Speech Threshold Testing The methods consideration in speech threshold testing is how we are going to do the test. This would include whether we use monitored live voice or recorded materials, and whether we familiarize the patient with the materials and the technique that we use to elicit threshold. Monitored live voice and recorded speech can both be used in SRT testing. However, recorded presentation is recommended because recorded materials standardize the test procedure. With live voice presentation, the monitoring of each syllable of each spondee, so that it peaks at 0 on the VU meter can be fairly difficult. The consistency of the presentation is lost then. Using recorded materials is recommended, but it is less important in speech threshold testing than it is in suprathreshold speech testing. As I mentioned with the materials that are used, it is important to note on the audiogram what method of presentation has been used. As far as familiarization goes, we have known for about 50 years, since Tillman and Jerger (1959) identified familiarity as a factor in speech thresholds, that familiarization of the patient with the test words should be included as part of every test. Several clinical practice surveys suggest that familiarization is not often done with the patients. This is not a good practice because familiarization does influence thresholds and should be part of the procedure. The last consideration under methods is regarding the technique that is going to be used. Several different techniques have been proposed for the determination of SRT. Clinical practice surveys suggest the most commonly used method is a bracketing procedure. The typical down 10 dB, up 5 dB is often used with two to four words presented at each level, and the threshold then is defined as the lowest level at which 50% or at least 50% of the words are correctly repeated. This is not the procedure that is recommended by ASHA (1988). The ASHA-recommended procedure is a descending technique where two spondees are presented at each decrement from the starting level. There are other modifications that have been proposed, but they are not widely used.

Suprathreshold speech testing involves considerations as well. They are similar to those that we mentioned for threshold tests, but they are more complicated than the threshold considerations. They include the purposes of the testing, the materials that should be used in testing, whether the test material should be delivered via monitored live voice or recorded materials, the level or levels at which the testing should be conducted, whether a full list, half list, or an abbreviated word list should be used, and whether or not the test should be given in quiet or noise. Purposes of Suprathreshold Testing There are several reasons to conduct suprathreshold tests. They include estimating the communicative ability of the individual at a normal conversational level; determining whether or not a more thorough diagnostic assessment is going to be conducted; hearing aid considerations, and analysis of the error patterns in speech recognition. When the purpose of testing is to estimate communicative ability at a normal conversational level, then the test should be given at a level around 50 to 60 dBHL since that is representative of a normal conversational level at a communicating distance of about 1 meter. While monosyllabic words in quiet do not give a complete picture of communicative ability in daily situations, it is a procedure that people like to use to give some broad sense of overall communicative ability. If the purpose of the testing is for diagnostic assessment, then a psychometric or performance-intensity function should be obtained. If the reason for the testing is for hearing aid considerations, then the test is often given using words or sentences and either in quiet or in a background of noise. Another purpose is the analysis of error patterns in speech recognition and in that situation, a test other than some open set monosyllabic word test would be appropriate. Materials for Suprathreshold Testing The choice of materials for testing depends on the purpose of the test and on the age and abilities of the patients. The issues in materials include the set and the test items themselves.

Closed set vs. Open set. The first consideration is whether a closed set or an open set is appropriate. Closed set tests limit the number of response alternatives to a fairly small set, usually between 4 and 10 depending on the procedure. The number of alternatives influences the guess rate. This is a consideration as well. The Word Intelligibility by Picture Identification or the WIPI test is a commonly used closed set test for children as it requires only the picture pointing response and it has a receptive language vocabulary that is as low as about 5 years. It is very useful in pediatric evaluations as is another closed set test, the Northwestern University Children's Perception of Speech test (NU-CHIPS).

In contrast, the open set protocol provides an unlimited number of stimulus alternatives. Therefore, open set tests are more difficult. The clinical practice surveys available suggest for routine audiometric testing that monosyllabic word lists are the most widely used materials in suprathreshold speech recognition testing for routine evaluations, but sentences in noise are gaining popularity for hearing aid purposes.

CID W-22 vs. NU-6. The most common materials for speech recognition testing are the monosyllabic words, the Central Institute of the Deaf W-22 and the Northwestern University-6 word list. These are the most common open set materials and there has been some discussion among audiologists concerning the differences between those. From a historical perspective, the CID W-22 list came from the original Harvard PAL-PB50 words and the W-22s are a group of the more familiar of those. They were developed into four 50-word lists. They are still commonly used by audiologists today. The NU-6 lists were developed later and instead of looking for phonetic balance, they considered a more phonemic balance. The articulation function for both of those using recorded materials is about the same, 4% per dB. The NU-6 tests are considered somewhat more difficult than the W-22s. Clinical surveys show that both materials are used by practicing audiologists, with usage of the NU-6 lists beginning to surpass usage of W-22s.

Nonsense materials. There are other materials that are available for suprathreshold speech testing. There are other monosyllabic word lists like the Gardner high frequency word list (Gardner, 1971) that could be useful for special applications or special populations. There are also nonsense syllabic tasks which were used in early research in communication. An advantage of the nonsense syllables is that the effects of word familiarity and lexical constraints are reduced as compared to using actual words as test materials. A few that are available are the City University of New York Nonsense Syllable test, the Nonsense Syllable test, and others.

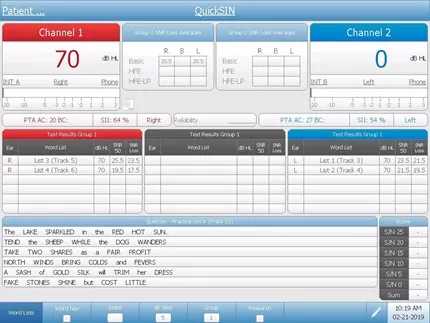

Sentence materials. Sentence materials are gaining popularity, particularly in hearing aid applications. This is because speech that contains contextual cues and is presented in a noise background is expected to have better predictive validity than words in quiet. The two sentence procedures that are popular are the Hearing In Noise Test (HINT) (Nilsson, Soli,& Sullivan, 1994) and the QuickSIN (Killion, Niquette, Gudmundsen, Revit & Banerjee, 2004). Other sentence tests that are available that have particular applications are the Synthetic Sentence Identification test (SSI), the Speech Perception and Noise test (SPIN), and the Connected Speech test.

Monitored Live Voice vs. Recorded. As with speech threshold testing, the use of recorded materials for suprathreshold speech testing standardizes the test administration. The recorded version of the test is actually the test in my opinion. This goes back to a study in 1969 where the findings said the test is not just the written word list, but rather it is a recorded version of those words.

Inter-speaker and intra-speaker variability makes using recorded materials the method of choice in almost all cases for suprathreshold testing. Monitored live voice (MLV) is not recommended. In years gone by, recorded materials were difficult to manipulate, but the ease and flexibility that is afforded us by CDs and digital recordings makes recorded materials the only way to go for testing suprathreshold speech recognition. Another issue to consider is the use of the carrier phrase. Since the carrier phrase is included on recordings and recorded materials are the recommended procedure, that issue is settled. However, I do know that monitored live voice is necessary in certain situations and if monitored live voice is used in testing, then the carrier phrase should precede the test word. In monitored live voice, the carrier phrase is intended to allow the test word to have its own natural inflection and its own natural power. The VU meter should peak at 0 for the carrier phrase and the test word then is delivered at its own natural or normal level for that word in the phrase.

Levels. The level at which testing is done is another consideration. The psychometric or performance-intensity function plots speech performance in percent correct on the Y-axis, as a function of the level of the speech signal on the X-axis. This is important because testing at only one level, which is fairly common, gives us insufficient information about the patient's optimal performance or what we commonly call the PB-max. It also does not allow us to know anything about any possible deterioration in performance if the level is increased. As a reminder, normal hearers show a function that reaches its maximum around 25 to 40 dB SL (re: SRT) and that is the reason why suprathreshold testing is often conducted at that level. For normals, the performance remains at that level, 100% or so, as the level increases. People with conductive hearing loss also show a similar function. Individuals with sensorineural hearing loss, however, show a performance function that reaches its maximum at generally less than 100%. They can either show performance that stays at that level as intensity increases, or they can show a curve that reaches its maximum and then decreases in performance as intensity increases. This is known as roll-over. A single level is not the best way to go as we cannot anticipate which patients may have rollover during testing, unless we test at a level higher than where the maximum score was obtained. I recognize that there are often time constraints in everyday practice, but two levels are recommended so that the performance-intensity function can be observed for an individual patient at least in an abbreviated way.

Recently, Guthrie and Mackersie (2009) published a paper that compared several different presentation levels to ascertain which level would result in maximum word recognition in individuals who had different hearing loss configurations. They looked at a number of presentation levels ranging from 10 dB above the SRT to a level at the UCL (uncomfortable listening level) -5 dB. Their results indicated that individuals with mild to moderate losses and those with more steeply sloping losses reached their best scores at a UCL -5 dB. That was also true for those patients who had moderately-severe to severe losses. The best phoneme recognition scores for their populations were achieved at a level of UCL -5 dB. As a reminder about speech recognition testing, masking is frequently needed because the test is being presented at a level above threshold, in many cases well above the threshold. Masking will always be needed for suprathreshold testing when the presentation level in the test ear is 40 dB or greater above the best bone conduction threshold in the non-test ear if supra-aural phones are used.

Full lists vs. half-lists. Another consideration is whether a full list or a half-list should be administered. Original lists were composed of 50 words and those 50 words were created for phonetic balance and for simplicity in scoring. It made it easy for the test to be scored if 50 words were administered and each word was worth 2%. Because 50-word lists take a long time, people often use half-lists or even shorter lists for the purpose of suprathreshold speech recognition testing. Let's look into this practice a little further.

An early study was done by Thornton and Raffin (1978) using the Binomial Distribution Model. They investigated the critical differences between one score and a retest score that would be necessary for those scores to be considered statistically significant. Their findings showed that with an increasing set size, variability decreased. It would seem that more items are better. More recently Hurley and Sells (2003) conducted a study that looked at developing a test methodology that would identify those patients requiring a full 50 item suprathreshold test and allow abbreviated testing of patients who do not need a full 50 item list. They used Auditec recordings and developed 10-word and 25-word screening tests. They found that the four lists of NU-6 10-word and the 25-word screening tests were able to differentiate listeners who had impaired word recognition who needed a full 50-word list from those with unimpaired word recognition ability who only needed the 10-word or 25-word list. If abbreviated testing is important, then it would seem that this would be the protocol to follow. These screening lists are available in a recorded version and their findings were based on a recorded version. Once again, it is important to use recorded materials whether you are going to use a full list or use an abbreviated list.

Quiet vs. Noise. Another consideration in suprathreshold speech recognition testing is whether to test in quiet or in noise. The effects of sensorineural hearing loss beyond the threshold loss, such as impaired frequency resolution or impaired temporal resolution, makes speech recognition performance in quiet a poor predictor for how those individuals will perform in noise. Speech recognition in noise is being promoted by a number of experts because adding noise improves the sensitivity of the test and the validity of the test. Giving the test at several levels will provide for a better separation between people who have hearing loss and those who have normal hearing. We know that individuals with hearing loss have a lot more difficulty with speech recognition in noise than those with normal hearing, and that those with sensorineural hearing loss often require a much greater signal-to-noise ratio (SNR), 10 to 15 better, than normal hearers.

Monosyllabic words in noise have not been widely used in clinical evaluation. However there are several word lists that are available. One of them is the Words in Noise test or WIN test which presents NU-6 words in a multi-talker babble. The words are presented at several different SNRs with the babble remaining at a constant level. One of the advantages of using these kinds of tests is that they are adaptive. They can be administered in a shorter period of time and they do not run into the same problems that we see with ceiling effects and floor effects. As I mentioned earlier, sentence tests in noise have become increasingly popular in hearing aid applications. Testing speech in noise is one way to look at amplification pre and post fitting. The Hearing in Noise Test and QuickSin, have gained popularity in those applications. The HINT was developed by Nilsson and colleagues in 1994 and later modified. It is scored as the dB to noise ratio that is necessary to get a 50% correct performance on the sentences. The sentences are the BKB (Bamford-Kowal-Bench) sentences. They are presented in sets of 10 and the listener listens and repeats the entire sentence correctly in order to get credit. In the HINT, the speech spectrum noise stays constant and the signal level is varied to obtain that 50% point. The QuickSin is a test that was developed by Killion and colleagues (2004) and uses the IEEE sentences. It has six sentences per list with five key words that are the scoring words in each sentence. All of them are presented in a multi-talker babble. The sentences get presented one at a time in 5 dB decrements from a high positive SNR down to 0 dB SNR. Again the test is scored as the 50% point in terms of dB signal-to-noise ratio. The guide proposed by Killion on the SNR is if an individual has somewhere around a 0 to 3 dB SNR it would be considered normal, 3 to 7 would be a mild SNR loss, 7 to15 dB would be a moderate SNR loss, and greater than 15 dB would be a severe SNR loss.

Scoring. Scoring is another issue in suprathreshold speech recognition testing. It is generally done on a whole word basis. However phoneme scoring is another option. If phoneme scoring is used, it is a way of increasing the set size and you have more items to score without adding to the time of the test. If whole word scoring is used, the words have to be exactly correct. In this situation, being close does not count. The word must be absolutely correct in order to be judged as being correct. Over time, different scoring categorizations have been proposed, although the percentages that are attributed to those categories vary among the different proposals.

The traditional categorizations include excellent, good, fair, poor, and very poor. These categories are defined as:

- Excellent or within normal limits = 90 - 100% on whole word scoring

- Good or slight difficulty = 78 - 88%

- Fair to moderate difficulty = 66 - 76%

- Poor or great difficulty = 54 - 64 %

- Very poor is < 52%

A very useful test routinely administered to those who are being considered for hearing aids is the level at which a listener finds listening most comfortable. The materials that are used for this are usually cold running speech or connected discourse. The listener is asked to rate the level at which listening is found to be most comfortable. Several trials are usually completed because most comfortable listening is typically a range, not a specific level or a single value. People sometimes want sounds a little louder or a little softer, so the range is a more appropriate term for this than most comfortable level. However whatever is obtained, whether it is a most comfortable level or a most comfortable range, should be recorded on the audiogram. Again, the material used should also be noted on the audiogram. As I mentioned earlier the most comfortable level (MCL) is often not the level at which a listener achieves maximum intelligibility. Using MCL in order to determine where the suprathreshold speech recognition measure will be done is not a good reason to use this test. MCL is useful, but not for determining where maximum intelligibility will be. The study I mentioned earlier showed that maximum intelligibility was reached for most people with hearing loss at a UCL -5. MCL is useful however in determining ANL or acceptable noise level.

The uncomfortable listening level (UCL) is also conducted with cold running speech. The instructions for this test can certainly influence the outcome since uncomfortable or uncomfortably loud for some individuals may not really be their UCL, but rather a preference for listening at a softer level. It is important to define for the patient what you mean by uncomfortably loud. The utility of the UCL is in providing an estimate for the dynamic range for speech which is the difference between the UCL and the SRT. In normals, this range is usually 100 dB or more, but it is reduced in ears with sensorineural hearing loss often dramatically. By doing the UCL, you can get an estimate of the individual's dynamic range for speech.

Acceptable Noise Level (ANL) is the amount of background noise that a listener is willing to accept while listening to speech (Nabelek, Tucker, & Letowski, 1991). It is a test of noise tolerance and it has been shown to be related to the successful use of hearing aids and to potential benefit with hearing aids (Nabelek, Freyaldenhoven, Tampas, & Muenchen, 2006). It uses the MCL and a measure known as BNL or background noise level. To conduct the test, a recorded speech passage is presented to the listener in the sound field for the MCL. Again note the use of recorded materials. The noise is then introduced to the listener to a level that will be the highest level that that person is able to accept or "put up with" while they are listening to and following the story in the speech passage. The ANL then becomes the difference between the MCL and the BNL. Individuals that have very low scores on the ANL are considered successful hearing aid users or good candidates for hearing aids. Those that have very high scores are considered unsuccessful users or poor hearing aid candidates. Obviously there are number of other applications for speech in audiologic practice, not the least of which is in the assessment of auditory processing. Many seminars could be conducted on this topic alone. Another application or future direction for speech audiometry is to more realistically assess hearing aid performance in "real world" environments. This is an area where research is currently underway.

Question: Are there any more specific instructions for the UCL measurement? Answer: Instructions are very important. We need to make it clear to a patient exactly what we expect them to do. I personally do not like things loud. If I am asked to indicate what is uncomfortably loud, I am much below what is really my UCL. I think you have to be very direct in instructing your patients in that you are not looking for a little uncomfortable, but where they just do not want to hear it or cannot take it. Question: Can you sum up what the best methods are to test hearing aid performance? I assume this means with speech signals. Answer: I think the use of the HINT or the QuickSin would be the most useful on a behavioral test. We have other ways of looking at performance that are not behavioral. Question: What about dialects? In my area, some of the local dialects have clipped words during speech testing. I am not sure if I should count those as correct or incorrect. Answer: It all depends on your situation. If a patient's production is really reflective of the dialect of that region and they are saying the word as everyone else in that area would say it, then I would say they do have the word correct. If necessary, if you are really unclear, you can always ask the patient to spell the word or write it down. This extra time can be inconvenient, but that is the best way to be sure that they have correctly identified the word. Question: Is there a reference for the bracketing method? Answer: The bracketing method is based on the old modified Hughson-Westlake that many people use for pure tone threshold testing. It is very similar to that traditional down 10 dB, up 5 dB. I am sure there are more references, but the Hughson-Westlake is what bracketing is based on. Question: Once you get an SRT result, if you want to compare it to the thresholds to validate your pure tones, how do you compare it to the audiogram? Answer: If it is a flat hearing loss, then you can compare to the 3-frequency pure tone average (PTA). If there is a high frequency loss, where audibility at perhaps 2000 Hz is greatly reduced, then it is better to use just the average of 500Hz and 1000Hz as your comparison. If it is a steeply sloping loss, then you look for agreement with the best threshold, which would probably be the 500 Hz threshold. The reverse is also true for patients who have rising configurations. Compare the SRT to the best two frequencies of the PTA, if the loss has either a steep slope or a steep rise, or the best frequency in the PTA if it is a really precipitous change in configuration. Question: Where can I find speech lists in Russian or other languages? Answer: Auditec has some material available in languages other than English - it would be best to contact them directly. You can also view their catalog at www.auditec.com Carolyn Smaka: This raises a question I have. If an audiologist is not fluent in a particular language, such as Spanish, is it ok to obtain a word list or recording in that language and conduct speech testing? Janet Schoepflin: I do not think that is a good practice. If you are not fluent in a language, you do not know all the subtleties of that language and the allophonic variations. People want to get an estimation of suprathreshold speech recognition and this would be an attempt to do that. This goes along with dialect. Whether you are using a recording, or doing your best to say these words exactly as there are supposed to be said, and your patient is fluent in a language and they say the word back to you, since you are not familiar with all the variations in the language it is possible that you will score the word incorrectly. You may think it is correct when it is actually incorrect, or you may think it is incorrect when it is correct based on the dialect or variation of that language. Question: In school we were instructed to use the full 50-word list for any word discrimination testing at suprathreshold, but if we are pressed for time, a half word list would be okay. However, my professor warned us that we absolutely must go in order on the word list. Can you clarify this? Answer: I'm not sure why that might have been said. I was trained in the model to use the 50-word list. This was because the phonetic balance that was proposed for those words was based on the 50 words. If you only used 25 words, you were not getting the phonetic balance. I think the more current findings from Hurley and Sells show us that it is possible to use a shorter list developed specifically for this purpose. It should be the recorded version of those words. These lists are available through Auditec. Question: On the NU-6 list, the words 'tough' and 'puff' are next to each other. 'Tough' is often mistaken for 'puff' so then when we reads 'puff', the person looks confused. Is it okay to mix up the order on the word list? Answer: I think in that case it is perfectly fine to move that one word down. Question: When do you recommend conducting speech testing, before or after pure tone testing? Answer: I have always been a person who likes to interact with my patients. My own procedure is to do an SRT first. Frequently for an SRT I do use live voice. I do not use monitored live voice for suprathreshold testing. It gives me a time to interact with the patient. People feel comfortable with speech. It is a communicative act. Then I do pure tone testing. Personally I would not do suprathreshold until I finished pure tone testing. My sequence is often SRT, pure tone, and suprathreshold. If this is not a good protocol for you based on time, then I would conduct pure tone testing, SRT, and then suprathreshold. Question: Some of the spondee words are outdated such as inkwell and whitewash. Is it okay to substitute other words that we know are spondee words, but may not be on the list? Or if we familiarize people, does it matter? Answer: The words that are on the list were put there for their so-called familiarity, but also because they were somewhat homogeneous and equal in intelligibility. I think inkwell, drawbridge and whitewash are outdated. If you follow a protocol where you are using a representative sample of the words and you are familiarizing, I think it is perfectly fine to eliminate those words you do not want to use. You just do not want to end up only using five or six words as it will limit the test set. Question: At what age is it appropriate to expect a child to perform suprathreshold speech recognition testing? Answer: If the child has a receptive language age of around 4 or 5 years, even 3 years maybe, it is possible to use the NU-CHIPS as a measure. It really does depend on language more than anything else, and the fact that the child can sit still for a period of time to do the test. Question: Regarding masking, when you are going 40 dB above the bone conduction threshold in the non-test ear, what frequency are you looking at? Are you comparing speech presented at 40 above a pure tone average of the bone conduction threshold? Answer: The best bone conduction threshold in the non-test ear is what really should be used. Question: When seeing a patient in follow-up after an ENT prescribes a steroid therapy for hydrops, do you recommend using the same word list to compare their suprathreshold speech recognition? Answer: I think it is better to use a different list, personally. Word familiarity as we said can influence even threshold and it certainly can affect suprathreshold performance. I think it is best to use a different word list. Carolyn Smaka: Thanks to everyone for their questions. Dr. Schoepflin has provided her email address with the handout. If your question was not answered or if you have further thoughts after the presentation, please feel free to follow up directly with her via email. Janet Schoepflin: Thank you so much. It was my pleasure and I hope everyone found the presentation worthwhile.

American Speech, Language and Hearing Association. (1988). Determining Threshold Level for Speech [Guidelines]. Available from www.asha.org/policy Gardner, H.(1971). Application of a high-frequency consonant discrimination word list in hearing-aid evaluation. Journal of Speech and Hearing Disorders, 36 , 354-355. Guthrie, L. & Mackersie, C. (2009). A comparison of presentation levels to maximize word recognition scores. Journal of the American Academy of Audiology, 20 (6), 381-90. Hurley, R. & Sells, J. (2003). An abbreviated word recognition protocol based on item difficulty. Ear & Hearing, 24 (2), 111-118. Killion, M., Niquette, P., Gudmundsen, G., Revit, L., & Banerjee, S. (2004). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. Journal of the Acoustical Society of America, 116 (4 Pt 1), 2395-405. Nabelek, A., Freyaldenhoven, M., Tampas, J., Burchfield, S., & Muenchen, R. (2006). Acceptable noise level as a predictor of hearing aid use. Journal of the American Academy of Audiology, 17 , 626-639. Nabelek, A., Tucker, F., & Letowski, T. (1991). Toleration of background noises: Relationship with patterns of hearing aid use by elderly persons. Journal of Speech and Hearing Research, 34 , 679-685. Nilsson, M., Soli. S,, & Sullivan, J. (1994). Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. Journal of the Acoustical Society of America, 95 (2), 1085-99. Thornton, A.. & Raffin, M, (1978). Speech-discrimination scores modeled as a binomial variable. Journal of Speech and Hearing Research, 21 , 507-518. Tillman, T., & Jerger, J. (1959). Some factors affecting the spondee threshold in normal-hearing subjects. Journal of Speech and Hearing Research, 2 , 141-146.

Chair, Communication Sciences and Disorders, Adelphi University

Janet Schoepflin is an Associate Professor and Chair of the Department of Communication Sciences and Disorders at Adelphi University and a member of the faculty of the Long Island AuD Consortium. Her areas of research interest include speech perception in children and adults, particularly those with hearing loss, and the effects of noise on audition and speech recognition performance.

Related Courses

Using gsi for cochlear implant evaluations, course: #39682 level: introductory 1 hour, empowerment and behavioral insights in client decision making, presented in partnership with nal, course: #37124 level: intermediate 1 hour, cognition and audition: supporting evidence, screening options, and clinical research, course: #37381 level: introductory 1 hour, innovative audiologic care delivery, course: #38661 level: intermediate 4 hours, aurical hit applications part 1 - applications for hearing instrument fittings and beyond, course: #28678 level: intermediate 1 hour.

Our site uses cookies to improve your experience. By using our site, you agree to our Privacy Policy .

American Speech-Language-Hearing Association

- Certification

- Publications

- Continuing Education

- Practice Management

- Audiologists

- Speech-Language Pathologists

- Academic & Faculty

- Audiology & SLP Assistants

- Speech Testing

Types of Tests

- Auditory Brainstem Response (ABR)

- Otoacoustic Emissions (OAEs)

- Pure-Tone Testing

- Tests of the Middle Ear

About Speech Testing

An audiologist may do a number of tests to check your hearing. Speech testing will look at how well you listen to and repeat words. One test is the speech reception threshold, or SRT.

The SRT is for older children and adults who can talk. The results are compared to pure-tone test results to help identify hearing loss.

How Speech Testing Is Done

The audiologist will say words to you through headphones, and you will repeat the words. The audiologist will record the softest speech you can repeat. You may also need to repeat words that you hear at a louder level. This is done to test word recognition.

Speech testing may happen in a quiet or noisy place. People who have hearing loss often say that they have the most trouble hearing in noisy places. So, it is helpful to test how well you hear in noise.

Learn more about hearing testing .

To find an audiologist near you, visit ProFind .

In the Public Section

- Hearing & Balance

- Speech, Language & Swallowing

- About Health Insurance

- Adding Speech & Hearing Benefits

- Advocacy & Outreach

- Find a Professional

- Advertising Disclaimer

- Advertise with us

ASHA Corporate Partners

- Become A Corporate Partner

The American Speech-Language-Hearing Association (ASHA) is the national professional, scientific, and credentialing association for 234,000 members, certificate holders, and affiliates who are audiologists; speech-language pathologists; speech, language, and hearing scientists; audiology and speech-language pathology assistants; and students.

- All ASHA Websites

- Work at ASHA

- Marketing Solutions

Information For

Get involved.

- ASHA Community

- Become a Mentor

- Become a Volunteer

- Special Interest Groups (SIGs)

Connect With ASHA

American Speech-Language-Hearing Association 2200 Research Blvd., Rockville, MD 20850 Members: 800-498-2071 Non-Member: 800-638-8255

MORE WAYS TO CONNECT

Media Resources

- Press Queries

Site Help | A–Z Topic Index | Privacy Statement | Terms of Use © 1997- American Speech-Language-Hearing Association

- AudioStar Pro

- TympStar Pro

- Video Library

- 60 Minute Courses

- 30 Minute Courses

- Testing Guides

- All Content

- Get a Quote

- Select Language

Speech Audiometry

Audiometry guides, introduction.

Speech audiometry is an important component of a comprehensive hearing evaluation. There are several kinds of speech audiometry, but the most common uses are to 1) verify the pure tone thresholds 2) determine speech understanding and 3) determine most comfortable and uncomfortable listening levels. The results are used with the other tests to develop a diagnosis and treatment plan.

SDT = Speech Detection Threshold, SAT = Speech Awareness Threshold. These terms are interchangeable and they describe the lowest level at which a patient can hear the presence of speech 50% of the time. They specifically refer to the speech being AUDIBLE, not INTELLIGIBLE.

This test is performed by presenting spondee (two-syllable) words such as baseball, ice cream, hotdog and the patient is to respond when they hear the speech. This is often used with non-verbal patients such as infants or other difficult to test populations. The thresholds should correspond to the PTA and is used to verify the pure tone threshold testing.

How to Test:

Instruct the patient that he or she will be hearing words that have two parts, such as “mushroom” or “baseball.” The patient should repeat the words and if not sure, he or she should not be afraid to guess.

Using either live voice or recorded speech, present the spondee word lists testing the better ear first. Start 20 dB above the 1000 Hz pure tone threshold level. Present one word on the list and, if the response is correct, lower the level by 5 dB. Continue until the patient has difficulty with the words. When this occurs, present more words for each 5 dB step.

Speech Reception Threshold (SRT)

SRT, or speech reception threshold, is a fast way to help verify that the pure tone thresholds are valid. Common compound words - or spondee words - are presented at varying degrees of loudness until it is too soft for the patient to hear. SRT scores are compared to the pure tone average as part of the cross check principle. When these two values agree, the reliability of testing is improved.

Word Recognition

Instruct the patient that he or she is to repeat the words presented. Using either live voice or recorded speech, present the standardized PB word list of your choice. Present the words at a level comfortable to the patient; at least 30 dB and generally 35 to 50 dB above the 1000 Hz pure tone threshold. Using the scorer buttons on the front panel, press the “Correct” button each time the right response is given and the “Incorrect” button each time a wrong response is given.

The Discrimination Score is the percentage of words repeated correctly: Discrimination % at HL = 100 x Number of Correct Responses/Number of Trials.

WRS = Word Recognition Score, SRS = Speech Reception Score, Speech Discrimination Score. These terms are interchangeable and describe the patient’s capability to correctly repeat a list of phonetically balanced (PB) words at a comfortable level. The score is a percentage of correct responses and indicates the patient’s ability to understand speech.

Word Recognition Score (WRS)

WRS, or word recognition score, is a type of speech audiometry that is designed to measure speech understanding. Sometimes it is called word discrimination. The words used are common and phonetically balanced and typically presented at a level that is comfortable for the patient. The results of WRS can be used to help set realistic expectations and formulate a treatment plan.

Speech In Noise Test

Speech in noise testing is a critical component to a comprehensive hearing evaluation. When you test a patient's ability to understand speech in a "real world setting" like background noise, the results influence the diagnosis, the recommendations, and the patient's understanding of their own hearing loss.

Auditory Processing

Sometimes, a patient's brain has trouble making sense of auditory information. This is called an auditory processing disorder. It's not always clear that this lack of understanding is a hearing issue, so it requires a very specialized battery of speech tests to identify what kind of processing disorder exists and develop recommendations to improve the listening and understanding for the patient.

QuickSIN is a quick sentence in noise test that quantifies how a patient hears in noise. The patient repeats sentences that are embedded in different levels of restaurant noise and the result is an SNR loss - or Signal To Noise ratio loss. Taking a few additional minutes to measure the SNR loss of every patient seen in your clinic provides valuable insights on the overall status of the patient' s auditory system and allows you to counsel more effectively about communication in real-world situations. Using the Quick SIN to make important decisions about hearing loss treatment and rehabilitation is a key differentiator for clinicians who strive to provide patient-centered care.

BKB-SIN is a sentence in noise test that quantifies how patients hear in noise. The patient repeats sentences that are embedded in different levels of restaurant noise an the result is an SNR loss - or signal to noise ratio loss. This test is designed to evaluate patients of many ages and has normative corrections for children and adults. Taking a few additional minutes to measure the SNR loss of every patient seen in your clinic is a key differentiator for clinicians who strive to provide patient-centered care.

- Education >

- Testing Guides >

- Speech Audiometry >

GRASON-STADLER

- Our Approach

- Cookie Policy

- AMTAS Pro

Corporate Headquarters 10395 West 70th St. Eden Prairie, MN 55344

General Inquires +1 800-700-2282 +1 952-278-4402 [email protected] (US) [email protected] (International)

Technical Support Hardware: +1 877-722-4490 Software: +1 952-278-4456

DISTRIBUTOR LOGIN

- GSI Extranet

- Request an Account

- Forgot Password?

Get Connected

- Distributor Locator

© Copyright 2024

Speech Reception Thresholds – Procedure and Application

Speech Reception Thresholds – Procedure and Application: The speech reception threshold is the minimum hearing level for speech (ANSI, 2010) at which an individual can recognize 50% of the speech material. Speech reception thresholds are achieved in each ear. The term speech reception threshold is synonymous with speech recognition threshold.

Purpose of Speech Reception Thresholds:

- To validate the thresholds obtained through PTA

- To serve as a reference point for supra-threshold tests

- To ascertain the need for aural (re)-habilitation and monitor its progress

- To determine hearing sensitivity in difficult to test population

Materials for Speech Reception Thresholds:

- Spondaic words are the usual and recommended test material for the SRT test. They are 2-syllable words that have equal stress on both syllables.

- Word familiarization can be done prior to the start of test. This ensures that the client is familiar with the test vocabulary, and the client’s responses can be accurately interpreted by the clinician. Care to be taken to eliminate the visual cues during familiarization.

- Based on the circumstances or individuals (age, language facility, physical condition), the standard word list can be modified; however, that the use of speech stimuli with less homogeneity than spondaic words may compromise the reliability of this measure.

- The test material used should be noted in reporting of the results.

Response Format / Mode of Speech Reception Thresholds:

- The usual response mode for obtaining the SRT is repetition of the stimulus item.

- For many patients it is not possible to obtain verbal responses, necessitating the use of alternative response modes such as writing down the responses or closed set of choices such as picture pointing, signing, or visual scanning etc.

- If picture pointing mode is to be used, then the clinician should be cautious in choosing the number of response items (e.g., between 6 and 12 words usually is appropriate).

Procedure of Speech Reception Thresholds:

There are different methods to obtain SRT – ascending or descending method.

Generally, descending method (ASHA, 1988) is preferred and is described below.

- Obtain pure tone average (PTA) .

- Starting level for SRT: 30-40dB above anticipated SRT or 20 dBSL (with reference to PTA).

- Present one spondee at a time at this level. Decrease in 10 dB decrements, whenever the client response is correct. The 10 dB decrement continues until one word is missed/ until the client responds incorrectly.

- Now present a second spondaic word at the same level that the client responded incorrectly.

- If the second word is correctly identified by the client, the level is attenuated by 10 dB and two spondees are presented. This process is continued until two spondees are incorrectly identified at one level. This is the preliminary phase and the actual test phase begins, which can be performed in 5 dB step (Martin & Sides, 1985).

- If you get response for at least one spondee, reduce the intensity by 5 dB and present 03 spondees at that level.

- Continue the same procedure, until “no response” for all the 03 spondees obtained. Increase by 5 dB and continue it by presenting 03 spondees at each level till you get 2/3 spondees (>50%). That level can be considered as SRT.

Interpretation of Speech Reception Thresholds:

- The SRT shall be recorded in dB HL. The results should be recorded for each ear on the same form that contains the client’s results for pure tone audiometry. Additional space should be available to report other pertinent information that describes the test situation, such as alternative materials or response modes

- The SRT & PTA correlation are usually within 6 – 12dB.

- If there is disagreement, it could indicate one of the possibilities: misunderstanding of the instructions, functional hearing loss (non-organic) , instrumentation malfunction, pathology along CANS including VIII nerve, cognitive and language difficulties etc. For e.g. the SRT can be poorer than PTA in elderly and auditory processing disorders; whereas the SRT can be better than PTA in cases of malingerers/functional hearing loss .

Masking of Speech Reception Thresholds:

- Masking should be applied to the non-test ear, when the obtained SRT in one ear exceeds the apparent SRT or a pure tone BC threshold at 500, 1000, 2000 or 4000 Hz in the contralateral ear by 40 dB or more.

- The masker used should have a wide band spectrum (white, pink or speech noise) to effectively mask the speech stimuli.

- The level of effective masking used should be sufficient to eliminate reception by the non-test ear without causing over masking and should be recorded on the same form as that used to record audiometric results.

Application of Speech Reception Thresholds:

Speech recognition measures have been used in every phase of audiology, such as

- To describe the extent of hearing impairment in terms of how it affects speech understanding,

- In the differential diagnosis of auditory disorders,

- For determining the needs for amplification and other forms of audiologic rehabilitation,

- For making comparisons between various hearing aids and amplification approaches,

- For verifying the benefits of hearing aid use and other forms of audiologic rehabilitation, and

- For monitoring patient performance over time for either diagnostic or rehabilitative purposes.

References :

⇒ https://www.ishaindia.org.in/pdf/Guidelines-Standard-Audiometric-Screening-Procedures.PDF ⇒ https://www.asha.org/PRPSpecificTopic.aspx?folderid=8589935335§ion=Assessment#Speech_Audiometry ⇒ Essentials of Audiology – Stanley A. Gelfand, PhD (Book)

You are reading about:

Share this:.

- Click to share on Twitter (Opens in new window)

- Click to share on Facebook (Opens in new window)

- Click to share on LinkedIn (Opens in new window)

- Click to share on Telegram (Opens in new window)

- Click to share on WhatsApp (Opens in new window)

- Click to print (Opens in new window)

Written by BASLPCOURSE.COM

June 11, 2020, audiologic testing materials | audiology | baslp 2nd semester audiology notes | baslp 2nd semester audiology unit 4 notes | baslp 2nd semester notes | baslp notes | blog | hearing assessment tests, 0comment(s), follow us on.

For more updates follow us on Facebook, Twitter, Instagram, Youtube and Linkedin

You may also like….

Types of Earmolds for Hearing Aid – Skeleton | Custom

Jan 18, 2024

Types of Earmolds for Hearing Aid - Skeleton | Custom: The realm of hearing aids is a diverse landscape with a myriad...

Procedure for Selecting Earmold and Earshell

Jan 17, 2024

Procedure for Selecting Earmold and Earshell: When it comes to optimizing the acoustic performance of hearing aids,...

Ear Impression Techniques for Earmolds and Earshells

Jan 16, 2024

Ear Impression Techniques for Earmolds and Earshells: In the realm of audiology and hearing aid fabrication, the Ear...

If you have any Suggestion or Question Please Leave a Reply Cancel reply

- Audiometers

- Tympanometers

- Hearing Aid Fitting

- Research Systems

- Research Unit

- ACT Research

- Our History

- Distributors

- Sustainability

- Environmental Sustainability

Training in Speech Audiometry

- Why Perform Functional Hearing Tests?

- Performing aided speech testing to validate pediatric hearing devices

Speech Audiometry: An Introduction

Description, table of contents, what is speech audiometry, why perform speech audiometry.

- Contraindications and considerations

Audiometers that can perform speech audiometry

How to perform speech audiometry, results interpretation, calibration for speech audiometry.

Speech audiometry is an umbrella term used to describe a collection of audiometric tests using speech as the stimulus. You can perform speech audiometry by presenting speech to the subject in both quiet and in the presence of noise (e.g. speech babble or speech noise). The latter is speech-in-noise testing and is beyond the scope of this article.

Speech audiometry is a core test in the audiologist’s test battery because pure tone audiometry (the primary test of hearing sensitivity) is a limited predictor of a person’s ability to recognize speech. Improving an individual’s access to speech sounds is often the main motivation for fitting them with a hearing aid. Therefore, it is important to understand how a person with hearing loss recognizes or discriminates speech before fitting them with amplification, and speech audiometry provides a method of doing this.

A decrease in hearing sensitivity, as measured by pure tone audiometry, results in greater difficulty understanding speech. However, the literature also shows that two individuals of the same age with similar audiograms can have quite different speech recognition scores. Therefore, by performing speech audiometry, an audiologist can determine how well a person can access speech information.

Acquiring this information is key in the diagnostic process. For instance, it can assist in differentiating between different types of hearing loss. You can also use information from speech audiometry in the (re)habilitation process. For example, the results can guide you toward the appropriate amplification technology, such as directional microphones or remote microphone devices. Speech audiometry can also provide the audiologist with a prediction of how well a subject will hear with their new hearing aids. You can use this information to set realistic expectations and help with other aspects of the counseling process.

Below are some more examples of how you can use the results obtained from speech testing.

Identify need for further testing

Based on the results from speech recognition testing, it may be appropriate to perform further testing to get more information on the nature of the hearing loss. An example could be to perform a TEN test to detect a dead region or to perform the Audible Contrast Threshold (ACT™) test .

Inform amplification decisions

You can use the results from speech audiometry to determine whether binaural amplification is the most appropriate fitting approach or if you should consider alternatives such as CROS aids.

You can use the results obtained through speech audiometry to discuss and manage the amplification expectations of patients and their communication partners.

Unexpected asymmetric speech discrimination, significant roll-over , or particularly poor speech discrimination may warrant further investigation by a medical professional.

Non-organic hearing loss

You can use speech testing to cross-check the results from pure tone audiometry for suspected non‑organic hearing loss.

Contraindications and considerations when performing speech audiometry

Before speech audiometry, it is important that you perform pure tone audiometry and otoscopy. Results from these procedures can reveal contraindications to performing speech audiometry.

Otoscopic findings

Speech testing using headphones or inserts is generally contraindicated when the ear canal is occluded with:

- Foreign body

- Or infective otitis externa

In these situations, you can perform bone conduction speech testing or sound field testing.

Audiometric findings

Speech audiometry can be challenging to perform in subjects with severe-to-profound hearing losses as well as asymmetrical hearing losses where the level of stimulation and/or masking noise required is beyond the limits of the audiometer or the patient's uncomfortable loudness levels (ULLs).

Subject variables

Depending on the age or language ability of the subject, complex words may not be suitable. This is particularly true for young children and adults with learning disabilities or other complex presentations such as dementia and reduced cognitive function.

You should also perform speech audiometry in a language which is native to your patient. Speech recognition testing may not be suitable for patients with expressive speech difficulties. However, in these situations, speech detection testing should be possible.

Before we discuss speech audiometry in more detail, let’s briefly consider the instrumentation to deliver the speech stimuli. As speech audiometry plays a significant role in diagnostic audiometry, many audiometers include – or have the option to include – speech testing capabilities.

Table 1 outlines which audiometers from Interacoustics can perform speech audiometry.

| Clinical audiometer | |

| Diagnostic audiometer | |

| Diagnostic audiometer | |

| Equinox 2.0 | PC-based audiometer |

| Portable audiometer | |

| Hearing aid fitting system | |

| Hearing aid fitting system |

Table 1: Audiometers from Interacoustics that can perform speech audiometry.

Because speech audiometry uses speech as the stimulus and languages are different across the globe, the way in which speech audiometry is implemented varies depending on the country where the test is being performed. For the purposes of this article, we will start with addressing how to measure speech in quiet using the international organization of standards ISO 8252-3:2022 as the reference to describe the terminology and processes encompassing speech audiometry. We will describe two tests: speech detection testing and speech recognition testing.

Speech detection testing

In speech detection testing, you ask the subject to identify when they hear speech (not necessarily understand). It is the most basic form of speech testing because understanding is not required. However, it is not commonly performed. In this test, words are normally presented to the ear(s) through headphones (monaural or binaural testing) or through a loudspeaker (binaural testing).

Speech detection threshold (SDT)

Here, the tester will present speech at varying intensity levels and the patient identifies when they can detect speech. The goal is to identify the level at which the patient detects speech in 50% of the trials. This is the speech detection threshold. It is important not to confuse this with the speech discrimination threshold. The speech discrimination threshold looks at a person’s ability to recognize speech and we will explain it later in this article.

The speech detection threshold has been found to correlate well with the pure tone average, which is calculated from pure tone audiometry. Because of this, the main application of speech detection testing in the clinical setting is confirmation of the audiogram.

Speech recognition testing

In speech recognition testing, also known as speech discrimination testing, the subject must not only detect the speech, but also correctly recognize the word or words presented. This is the most popular form of speech testing and provides insights into how a person with hearing loss can discriminate speech in ideal conditions.

Across the globe, the methods of obtaining this information are different and this often leads to confusion about speech recognition testing. Despite there being differences in the way speech recognition testing is performed, there are some core calculations and test parameters which are used globally.

Speech recognition testing: Calculations

There are two main calculations in speech recognition testing.

1. Speech recognition threshold (SRT)

This is the level in dB HL at which the patient recognizes 50% of the test material correctly. This level will differ depending on the test material used. Some references describe the SRT as the speech discrimination threshold or SDT. This can be confusing because the acronym SDT belongs to the speech detection threshold. For this reason, we will not use the term discrimination but instead continue with the term speech recognition threshold.

2. Word recognition score (WRS)

In word recognition testing, you present a list of phonetically balanced words to the subject at a single intensity and ask them to repeat the words they hear. You score if the patient repeats these words correctly or incorrectly. This score, expressed as a percentage of correct words, is calculated by dividing the number of words correctly identified by the total number of words presented.

In some countries, multiple word recognition scores are recorded at various intensities and plotted on a graph. In other countries, a single word recognition score is performed using a level based on the SRT (usually presented 20 to 40 dB louder than the SRT).

Speech recognition testing: Parameters

Before completing a speech recognition test, there are several parameters to consider.

1. Test transducer

You can perform speech recognition testing using air conduction, bone conduction, and speakers in a sound-field setup.

2. Types of words

Speech recognition testing can be performed using a variety of different words or sentences. Some countries use monosyllabic words such as ‘boat’ or ‘cat’ whereas other countries prefer to use spondee words such as ‘baseball’ or ‘cowboy’. These words are then combined with other words to create a phonetically balanced list of words called a word list.

3. Number of words

The number of words in a word list can impact the score. If there are too few words in the list, then there is a risk that not enough data points are acquired to accurately calculate the word recognition score. However, too many words may lead to increased test times and patient fatigue. Word lists often consist of 10 to 25 words.

You can either score words as whole words or by the number of phonemes they contain.

An example of scoring can be illustrated by the word ‘boat’. When scoring using whole words, anything other than the word ‘boat’ would result in an incorrect score.

However, in phoneme scoring, the word ‘boat’ is broken down into its individual phonemes: /b/, /oa/, and /t/. Each phoneme is then scored as a point, meaning that the word boat has a maximum score of 3. An example could be that a patient mishears the word ‘boat’ and reports the word to be ‘float’. With phoneme scoring, 2 points would be awarded for this answer whereas in word scoring, the word float would be marked as incorrect.

5. Delivery of material

Modern audiometers have the functionality of storing word lists digitally onto the hardware of the device so that you can deliver a calibrated speech signal the same way each time you test a patient. This is different from the older methods of testing using live voice or a CD recording of the speech material. Using digitally stored and calibrated speech material in .wav files provides the most reliable and repeatable results as the delivery of the speech is not influenced by the tester.

6. Aided or unaided

You can perform speech recognition testing either aided or unaided. When performing aided measurements, the stimulus is usually played through a loudspeaker and the test is recorded binaurally.

Global examples of how speech recognition testing is performed and reported

Below are examples of how speech recognition testing is performed in the US and the UK. This will show how speech testing varies across the globe.

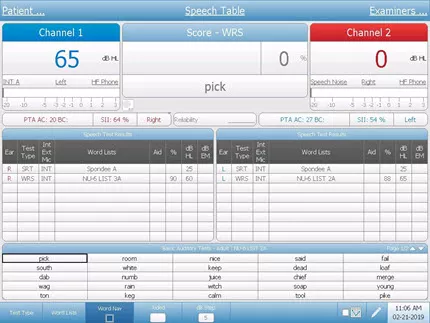

Speech recognition testing in the US: Speech tables

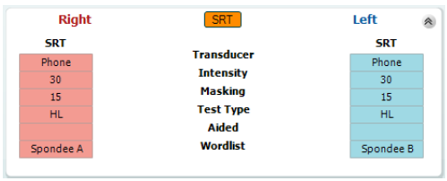

In the US, the SRT and WRS are usually performed as two separate tests using different word lists for each test. The results are displayed in tables called speech tables.

The SRT is the first speech test which is performed and typically uses spondee words (a word with two equally stressed syllables, such as ‘hotdog’) as the stimulus. During this test, you present spondee words to the patient at different intensities and a bracketing technique establishes the threshold at where the patient correctly identifies 50% of the words.

In the below video, we can see how an SRT is performed using spondee words.

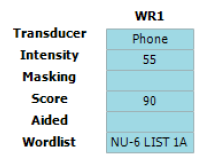

Below, you can see a table showing the results from an SRT test (Figure 1). Here, we can see that the SRT has been measured in each ear. The table shows the intensity at which the SRT was found as well as the transducer, word list, and the level at which masking noise was presented (if applicable). Here we see an unaided SRT of 30 dB HL in both the left and right ears.

Once you have established the intensity of the SRT in dB HL, you can use it to calculate the intensity to present the next list of words to measure the WRS. In WRS testing, it is common to start at an intensity of between 20 dB and 40 dB louder than the speech recognition threshold and to use a different word list from the SRT. The word lists most commonly used in the US for WRS are the NU-6 and CID-W22 word lists.

In word recognition score testing, you present an entire word list to the test subject at a single intensity and score each word based on whether the subject can correctly repeat it or not. The results are reported as a percentage.

The video below demonstrates how to perform the word recognition score.

Below is an image of a speech table showing the word recognition score in the left ear using the NU‑6 word list at an intensity of 55 dB HL (Figure 2). Here we can see that the patient in this example scored 90%, indicating good speech recognition at moderate intensities.

Speech recognition testing in the UK: Speech audiogram

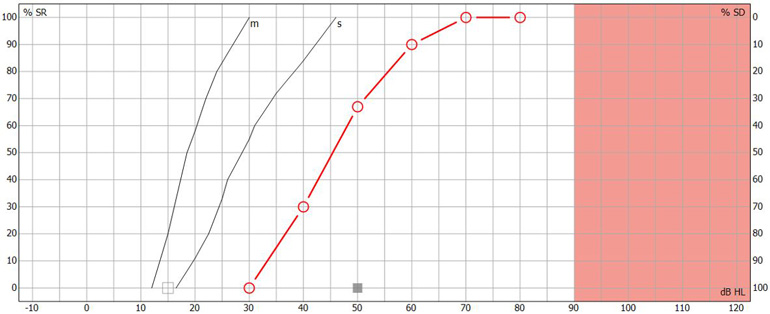

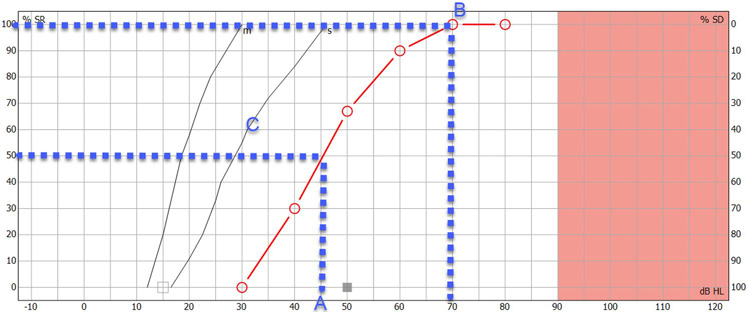

In the UK, speech recognition testing is performed with the goal of obtaining a speech audiogram. A speech audiogram is a graphical representation of how well an individual can discriminate speech across a variety of intensities (Figure 3).

In the UK, the most common method of recording a speech audiogram is to present several different word lists to the subject at varying intensities and calculate multiple word recognition scores. The AB (Arthur Boothroyd) word lists are the most used lists. The initial list is presented around 20 to 30 dB sensation level with subsequent lists performed at quieter intensities before finally increasing the sensation level to determine how well the patient can recognize words at louder intensities.

The speech audiogram is made up of plotting the WRS at each intensity on a graph displaying word recognition score in % as a function of intensity in dB HL. The following video explains how it is performed.

Below is an image of a completed speech audiogram (Figure 4). There are several components.

Point A on the graph shows the intensity in dB HL where the person identified 50% of the speech material correctly. This is the speech recognition threshold or SRT.

Point B on the graph shows the maximum speech recognition score which informs the clinician of the maximum score the subject obtained.

Point C on the graph shows the reference speech recognition curve; this is specific to the test material used (e.g., AB words) and method of presentation (e.g., headphones), and shows a curve which describes the median speech recognition scores at multiple intensities for a group of normal hearing individuals.

Having this displayed on a single graph can provide a quick and easy way to determine and analyze the ability of the person to hear speech and compare their results to a normative group. Lastly, you can use the speech audiogram to identify roll-over. Roll-over occurs when the speech recognition deteriorates at loud intensities and can be a sign of retro-cochlear hearing loss. We will discuss this further in the interpretation section.

Masking in speech recognition testing

Just like in audiometry, cross hearing can also occur in speech audiometry. Therefore, it is important to mask the non-test ear when testing monaurally. Masking is important because word recognition testing is usually performed at supra-threshold levels. Speech encompasses a wide spectrum of frequencies, so the use of narrowband noise as a masking stimulus is not appropriate, and you need to modify the masking noise for speech audiometry. In speech audiometry, speech noise is typically used to mask the non-test ear.

There are several approaches to calculating required masking noise level. An equation by Coles and Priede (1975) suggests one approach which applies to all types of hearing loss (sensorineural, conductive, and mixed):

- Masking level = D S plus max ABG NT minus 40 plus E M

It considers the following factors.

1. Dial setting

D S is the level of dial setting in dB HL for presentation of speech to the test ear.

2. Air-bone gap

Max ABG NT is the maximum air-bone gap between 250 to 4000 Hz in the non‑test ear.

3. Interaural attenuation

Interaural attenuation: The value of 40 comes from the minimum interaural attenuation for masking in audiometry using headphones (for insert earphones, this would be 55 dB).

4. Effective masking

E M is effective masking. Modern audiometers are calibrated in E M , so you don’t need to include this in the calculation. However, if you are using an old audiometer calibrated to an older calibration standard, then you should calculate the E M .

You can calculate it by measuring the difference in the speech dial setting presented to normal listeners at a level that yields a score of 95% in quiet and the noise dial setting presented to the same ear that yields a score less than 10%.

You can use the results from speech audiometry for many purposes. The below section describes these applications.

1. Cross-check against pure tone audiometry results

The cross-check principle in audiology states that no auditory test result should be accepted and used in the diagnosis of hearing loss until you confirm or cross-check it by one or more independent measures (Hall J. W., 3rd, 2016). Speech-in-quiet testing serves this purpose for the pure tone audiogram.

The following scores and their descriptions identify how well the speech detection threshold and the pure tone average correlate (Table 2).

| 6 dB or less | Good |

| 7 to 12 dB | Adequate |

| 13 dB or more | Poor |

Table 2: Correlation between speech detection threshold and pure tone average.

If there is a poor correlation between the speech detection threshold and the pure tone average, it warrants further investigation to determine the underlying cause or to identify if there was a technical error in the recordings of one of the tests.

2. Detect asymmetries between ears

Another core use of speech audiometry in quiet is to determine the symmetry between the two ears and whether it is appropriate to fit binaural amplification. Significant differences between ears can occur when there are two different etiologies causing hearing loss.

An example of this could be a patient with sensorineural hearing loss who then also contracts unilateral Meniere’s disease . In this example, it would be important to understand if there are significant differences in the word recognition scores between the two ears. If there are significant differences, then it may not be appropriate for you to fit binaural amplification, where other forms of amplification such as contralateral routing of sound (CROS) devices may be more appropriate.

3. Identify if further testing is required

The results from speech audiometry in quiet can identify whether further testing is required. This could be highlighted in several ways.

One example could be a severe difference in the SRT and the pure tone average. Another example could be significant asymmetries between the two ears. Lastly, very poor speech recognition scores in quiet might also be a red flag for further testing.

In these examples, the clinician might decide to perform a test to detect the presence of cochlear dead regions such as the TEN test or an ACT test to get more information.

4. Detect retro-cochlear hearing loss

In subjects with retro-cochlear causes of hearing loss, speech recognition can begin to deteriorate as sounds are made louder. This is called ‘roll-over’ and is calculated by the following equation:

- Roll-over index = (maximum score minus minimum score) divided by maximum score

If roll-over is detected at a certain value (the value is dependent on the word list chosen for testing but is commonly larger than 0.4), then it is considered to be a sign of retro-cochlear pathology. This could then have an influence on the fitting strategy for patients exhibiting these results.

It is important to note however that as the cross-check principle states, you should interpret any roll-over with caution and you should perform additional tests such as acoustic reflexes , the reflex decay test, or auditory brainstem response measurements to confirm the presence of a retro-cochlear lesion.

5. Predict success with amplification

The maximum speech recognition score is a useful measure which you can use to predict whether a person will benefit from hearing aids. More recent, and advanced tests such as the ACT test combined with the Acceptable Noise Level (ANL) test offer good alternatives to predicting hearing success with amplification.

Just like in pure tone audiometry, the stimuli which are presented during speech audiometry require annual calibration by a specialized technician ster. Checking of the transducers of the audiometer to determine if the speech stimulus contains any distortions or level abnormalities should also be performed daily. This process replicates the daily checks a clinicians would do for pure tone audiometry. If speech is being presented using a sound field setup, then you can use a sound level meter to check if the material is being presented at the correct level.

The next level of calibration depends on how the speech material is delivered to the audiometer. Speech material can be presented in many ways including live voice, CD, or installed WAV files on the audiometer. Speech being presented as live voice cannot be calibrated but instead requires the clinician to use the VU meter on the audiometer (which indicates the level of the signal being presented) to determine if they are speaking at the correct intensity. Speech material on a CD requires daily checks and is also performed using the VU meter on the audiometer. Here, a speech calibration tone track on the CD is used, and the VU meter is adjusted accordingly to the desired level as determined by the manufacturer of the speech material.

The most reliable way to deliver a speech stimulus is through a WAV file. By presenting through a WAV file, you can skip the daily tone-based calibration as this method allows you to calibrate the speech material as part of the annual calibration process. This saves the clinician time and ensures the stimulus is calibrated to the same standard as the pure tones in their audiometer. To calibrate the WAV file stimulus, the speech material is calibrated against a speech calibration tone. This is stored on the audiometer. Typically, a 1000 Hz speech tone is used for the calibration and the calibration process is the same as for a 1000 Hz pure tone calibration.

Lastly, if the speech is being presented through the sound field, a calibration professional should perform an annual sound field speaker calibration using an external free field microphone aimed directly at the speaker from the position of the patient’s head.

Coles, R. R., & Priede, V. M. (1975). Masking of the non-test ear in speech audiometry . The Journal of laryngology and otology , 89 (3), 217–226.

Graham, J. Baguley, D. (2009). Ballantyne's Deafness, 7th Edition. Whiley Blackwell.

Hall J. W., 3rd (2016). Crosscheck Principle in Pediatric Audiology Today: A 40-Year Perspective . Journal of audiology & otology , 20 (2), 59–67.

Katz, J. (2009). Handbook of Clinical Audiology. Wolters Kluwer.

Killion, M. C., Niquette, P. A., Gudmundsen, G. I., Revit, L. J., & Banerjee, S. (2004). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners . The Journal of the Acoustical Society of America , 116 (4), 2395–2405.

Stach, B.A (1998). Clinical Audiology: An Introduction, Cengage Learning.

Popular Academy Advancements

What is nhl-to-ehl correction, getting started: assr, what is the ce-chirp® family of stimuli, nhl-to-ehl correction for abr stimuli.

- Find a distributor

- Customer stories

- Made Magazine

- ABR equipment

- OAE devices

- Hearing aid fitting systems

- Balance testing equipment

Certificates

- Privacy policy

- Cookie Policy