Statistics By Jim

Making statistics intuitive

Statistical Hypothesis Testing Overview

By Jim Frost 59 Comments

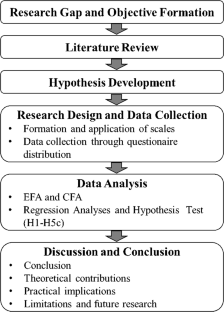

In this blog post, I explain why you need to use statistical hypothesis testing and help you navigate the essential terminology. Hypothesis testing is a crucial procedure to perform when you want to make inferences about a population using a random sample. These inferences include estimating population properties such as the mean, differences between means, proportions, and the relationships between variables.

This post provides an overview of statistical hypothesis testing. If you need to perform hypothesis tests, consider getting my book, Hypothesis Testing: An Intuitive Guide.

Why You Should Perform Statistical Hypothesis Testing

Hypothesis testing is a form of inferential statistics that allows us to draw conclusions about an entire population based on a representative sample. You gain tremendous benefits by working with a sample. In most cases, it is simply impossible to observe the entire population to understand its properties. The only alternative is to collect a random sample and then use statistics to analyze it.

While samples are much more practical and less expensive to work with, there are trade-offs. When you estimate the properties of a population from a sample, the sample statistics are unlikely to equal the actual population value exactly. For instance, your sample mean is unlikely to equal the population mean. The difference between the sample statistic and the population value is the sampling error.

Differences that researchers observe in samples might be due to sampling error rather than representing a true effect at the population level. If sampling error causes the observed difference, the next time someone performs the same experiment the results might be different. Hypothesis testing incorporates estimates of the sampling error to help you make the correct decision. Learn more about Sampling Error .

For example, if you are studying the proportion of defects produced by two manufacturing methods, any difference you observe between the two sample proportions might be due to sampling error rather than a true difference. If the difference does not exist at the population level, you won’t obtain the benefits that you expect based on the sample statistics. That can be a costly mistake!
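To make the defect example concrete, here is a minimal simulation sketch. It assumes two hypothetical manufacturing methods that share the same true defect rate (5%) and a sample of 200 items from each; those numbers, the seed, and the function name are illustrative assumptions, not values from a real study. Even though no true difference exists, most simulated experiments show a non-zero difference between the two sample proportions.

```python
import random

def sample_defect_rate(true_rate, n, rng):
    """Return the proportion of defective items in a random sample of size n."""
    defects = sum(1 for _ in range(n) if rng.random() < true_rate)
    return defects / n

rng = random.Random(42)   # fixed seed so the sketch is repeatable
TRUE_RATE = 0.05          # assumed: both methods have the SAME population defect rate
SAMPLE_SIZE = 200         # assumed sample size per method

# Repeat the same "experiment" many times and record the observed difference.
differences = []
for _ in range(1000):
    p_a = sample_defect_rate(TRUE_RATE, SAMPLE_SIZE, rng)
    p_b = sample_defect_rate(TRUE_RATE, SAMPLE_SIZE, rng)
    differences.append(p_a - p_b)

nonzero = sum(1 for d in differences if d != 0)
largest = max(abs(d) for d in differences)
print(f"{nonzero} of {len(differences)} experiments showed a non-zero difference")
print(f"largest absolute difference: {largest:.3f}")
```

Every difference printed here is pure sampling error, because the population difference is zero by construction.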

Let’s cover some basic hypothesis testing terms that you need to know.

Background information : Difference between Descriptive and Inferential Statistics and Populations, Parameters, and Samples in Inferential Statistics

Hypothesis Testing

Hypothesis testing is a statistical analysis that uses sample data to assess two mutually exclusive theories about the properties of a population. Statisticians call these theories the null hypothesis and the alternative hypothesis. A hypothesis test assesses your sample statistic and factors in an estimate of the sampling error to determine which hypothesis the data support.

When you can reject the null hypothesis, the results are statistically significant, and your data support the theory that an effect exists at the population level.

The effect is the difference between the population value and the null hypothesis value. The effect is also known as the population effect or the difference. For example, the mean difference between the health outcome for a treatment group and a control group is the effect.

Typically, you do not know the size of the actual effect. However, you can use a hypothesis test to help you determine whether an effect exists and to estimate its size. Hypothesis tests convert your sample effect into a test statistic, which they then evaluate for statistical significance. Learn more about Test Statistics.
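As a rough illustration of how a sample effect becomes a test statistic, the sketch below computes a Welch-style t statistic for two groups: the difference between the sample means divided by an estimate of its standard error. The function name and the data values are made up for illustration, and this is only one of many possible test statistics.

```python
import math
import statistics

def welch_t_statistic(sample_a, sample_b):
    """Difference in sample means divided by its estimated standard error."""
    mean_diff = statistics.mean(sample_a) - statistics.mean(sample_b)
    # statistics.variance is the sample variance (n - 1 in the denominator).
    standard_error = math.sqrt(
        statistics.variance(sample_a) / len(sample_a)
        + statistics.variance(sample_b) / len(sample_b)
    )
    return mean_diff / standard_error

# Hypothetical health outcomes for a treatment group and a control group.
treatment = [5.1, 4.9, 6.2, 5.8, 6.0, 5.5, 5.9, 6.1]
control = [4.8, 5.0, 5.2, 4.7, 5.1, 4.9, 5.3, 5.0]

t_stat = welch_t_statistic(treatment, control)
print(f"sample effect: {statistics.mean(treatment) - statistics.mean(control):.3f}")
print(f"t statistic:   {t_stat:.3f}")
```

The larger the sample effect is relative to the estimated sampling error, the further the test statistic falls from zero.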

An effect can be statistically significant, but that doesn’t necessarily indicate that it is important in a real-world, practical sense. For more information, read my post about Statistical vs. Practical Significance .

Null Hypothesis

The null hypothesis is one of two mutually exclusive theories about the properties of the population in hypothesis testing. Typically, the null hypothesis states that there is no effect (i.e., the effect size equals zero). The null is often signified by H0.

In all hypothesis testing, the researchers are testing an effect of some sort. The effect can be the effectiveness of a new vaccination, the durability of a new product, the proportion of defects in a manufacturing process, and so on. There is some benefit or difference that the researchers hope to identify.

However, it’s possible that there is no effect or no difference between the experimental groups. In statistics, we call this lack of an effect the null hypothesis. Therefore, if you can reject the null, you can favor the alternative hypothesis, which states that the effect exists (doesn’t equal zero) at the population level.

You can think of the null as the default theory: you reject it only when the evidence against it is sufficiently strong.

For example, in a 2-sample t-test, the null often states that the difference between the two means equals zero.

When you can reject the null hypothesis, your results are statistically significant. Learn more about Statistical Significance: Definition & Meaning .

Related post : Understanding the Null Hypothesis in More Detail

Alternative Hypothesis

The alternative hypothesis is the other theory about the properties of the population in hypothesis testing. Typically, the alternative hypothesis states that a population parameter does not equal the null hypothesis value. In other words, there is a non-zero effect. If your sample contains sufficient evidence, you can reject the null and favor the alternative hypothesis. The alternative is often identified with H1 or HA.

For example, in a 2-sample t-test, the alternative often states that the difference between the two means does not equal zero.

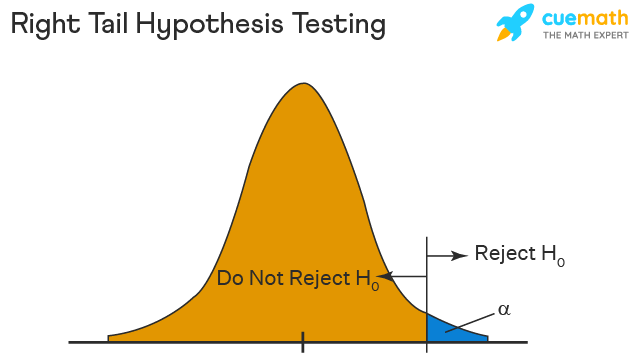

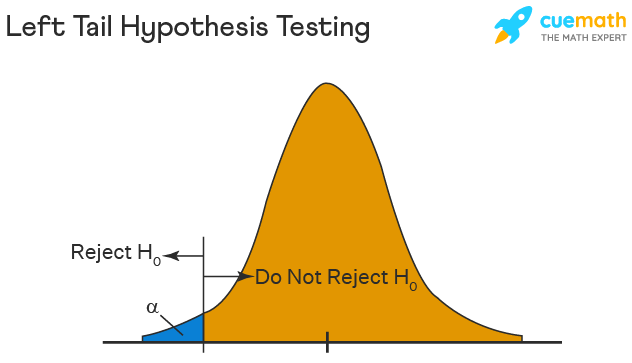

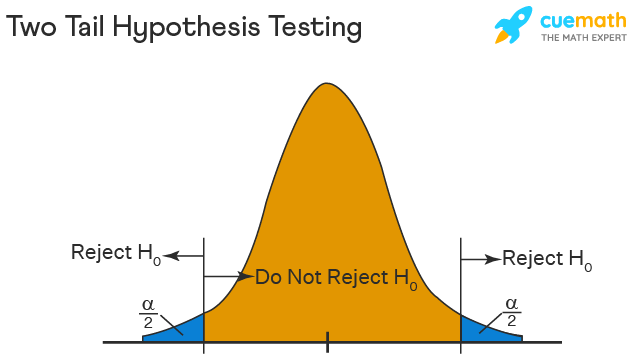

You can specify either a one- or two-tailed alternative hypothesis:

If you perform a two-tailed hypothesis test, the alternative states that the population parameter does not equal the null value. For example, when the alternative hypothesis is HA: μ ≠ 0, the test can detect differences both greater than and less than the null value.

A one-tailed alternative has more power to detect an effect, but it can test for a difference in only one direction. For example, HA: μ > 0 can only test for differences that are greater than zero.
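Here is a small sketch of why a one-tailed test has more power in its chosen direction. It assumes, purely for illustration, that the test statistic follows a standard normal distribution when the null is true (a z-test) and uses a made-up statistic of 1.8. The function name is hypothetical.

```python
from statistics import NormalDist

def p_values_from_z(z):
    """Return (upper-tail p-value, two-tailed p-value) for a z statistic."""
    std_normal = NormalDist()  # assumed null distribution: mean 0, sd 1
    upper_tail = 1 - std_normal.cdf(z)              # alternative: parameter > null value
    two_tailed = 2 * (1 - std_normal.cdf(abs(z)))   # alternative: parameter != null value
    return upper_tail, two_tailed

one_tailed, two_tailed = p_values_from_z(1.8)  # hypothetical test statistic
print(f"one-tailed p-value: {one_tailed:.4f}")
print(f"two-tailed p-value: {two_tailed:.4f}")
```

With this statistic, the one-tailed p-value falls below 0.05 while the two-tailed p-value does not. The trade-off is that the upper-tailed test cannot detect an effect in the negative direction: a statistic of -1.8 gives it a p-value close to 1.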

Related posts : Understanding T-tests and One-Tailed and Two-Tailed Hypothesis Tests Explained

P-values

P-values are the probability that you would obtain the effect observed in your sample, or larger, if the null hypothesis is correct. In simpler terms, p-values tell you how strongly your sample data contradict the null. Lower p-values represent stronger evidence against the null. You use p-values in conjunction with the significance level to determine whether your data favor the null or alternative hypothesis.

Related post : Interpreting P-values Correctly

Significance Level (Alpha)

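The significance level, also known as alpha (α), is the probability of rejecting the null hypothesis when the null is actually true. You choose it before you conduct the study. For instance, a significance level of 0.05 signifies a 5% risk of deciding that an effect exists when it does not exist.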

Use p-values and significance levels together to help you determine which hypothesis the data support. If the p-value is less than your significance level, you can reject the null and conclude that the effect is statistically significant. In other words, the evidence in your sample is strong enough to be able to reject the null hypothesis at the population level.
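The decision rule can be sketched with the manufacturing example from earlier. The code below runs a pooled two-proportion z-test, which relies on a normal approximation, and compares the two-tailed p-value to alpha. The defect counts, the sample sizes, and the function name are hypothetical and chosen only for illustration.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(defects_a, n_a, defects_b, n_b):
    """Pooled two-proportion z-test; returns (z statistic, two-tailed p-value)."""
    p_a = defects_a / n_a
    p_b = defects_b / n_b
    # Under the null of no difference, both samples estimate one common rate.
    pooled = (defects_a + defects_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

ALPHA = 0.05  # significance level chosen before looking at the data

# Hypothetical data: 30 defects in 500 items (method A), 15 in 500 (method B).
z, p_value = two_proportion_z_test(30, 500, 15, 500)
print(f"z = {z:.3f}, p-value = {p_value:.4f}")

if p_value < ALPHA:
    print("Reject the null: the difference is statistically significant.")
else:
    print("Fail to reject the null: the evidence is not strong enough.")
```

Failing to reject the null does not show that the two methods are identical. It only means the sample did not provide strong enough evidence of a difference.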

Related posts : Graphical Approach to Significance Levels and P-values and Conceptual Approach to Understanding Significance Levels

Types of Errors in Hypothesis Testing

Statistical hypothesis tests are not 100% accurate because they use a random sample to draw conclusions about entire populations. There are two types of errors related to drawing an incorrect conclusion.

- False positives: You reject a null that is true. Statisticians call this a Type I error. The Type I error rate equals your significance level or alpha (α).

- False negatives: You fail to reject a null that is false. Statisticians call this a Type II error. Generally, you do not know the Type II error rate. However, it is a larger risk when you have a small sample size, noisy data, or a small effect size. The Type II error rate is also known as beta (β).
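To see the link between alpha and the Type I error rate, here is a Monte Carlo sketch. It repeatedly draws samples from a population where the null is true by construction and counts how often a two-tailed z-test rejects it anyway. The known standard deviation, the sample size of 30, the number of trials, and the seed are all simplifying assumptions.

```python
import math
import random
from statistics import NormalDist

def z_test_p_value(sample, null_mean, sigma):
    """Two-tailed z-test p-value, assuming the population sd (sigma) is known."""
    sample_mean = sum(sample) / len(sample)
    z = (sample_mean - null_mean) / (sigma / math.sqrt(len(sample)))
    return 2 * (1 - NormalDist().cdf(abs(z)))

rng = random.Random(0)  # fixed seed so the sketch is repeatable
ALPHA = 0.05
N_TRIALS = 5000

false_positives = 0
for _ in range(N_TRIALS):
    # The population mean really is 0, so every rejection is a false positive.
    sample = [rng.gauss(0.0, 1.0) for _ in range(30)]
    if z_test_p_value(sample, null_mean=0.0, sigma=1.0) < ALPHA:
        false_positives += 1

type_i_rate = false_positives / N_TRIALS
print(f"simulated Type I error rate: {type_i_rate:.3f} (alpha = {ALPHA})")
```

The simulated rate should land close to alpha, though it will not match exactly because the simulation itself is subject to random error.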

Statistical power is the probability that a hypothesis test correctly infers that a sample effect exists in the population. In other words, the test correctly rejects a false null hypothesis. Consequently, power is inversely related to a Type II error. Power = 1 – β. Learn more about Power in Statistics .
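The relationship Power = 1 – β can be sketched for a simple case where power has a closed form: a one-sided z-test with a known standard deviation. Under those assumptions, power equals 1 − Φ(z₁₋α − effect·√n / σ), where Φ is the standard normal CDF. The effect size, standard deviation, and sample sizes below are hypothetical.

```python
import math
from statistics import NormalDist

def z_test_power(effect, sigma, n, alpha=0.05):
    """Power of an upper-tailed z-test with known sigma, for a given true effect."""
    std_normal = NormalDist()
    critical_z = std_normal.inv_cdf(1 - alpha)   # rejection threshold under the null
    shift = effect * math.sqrt(n) / sigma        # how far the true effect moves the statistic
    return 1 - std_normal.cdf(critical_z - shift)

for n in (10, 30, 100):
    power = z_test_power(effect=0.5, sigma=1.0, n=n)
    beta = 1 - power  # Type II error rate
    print(f"n = {n:3d}: power = {power:.3f}, beta = {beta:.3f}")
```

Power grows as the sample size increases, which is why a small sample carries a larger risk of a Type II error. When the true effect is zero, the same formula returns alpha.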

Related posts : Types of Errors in Hypothesis Testing and Estimating a Good Sample Size for Your Study Using Power Analysis

Which Type of Hypothesis Test is Right for You?

There are many different types of procedures you can use. The correct choice depends on your research goals and the data you collect. Do you need to understand the mean or the differences between means? Or, perhaps you need to assess proportions. You can even use hypothesis testing to determine whether the relationships between variables are statistically significant.

To choose the proper statistical procedure, you’ll need to assess your study objectives and collect the correct type of data . This background research is necessary before you begin a study.

Related Post : Hypothesis Tests for Continuous, Binary, and Count Data

Statistical tests are crucial when you want to use sample data to make conclusions about a population because these tests account for sampling error. Using significance levels and p-values to determine when to reject the null hypothesis improves the probability that you will draw the correct conclusion.

To see an alternative approach to these traditional hypothesis testing methods, learn about bootstrapping in statistics !

If you want to see examples of hypothesis testing in action, I recommend the following posts that I have written:

- How Effective Are Flu Shots? This example shows how you can use statistics to test proportions.

- Fatality Rates in Star Trek . This example shows how to use hypothesis testing with categorical data.

- Busting Myths About the Battle of the Sexes. A fun example based on a Mythbusters episode that assesses continuous data using several different tests.

- Are Yawns Contagious? Another fun example inspired by a Mythbusters episode.


Reader Interactions

January 14, 2024 at 8:43 am

Hello professor Jim, how are you doing! Pls. What are the properties of a population and their examples? Thanks for your time and understanding.

January 14, 2024 at 12:57 pm

Please read my post about Populations vs. Samples for more information and examples.

Also, please note there is a search bar in the upper-right margin of my website. Use that to search for topics.

July 5, 2023 at 7:05 am

Hello, I have a question as I read your post. You say in p-values section

“P-values are the probability that you would obtain the effect observed in your sample, or larger, if the null hypothesis is correct. In simpler terms, p-values tell you how strongly your sample data contradict the null. Lower p-values represent stronger evidence against the null.”

But according to your definition of effect, the null states that an effect does not exist, correct? So what I assume you want to say is that “P-values are the probability that you would obtain the effect observed in your sample, or larger, if the null hypothesis is **incorrect**.”

July 6, 2023 at 5:18 am

Hi Shrinivas,

The correct definition of p-value is that it is a probability that exists in the context of a true null hypothesis. So, the quotation is correct in stating “if the null hypothesis is correct.”

Essentially, the p-value tells you the likelihood of your observed results (or more extreme) if the null hypothesis is true. It gives you an idea of whether your results are surprising or unusual if there is no effect.

Hence, with sufficiently low p-values, you reject the null hypothesis because it’s telling you that your sample results were unlikely to have occurred if there was no effect in the population.

I hope that helps make it clearer. If not, let me know and I’ll attempt to clarify!

May 8, 2023 at 12:47 am

Thanks a lot Ny best regards

May 7, 2023 at 11:15 pm

Hi Jim Can you tell me something about size effect? Thanks

May 8, 2023 at 12:29 am

Here’s a post that I’ve written about Effect Sizes that will hopefully tell you what you need to know. Please read that. Then, if you have any more specific questions about effect sizes, please post them there. Thanks!

January 7, 2023 at 4:19 pm

Hi Jim, I have only read two pages so far but I am really amazed because in few paragraphs you made me clearly understand the concepts of months of courses I received in biostatistics! Thanks so much for this work you have done it helps a lot!

January 10, 2023 at 3:25 pm

Thanks so much!

June 17, 2021 at 1:45 pm

Can you help in the following question: Rocinante36 is priced at ₹7 lakh and has been designed to deliver a mileage of 22 km/litre and a top speed of 140 km/hr. Formulate the null and alternative hypotheses for mileage and top speed to check whether the new models are performing as per the desired design specifications.

April 19, 2021 at 1:51 pm

Its indeed great to read your work statistics.

I have a doubt regarding the one sample t-test. So as per your book on hypothesis testing with reference to page no 45, you have mentioned the difference between “the sample mean and the hypothesised mean is statistically significant”. So as per my understanding it should be quoted like “the difference between the population mean and the hypothesised mean is statistically significant”. The catch here is the hypothesised mean represents the sample mean.

Please help me understand this.

Regards Rajat

April 19, 2021 at 3:46 pm

Thanks for buying my book. I’m so glad it’s been helpful!

The test is performed on the sample but the results apply to the population. Hence, if the difference between the sample mean (observed in your study) and the hypothesized mean is statistically significant, that suggests that population does not equal the hypothesized mean.

For one sample tests, the hypothesized mean is not the sample mean. It is a mean that you want to use for the test value. It usually represents a value that is important to your research. In other words, it’s a value that you pick for some theoretical/practical reasons. You pick it because you want to determine whether the population mean is different from that particular value.

I hope that helps!

November 5, 2020 at 6:24 am

Jim, you are such a magnificent statistician/economist/econometrician/data scientist etc whatever profession. Your work inspires and simplifies the lives of so many researchers around the world. I truly admire you and your work. I will buy a copy of each book you have on statistics or econometrics. Keep doing the good work. Remain ever blessed

November 6, 2020 at 9:47 pm

Hi Renatus,

Thanks so much for your very kind comments. You made my day!! I’m so glad that my website has been helpful. And, thanks so much for supporting my books! 🙂

November 2, 2020 at 9:32 pm

Hi Jim, I hope you are aware of 2019 American Statistical Association’s official statement on Statistical Significance: https://www.tandfonline.com/doi/full/10.1080/00031305.2019.1583913 In case you do not bother reading the full article, may I quote you the core message here: “We conclude, based on our review of the articles in this special issue and the broader literature, that it is time to stop using the term “statistically significant” entirely. Nor should variants such as “significantly different,” “p < 0.05,” and “nonsignificant” survive, whether expressed in words, by asterisks in a table, or in some other way."

With best wishes,

November 3, 2020 at 2:09 am

I’m definitely aware of the debate surrounding how to use p-values most effectively. However, I need to correct you on one point. The link you provide is NOT a statement by the American Statistical Association. It is an editorial by several authors.

There is considerable debate over this issue. There are problems with p-values. However, as the authors state themselves, much of the problem is over people’s mindsets about how to use p-values and their incorrect interpretations about what statistical significance does and does not mean.

If you were to read my website more thoroughly, you’d be aware that I share many of their concerns and I address them in multiple posts. One of the authors’ key points is the need to be thoughtful and conduct thoughtful research and analysis. I emphasize this aspect in multiple posts on this topic. I’ll ask you to read the following three because they all address some of the authors’ concerns and suggestions. But you might run across others to read as well.

Five Tips for Using P-values to Avoid Being Misled How to Interpret P-values Correctly P-values and the Reproducibility of Experimental Results

September 24, 2020 at 11:52 pm

HI Jim, i just want you to know that you made explanation for Statistics so simple! I should say lesser and fewer words that reduce the complexity. All the best! 🙂

September 25, 2020 at 1:03 am

Thanks, Rene! Your kind words mean a lot to me! I’m so glad it has been helpful!

September 23, 2020 at 2:21 am

Honestly, I never understood stats during my entire M.Ed course and was another nightmare for me. But how easily you have explained each concept, I have understood stats way beyond my imagination. Thank you so much for helping ignorant research scholars like us. Looking forward to get hardcopy of your book. Kindly tell is it available through flipkart?

September 24, 2020 at 11:14 pm

I’m so happy to hear that my website has been helpful!

I checked on flipkart and it appears like my books are not available there. I’m never exactly sure where they’re available due to the vagaries of different distribution channels. They are available on Amazon in India.

Introduction to Statistics: An Intuitive Guide (Amazon IN) Hypothesis Testing: An Intuitive Guide (Amazon IN)

July 26, 2020 at 11:57 am

Dear Jim I am a teacher from India . I don’t have any background in statistics, and still I should tell that in a single read I can follow your explanations . I take my entire biostatistics class for botany graduates with your explanations. Thanks a lot. May I know how I can avail your books in India

July 28, 2020 at 12:31 am

Right now my books are only available as ebooks from my website. However, soon I’ll have some exciting news about other ways to obtain it. Stay tuned! I’ll announce it on my email list. If you’re not already on it, you can sign up using the form that is in the right margin of my website.

June 22, 2020 at 2:02 pm

Also can you please let me if this book covers topics like EDA and principal component analysis?

June 22, 2020 at 2:07 pm

This book doesn’t cover principal components analysis. Although, I wouldn’t really classify that as a hypothesis test. In the future, I might write a multivariate analysis book that would cover this and others. But, that’s well down the road.

My Introduction to Statistics covers EDA. That’s the largely graphical look at your data that you often do prior to hypothesis testing. The Introduction book perfectly leads right into the Hypothesis Testing book.

June 22, 2020 at 1:45 pm

Thanks for the detailed explanation. It does clear my doubts. I saw that your book related to hypothesis testing has the topics that I am studying currently. I am looking forward to purchasing it.

Regards, Take Care

June 19, 2020 at 1:03 pm

For this particular article I did not understand a couple of statements and it would great if you could help: 1)”If sample error causes the observed difference, the next time someone performs the same experiment the results might be different.” 2)”If the difference does not exist at the population level, you won’t obtain the benefits that you expect based on the sample statistics.”

I discovered your articles by chance and now I keep coming back to read & understand statistical concepts. These articles are very informative & easy to digest. Thanks for the simplifying things.

June 20, 2020 at 9:53 pm

I’m so happy to hear that you’ve found my website to be helpful!

To answer your questions, keep in mind that a central tenet of inferential statistics is that the random sample that a study drew was only one of an infinite number of possible samples it could’ve drawn. Each random sample produces different results. Most results will cluster around the population value, assuming they used good methodology. However, random sampling error always exists and makes it so that population estimates from a sample almost never exactly equal the correct population value.

So, imagine that we’re studying a medication and comparing the treatment and control groups. Suppose that the medicine is truly not effective and that the population difference between the treatment and control group is zero (i.e., no difference). Despite the true difference being zero, most sample estimates will show some degree of either a positive or negative effect thanks to random sampling error. So, just because a study has an observed difference does not mean that a difference exists at the population level. So, on to your questions:

1. If the observed difference is just random error, then it makes sense that if you collected another random sample, the difference could change. It could change from negative to positive, positive to negative, more extreme, less extreme, etc. However, if the difference exists at the population level, most random samples drawn from the population will reflect that difference. If the medicine has an effect, most random samples will reflect that fact and not bounce around on both sides of zero as much.

2. This is closely related to the previous answer. If there is no difference at the population level, but say you approve the medicine because of the observed effects in a sample. Even though your random sample showed an effect (which was really random error), that effect doesn’t exist. So, when you start using it on a larger scale, people won’t benefit from the medicine. That’s why it’s important to separate out what is easily explained by random error versus what is not easily explained by it.

I think reading my post about how hypothesis tests work will help clarify this process. Also, in about 24 hours (as I write this), I’ll be releasing my new ebook about Hypothesis Testing!

May 29, 2020 at 5:23 am

Hi Jim, I really enjoy your blog. Can you please link me on your blog where you discuss about Subgroup analysis and how it is done? I need to use non parametric and parametric statistical methods for my work and also do subgroup analysis in order to identify potential groups of patients that may benefit more from using a treatment than other groups.

May 29, 2020 at 2:12 pm

Hi, I don’t have a specific article about subgroup analysis. However, subgroup analysis is just the dividing up of a larger sample into subgroups and then analyzing those subgroups separately. You can use the various analyses I write about on the subgroups.

Alternatively, you can include the subgroups in regression analysis as an indicator variable and include that variable as a main effect and an interaction effect to see how the relationships vary by subgroup without needing to subdivide your data. I write about that approach in my article about comparing regression lines . This approach is my preferred approach when possible.

April 19, 2020 at 7:58 am

sir is confidence interval is a part of estimation?

April 17, 2020 at 3:36 pm

Sir can u plz briefly explain alternatives of hypothesis testing? I m unable to find the answer

April 18, 2020 at 1:22 am

Assuming you want to draw conclusions about populations by using samples (i.e., inferential statistics ), you can use confidence intervals and bootstrap methods as alternatives to the traditional hypothesis testing methods.

March 9, 2020 at 10:01 pm

Hi JIm, could you please help with activities that can best teach concepts of hypothesis testing through simulation, Also, do you have any question set that would enhance students intuition why learning hypothesis testing as a topic in introductory statistics. Thanks.

March 5, 2020 at 3:48 pm

Hi Jim, I’m studying multiple hypothesis testing & was wondering if you had any material that would be relevant. I’m more trying to understand how testing multiple samples simultaneously affects your results & more on the Bonferroni Correction

March 5, 2020 at 4:05 pm

I write about multiple comparisons (aka post hoc tests) in the ANOVA context . I don’t talk about Bonferroni Corrections specifically but I cover related types of corrections. I’m not sure if that exactly addresses what you want to know but is probably the closest I have already written. I hope it helps!

January 14, 2020 at 9:03 pm

Thank you! Have a great day/evening.

January 13, 2020 at 7:10 pm

Any help would be greatly appreciated. What is the difference between The Hypothesis Test and The Statistical Test of Hypothesis?

January 14, 2020 at 11:02 am

They sound like the same thing to me. Unless this is specialized terminology for a particular field or the author was intending something specific, I’d guess they’re one and the same.

April 1, 2019 at 10:00 am

so these are the only two forms of Hypothesis used in statistical testing?

April 1, 2019 at 10:02 am

Are you referring to the null and alternative hypotheses? If so, yes, those are the standard hypotheses in a statistical hypothesis test.

April 1, 2019 at 9:57 am

year very insightful post, thanks for the write up

October 27, 2018 at 11:09 pm

hi there, am upcoming statistician, out of all blogs that i have read, i have found this one more useful as long as my problem is concerned. thanks so much

October 27, 2018 at 11:14 pm

Hi Stano, you’re very welcome! Thanks for your kind words. They mean a lot! I’m happy to hear that my posts were able to help you. I’m sure you will be a fantastic statistician. Best of luck with your studies!

October 26, 2018 at 11:39 am

Dear Jim, thank you very much for your explanations! I have a question. Can I use t-test to compare two samples in case each of them have right bias?

October 26, 2018 at 12:00 pm

Hi Tetyana,

You’re very welcome!

The term “right bias” is not a standard term. Do you by chance mean right skewed distributions? In other words, if you plot the distribution for each group on a histogram they have longer right tails? These are not the symmetrical bell-shape curves of the normal distribution.

If that’s the case, yes you can as long as you exceed a specific sample size within each group. I include a table that contains these sample size requirements in my post about nonparametric vs parametric analyses .

Bias in statistics refers to cases where an estimate of a value is systematically higher or lower than the true value. If this is the case, you might be able to use t-tests, but you’d need to be sure to understand the nature of the bias so you would understand what the results are really indicating.

I hope this helps!

April 2, 2018 at 7:28 am

Simple and upto the point 👍 Thank you so much.

April 2, 2018 at 11:11 am

Hi Kalpana, thanks! And I’m glad it was helpful!

March 26, 2018 at 8:41 am

Am I correct if I say: Alpha – Probability of wrongly rejection of null hypothesis P-value – Probability of wrongly acceptance of null hypothesis

March 28, 2018 at 3:14 pm

You’re correct about alpha. Alpha is the probability of rejecting the null hypothesis when the null is true.

Unfortunately, your definition of the p-value is a bit off. The p-value has a fairly convoluted definition. It is the probability of obtaining the effect observed in a sample, or more extreme, if the null hypothesis is true. The p-value does NOT indicate the probability that either the null or alternative is true or false. Although, those are very common misinterpretations. To learn more, read my post about how to interpret p-values correctly .

March 2, 2018 at 6:10 pm

I recently started reading your blog and it is very helpful to understand each concept of statistical tests in easy way with some good examples. Also, I recommend to other people go through all these blogs which you posted. Specially for those people who have not statistical background and they are facing to many problems while studying statistical analysis.

Thank you for your such good blogs.

March 3, 2018 at 10:12 pm

Hi Amit, I’m so glad that my blog posts have been helpful for you! It means a lot to me that you took the time to write such a nice comment! Also, thanks for recommending my blog to others! I try really hard to write posts about statistics that are easy to understand.

January 17, 2018 at 7:03 am

I recently started reading your blog and I find it very interesting. I am learning statistics by my own, and I generally do many google search to understand the concepts. So this blog is quite helpful for me, as it have most of the content which I am looking for.

January 17, 2018 at 3:56 pm

Hi Shashank, thank you! And, I’m very glad to hear that my blog is helpful!

January 2, 2018 at 2:28 pm

thank u very much sir.

January 2, 2018 at 2:36 pm

You’re very welcome, Hiral!

November 21, 2017 at 12:43 pm

Thank u so much sir….your posts always helps me to be a #statistician

November 21, 2017 at 2:40 pm

Hi Sachin, you’re very welcome! I’m happy that you find my posts to be helpful!

November 19, 2017 at 8:22 pm

great post as usual, but it would be nice to see an example.

November 19, 2017 at 8:27 pm

Thank you! At the end of this post, I have links to four other posts that show examples of hypothesis tests in action. You’ll find what you’re looking for in those posts!


Hypothesis Testing


A hypothesis test is a statistical inference method used to test the significance of a proposed (hypothesized) relation between population parameters and their corresponding sample estimators. In other words, hypothesis tests are used to determine whether a sample contains enough evidence to support a hypothesis about the entire population.

The test considers two hypotheses: the null hypothesis, which is a statement meant to be tested, usually something like "there is no effect" with the intention of proving this false, and the alternate hypothesis, which is the statement meant to stand after the test is performed. The two hypotheses must be mutually exclusive; moreover, in most applications, the two are complementary (one being the negation of the other). The test works by comparing the \(p\)-value to the level of significance (a chosen target). If the \(p\)-value is less than or equal to the level of significance, then the null hypothesis is rejected.

When analyzing data, often only a sample of limited size can be collected and processed efficiently. In some situations the error terms follow a continuous distribution with infinitely many possible values, so a sample is used to assess the accuracy of the chosen test statistic. Hypothesis testing offers an advantage over simply guessing which distribution or which parameters the data follow.

Definitions and Methodology

Hypothesis test and confidence intervals.

In statistical inference, properties (parameters) of a population are analyzed by sampling data sets. Given assumptions on the distribution, i.e. a statistical model of the data, certain hypotheses can be deduced from the known behavior of the model. These hypotheses must be tested against sampled data from the population.

The null hypothesis (denoted \(H_0\)) is a statement that is assumed to be true. If the null hypothesis is rejected, then there is enough evidence (statistical significance) to accept the alternate hypothesis (denoted \(H_1\)). Before doing any test for significance, both hypotheses must be clearly stated and non-conflicting, i.e. mutually exclusive, statements.

Rejecting the null hypothesis when it is true is called a type I error; its probability is denoted \(\alpha\). Failing to reject the null hypothesis when it is false is called a type II error; its probability is denoted \(\beta\). Also, \(\alpha\) is known as the significance level, and \(1-\beta\) is known as the power of the test.

| | \(H_0\) is true | \(H_0\) is false |
| --- | --- | --- |
| Reject \(H_0\) | Type I error | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Type II error |

The test statistic is the standardized value computed from the sampled data under the assumption that the null hypothesis is true, for a chosen test. These tests depend on the statistic to be studied and the assumed distribution it follows, e.g. the population mean following a normal distribution.

The \(p\)-value is the probability of observing a test statistic at least as extreme as the one obtained, in the direction of the alternate hypothesis, given that the null hypothesis is true.

The critical value is the value of the assumed distribution of the test statistic beyond which the null hypothesis is rejected, chosen so that the probability of making a type I error equals the significance level \(\alpha\).
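The reading of \(\alpha\) as the probability of a type I error can be checked with a small simulation. The sketch below is only illustrative: it assumes a hypothetical standard normal population for which \(H_0\) really is true, repeatedly draws samples, runs a right-tailed z-test on each, and counts how often the test rejects anyway. The function name, sample size, and number of trials are arbitrary choices, not part of the original text.

```python
import random
from statistics import NormalDist

def type_i_error_rate(alpha=0.05, n=30, trials=20_000, seed=0):
    """Estimate how often a right-tailed z-test rejects a TRUE null hypothesis."""
    rng = random.Random(seed)
    mu0, sigma = 0.0, 1.0                       # hypothetical population; H0 (mu = mu0) is true
    z_crit = NormalDist().inv_cdf(1 - alpha)    # right-tailed critical value
    rejections = 0
    for _ in range(trials):
        sample_mean = sum(rng.gauss(mu0, sigma) for _ in range(n)) / n
        z = (sample_mean - mu0) / (sigma / n ** 0.5)
        if z > z_crit:                          # a rejection here is a type I error
            rejections += 1
    return rejections / trials

rate = type_i_error_rate()
print(f"estimated type I error rate: {rate:.3f}")
```

With enough trials the printed rate should land close to the chosen \(\alpha\), which is the sense in which \(\alpha\) is "the probability of a type I error."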

Methodologies: Given an estimator \(\hat \theta\) of a population parameter \(\theta\), following a probability distribution \(P(T)\), computed from a sample \(\mathcal{S},\) and given a significance level \(\alpha\) and test statistic \(t^*:\)

- Define \(H_0\) and \(H_1.\)

- Compute the test statistic \(t^*.\)

- \(p\)-value approach (most prevalent): Find the \(p\)-value using \(t^*\) (right-tailed). If the \(p\)-value is at most \(\alpha,\) reject \(H_0\). Otherwise, fail to reject \(H_0\).

- Critical value approach: Find the critical value \(t_\alpha\) by solving the equation \(P(T\geq t_\alpha)=\alpha\) (right-tailed). If \(t^*>t_\alpha\), reject \(H_0\). Otherwise, fail to reject \(H_0\).

Note: Failing to reject \(H_0\) only means inability to accept \(H_1\); it does not mean that \(H_0\) is accepted.

Assume a normally distributed population has recorded cholesterol levels with various statistics computed. From a sample of 100 subjects in the population, the sample mean was 214.12 mg/dL (milligrams per deciliter), with a sample standard deviation of 45.71 mg/dL. Perform a hypothesis test, with significance level 0.05, to test if there is enough evidence to conclude that the population mean is larger than 200 mg/dL.

Hypothesis Test

We will perform a hypothesis test using the \(p\)-value approach with significance level \(\alpha=0.05:\)

- Define \(H_0\): \(\mu=200\).

- Define \(H_1\): \(\mu>200\).

- Since our values are normally distributed, the test statistic is \(z^*=\frac{\bar X - \mu_0}{\frac{s}{\sqrt{n}}}=\frac{214.12 - 200}{\frac{45.71}{\sqrt{100}}}\approx 3.09\).

- Using a standard normal distribution, we find that our \(p\)-value is approximately \(0.001\).

- Since the \(p\)-value is at most \(\alpha=0.05,\) we reject \(H_0\).

Therefore, we can conclude that the test shows sufficient evidence to support the claim that \(\mu\) is larger than \(200\) mg/dL.
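The cholesterol example can be reproduced in a few lines. The following is a minimal sketch using only Python's standard library; it runs both the \(p\)-value approach and the critical value approach on the same numbers so the two decisions can be compared. The variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

# Numbers from the worked example: right-tailed test of H0: mu = 200 vs H1: mu > 200
x_bar, mu0, s, n, alpha = 214.12, 200.0, 45.71, 100, 0.05
std_normal = NormalDist()                     # standard normal, mean 0 and sd 1

z_star = (x_bar - mu0) / (s / sqrt(n))        # test statistic

# p-value approach: area to the right of z_star
p_value = 1 - std_normal.cdf(z_star)
reject_by_p = p_value <= alpha

# Critical value approach: z_alpha solves P(Z >= z_alpha) = alpha
z_alpha = std_normal.inv_cdf(1 - alpha)
reject_by_critical_value = z_star > z_alpha

print(f"z* = {z_star:.2f}, p-value = {p_value:.4f}, z_alpha = {z_alpha:.3f}")
print("reject H0:", reject_by_p, reject_by_critical_value)
```

Both approaches lead to the same decision here, as they should for any fixed \(\alpha\).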

If the sample size were smaller, the normal and \(t\)-distributions would differ noticeably, so the \(t\)-distribution should be used. Also, if the question asks whether the mean differs from a value in either direction, a two-tailed test is needed instead.

Assume a population's cholesterol levels are recorded and various statistics are computed. From a sample of 25 subjects, the sample mean was 214.12 mg/dL (milligrams per deciliter), with a sample standard deviation of 45.71 mg/dL. Perform a hypothesis test, with significance level 0.05, to test if there is enough evidence to conclude that the population mean is not equal to 200 mg/dL.

Hypothesis Test

We will perform a hypothesis test using the \(p\)-value approach with significance level \(\alpha=0.05\) and the \(t\)-distribution with 24 degrees of freedom:

- Define \(H_0\): \(\mu=200\).

- Define \(H_1\): \(\mu\neq 200\).

- Using the \(t\)-distribution, the test statistic is \(t^*=\frac{\bar X - \mu_0}{\frac{s}{\sqrt{n}}}=\frac{214.12 - 200}{\frac{45.71}{\sqrt{25}}}\approx 1.54\).

- Using a \(t\)-distribution with 24 degrees of freedom, we find that our \(p\)-value is approximately \(2(0.068)=0.136\). We have multiplied by two since this is a two-tailed test, i.e. the mean can be either smaller or larger than 200.

- Since the \(p\)-value is larger than \(\alpha=0.05,\) we fail to reject \(H_0\).

Therefore, the test does not show sufficient evidence to support the claim that \(\mu\) is not equal to \(200\) mg/dL.
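The two-tailed \(t\)-test above can also be checked numerically. Python's standard library has no \(t\)-distribution, so the sketch below substitutes a simple numerical integration (Simpson's rule) of the Student \(t\) density to approximate the tail area; in practice a statistics library would normally be used instead. The helper names `t_pdf` and `t_upper_tail` are hypothetical.

```python
from math import gamma, pi, sqrt

def t_pdf(x, df):
    """Density of the Student t-distribution with df degrees of freedom."""
    coeff = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coeff * (1 + x * x / df) ** (-(df + 1) / 2)

def t_upper_tail(t, df, steps=2000):
    """Approximate P(T >= t) for t >= 0 using Simpson's rule on [0, t]."""
    h = t / steps                                  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3                     # half the mass lies above 0 by symmetry

# Numbers from the worked example: H0: mu = 200 vs H1: mu != 200
x_bar, mu0, s, n, alpha = 214.12, 200.0, 45.71, 25, 0.05
t_star = (x_bar - mu0) / (s / sqrt(n))
p_value = 2 * t_upper_tail(abs(t_star), df=n - 1)  # doubled for the two-tailed test

print(f"t* = {t_star:.2f}, two-tailed p-value = {p_value:.3f}")
print("reject H0:", p_value <= alpha)
```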

Failing to reject on a two-tailed hypothesis test (with significance level \(\alpha\)) for a population parameter \(\theta\) is equivalent to the hypothesized value lying inside a confidence interval (with confidence level \(1-\alpha\)) for \(\theta\). If the hypothesized value of \(\theta\) falls inside the confidence interval, then the test has failed to reject the null hypothesis (with \(p\)-value greater than \(\alpha\)). Otherwise, if the hypothesized value does not fall in the confidence interval, then the null hypothesis is rejected in favor of the alternate (with \(p\)-value at most \(\alpha\)).
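As a rough illustration of this equivalence, the sketch below builds a 95% confidence interval from the 25-subject cholesterol sample and checks whether the hypothesized mean of 200 mg/dL lies inside it. The critical value 2.064 is taken from a standard \(t\)-table for 24 degrees of freedom and is an assumption of this example rather than something computed here.

```python
from math import sqrt

x_bar, s, n, mu0 = 214.12, 45.71, 25, 200.0
t_crit = 2.064                      # assumed table value: two-tailed alpha = 0.05, 24 df

standard_error = s / sqrt(n)
margin = t_crit * standard_error
ci = (x_bar - margin, x_bar + margin)            # 95% confidence interval for mu

t_star = (x_bar - mu0) / standard_error
mu0_inside_ci = ci[0] <= mu0 <= ci[1]
fail_to_reject = abs(t_star) <= t_crit           # two-tailed critical value rule

print(f"95% CI: ({ci[0]:.2f}, {ci[1]:.2f})")
print("mu0 inside CI:", mu0_inside_ci, "| fail to reject H0:", fail_to_reject)
```

The two booleans always agree, because both compare the distance between the sample mean and the hypothesized mean against the same margin.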

- Statistics (Estimation)

- Normal Distribution

- Correlation

- Confidence Intervals


Hypothesis Testing: 4 Steps and Example


Hypothesis testing, sometimes called significance testing, is a statistical procedure in which an analyst tests an assumption regarding a population parameter. The methodology employed by the analyst depends on the nature of the data used and the reason for the analysis.

Hypothesis testing is used to assess the plausibility of a hypothesis by using sample data. Such data may come from a larger population or a data-generating process. The word "population" will be used for both of these cases in the following descriptions.

Key Takeaways

- Hypothesis testing is used to assess the plausibility of a hypothesis by using sample data.

- The test provides evidence concerning the plausibility of the hypothesis, given the data.

- Statistical analysts test a hypothesis by measuring and examining a random sample of the population being analyzed.

- The four steps of hypothesis testing include stating the hypotheses, formulating an analysis plan, analyzing the sample data, and analyzing the result.

How Hypothesis Testing Works

In hypothesis testing, an analyst tests a statistical sample, intending to provide evidence on the plausibility of the null hypothesis. Statistical analysts measure and examine a random sample of the population being analyzed. All analysts use a random population sample to test two different hypotheses: the null hypothesis and the alternative hypothesis.

The null hypothesis is usually a hypothesis of equality between population parameters; e.g., a null hypothesis may state that the population mean return is equal to zero. The alternative hypothesis is effectively the opposite of a null hypothesis. Thus, they are mutually exclusive, and only one can be true. However, one of the two hypotheses will always be true.

The null hypothesis is a statement about a population parameter, such as the population mean, that is assumed to be true.

4 Step Process

- State the hypotheses.

- Formulate an analysis plan, which outlines how the data will be evaluated.

- Carry out the plan and analyze the sample data.

- Analyze the results and either reject the null hypothesis, or state that the null hypothesis is plausible, given the data.

Example of Hypothesis Testing

If an individual wants to test that a penny has exactly a 50% chance of landing on heads, the null hypothesis would be that 50% is correct, and the alternative hypothesis would be that 50% is not correct. Mathematically, the null hypothesis is represented as Ho: P = 0.5. The alternative hypothesis is shown as "Ha" and is identical to the null hypothesis, except with the equal sign struck-through, meaning that it does not equal 50%.

A random sample of 100 coin flips is taken, and the null hypothesis is tested. If it is found that the 100 coin flips were distributed as 40 heads and 60 tails, the analyst would assume that a penny does not have a 50% chance of landing on heads and would reject the null hypothesis and accept the alternative hypothesis.

If there were 48 heads and 52 tails, then it is plausible that the coin could be fair and still produce such a result. In cases such as this where the null hypothesis is "accepted," the analyst states that the difference between the expected results (50 heads and 50 tails) and the observed results (48 heads and 52 tails) is "explainable by chance alone."
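To make the coin example concrete, here is a minimal sketch of a two-tailed test of a proportion using the normal approximation to the binomial distribution. The choice of the normal approximation and the function name `proportion_z_test` are assumptions of this illustration; the text above does not say which test the analyst uses, and an exact binomial test can give a somewhat different \(p\)-value.

```python
from math import sqrt
from statistics import NormalDist

def proportion_z_test(heads, flips, p0=0.5):
    """Two-tailed z-test of H0: P = p0 using the normal approximation."""
    p_hat = heads / flips
    standard_error = sqrt(p0 * (1 - p0) / flips)   # standard error under H0
    z = (p_hat - p0) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))   # both tails
    return z, p_value

z_40, p_40 = proportion_z_test(40, 100)   # 40 heads, 60 tails
z_48, p_48 = proportion_z_test(48, 100)   # 48 heads, 52 tails

print(f"40 heads: z = {z_40:.2f}, p-value = {p_40:.4f}")
print(f"48 heads: z = {z_48:.2f}, p-value = {p_48:.4f}")
```

Under this approximation, 40 heads falls just below a 0.05 threshold, while 48 heads is entirely consistent with a fair coin.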

When Did Hypothesis Testing Begin?

Some statisticians attribute the first hypothesis tests to satirical writer John Arbuthnot in 1710, who studied male and female births in England after observing that in nearly every year, male births exceeded female births by a slight proportion. Arbuthnot calculated that the probability of this happening by chance was small, and therefore it was due to “divine providence.”

What are the Benefits of Hypothesis Testing?

Hypothesis testing helps assess the accuracy of new ideas or theories by testing them against data. This allows researchers to determine whether the evidence supports their hypothesis, helping to avoid false claims and conclusions. Hypothesis testing also provides a framework for decision-making based on data rather than personal opinions or biases. By relying on statistical analysis, hypothesis testing helps to reduce the effects of chance and confounding variables, providing a robust framework for making informed conclusions.

What are the Limitations of Hypothesis Testing?

Hypothesis testing relies exclusively on data and doesn’t provide a comprehensive understanding of the subject being studied. Additionally, the accuracy of the results depends on the quality of the available data and the statistical methods used. Inaccurate data or inappropriate hypothesis formulation may lead to incorrect conclusions or failed tests. Hypothesis testing can also lead to errors, such as analysts either accepting or rejecting a null hypothesis when they shouldn’t have. These errors may result in false conclusions or missed opportunities to identify significant patterns or relationships in the data.

Hypothesis testing refers to a statistical process that helps researchers determine the reliability of a study. By using a well-formulated hypothesis and set of statistical tests, individuals or businesses can make inferences about the population that they are studying and draw conclusions based on the data presented. All hypothesis testing methods have the same four-step process, which includes stating the hypotheses, formulating an analysis plan, analyzing the sample data, and analyzing the result.


Hypothesis Testing

Hypothesis testing is a tool for making statistical inferences about the population data. It is an analysis tool that tests assumptions and determines how likely something is within a given standard of accuracy. Hypothesis testing provides a way to verify whether the results of an experiment are valid.

A null hypothesis and an alternative hypothesis are set up before performing the hypothesis testing. This helps to arrive at a conclusion regarding the sample obtained from the population. In this article, we will learn more about hypothesis testing, its types, steps to perform the testing, and associated examples.


What is Hypothesis Testing in Statistics?

Hypothesis testing uses sample data from the population to draw useful conclusions regarding the population probability distribution . It tests an assumption made about the data using different types of hypothesis testing methodologies. The hypothesis testing results in either rejecting or not rejecting the null hypothesis.

Hypothesis Testing Definition

Hypothesis testing can be defined as a statistical tool that is used to identify if the results of an experiment are meaningful or not. It involves setting up a null hypothesis and an alternative hypothesis. These two hypotheses will always be mutually exclusive. This means that if the null hypothesis is true then the alternative hypothesis is false and vice versa. An example of hypothesis testing is setting up a test to check if a new medicine works on a disease in a more efficient manner.

Null Hypothesis

The null hypothesis is a concise mathematical statement that is used to indicate that there is no difference between two possibilities. In other words, there is no difference between certain characteristics of data. This hypothesis assumes that the outcomes of an experiment are based on chance alone. It is denoted as \(H_{0}\). Hypothesis testing is used to conclude if the null hypothesis can be rejected or not. Suppose an experiment is conducted to check if girls are shorter than boys at the age of 5. The null hypothesis will say that they are the same height.

Alternative Hypothesis

The alternative hypothesis is an alternative to the null hypothesis. It is used to show that the observations of an experiment are due to some real effect. It states that there is a statistically significant difference between two possible outcomes and can be denoted as \(H_{1}\) or \(H_{a}\). For the above-mentioned example, the alternative hypothesis would be that girls are shorter than boys at the age of 5.

Hypothesis Testing P Value

In hypothesis testing, the p value is used to indicate whether the results obtained after conducting a test are statistically significant or not. It is the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true. This value is always a number between 0 and 1. The p value is compared to an alpha level, \(\alpha\), also called the significance level. The alpha level can be defined as the acceptable risk of incorrectly rejecting the null hypothesis. The alpha level is usually chosen between 1% and 5%.

Hypothesis Testing Critical region

All sets of values that lead to rejecting the null hypothesis lie in the critical region. Furthermore, the value that separates the critical region from the non-critical region is known as the critical value.

Hypothesis Testing Formula

Depending upon the type of data available and the sample size, different types of hypothesis testing are used to determine whether the null hypothesis can be rejected or not. The hypothesis testing formulas for some important test statistics are given below:

- z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the size of the sample.

- t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). s is the sample standard deviation.

- \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\). \(O_{i}\) is the observed value and \(E_{i}\) is the expected value.

We will learn more about these test statistics in the upcoming section.

Types of Hypothesis Testing

Selecting the correct test for performing hypothesis testing can be confusing. These tests are used to determine a test statistic on the basis of which the null hypothesis can either be rejected or not rejected. Some of the important tests used for hypothesis testing are given below.

Hypothesis Testing Z Test

A z test is a way of hypothesis testing that is used for a large sample size (n ≥ 30). It is used to determine whether there is a difference between the population mean and the sample mean when the population standard deviation is known. It can also be used to compare the mean of two samples. It is used to compute the z test statistic. The formulas are given as follows:

- One sample: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

- Two samples: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).
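As an illustration of the two-sample formula, the sketch below plugs in made-up numbers. The sample means, population standard deviations, and sample sizes are purely hypothetical, and the null hypothesis is assumed to be \(\mu_1 - \mu_2 = 0\).

```python
from math import sqrt
from statistics import NormalDist

def two_sample_z(x1_bar, x2_bar, sigma1, sigma2, n1, n2, diff0=0.0):
    """z statistic for H0: mu1 - mu2 = diff0 with known population sds."""
    standard_error = sqrt(sigma1 ** 2 / n1 + sigma2 ** 2 / n2)
    return ((x1_bar - x2_bar) - diff0) / standard_error

# Hypothetical data, not taken from the text
z = two_sample_z(x1_bar=52.0, x2_bar=50.0, sigma1=4.0, sigma2=3.0, n1=40, n2=45)
p_value = 2 * (1 - NormalDist().cdf(abs(z)))   # two-tailed

print(f"z = {z:.3f}, two-tailed p-value = {p_value:.4f}")
```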

Hypothesis Testing t Test

The t test is another method of hypothesis testing that is used for a small sample size (n < 30). It is also used to compare the sample mean and population mean. However, the population standard deviation is not known. Instead, the sample standard deviation is known. The mean of two samples can also be compared using the t test.

- One sample: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\).

- Two samples: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing Chi Square

The Chi square test is a hypothesis testing method that is used to check whether the variables in a population are independent or not. It is used when the test statistic is chi-squared distributed.
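The chi-square statistic \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\) is straightforward to compute by hand or in code. The sketch below uses hypothetical counts (40 and 60 observed against 50 and 50 expected) in a goodness-of-fit setting, which is one common use of the statistic alongside tests of independence. The critical value 3.841 is the standard table value for 1 degree of freedom at \(\alpha = 0.05\) and is an assumption of this example.

```python
def chi_square_statistic(observed, expected):
    """Sum of (O_i - E_i)^2 / E_i over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

observed = [40, 60]        # hypothetical observed counts
expected = [50, 50]        # counts expected under the null hypothesis
chi2 = chi_square_statistic(observed, expected)

critical_value = 3.841     # assumed table value: df = 1, alpha = 0.05
print(f"chi-square = {chi2:.2f}, reject H0: {chi2 > critical_value}")
```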

One Tailed Hypothesis Testing

One tailed hypothesis testing is done when the rejection region is only in one direction. It can also be known as directional hypothesis testing because the effects can be tested in one direction only. This type of testing is further classified into the right tailed test and left tailed test.

Right Tailed Hypothesis Testing

The right tail test is also known as the upper tail test. This test is used to check whether the population parameter is greater than some value. The null and alternative hypotheses for this test are given as follows:

\(H_{0}\): The population parameter is ≤ some value

\(H_{1}\): The population parameter is > some value.

If the test statistic has a greater value than the critical value, then the null hypothesis is rejected.

Left Tailed Hypothesis Testing

The left tail test is also known as the lower tail test. It is used to check whether the population parameter is less than some value. The hypotheses for this hypothesis testing can be written as follows:

\(H_{0}\): The population parameter is ≥ some value

\(H_{1}\): The population parameter is < some value.

The null hypothesis is rejected if the test statistic has a value lesser than the critical value.

Two Tailed Hypothesis Testing

In this hypothesis testing method, the critical region lies on both sides of the sampling distribution. It is also known as a non - directional hypothesis testing method. The two-tailed test is used when it needs to be determined if the population parameter is assumed to be different than some value. The hypotheses can be set up as follows:

\(H_{0}\): the population parameter = some value

\(H_{1}\): the population parameter ≠ some value

The null hypothesis is rejected if the absolute value of the test statistic is greater than the critical value, that is, if the test statistic falls in either tail of the critical region.
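The three rejection rules (right-tailed, left-tailed, and two-tailed) can be collected in one small function. This is a sketch for a z statistic only; the function name `reject_null` and the `tail` labels are illustrative choices.

```python
from statistics import NormalDist

def reject_null(z, alpha=0.05, tail="two"):
    """Apply the critical value rule for a z statistic."""
    std_normal = NormalDist()
    if tail == "right":                         # H1: parameter > value
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "left":                          # H1: parameter < value
        return z < std_normal.inv_cdf(alpha)
    if tail == "two":                           # H1: parameter != value
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    raise ValueError("tail must be 'right', 'left', or 'two'")

print(reject_null(1.8, tail="right"))   # 1.8 exceeds the right-tailed critical value
print(reject_null(1.8, tail="two"))     # but not the two-tailed one
```

The same test statistic can be significant in a one-tailed test and not in a two-tailed test, because the two-tailed critical value is further from zero.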

Hypothesis Testing Steps

Hypothesis testing can be easily performed in five simple steps. The most important step is to correctly set up the hypotheses and identify the right method for hypothesis testing. The basic steps to perform hypothesis testing are as follows:

- Step 1: Set up the null hypothesis by correctly identifying whether it is the left-tailed, right-tailed, or two-tailed hypothesis testing.

- Step 2: Set up the alternative hypothesis.

- Step 3: Choose the correct significance level, \(\alpha\), and find the critical value.

- Step 4: Calculate the correct test statistic (z, t or \(\chi^{2}\)) and p-value.

- Step 5: Compare the test statistic with the critical value or compare the p-value with \(\alpha\) to arrive at a conclusion. In other words, decide if the null hypothesis is to be rejected or not.

Hypothesis Testing Example

The best way to solve a problem on hypothesis testing is by applying the 5 steps mentioned in the previous section. Suppose a researcher claims that the mean weight of men is greater than 100 kg, with a standard deviation of 15 kg. A sample of 30 men is chosen, with an average weight of 112.5 kg. Using hypothesis testing, check if there is enough evidence to support the researcher's claim. The confidence level is given as 95%.

Step 1: This is an example of a right-tailed test. Set up the null hypothesis as \(H_{0}\): \(\mu\) = 100.

Step 2: The alternative hypothesis is given by \(H_{1}\): \(\mu\) > 100.

Step 3: As this is a one-tailed test, \(\alpha\) = 100% - 95% = 5%. This can be used to determine the critical value.

1 - \(\alpha\) = 1 - 0.05 = 0.95

0.95 gives the required area under the curve. Now using a normal distribution table, the area 0.95 is at z = 1.645. A similar process can be followed for a t-test. The only additional requirement is to calculate the degrees of freedom given by n - 1.

Step 4: Calculate the z test statistic. This is because the sample size is 30. Furthermore, the sample and population means are known along with the standard deviation.

z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

\(\mu\) = 100, \(\overline{x}\) = 112.5, n = 30, \(\sigma\) = 15

z = \(\frac{112.5-100}{\frac{15}{\sqrt{30}}}\) = 4.56

Step 5: Conclusion. As 4.56 > 1.645, the null hypothesis can be rejected.
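The five steps of this example translate almost line for line into code. The following is a minimal sketch using the numbers from the example; the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

# Steps 1 and 2: H0: mu = 100, H1: mu > 100 (right-tailed test)
mu0 = 100.0

# Step 3: significance level and critical value
alpha = 0.05
z_critical = NormalDist().inv_cdf(1 - alpha)

# Step 4: z test statistic from the sample
x_bar, sigma, n = 112.5, 15.0, 30
z = (x_bar - mu0) / (sigma / sqrt(n))

# Step 5: compare the test statistic with the critical value
reject_h0 = z > z_critical
print(f"z = {z:.2f}, critical value = {z_critical:.3f}, reject H0: {reject_h0}")
```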

Hypothesis Testing and Confidence Intervals

Confidence intervals form an important part of hypothesis testing. This is because the alpha level can be determined from a given confidence level. Suppose a confidence level is given as 95%. Subtract the confidence level from 100%. This gives 100 - 95 = 5% or 0.05. This is the alpha value, which for a one-tailed test lies entirely in one tail. For a two-tailed test, the alpha value is still 0.05 but is split between the two tails, so each tail has an area of 0.05 / 2 = 0.025.
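The relationship between the confidence level, the alpha value, and the critical values can be sketched as follows for a standard normal test statistic. The variable names are illustrative.

```python
from statistics import NormalDist

confidence_level = 0.95
alpha = 1 - confidence_level                 # total area of the rejection region
std_normal = NormalDist()

# One-tailed test: all of alpha sits in a single tail
one_tailed_critical = std_normal.inv_cdf(1 - alpha)

# Two-tailed test: alpha is split, so each tail has area alpha / 2
two_tailed_critical = std_normal.inv_cdf(1 - alpha / 2)

print(f"alpha = {alpha:.2f}")
print(f"one-tailed critical value: {one_tailed_critical:.3f}")
print(f"two-tailed critical value: +/-{two_tailed_critical:.3f}")
```

These are the familiar table values of roughly 1.645 and 1.96.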

Related Articles:

- Probability and Statistics

- Data Handling

Important Notes on Hypothesis Testing

- Hypothesis testing is a technique that is used to verify whether the results of an experiment are statistically significant.

- It involves the setting up of a null hypothesis and an alternate hypothesis.

- There are three types of tests that can be conducted under hypothesis testing - z test, t test, and chi square test.

- Hypothesis testing can be classified as right tail, left tail, and two tail tests.

Examples on Hypothesis Testing

- Example 1: The average weight of a dumbbell in a gym is 90 lbs. However, a physical trainer believes that the average weight might be higher. A random sample of 5 dumbbells has an average weight of 110 lbs and a standard deviation of 18 lbs. Using hypothesis testing, check if the physical trainer's claim can be supported at a 95% confidence level.
  Solution: As the sample size is less than 30, the t-test is used.
  \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) > 90
  \(\overline{x}\) = 110, \(\mu\) = 90, n = 5, s = 18, \(\alpha\) = 0.05
  Using the t-distribution table, the critical value is 2.132.
  t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{110-90}{\frac{18}{\sqrt{5}}}\) = 2.484
  As 2.484 > 2.132, the null hypothesis is rejected.
  Answer: The average weight of the dumbbells may be greater than 90 lbs.

- Example 2: The average score on a test is 80 with a standard deviation of 10. With a new teaching curriculum introduced, it is believed that this score will change. On randomly testing 36 students, the mean score was found to be 88. With a 0.05 significance level, is there any evidence to support this claim?
  Solution: This is an example of two-tail hypothesis testing. The z test will be used.
  \(H_{0}\): \(\mu\) = 80, \(H_{1}\): \(\mu\) ≠ 80
  \(\overline{x}\) = 88, \(\mu\) = 80, n = 36, \(\sigma\) = 10
  \(\alpha\) = 0.05, so each tail has an area of 0.05 / 2 = 0.025.
  The critical value using the normal distribution table is 1.96.
  z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) = \(\frac{88-80}{\frac{10}{\sqrt{36}}}\) = 4.8
  As 4.8 > 1.96, the null hypothesis is rejected.
  Answer: There is a difference in the scores after the new curriculum was introduced.

- Example 3: The average score of a class is 90. However, a teacher believes that the average score might be lower. The scores of 6 students were randomly measured. The mean was 82 with a standard deviation of 18. With a 0.05 significance level, use hypothesis testing to check if this claim is true.
  Solution: The t test will be used.
  \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) < 90
  \(\overline{x}\) = 82, \(\mu\) = 90, n = 6, s = 18
  The critical value from the t table is -2.015.
  t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{82-90}{\frac{18}{\sqrt{6}}}\) = -1.088
  As -1.088 > -2.015, we fail to reject the null hypothesis.
  Answer: There is not enough evidence to support the claim.
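The arithmetic in the three examples above can be double-checked with one helper, since the one-sample z and t statistics share the same form. This is a minimal sketch; the critical values are the table values quoted in the examples, and the function name is illustrative.

```python
from math import sqrt

def one_sample_statistic(x_bar, mu0, sd, n):
    """(x_bar - mu0) / (sd / sqrt(n)); a z or t statistic depending on which sd is used."""
    return (x_bar - mu0) / (sd / sqrt(n))

# Example 1: right-tailed t test, critical value 2.132 (4 degrees of freedom)
t1 = one_sample_statistic(110, 90, 18, 5)
reject_1 = t1 > 2.132

# Example 2: two-tailed z test, critical value 1.96 (n = 36 as in the worked calculation)
z2 = one_sample_statistic(88, 80, 10, 36)
reject_2 = abs(z2) > 1.96

# Example 3: left-tailed t test, critical value -2.015 (5 degrees of freedom)
t3 = one_sample_statistic(82, 90, 18, 6)
reject_3 = t3 < -2.015

print(f"Example 1: t = {t1:.3f}, reject H0: {reject_1}")
print(f"Example 2: z = {z2:.3f}, reject H0: {reject_2}")
print(f"Example 3: t = {t3:.3f}, reject H0: {reject_3}")
```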


FAQs on Hypothesis Testing

What is Hypothesis Testing?

Hypothesis testing in statistics is a tool that is used to make inferences about the population data. It is also used to check if the results of an experiment are valid.

What is the z Test in Hypothesis Testing?

The z test in hypothesis testing is used to find the z test statistic for normally distributed data . The z test is used when the standard deviation of the population is known and the sample size is greater than or equal to 30.

What is the t Test in Hypothesis Testing?

The t test in hypothesis testing is used when the test statistic follows a Student's t distribution. It is used when the sample size is less than 30 and the standard deviation of the population is not known.

What is the formula for z test in Hypothesis Testing?

The formula for a one sample z test in hypothesis testing is z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) and for two samples is z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).

What is the p Value in Hypothesis Testing?

The p value helps to determine if the test results are statistically significant or not. In hypothesis testing, the null hypothesis can either be rejected or not rejected based on the comparison between the p value and the alpha level.

What is One Tail Hypothesis Testing?

When the rejection region is only on one side of the distribution curve then it is known as one tail hypothesis testing. The right tail test and the left tail test are two types of directional hypothesis testing.

What is the Alpha Level in Two Tail Hypothesis Testing?

In two tail hypothesis testing, the alpha level \(\alpha\) is divided by 2 to get the area of each tail. This is done as there are two rejection regions in the curve.


Hypothesis Testing in Statistics - Types | Examples

Lesson 10 of 24 By Avijeet Biswal


In today’s data-driven world, decisions are based on data all the time. Hypothesis testing plays a crucial role in that process, whether in business decisions, the health sector, academia, or quality improvement. Without hypotheses and hypothesis tests, you risk drawing the wrong conclusions and making bad decisions. In this tutorial, you will look at Hypothesis Testing in Statistics.


What Is Hypothesis Testing in Statistics?

Hypothesis Testing is a type of statistical analysis in which you put your assumptions about a population parameter to the test. It is used to estimate the relationship between 2 statistical variables.

Let's discuss a few examples of statistical hypotheses from real life:

- A teacher assumes that 60% of his college's students come from lower-middle-class families.

- A doctor believes that 3D (Diet, Dose, and Discipline) is 90% effective for diabetic patients.

Now that you know about hypothesis testing, look at the two types of hypothesis testing in statistics.

Hypothesis Testing Formula

Z = ( x̅ – μ0 ) / (σ /√n)

- Here, x̅ is the sample mean,

- μ0 is the hypothesized population mean,

- σ is the population standard deviation,

- n is the sample size.

How Hypothesis Testing Works?

An analyst performs hypothesis testing on a statistical sample to present evidence of the plausibility of the null hypothesis. Measurements and analyses are conducted on a random sample of the population to test a theory. Analysts use a random population sample to test two hypotheses: the null and alternative hypotheses.

The null hypothesis is typically an equality hypothesis between population parameters; for example, a null hypothesis may claim that the population mean return equals zero. The alternate hypothesis is essentially the inverse of the null hypothesis (e.g., the population mean return is not equal to zero). As a result, they are mutually exclusive, and only one can be correct. One of the two possibilities, however, will always be correct.


Null Hypothesis and Alternative Hypothesis

The Null Hypothesis is the assumption that the event will not occur. A null hypothesis has no bearing on the study's outcome unless it is rejected.

H0 is the symbol for it, and it is pronounced H-naught.

The Alternate Hypothesis is the logical opposite of the null hypothesis. The acceptance of the alternative hypothesis follows the rejection of the null hypothesis. H1 is the symbol for it.

Let's understand this with an example.

A sanitizer manufacturer claims that its product kills 95 percent of germs on average.

To put this company's claim to the test, create a null and alternate hypothesis.

H0 (Null Hypothesis): Average = 95%.

Alternative Hypothesis (H1): The average is less than 95%.

Another straightforward example to understand this concept is determining whether or not a coin is fair and balanced. The null hypothesis states that the probability of a show of heads is equal to the likelihood of a show of tails. In contrast, the alternate theory states that the probability of a show of heads and tails would be very different.


Hypothesis Testing Calculation With Examples

Let's consider a hypothesis test for the average height of women in the United States. Suppose our null hypothesis is that the average height is 5'4". We gather a sample of 100 women and determine that their average height is 5'5". The standard deviation of population is 2.

To calculate the z-score, we would use the following formula:

z = ( x̅ – μ0 ) / (σ /√n)

z = (5'5" - 5'4") / (2" / √100)

z = 1" / 0.2" = 5

We reject the null hypothesis because a z-score of 5 is far beyond the critical value of 1.96 used for a two-tailed test at the 0.05 significance level. We conclude that there is evidence to suggest that the average height of women in the US is greater than 5'4".
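The arithmetic can be checked in a few lines of Python. This is a sketch using only the standard library; the heights are converted to inches (5'4" = 64 and 5'5" = 65), and the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

# Values from the worked example, all in inches.
sample_mean = 65.0   # 5'5", the observed sample mean
null_mean = 64.0     # 5'4", the value claimed by H0
sigma = 2.0          # population standard deviation
n = 100              # sample size

standard_error = sigma / sqrt(n)                 # 2 / 10 = 0.2
z = (sample_mean - null_mean) / standard_error   # 1 / 0.2 = 5

# Two-tailed p-value: probability of a |z| at least this large under H0.
p_value = 2 * (1 - NormalDist().cdf(abs(z)))

print(f"z = {z:.2f}, p-value = {p_value:.2e}")
```

The standard error is 0.2 inches, so a 1-inch difference corresponds to a z-score of 5 and a p-value far below any conventional significance level.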

Steps in Hypothesis Testing

Hypothesis testing is a statistical method to determine if there is enough evidence in a sample of data to infer that a certain condition is true for the entire population. Here’s a breakdown of the typical steps involved in hypothesis testing:

Formulate Hypotheses

- Null Hypothesis (H0): This hypothesis states that there is no effect or difference, and it is the hypothesis you attempt to reject with your test.

- Alternative Hypothesis (H1 or Ha): This hypothesis is what you might believe to be true or hope to prove true. It is usually considered the opposite of the null hypothesis.

Choose the Significance Level (α)

The significance level, often denoted by alpha (α), is the probability of rejecting the null hypothesis when it is true. Common choices for α are 0.05 (5%), 0.01 (1%), and 0.10 (10%).

Select the Appropriate Test

Choose a statistical test based on the type of data and the hypothesis. Common tests include t-tests, chi-square tests, ANOVA, and regression analysis. The selection depends on data type, distribution, sample size, and whether the hypothesis is one-tailed or two-tailed.

Collect Data

Gather the data that will be analyzed in the test. This data should be representative of the population to infer conclusions accurately.

Calculate the Test Statistic

Based on the collected data and the chosen test, calculate a test statistic that reflects how much the observed data deviates from the null hypothesis.

Determine the p-value

The p-value is the probability of observing test results at least as extreme as the results observed, assuming the null hypothesis is correct. It helps determine the strength of the evidence against the null hypothesis.

Make a Decision

Compare the p-value to the chosen significance level:

- If the p-value ≤ α: Reject the null hypothesis, suggesting sufficient evidence in the data supports the alternative hypothesis.

- If the p-value > α: Do not reject the null hypothesis, suggesting insufficient evidence to support the alternative hypothesis.

Report the Results

Present the findings from the hypothesis test, including the test statistic, p-value, and the conclusion about the hypotheses.

Perform Post-hoc Analysis (if necessary)

Depending on the results and the study design, further analysis may be needed to explore the data more deeply or to address multiple comparisons if several hypotheses were tested simultaneously.
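The steps above can be sketched end to end for the simplest case, a two-tailed one-sample z-test with a known population standard deviation. The helper `one_sample_z_test`, the fill-weight data, and the assumed sigma of 1.5 grams are all hypothetical and serve only to illustrate the workflow.

```python
from math import sqrt
from statistics import NormalDist, mean

def one_sample_z_test(sample, mu0, sigma, alpha=0.05):
    """Two-tailed one-sample z-test with a known population sigma."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))      # test statistic
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))      # two-tailed p-value
    decision = "reject H0" if p_value <= alpha else "fail to reject H0"
    return {"n": n, "z": z, "p_value": p_value, "alpha": alpha, "decision": decision}

# Formulate hypotheses: H0: mean fill weight = 500 g, H1: mean != 500 g.
# Choose alpha = 0.05, collect data (hypothetical values), then test.
weights = [498.2, 501.1, 499.5, 497.8, 500.4, 498.9, 499.1, 497.5, 500.2, 498.6]
report = one_sample_z_test(weights, mu0=500.0, sigma=1.5, alpha=0.05)

# Report the results: statistic, p-value, and decision together.
print(report)
```

Returning the statistic, p-value, and decision in one record mirrors the reporting step, where all three are presented together.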

Types of Hypothesis Testing

Z Test

A z-test is used to determine whether a finding or relationship is statistically significant. It usually checks whether two means are the same (the null hypothesis). A z-test is appropriate only when the population standard deviation is known and the sample is large; a common rule of thumb is 30 or more data points.

T Test

A t-test is a statistical test used to compare the means of two groups. It is frequently used in hypothesis testing to determine whether two groups differ or whether a procedure or treatment affects the population of interest.
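As a rough illustration of comparing two group means, the sketch below computes Welch's t statistic (the variant that does not assume equal variances) and its approximate degrees of freedom using only the standard library. The function `welch_t` and both data sets are hypothetical. Converting the statistic into a p-value requires the t distribution, which the standard library does not provide, so that step is left to a statistics package.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    na, nb = len(group_a), len(group_b)
    # Squared standard error of each group mean (sample variance / n).
    va, vb = variance(group_a) / na, variance(group_b) / nb
    t = (mean(group_a) - mean(group_b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va**2 / (na - 1) + vb**2 / (nb - 1))
    return t, df

# Hypothetical measurements for a treated group and a control group.
treatment = [5.1, 4.9, 5.6, 5.8, 5.3, 5.5]
control = [4.6, 4.8, 4.4, 5.0, 4.7, 4.5]

t_stat, df = welch_t(treatment, control)
print(f"t = {t_stat:.2f}, df = {df:.1f}")
```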

Chi-Square

You use a Chi-square test to check whether your observed data match what a hypothesis predicts. To determine how well the expected and observed results fit, the Chi-square test analyzes the differences between observed and expected counts for categorical variables from a random sample. The test's fundamental premise is that the observed values in your data are compared to the expected values that would occur if the null hypothesis were true.
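A small goodness-of-fit example may help. The sketch below computes the chi-square statistic as the sum of (observed − expected)² / expected over the categories. The survey counts are hypothetical, and the example deliberately uses three categories because with 2 degrees of freedom the upper-tail probability has the simple closed form exp(−x/2); other degrees of freedom need a chi-square distribution function from a statistics package.

```python
from math import exp

def chi_square_gof(observed, expected):
    """Chi-square goodness-of-fit statistic: sum of (O - E)^2 / E."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical survey: 150 customers choose among three package designs.
# H0: all three designs are equally popular (50 expected choices each).
observed = [62, 48, 40]
expected = [50, 50, 50]

chi2 = chi_square_gof(observed, expected)

# Three categories give 2 degrees of freedom; for df = 2 only, the
# upper-tail probability of the chi-square distribution is exp(-x / 2).
p_value = exp(-chi2 / 2)

print(f"chi-square = {chi2:.2f}, p-value = {p_value:.4f}")
```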

Hypothesis Testing and Confidence Intervals

Both confidence intervals and hypothesis tests are inferential techniques that depend on approximating the sampling distribution. A confidence interval uses data from a sample to estimate a population parameter. A hypothesis test uses data from a sample to examine a given hypothesis, so we must have a hypothesized parameter value to conduct one.

Bootstrap distributions and randomization distributions are created using comparable simulation techniques. The observed sample statistic is the focal point of a bootstrap distribution, whereas the null hypothesis value is the focal point of a randomization distribution.
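The difference in centering can be seen in a short simulation. This is a sketch under stated assumptions: the sample and the null value `mu0` are hypothetical, and the randomization distribution is built by one common approach for a single mean, namely shifting the sample so that its mean equals the null value and then resampling with replacement.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical sample and null value (H0: population mean = 9.0).
sample = [10.2, 11.5, 9.8, 12.1, 10.9, 8.7, 11.3, 10.4, 9.9, 12.6, 10.1, 11.0]
mu0 = 9.0
n = len(sample)

# Bootstrap distribution: resample the data as observed.
boot_means = [mean(rng.choices(sample, k=n)) for _ in range(2000)]

# Randomization distribution: shift the data so H0 holds, then resample.
shifted = [x - mean(sample) + mu0 for x in sample]
null_means = [mean(rng.choices(shifted, k=n)) for _ in range(2000)]

print(f"sample mean            = {mean(sample):.2f}")
print(f"bootstrap center       = {mean(boot_means):.2f}")
print(f"randomization center   = {mean(null_means):.2f}")
```

The bootstrap means cluster around the observed sample mean, while the randomization means cluster around the null value, which is why the former is used for intervals and the latter for p-values.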

A confidence interval contains a range of plausible values for the population parameter. The discussion here is limited to two-tailed confidence intervals, which are directly connected to two-tailed hypothesis tests: the two typically lead to the same conclusion. In other words, a hypothesis test at the 0.05 level will virtually always fail to reject the null hypothesis if the 95% confidence interval contains the hypothesized value. A hypothesis test at the 0.05 level will nearly certainly reject the null hypothesis if the 95% confidence interval does not include the hypothesized value.
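This correspondence can be checked numerically for the z-based case. The sketch below reuses the height figures from the earlier example (sample mean 65 inches, sigma 2 inches, n = 100) and tries two hypothesized values; the function name `z_interval_and_test` and the second hypothesized value of 64.7 are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist

def z_interval_and_test(sample_mean, sigma, n, mu0, alpha=0.05):
    """Return the (1 - alpha) z-interval and the two-tailed p-value for mu0."""
    se = sigma / sqrt(n)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    ci = (sample_mean - z_crit * se, sample_mean + z_crit * se)
    p_value = 2 * (1 - NormalDist().cdf(abs(sample_mean - mu0) / se))
    return ci, p_value

for mu0 in (64.0, 64.7):
    ci, p = z_interval_and_test(sample_mean=65.0, sigma=2.0, n=100, mu0=mu0)
    inside = ci[0] <= mu0 <= ci[1]
    verdict = "reject H0" if p <= 0.05 else "fail to reject H0"
    print(f"mu0 = {mu0}: CI = ({ci[0]:.2f}, {ci[1]:.2f}), inside = {inside}, {verdict}")
```

In both cases the test rejects exactly when the hypothesized value lies outside the interval.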


Simple and Composite Hypothesis Testing

Depending on how precisely it specifies the population parameter, a statistical hypothesis can be classified into two types.

Simple Hypothesis: A simple hypothesis specifies an exact value for the parameter.

Composite Hypothesis: A composite hypothesis specifies a range of values.

A company is claiming that their average sales for this quarter are 1000 units. This is an example of a simple hypothesis.

Suppose the company claims that the sales are in the range of 900 to 1000 units. Then this is a case of a composite hypothesis.

One-Tailed and Two-Tailed Hypothesis Testing

A one-tailed test, also called a directional test, places the critical region in a single tail of the sampling distribution. If the test statistic falls into this region, the null hypothesis is rejected in favor of the alternative hypothesis.

In a one-tailed test, the critical region is one-sided: the test checks whether the parameter is either greater than or less than a specific value, but not both.

In a two-tailed test, the critical region is two-sided: the test checks whether the parameter is either greater than or less than the hypothesized value, so the critical region is split between both tails.

If the test statistic falls outside the range between the two critical values, that is, inside either tail, the null hypothesis is rejected in favor of the alternative hypothesis.


Right Tailed Hypothesis Testing

If the greater-than sign (>) appears in your alternative hypothesis, you are using a right-tailed test, also known as an upper-tailed test. In other words, the critical region lies in the right tail of the distribution. For instance, you can compare battery life before and after a change in production. If you want to know whether the battery life is longer than the original (let's say 90 hours), your hypothesis statements can be the following:

- The null hypothesis is that battery life is unchanged or shorter (H0: mean <= 90).

- The alternative hypothesis is that battery life has increased (H1: mean > 90).

The crucial point in this situation is that the alternate hypothesis (H1), not the null hypothesis, decides whether you get a right-tailed test.

Left Tailed Hypothesis Testing

Alternative hypotheses that assert the true value of a parameter is lower than the value in the null hypothesis are tested with a left-tailed test; they are indicated by the less-than sign "<".

Suppose H0: mean = 50 and H1: mean not equal to 50

According to the H1, the mean can be greater than or less than 50. This is an example of a Two-tailed test.

In a similar manner, if H0: mean >=50, then H1: mean <50

Here H1 states that the mean is less than 50, so this is a one-tailed (specifically, left-tailed) test.
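The choice of tail changes the p-value even when the test statistic is the same. The following sketch assumes a z statistic and uses a hypothetical value of 1.8; the helper name `tail_p_values` is illustrative.

```python
from statistics import NormalDist

def tail_p_values(z):
    """p-values for the same z statistic under each form of H1."""
    cdf = NormalDist().cdf(z)
    return {
        "left-tailed": cdf,                      # H1: parameter < null value
        "right-tailed": 1 - cdf,                 # H1: parameter > null value
        "two-tailed": 2 * min(cdf, 1 - cdf),     # H1: parameter != null value
    }

# Hypothetical test statistic.
p = tail_p_values(1.8)
for name, value in p.items():
    print(f"{name:>12}: p = {value:.4f}")
```

With z = 1.8, the right-tailed test rejects H0 at the 0.05 level while the two-tailed test does not, which is why the direction of H1 must be fixed before looking at the data.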

Type 1 and Type 2 Error

A hypothesis test can result in two types of errors.

Type 1 Error: A Type-I error occurs when the sample results lead you to reject the null hypothesis even though it is true.

Type 2 Error: A Type-II error is the reverse: the null hypothesis is not rejected even though it is false.

Suppose a teacher evaluates the examination paper to decide whether a student passes or fails.

H0: Student has passed

H1: Student has failed

Type I error will be the teacher failing the student [rejects H0] although the student scored the passing marks [H0 was true].

Type II error will be the case where the teacher passes the student [do not reject H0] although the student did not score the passing marks [H1 is true].
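Both error rates can be estimated by simulation. The sketch below repeatedly draws samples from a normal population, runs a two-tailed z-test at α = 0.05, and counts rejections. All settings (sample size 30, sigma 1, a true mean of 0.5 for the "H0 is false" case, 5,000 trials, the seed) are assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist

def rejection_rate(true_mean, mu0=0.0, sigma=1.0, n=30, alpha=0.05,
                   trials=5000, seed=0):
    """Fraction of simulated two-tailed z-tests of H0: mean = mu0 that reject."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        z = (sum(sample) / n - mu0) / (sigma / sqrt(n))
        rejections += abs(z) > z_crit
    return rejections / trials

# H0 is true: every rejection is a Type I error, so the rate should be near alpha.
type_1_rate = rejection_rate(true_mean=0.0)

# H0 is false: every failure to reject is a Type II error.
type_2_rate = 1 - rejection_rate(true_mean=0.5)

print(f"Estimated Type I error rate:  {type_1_rate:.3f}")
print(f"Estimated Type II error rate: {type_2_rate:.3f}")
```

The Type I rate is controlled directly by the choice of α, whereas the Type II rate depends on the sample size and on how far the true value lies from the null value.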


Limitations of Hypothesis Testing

Hypothesis testing has some limitations that researchers should be aware of:

- It cannot prove or establish the truth: Hypothesis testing provides evidence to support or reject a hypothesis, but it cannot confirm the absolute truth of the research question.

- Results are sample-dependent: Hypothesis testing is based on analyzing a sample from a population, so the conclusions depend on that particular sample, and a different sample from the same population could lead to a different decision.

- Possible errors: During hypothesis testing, there is a chance of committing type I error (rejecting a true null hypothesis) or type II error (failing to reject a false null hypothesis).

- Assumptions and requirements: Different tests have specific assumptions and requirements that must be met to accurately interpret results.


After reading this tutorial, you should have a much better understanding of hypothesis testing, one of the most important concepts in the field of Data Science. The majority of hypotheses are based on speculation about observed behavior, natural phenomena, or established theories.


1. What is hypothesis testing in statistics with example?

Hypothesis testing is a statistical method used to determine whether there is enough evidence in sample data to draw conclusions about a population. It involves formulating two competing hypotheses, the null hypothesis (H0) and the alternative hypothesis (Ha), and then collecting data to assess the evidence. An example: testing whether a new drug improves patient recovery compared to the standard treatment (Ha) against the default assumption that it does not (H0), based on collected patient data.

2. What is H0 and H1 in statistics?

In statistics, H0 and H1 represent the null and alternative hypotheses. The null hypothesis, H0, is the default assumption that no effect or difference exists between groups or conditions. The alternative hypothesis, H1, is the competing claim suggesting an effect or a difference. Statistical tests determine whether to reject the null hypothesis in favor of the alternative hypothesis based on the data.

3. What is a simple hypothesis with an example?

In research design, a simple hypothesis is a specific statement predicting a single relationship between two variables. (This is a different usage from the statistical definition given earlier, where a simple hypothesis specifies an exact parameter value.) For example, a simple hypothesis might state, "Increased sunlight exposure increases the growth rate of sunflowers." Here, the hypothesis suggests a direct relationship between the amount of sunlight (independent variable) and the growth rate of sunflowers (dependent variable), with no additional variables considered.

4. What are the 3 major types of hypothesis?

The three major types of hypotheses are:

- Null Hypothesis (H0): Represents the default assumption, stating that there is no significant effect or relationship in the data.

- Alternative Hypothesis (Ha): Contradicts the null hypothesis and proposes a specific effect or relationship that researchers want to investigate.

- Nondirectional Hypothesis: An alternative hypothesis that doesn't specify the direction of the effect, leaving it open for both positive and negative possibilities.


About the Author

Avijeet is a Senior Research Analyst at Simplilearn. Passionate about Data Analytics, Machine Learning, and Deep Learning, Avijeet is also interested in politics, cricket, and football.


What is Hypothesis Testing? Types and Methods

- Soumyaa Rawat

- Jul 23, 2021

Hypothesis Testing