data analysis

data analysis, the process of systematically collecting, cleaning, transforming, describing, modeling, and interpreting data, generally employing statistical techniques. Data analysis is an important part of both scientific research and business, where demand has grown in recent years for data-driven decision making. Data analysis techniques are used to gain useful insights from datasets, which can then be used to make operational decisions or guide future research. With the rise of “big data,” the storage of vast quantities of data in large databases and data warehouses, there is increasing need to apply data analysis techniques to generate insights about volumes of data too large to be manipulated by instruments of low information-processing capacity.

Datasets are collections of information. Generally, data and datasets are themselves collected to help answer questions, make decisions, or otherwise inform reasoning. The rise of information technology has led to the generation of vast amounts of data of many kinds, such as text, pictures, videos, personal information, account data, and metadata, the last of which provide information about other data. It is common for apps and websites to collect data about how their products are used or about the people using their platforms. Consequently, there is vastly more data being collected today than at any other time in human history. A single business may track billions of interactions with millions of consumers at hundreds of locations with thousands of employees and any number of products. Analyzing that volume of data is generally only possible using specialized computational and statistical techniques.

The desire for businesses to make the best use of their data has led to the development of the field of business intelligence, which covers a variety of tools and techniques that allow businesses to perform data analysis on the information they collect.

For data to be analyzed, it must first be collected and stored. Raw data must be processed into a format that can be used for analysis and be cleaned so that errors and inconsistencies are minimized. Data can be stored in many ways, but one of the most useful is in a database. A database is a collection of interrelated data organized so that certain records (collections of data related to a single entity) can be retrieved on the basis of various criteria. The most familiar kind of database is the relational database, which stores data in tables with rows that represent records (tuples) and columns that represent fields (attributes). A query is a command that retrieves a subset of the information in the database according to certain criteria. A query may retrieve only records that meet certain criteria, or it may join fields from records across multiple tables by use of a common field.
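As a minimal sketch of the two kinds of query described above, the following Python example uses the standard-library sqlite3 module with an in-memory database. The table names (customers, orders), their fields, and the sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3

# In-memory relational database; tables, fields, and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0)],
)

# Query 1: retrieve only the records that meet a criterion.
large_orders = conn.execute(
    "SELECT order_id, amount FROM orders WHERE amount > ? ORDER BY order_id",
    (20.0,),
).fetchall()

# Query 2: join fields from two tables through the common field customer_id.
joined = conn.execute(
    "SELECT c.name, o.amount FROM orders AS o "
    "JOIN customers AS c ON c.customer_id = o.customer_id "
    "ORDER BY o.order_id"
).fetchall()

print(large_orders)
print(joined)
```

Each row of a result is one record; the join pairs every order with the customer whose customer_id matches.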

Frequently, data from many sources is collected into large archives of data called data warehouses. The process of moving data from its original sources (such as databases) to a centralized location (generally a data warehouse) is called ETL (which stands for extract, transform, and load).

- The extraction step occurs when you identify and copy or export the desired data from its source, such as by running a database query to retrieve the desired records.

- The transformation step is the process of cleaning the data so that they fit the analytical need for the data and the schema of the data warehouse. This may involve changing formats for certain fields, removing duplicate records, or renaming fields, among other processes.

- Finally, the clean data are loaded into the data warehouse, where they may join vast amounts of historical data and data from other sources.
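The three ETL steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the raw CSV text, the field names, and the in-memory SQLite database standing in for a data warehouse are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (normally a file or a query result).
RAW_CSV = """Customer Name,signup
 Ada ,2024-01-05
Grace,2024-02-10
 Ada ,2024-01-05
"""

def extract(text):
    """Extract: read the raw records from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim whitespace and drop duplicate records."""
    seen, clean = set(), []
    for row in rows:
        record = (row["Customer Name"].strip(), row["signup"].strip())
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean

def load(conn, records):
    """Load: append the clean records to the warehouse table (with renamed fields)."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (name TEXT, signup_date TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", records)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(warehouse, transform(extract(RAW_CSV)))
loaded = warehouse.execute(
    "SELECT name, signup_date FROM customers ORDER BY rowid"
).fetchall()
print(loaded)
```

Keeping the three steps as separate functions mirrors the description: each can be changed or tested without touching the others.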

After data are effectively collected and cleaned, they can be analyzed with a variety of techniques. Analysis often begins with descriptive and exploratory data analysis. Descriptive data analysis uses statistics to organize and summarize data, making it easier to understand the broad qualities of the dataset. Exploratory data analysis looks for insights into the data that may arise from descriptions of distribution, central tendency, or variability for a single data field. Further relationships between data may become apparent by examining two fields together. Visualizations may be employed during analysis, such as histograms (graphs in which the length of a bar indicates a quantity) or stem-and-leaf plots (which divide data into buckets, or “stems,” with individual data points serving as “leaves” on the stem).
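To make the stem-and-leaf idea concrete, here is a small Python sketch. It assumes non-negative integers, uses the tens part as the stem and the units digit as the leaf, and runs on made-up scores; the function name stem_and_leaf is hypothetical.

```python
from collections import defaultdict

def stem_and_leaf(values):
    """Group non-negative integers by their tens part (stem); units digits are leaves."""
    stems = defaultdict(list)
    for v in sorted(values):
        stems[v // 10].append(v % 10)
    return dict(stems)

scores = [12, 15, 21, 23, 23, 28, 34, 35, 47]  # hypothetical data
plot = stem_and_leaf(scores)
for stem, leaves in plot.items():
    print(f"{stem} | {' '.join(str(leaf) for leaf in leaves)}")
```

Read sideways, the rows form a rough histogram: the stem with the most leaves is the bucket where the data concentrate.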

Data analysis frequently goes beyond descriptive analysis to predictive analysis, making predictions about the future using predictive modeling techniques. Predictive modeling uses machine learning, regression analysis methods (which mathematically calculate the relationship between an independent variable and a dependent variable), and classification techniques to identify trends and relationships among variables. Predictive analysis may involve data mining, which is the process of discovering interesting or useful patterns in large volumes of information. Data mining often involves cluster analysis, which tries to find natural groupings within data, and anomaly detection, which detects instances in data that are unusual and stand out from other patterns. It may also look for rules within datasets, that is, strong relationships among variables in the data.
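As one illustration of regression analysis, the sketch below fits a straight line by ordinary least squares with a single independent variable and uses it for a prediction. The data (advertising spend and units sold) and the function name fit_line are hypothetical; real predictive modeling would normally also validate the model on held-out data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one independent variable: y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: advertising spend (independent) and units sold (dependent).
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_line(spend, units)
predicted = intercept + slope * 6.0  # prediction for an unseen spend of 6.0
print(round(slope, 3), round(intercept, 3), round(predicted, 3))
```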

Data Analysis

What is Data Analysis?

According to the federal government, data analysis is "the process of systematically applying statistical and/or logical techniques to describe and illustrate, condense and recap, and evaluate data" (Responsible Conduct in Data Management). Important components of data analysis include searching for patterns, remaining unbiased in drawing inference from data, practicing responsible data management, and maintaining "honest and accurate analysis" (Responsible Conduct in Data Management).

In order to understand data analysis further, it can be helpful to take a step back and ask, "What is data?" Many of us associate data with spreadsheets of numbers and values; however, data can encompass much more than that. According to the federal government, data is "The recorded factual material commonly accepted in the scientific community as necessary to validate research findings" (OMB Circular 110). This broad definition can include information in many formats.

Some examples of types of data are as follows:

- Photographs

- Hand-written notes from field observation

- Machine learning training data sets

- Ethnographic interview transcripts

- Sheet music

- Scripts for plays and musicals

- Observations from laboratory experiments (CMU Data 101)

Thus, data analysis includes the processing and manipulation of these data sources in order to gain additional insight from data, answer a research question, or confirm a research hypothesis.

Data analysis falls within the larger research data lifecycle.

[Figure: research data lifecycle (University of Virginia)]

Why Analyze Data?

Through data analysis, a researcher can gain additional insight from data and draw conclusions to address the research question or hypothesis. Use of data analysis tools helps researchers understand and interpret data.

What are the Types of Data Analysis?

Data analysis can be quantitative, qualitative, or mixed methods.

Quantitative research typically involves numbers and "close-ended questions and responses" (Creswell & Creswell, 2018, p. 3). Quantitative research tests variables against objective theories, usually measured and collected on instruments and analyzed using statistical procedures (Creswell & Creswell, 2018, p. 4). Quantitative analysis usually uses deductive reasoning.

Qualitative research typically involves words and "open-ended questions and responses" (Creswell & Creswell, 2018, p. 3). According to Creswell & Creswell, "qualitative research is an approach for exploring and understanding the meaning individuals or groups ascribe to a social or human problem" (2018, p. 4). Thus, qualitative analysis usually invokes inductive reasoning.

Mixed methods research uses methods from both quantitative and qualitative research approaches. Mixed methods research works under the "core assumption... that the integration of qualitative and quantitative data yields additional insight beyond the information provided by either the quantitative or qualitative data alone" (Creswell & Creswell, 2018, p. 4).

- Last Updated: Aug 28, 2024 1:41 PM

- URL: https://guides.library.georgetown.edu/data-analysis

Statistical Analysis in Research: Meaning, Methods and Types

The scientific method is an empirical approach to acquiring new knowledge by making skeptical observations and analyses to develop a meaningful interpretation. It is the basis of research and the primary pillar of modern science. Researchers seek to understand the relationships between factors associated with the phenomena of interest. In some cases, research works with large volumes of data, making it difficult to observe or manipulate each data point. As a result, statistical analysis in research becomes a means of evaluating relationships and interconnections between variables, with tools and analytical techniques suited to large datasets. Because researchers can use statistical power analysis to assess the probability of detecting an effect in such an investigation, they can gauge how reliable the findings are likely to be. Hence, statistical analysis in research eases analytical methods by focusing on the quantifiable aspects of phenomena.

What is Statistical Analysis in Research? A Simplified Definition

Statistical analysis uses quantitative data to investigate patterns, relationships, and trends in order to understand real-life and simulated phenomena. The approach is a key analytical tool in various fields, including academia, business, government, and science in general. This definition of statistical analysis in research implies that the primary focus of the scientific method is quantitative research. Notably, the investigator targets constructs developed from general concepts, so that researchers can quantify their hypotheses and present their findings in simple statistics.

When a business needs to learn how to improve its product, it collects statistical data about the production line and customer satisfaction. Qualitative data is valuable and often identifies the most common themes in the stakeholders’ responses. Quantitative data, on the other hand, establishes a level of importance, comparing the themes based on their criticality to the affected persons. For instance, descriptive statistics highlight tendency, frequency, variation, and position information. While the mean shows the average value that respondents assign to a certain aspect, the variance indicates how widely the responses are spread around that average. In any case, statistical analysis creates simplified concepts used to understand the phenomenon under investigation. It is also a key component in academia as the primary approach to data representation, especially in research projects, term papers and dissertations.

Most Useful Statistical Analysis Methods in Research

Using statistical analysis methods in research is inevitable, especially in academic assignments, projects, and term papers. It is advisable to seek assistance from your professor or another expert before you start an academic project or write the statistical analysis section of a research paper. Consulting an expert when developing a topic for a thesis or a short mid-term assignment improves your understanding of research methods and can help you select the most suitable statistical analysis method, which in turn influences the choice of data and type of study.

Descriptive Statistics

Descriptive statistics is a statistical method that summarizes quantitative figures to convey critical details about the sample and population. A descriptive statistic is a figure that quantifies a specific aspect of the data. For instance, instead of analyzing the behavior of a thousand students individually, a researcher can identify the most common actions among them. Doing so applies statistical analysis in research, particularly descriptive statistics.

- Measures of central tendency. Central tendency measures are the mean, median, and mode, the averages that summarize the data in a single typical value. They assess the centrality of the probability distribution, hence the name. These measures describe the data in relation to the center.

- Measures of frequency. These statistics document the number of times an event happens. They include frequency, count, ratios, rates, and proportions. Measures of frequency can also show how often a score occurs.

- Measures of dispersion/variation. These descriptive statistics assess the intervals between the data points. The objective is to view the spread or disparity between the specific inputs. Measures of variation include the standard deviation, variance, and range. They indicate how the spread may affect other statistics, such as the mean.

- Measures of position. Sometimes researchers investigate how scores stand relative to one another. Measures of position, such as percentiles, quartiles, and ranks, demonstrate this association. They are often useful when comparing the data to normalized information.
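The four families of measures listed above can each be computed with Python's standard library. The sketch below uses a made-up list of scores; the choice of method="inclusive" for the quartiles is one of several conventions and is an assumption of this example.

```python
import statistics
from collections import Counter

scores = [2, 3, 3, 4, 5, 5, 5, 7, 8, 10]  # hypothetical sample

# Central tendency
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Frequency: how often each score occurs, and one score's proportion
counts = Counter(scores)
proportion_of_5 = counts[5] / len(scores)

# Dispersion / variation
value_range = max(scores) - min(scores)
sample_sd = statistics.stdev(scores)

# Position: quartile cut points (the second quartile is the median)
q1, q2, q3 = statistics.quantiles(scores, n=4, method="inclusive")

print(mean, median, mode, proportion_of_5, value_range, round(sample_sd, 3), q1, q2, q3)
```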

Inferential Statistics

Inferential statistics is critical in statistical analysis in quantitative research. This approach uses statistical tests to draw conclusions about the population. Examples of inferential statistics include t-tests, F-tests, ANOVA, p-values, the Mann-Whitney U test, and the Wilcoxon W test.

Common Types of Statistical Analysis in Research

Although inferential and descriptive statistics can be classified as types of statistical analysis in research, they are mostly considered analytical methods. Types of research are distinguishable by the differences in the methodology employed in analyzing, assembling, classifying, manipulating, and interpreting data. The categories may also depend on the type of data used.

Predictive Analysis

Predictive research analyzes past and present data to assess trends and predict future events. An excellent example of predictive analysis is a market survey that seeks to understand customers’ spending habits to weigh the possibility of a repeat or future purchase. Such studies assess the likelihood of an action based on trends.

Prescriptive Analysis

On the other hand, a prescriptive analysis targets likely courses of action. It’s decision-making research designed to identify optimal solutions to a problem. Its primary objective is to test or assess alternative measures.

Causal Analysis

Causal research investigates the explanation behind the events. It explores the relationship between factors for causation. Thus, researchers use causal analyses to analyze root causes, possible problems, and unknown outcomes.

Mechanistic Analysis

This type of research investigates the mechanism of action. Instead of focusing only on the causes or possible outcomes, researchers may seek an understanding of the processes involved. In such cases, they use mechanistic analyses to document, observe, or learn the mechanisms involved.

Exploratory Data Analysis

Similarly, an exploratory study is extensive with a wider scope and minimal limitations. This type of research seeks insight into the topic of interest. An exploratory researcher does not try to generalize or predict relationships. Instead, they look for information about the subject before conducting an in-depth analysis.

The Importance of Statistical Analysis in Research

Statistical analysis provides critical information for decision-making. Decision-makers require past trends and predictive assumptions to inform their actions. In most cases, the raw data is too complex to yield meaningful inferences on its own. Statistical tools for analyzing such details help save time and money, deriving only valuable information for assessment. An excellent example of statistical analysis in research is a randomized controlled trial (RCT) for a Covid-19 vaccine. You can download a sample of such a document online to understand the significance such analyses have to the stakeholders. A vaccine RCT assesses the effectiveness, side effects, duration of protection, and other benefits. Hence, statistical analysis in research is a helpful tool for understanding data.

Copyright © 2022 Statistics and Data

- Indian J Anaesth

- v.60(9); 2016 Sep

Basic statistical tools in research and data analysis

Zulfiqar Ali

Department of Anaesthesiology, Division of Neuroanaesthesiology, Sheri Kashmir Institute of Medical Sciences, Soura, Srinagar, Jammu and Kashmir, India

S Bala Bhaskar

1 Department of Anaesthesiology and Critical Care, Vijayanagar Institute of Medical Sciences, Bellary, Karnataka, India

Statistical methods involved in carrying out a study include planning, designing, collecting data, analysing, drawing meaningful interpretation and reporting of the research findings. Statistical analysis gives meaning to otherwise meaningless numbers, thereby breathing life into lifeless data. The results and inferences are precise only if proper statistical tests are used. This article will try to acquaint the reader with the basic research tools that are utilised while conducting various studies. The article covers a brief outline of the variables, an understanding of quantitative and qualitative variables and the measures of central tendency. An idea of the sample size estimation, power analysis and the statistical errors is given. Finally, there is a summary of parametric and non-parametric tests used for data analysis.

INTRODUCTION

Statistics is a branch of science that deals with the collection, organisation and analysis of data and the drawing of inferences from samples to the whole population.[1] This requires a proper design of the study, an appropriate selection of the study sample and choice of a suitable statistical test. An adequate knowledge of statistics is necessary for proper designing of an epidemiological study or a clinical trial. Improper statistical methods may result in erroneous conclusions which may lead to unethical practice.[2]

A variable is a characteristic that varies from one individual member of a population to another.[3] Variables such as height and weight are measured by some type of scale, convey quantitative information and are called quantitative variables. Sex and eye colour give qualitative information and are called qualitative variables[3] [Figure 1].

Figure 1: Classification of variables

Quantitative variables

Quantitative or numerical data are subdivided into discrete and continuous measurements. Discrete numerical data are recorded as a whole number such as 0, 1, 2, 3,… (integer), whereas continuous data can assume any value. Observations that can be counted constitute the discrete data and observations that can be measured constitute the continuous data. Examples of discrete data are number of episodes of respiratory arrests or the number of re-intubations in an intensive care unit. Similarly, examples of continuous data are the serial serum glucose levels, partial pressure of oxygen in arterial blood and the oesophageal temperature.

A hierarchical scale of increasing precision can be used for observing and recording the data which is based on categorical, ordinal, interval and ratio scales [ Figure 1 ].

Categorical or nominal variables are unordered. The data are merely classified into categories and cannot be arranged in any particular order. If only two categories exist (as in gender: male and female), the data are called dichotomous (or binary). The various causes of re-intubation in an intensive care unit (upper airway obstruction, impaired clearance of secretions, hypoxemia, hypercapnia, pulmonary oedema and neurological impairment) are examples of categorical variables.

Ordinal variables have a clear ordering between the variables. However, the ordered data may not have equal intervals. Examples are the American Society of Anesthesiologists status or Richmond agitation-sedation scale.

Interval variables are similar to an ordinal variable, except that the intervals between the values of the interval variable are equally spaced. A good example of an interval scale is the Fahrenheit degree scale used to measure temperature. With the Fahrenheit scale, the difference between 70° and 75° is equal to the difference between 80° and 85°: The units of measurement are equal throughout the full range of the scale.

Ratio scales are similar to interval scales, in that equal differences between scale values have equal quantitative meaning. However, ratio scales also have a true zero point, which gives them an additional property. For example, the system of centimetres is an example of a ratio scale. There is a true zero point and the value of 0 cm means a complete absence of length. The thyromental distance of 6 cm in an adult may be twice that of a child in whom it may be 3 cm.
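The practical difference between interval and ratio scales can be checked with a short calculation. In this sketch (the temperatures and helper names are illustrative), a ratio of two Fahrenheit readings changes when the same temperatures are expressed in Celsius, whereas the ratio of the two thyromental distances from the text stays the same when centimetres are converted to inches.

```python
def fahrenheit_to_celsius(f):
    return (f - 32.0) * 5.0 / 9.0

def cm_to_inches(cm):
    return cm / 2.54

# Interval scale: differences are meaningful, ratios are not,
# because the zero point is arbitrary.
f_ratio = 80.0 / 40.0
c_ratio = fahrenheit_to_celsius(80.0) / fahrenheit_to_celsius(40.0)

# Ratio scale: a true zero makes ratios independent of the unit.
cm_ratio = 6.0 / 3.0
inch_ratio = cm_to_inches(6.0) / cm_to_inches(3.0)

print(f_ratio, round(c_ratio, 3))      # the two ratios disagree
print(cm_ratio, round(inch_ratio, 3))  # the two ratios agree
```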

STATISTICS: DESCRIPTIVE AND INFERENTIAL STATISTICS

Descriptive statistics[4] try to describe the relationship between variables in a sample or population. Descriptive statistics provide a summary of data in the form of mean, median and mode. Inferential statistics[4] use a random sample of data taken from a population to describe and make inferences about the whole population. They are valuable when it is not possible to examine each member of an entire population. Examples of descriptive and inferential statistics are illustrated in Table 1.

Table 1: Example of descriptive and inferential statistics

Descriptive statistics

The extent to which the observations cluster around a central location is described by the central tendency and the spread towards the extremes is described by the degree of dispersion.

Measures of central tendency

The measures of central tendency are mean, median and mode.[6] Mean (or the arithmetic average) is the sum of all the scores divided by the number of scores. The mean may be influenced profoundly by extreme values. For example, the average stay of organophosphorus poisoning patients in ICU may be influenced by a single patient who stays in ICU for around 5 months because of septicaemia. The extreme values are called outliers. The formula for the mean is

$$\bar{x} = \frac{\sum x}{n}$$

where x = each observation and n = number of observations.

Median[6] is defined as the middle of a distribution in ranked data (with half of the values in the sample above and half below the median value), while mode is the most frequently occurring value in a distribution.

Range defines the spread, or variability, of a sample.[7] It is described by the minimum and maximum values of the variables. If we rank the data and then group the observations into percentiles, we get better information about the pattern of spread of the variables. In percentiles, we rank the observations into 100 equal parts. We can then describe the 25th, 50th, 75th or any other percentile. The median is the 50th percentile. The interquartile range covers the middle 50% of the observations about the median (25th-75th percentile).

Variance[7] is a measure of how spread out the distribution is. It gives an indication of how closely the individual observations cluster about the mean value. The variance of a population is defined by the following formula:

$$\sigma^2 = \frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N}$$

where $\sigma^2$ is the population variance, $\bar{X}$ is the population mean, $X_i$ is the i-th element from the population and N is the number of elements in the population. The variance of a sample is defined by a slightly different formula:

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

where $s^2$ is the sample variance, $\bar{x}$ is the sample mean, $x_i$ is the i-th element from the sample and n is the number of elements in the sample. The formula for the variance of a population has N as the denominator, whereas the formula for the variance of a sample has n − 1. The expression n − 1 is known as the degrees of freedom and is one less than the number of observations. Each observation is free to vary, except the last one, which must be a defined value. The variance is measured in squared units. To make the interpretation of the data simple and to retain the basic unit of observation, the square root of the variance is used. The square root of the variance is the standard deviation (SD).[8] The SD of a population is defined by the following formula:

$$\sigma = \sqrt{\frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N}}$$

where $\sigma$ is the population SD, $\bar{X}$ is the population mean, $X_i$ is the i-th element from the population and N is the number of elements in the population. The SD of a sample is defined by a slightly different formula:

$$s = \sqrt{\frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}}$$

where s is the sample SD, $\bar{x}$ is the sample mean, $x_i$ is the i-th element from the sample and n is the number of elements in the sample. An example of the calculation of variance and SD is illustrated in Table 2.
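The difference between the population and sample formulas is easy to see numerically. The following Python sketch implements both directly from their definitions; the eight data values are made up for illustration and are not the values of Table 2.

```python
import math

def population_variance(values):
    """Sum of squared deviations from the mean, divided by N."""
    n = len(values)
    mean = sum(values) / n
    return sum((x - mean) ** 2 for x in values) / n

def sample_variance(values):
    """Sum of squared deviations from the mean, divided by n - 1."""
    n = len(values)
    mean = sum(values) / n
    return sum((x - mean) ** 2 for x in values) / (n - 1)

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical observations

pop_var = population_variance(data)
samp_var = sample_variance(data)
pop_sd = math.sqrt(pop_var)    # SD is the square root of the variance
samp_sd = math.sqrt(samp_var)

print(pop_var, pop_sd, round(samp_var, 3), round(samp_sd, 3))
```

Dividing by n − 1 instead of N always gives the larger value, and the gap shrinks as the number of observations grows.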

Table 2: Example of mean, variance, standard deviation

Normal distribution or Gaussian distribution

Most biological variables cluster around a central value, with symmetrical positive and negative deviations about this point.[1] The standard normal distribution curve is a symmetrical, bell-shaped curve. In a normal distribution, about 68% of the scores are within 1 SD of the mean, around 95% are within 2 SDs of the mean and about 99.7% are within 3 SDs of the mean [Figure 2].

Figure 2: Normal distribution curve
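The percentages quoted for 1, 2 and 3 SDs follow from the normal cumulative distribution function. As a quick check, the fraction of a normal distribution lying within k SDs of the mean equals erf(k/√2), which Python's math module can evaluate; the helper name fraction_within is hypothetical.

```python
import math

def fraction_within(k):
    """Fraction of a normal distribution within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    print(k, round(fraction_within(k), 4))
```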

Skewed distribution

A skewed distribution is one in which the variables are distributed asymmetrically about the mean. In a negatively skewed distribution [Figure 3], the mass of the distribution is concentrated on the right of the figure, leading to a longer left tail. In a positively skewed distribution [Figure 3], the mass of the distribution is concentrated on the left of the figure, leading to a longer right tail.

Figure 3: Curves showing negatively skewed and positively skewed distribution
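The direction of skew can also be quantified. One common choice, used here as an assumption since the article does not name a specific coefficient, is the moment coefficient of skewness: the third central moment divided by the 1.5th power of the second. It is positive for a longer right tail and negative for a longer left tail. The data are made up.

```python
def skewness(values):
    """Moment coefficient of skewness: m3 / m2**1.5 (population moments)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    return m3 / m2 ** 1.5

right_tailed = [1, 2, 2, 3, 3, 3, 4, 12]     # mass on the left, long right tail
left_tailed = [-x for x in right_tailed]     # mirror image
symmetric = [1, 2, 3, 4, 5]

print(round(skewness(right_tailed), 3))
print(round(skewness(left_tailed), 3))
print(round(skewness(symmetric), 3))
```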

Inferential statistics

In inferential statistics, data are analysed from a sample to make inferences in the larger collection of the population. The purpose is to answer or test the hypotheses. A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. Hypothesis tests are thus procedures for making rational decisions about the reality of observed effects.

Probability is the measure of the likelihood that an event will occur. Probability is quantified as a number between 0 and 1 (where 0 indicates impossibility and 1 indicates certainty).

In inferential statistics, the term ‘null hypothesis’ ($H_0$, read ‘H-naught’ or ‘H-null’) denotes that there is no relationship (difference) between the population variables in question.[9]

The alternative hypothesis ($H_1$ or $H_a$) denotes that a stated relationship (difference) between the variables is expected to be true.[9]

The P value (or the calculated probability) is the probability of obtaining a result at least as extreme as the one observed, by chance alone, if the null hypothesis is true. The P value is a number between 0 and 1 and is interpreted by researchers in deciding whether to reject or retain the null hypothesis [Table 3].

Table 3: P values with interpretation

If the P value is less than the arbitrarily chosen value (known as α or the significance level), the null hypothesis ($H_0$) is rejected [Table 4]. However, if the null hypothesis ($H_0$) is incorrectly rejected, this is known as a Type I error.[11] Further details regarding alpha error, beta error and sample size calculation and factors influencing them are dealt with in another section of this issue by Das S et al.[12]

Table 4: Illustration for null hypothesis
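A small exact calculation can make the decision rule concrete. The sketch below is not taken from the article: it uses a coin-tossing example with the null hypothesis that the coin is fair, computes a two-sided P value as the probability of a result at least as far from the expected count as the one observed, and compares it with a significance level of 0.05. The function name and the numbers are hypothetical.

```python
from math import comb

def binomial_p_value(heads, tosses):
    """Two-sided P value for H0: the coin is fair (probability of heads = 0.5)."""
    observed_distance = abs(heads - tosses / 2)
    # Count all outcomes at least as far from the expected value as the observed one.
    extreme = sum(
        comb(tosses, k)
        for k in range(tosses + 1)
        if abs(k - tosses / 2) >= observed_distance
    )
    return extreme / 2 ** tosses

ALPHA = 0.05  # significance level chosen in advance

p_nine = binomial_p_value(heads=9, tosses=10)
p_seven = binomial_p_value(heads=7, tosses=10)

print(p_nine, p_nine < ALPHA)    # small P value: reject H0
print(p_seven, p_seven < ALPHA)  # large P value: retain H0
```

If the coin were in fact fair, rejecting the null hypothesis after nine heads would be a Type I error; choosing α = 0.05 caps the long-run rate of such errors at 5%.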

PARAMETRIC AND NON-PARAMETRIC TESTS

Numerical data (quantitative variables) that are normally distributed are analysed with parametric tests.[ 13 ]

Two most basic prerequisites for parametric statistical analysis are:

- The assumption of normality which specifies that the means of the sample group are normally distributed

- The assumption of equal variance which specifies that the variances of the samples and of their corresponding population are equal.

However, if the distribution of the sample is skewed towards one side or the distribution is unknown due to the small sample size, non-parametric[ 14 ] statistical techniques are used. Non-parametric tests are used to analyse ordinal and categorical data.

Parametric tests

The parametric tests assume that the data are on a quantitative (numerical) scale, with a normal distribution of the underlying population. The samples have the same variance (homogeneity of variances). The samples are randomly drawn from the population, and the observations within a group are independent of each other. The commonly used parametric tests are the Student's t -test, analysis of variance (ANOVA) and repeated measures ANOVA.

Student's t -test

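Student's t-test is used to test the null hypothesis that there is no difference between the means of the two groups. It is used in three circumstances:

- To test if a sample mean (as an estimate of a population mean) differs significantly from a given population mean (the one-sample t-test). The formula for the one-sample t-test is:

$$t = \frac{\bar{X} - \mu}{SE}$$

where $\bar{X}$ = sample mean, $\mu$ = population mean and SE = standard error of mean.

- To test if the population means estimated by two independent samples differ significantly (the unpaired t-test). The formula for the unpaired t-test is:

$$t = \frac{\bar{X}_1 - \bar{X}_2}{SE}$$

where $\bar{X}_1 - \bar{X}_2$ is the difference between the means of the two groups and SE denotes the standard error of the difference.

- To test if the population means estimated by two dependent samples differ significantly (the paired t-test). A usual setting for the paired t-test is when measurements are made on the same subjects before and after a treatment.

The formula for the paired t-test is:

$$t = \frac{\bar{d}}{SE}$$

where $\bar{d}$ is the mean difference and SE denotes the standard error of this difference.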
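The three t statistics can be sketched in Python as follows. The article does not spell out how SE is computed, so the choices here are assumptions: the sample SD divided by √n for the one-sample and paired cases, and a pooled-variance standard error (equal variances assumed) for the unpaired case. Function names and data are hypothetical, and the resulting t value would still need to be compared with the t distribution to obtain a P value.

```python
import math
import statistics

def one_sample_t(sample, population_mean):
    """t = (sample mean - population mean) / standard error of the mean."""
    se = statistics.stdev(sample) / math.sqrt(len(sample))
    return (statistics.mean(sample) - population_mean) / se

def unpaired_t(a, b):
    """Two independent samples, pooled variance (equal variances assumed)."""
    na, nb = len(a), len(b)
    pooled = (
        (na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)
    ) / (na + nb - 2)
    se = math.sqrt(pooled * (1 / na + 1 / nb))
    return (statistics.mean(a) - statistics.mean(b)) / se

def paired_t(before, after):
    """Two dependent samples: t = mean difference / standard error of the differences."""
    diffs = [y - x for x, y in zip(before, after)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se

# Hypothetical measurements
t_one = one_sample_t([5, 6, 7, 8, 9], population_mean=6)
t_unpaired = unpaired_t([5, 6, 7, 8, 9], [3, 4, 5, 6, 7])
t_paired = paired_t(before=[10, 12, 14, 16], after=[12, 13, 17, 18])

print(round(t_one, 3), round(t_unpaired, 3), round(t_paired, 3))
```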

The group variances can be compared using the F-test. The F-test is the ratio of variances (var 1/var 2). If F differs significantly from 1.0, then it is concluded that the group variances differ significantly.

Analysis of variance

Student's t-test cannot be used for comparison of three or more groups. The purpose of ANOVA is to test if there is any significant difference between the means of two or more groups.

In ANOVA, we study two variances – (a) between-group variability and (b) within-group variability. The within-group variability (error variance) is the variation that cannot be accounted for in the study design. It is based on random differences present in our samples.

However, the between-group variability (or effect variance) is the result of our treatment. These two estimates of variances are compared using the F-test.

A simplified formula for the F statistic is:

$$ F = \frac{MS_b}{MS_w} $$

where MS b is the mean squares between the groups and MS w is the mean squares within groups.
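As a worked illustration of the ratio of mean squares, the following sketch computes the F statistic for a one-way ANOVA from raw group data. The function name and the sample numbers are made up for the example.

```python
from statistics import mean


def anova_f(groups):
    """Return F = MS_b / MS_w for a list of groups (one-way ANOVA)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    # Between-group sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within


print(anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

A large F means the group means differ by more than the within-group scatter would suggest; the value is then referred to the F distribution with (k − 1, N − k) degrees of freedom.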

Repeated measures analysis of variance

As with ANOVA, repeated measures ANOVA analyses the equality of means of three or more groups. However, a repeated measure ANOVA is used when all variables of a sample are measured under different conditions or at different points in time.

As the variables are measured from a sample at different points of time, the measurement of the dependent variable is repeated. Using a standard ANOVA in this case is not appropriate because it fails to model the correlation between the repeated measures: The data violate the ANOVA assumption of independence. Hence, in the measurement of repeated dependent variables, repeated measures ANOVA should be used.

Non-parametric tests

When the assumptions of normality are not met, and the sample means are not normally distributed, parametric tests can lead to erroneous results. Non-parametric tests (distribution-free tests) are used in such situations as they do not require the normality assumption.[ 15 ] Non-parametric tests may fail to detect a significant difference when compared with a parametric test. That is, they usually have less power.

As is done for the parametric tests, the test statistic is compared with known values for the sampling distribution of that statistic and the null hypothesis is accepted or rejected. The types of non-parametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 5.

Analogue of parametric and non-parametric tests

Median test for one sample: The sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample. These tests examine whether one instance of sample data is greater or smaller than the median reference value.

The sign test examines a hypothesis about the median θ0 of a population. It tests the null hypothesis H0: θ = θ0. When the observed value (Xi) is greater than the reference value (θ0), it is marked with a + sign. If the observed value is smaller than the reference value, it is marked with a − sign. If the observed value is equal to the reference value (θ0), it is eliminated from the sample.

If the null hypothesis is true, the numbers of + signs and − signs are expected to be approximately equal.

The sign test ignores the actual values of the data and only uses + or − signs. Therefore, it is useful when it is difficult to measure the values.
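The sign test procedure can be sketched as follows. The two-sided P value here is computed from the Binomial(n, 1/2) distribution of the number of + signs, which is the usual way to evaluate the test but is not stated in the text; the function name and the data are illustrative.

```python
from math import comb


def sign_test(sample, theta0):
    """Two-sided sign test of H0: median = theta0.

    Returns (n_plus, n_minus, p_value).
    """
    n_plus = sum(1 for x in sample if x > theta0)
    n_minus = sum(1 for x in sample if x < theta0)
    n = n_plus + n_minus  # observations equal to theta0 are dropped
    k = min(n_plus, n_minus)
    # Under H0 the number of + signs follows Binomial(n, 1/2).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return n_plus, n_minus, min(1.0, 2 * tail)


print(sign_test([12, 15, 9, 14, 10, 16, 13, 10], theta0=10))
```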

Wilcoxon's signed rank test

A major limitation of the sign test is that we lose the quantitative information of the given data and merely use the + or − signs. Wilcoxon's signed rank test not only examines the observed values in comparison with θ0 but also takes into consideration the relative sizes, adding more statistical power to the test. As in the sign test, if there is an observed value that is equal to the reference value θ0, this observed value is eliminated from the sample.

Wilcoxon's rank sum test ranks all data points in order, calculates the rank sum of each sample and compares the difference in the rank sums.
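To show how the signed rank test uses the sizes of the deviations as well as their signs, the sketch below ranks the absolute differences from θ0 and sums the ranks separately for positive and negative deviations. Tied absolute values receive their average rank, which is a common convention assumed here; the function name is hypothetical and the P value lookup is omitted.

```python
def signed_rank_statistic(sample, theta0):
    """Return (W_plus, W_minus): rank sums of positive and negative deviations."""
    diffs = [x - theta0 for x in sample if x != theta0]  # drop values equal to theta0
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over a run of tied absolute values.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


print(signed_rank_statistic([12, 15, 9, 14, 10, 17], theta0=10))
```

Under the null hypothesis the two rank sums should be similar; a large imbalance suggests the median differs from θ0.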

Mann-Whitney test

The Mann–Whitney test is used to test the null hypothesis that two samples have the same median or, alternatively, whether observations in one sample tend to be larger than observations in the other.

The Mann–Whitney test compares all data (xi) belonging to the X group and all data (yi) belonging to the Y group and calculates the probability of xi being greater than yi: P (xi > yi). The null hypothesis states that P (xi > yi) = P (xi < yi) = 1/2 while the alternative hypothesis states that P (xi > yi) ≠ 1/2.
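The pairwise comparison behind the Mann–Whitney test can be written directly. The sketch below counts the pairs in which xi exceeds yi (ties counted as one half, a common convention assumed here) and divides by the number of pairs to estimate P (xi > yi). The function name and data are illustrative.

```python
def mann_whitney_u(x, y):
    """Return (U, p_hat): U counts pairs with xi > yi, p_hat estimates P(xi > yi)."""
    u = 0.0
    for xi in x:
        for yi in y:
            if xi > yi:
                u += 1.0
            elif xi == yi:
                u += 0.5  # ties contribute one half
    return u, u / (len(x) * len(y))


u, p_hat = mann_whitney_u([3, 5, 7], [1, 2, 6])
print(u, p_hat)
```

An estimate close to 1/2 is consistent with the null hypothesis; values near 0 or 1 indicate that one group tends to have larger observations.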

Kolmogorov-Smirnov test

The two-sample Kolmogorov-Smirnov (KS) test was designed as a generic method to test whether two random samples are drawn from the same distribution. The null hypothesis of the KS test is that both distributions are identical. The statistic of the KS test is a distance between the two empirical distributions, computed as the maximum absolute difference between their cumulative curves.
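The KS statistic described above, the maximum absolute difference between the two empirical cumulative curves, can be computed as in this minimal sketch. Only the statistic is shown; converting it to a P value is left out, and the function name is hypothetical.

```python
from bisect import bisect_right


def ks_statistic(a, b):
    """Maximum absolute difference between the empirical CDFs of two samples."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    d = 0.0
    # The empirical CDFs only change at observed values, so checking those is enough.
    for v in a_sorted + b_sorted:
        cdf_a = bisect_right(a_sorted, v) / len(a_sorted)
        cdf_b = bisect_right(b_sorted, v) / len(b_sorted)
        d = max(d, abs(cdf_a - cdf_b))
    return d


print(ks_statistic([1, 2, 3, 4], [3, 4, 5, 6]))
```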

Kruskal-Wallis test

The Kruskal–Wallis test is a non-parametric test to analyse the variance.[ 14 ] It analyses if there is any difference in the median values of three or more independent samples. The data values are ranked in an increasing order, and the rank sums calculated followed by calculation of the test statistic.
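The ranking and rank-sum steps can be sketched as below. The test statistic uses the standard form H = 12 / (N(N + 1)) · Σ R_j² / n_j − 3(N + 1), where R_j is the rank sum and n_j the size of group j; this formula is not given in the text, and no tie correction is applied in this sketch.

```python
def average_ranks(values):
    """Rank values in increasing order, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """H statistic (no tie correction) computed from the rank sum of each group."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)


print(kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```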

Jonckheere test

In contrast to the Kruskal–Wallis test, the Jonckheere test assumes an a priori ordering of the groups, which gives it more statistical power than the Kruskal–Wallis test.[ 14 ]

Friedman test

The Friedman test is a non-parametric test for testing the difference between several related samples. The Friedman test is an alternative for repeated measures ANOVAs which is used when the same parameter has been measured under different conditions on the same subjects.[ 13 ]

Tests to analyse the categorical data

Chi-square test, Fisher's exact test and McNemar's test are used to analyse the categorical or nominal variables. The Chi-square test compares the frequencies and tests whether the observed data differ significantly from that of the expected data if there were no differences between groups (i.e., the null hypothesis). It is calculated by the sum of the squared difference between observed ( O ) and the expected ( E ) data (or the deviation, d ) divided by the expected data by the following formula:

$$ \chi^2 = \sum \frac{(O - E)^2}{E} $$

A Yates correction factor is used when the sample size is small. Fisher's exact test is used to determine if there are non-random associations between two categorical variables. It does not assume random sampling, and instead of referring a calculated statistic to a sampling distribution, it calculates an exact probability. McNemar's test is used for paired nominal data. It is applied to a 2 × 2 table with paired-dependent samples. It is used to determine whether the row and column frequencies are equal (that is, whether there is ‘marginal homogeneity’). The null hypothesis is that the paired proportions are equal. The Mantel-Haenszel Chi-square test is a multivariate test as it analyses multiple grouping variables. It stratifies according to the nominated confounding variables and identifies any that affects the primary outcome variable. If the outcome variable is dichotomous, then logistic regression is used.
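As a small illustration of the Chi-square calculation, the sketch below derives the expected counts from the row and column totals of a contingency table and sums (O − E)² / E over all cells. A McNemar statistic in its common uncorrected form, (b − c)² / (b + c) for the two discordant cells, is included as an assumption since the text does not give its formula. Function names and counts are made up; no Yates correction is applied.

```python
def chi_square(observed):
    """Pearson chi-square for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / total  # expected count under H0
            stat += (o - e) ** 2 / e
    return stat


def mcnemar(b, c):
    """Uncorrected McNemar statistic from the discordant cells of a paired 2 x 2 table."""
    return (b - c) ** 2 / (b + c)


print(chi_square([[10, 20], [20, 10]]))
print(mcnemar(10, 5))
```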

SOFTWARES AVAILABLE FOR STATISTICS, SAMPLE SIZE CALCULATION AND POWER ANALYSIS

Numerous statistical software systems are currently available. The commonly used software systems are Statistical Package for the Social Sciences (SPSS, manufactured by IBM Corporation), Statistical Analysis System (SAS, developed by SAS Institute, North Carolina, United States of America), R (designed by Ross Ihaka and Robert Gentleman from the R Core Team), Minitab (developed by Minitab Inc), Stata (developed by StataCorp) and MS Excel (developed by Microsoft).

There are a number of web resources which are related to statistical power analyses. A few are:

- StatPages.net – provides links to a number of online power calculators

- G-Power – provides a downloadable power analysis program that runs under DOS

- Power analysis for ANOVA designs – an interactive site that calculates power or sample size needed to attain a given power for one effect in a factorial ANOVA design

- SPSS makes a program called SamplePower. It gives an output of a complete report on the computer screen which can be cut and pasted into another document.

It is important that a researcher knows the concepts of the basic statistical methods used for conduct of a research study. This will help to conduct an appropriately well-designed study leading to valid and reliable results. Inappropriate use of statistical techniques may lead to faulty conclusions, inducing errors and undermining the significance of the article. Bad statistics may lead to bad research, and bad research may lead to unethical practice. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge about the basic statistical methods will go a long way in improving the research designs and producing quality medical research which can be utilised for formulating the evidence-based guidelines.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.

Unit of Analysis: Definition, Types & Examples

The unit of analysis is the people or things whose qualities will be measured. The unit of analysis is an essential part of a research project. It’s the main thing that a researcher looks at in their research.

A unit of analysis is the object about which you hope to have something to say at the end of your analysis, perhaps the major subject of your research.

In this blog post, we will explore and clarify the concept of the “unit of analysis,” including its definition, various types, and a concluding perspective on its significance.

What is a unit of analysis?

A unit of analysis is the thing you want to discuss after your research, probably what you would regard to be the primary emphasis of your research.

The unit of analysis is the primary topic or object on which the researcher plans to comment. The research question plays a significant role in determining it. The “who” or “what” that the researcher is interested in investigating is, to put it simply, the unit of analysis.

In his book Man, the State, and War (first published in 1959 and reissued in 2001), Waltz distinguishes three levels of analysis: the individual, the state, and the international system.

Understanding the reasoning behind the unit of analysis is vital. The likelihood of fruitful research increases if the rationale is understood. Examples include an individual, a group, an organization, a nation, or a social phenomenon.

Types of “unit of analysis”

In business research, there are almost unlimited types of possible analytical units. Data analytics and data analysis are closely related processes that involve extracting insights from data to make informed decisions. Even though the most typical unit of analysis is the individual, many research questions can be more precisely answered by looking at other types of units. The main types are outlined below.

1. Individual Level

The most prevalent unit of analysis in business research is the individual. These are the primary analytical units. The researcher may be interested in looking into:

- Employee actions

- Perceptions

- Attitudes or opinions.

Employees may come from wealthy or low-income families, as well as from rural or metropolitan areas.

A researcher might investigate whether personnel from rural areas are more likely to arrive on time than those from urban areas. Additionally, the researcher can check whether workers from rural areas who come from poorer families are more likely to arrive on time than those from rural areas who come from wealthy families.

Each time, the individual (employee) serving as the analytical unit is discussed and explained. Employee analysis as a unit of analysis can shed light on issues in business, including customer and human resource behavior.

For example, employee work satisfaction and consumer purchasing patterns impact business, making research into these topics vital.

Psychologists typically concentrate on research on individuals. This research may significantly aid a firm’s success, as individuals’ knowledge and experiences reveal vital information. Thus, individuals are heavily utilized in business research.

2. Aggregates Level

Social science research does not always focus on individuals. By combining individuals’ reactions, social scientists frequently describe and explain social interactions, communities, and groupings. Additionally, they research collectives of individuals, including communities, groups, and countries.

Aggregate levels can be divided into Groups (groups with an ad hoc structure) and Organizations (groups with a formal organization).

The following levels of the unit of analysis are made up of groups of people. A group is defined as two or more individuals who interact, share common traits, and feel connected to one another.

Many definitions also emphasize interdependence or objective resemblance (Turner, 1982; Platow, Grace, & Smithson, 2011) and those who identify as group members (Reicher, 1982).

As a result, society and gangs serve as examples of groups. According to Webster’s Online Dictionary (2012), they can resemble some clubs but be far less formal.

Siblings, identical twins, family, and small group functioning are examples of studies with many units of analysis.

In such circumstances, a whole group might be compared to another. Families, gender-specific groups, pals, Facebook groups, and work departments can all be groups.

By analyzing groups, researchers can learn how they form and how age, experience, class, and gender affect them. When aggregated, an individual’s data describes the group they belong to.
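The difference between treating the individual and the group as the unit of analysis can be illustrated with a few records. In this sketch the employee records, field names, and helper function are all hypothetical; it simply collapses individual-level data into one value per group.

```python
from collections import defaultdict

# Hypothetical individual-level records: one row per employee.
employees = [
    {"name": "A", "department": "sales", "on_time": True},
    {"name": "B", "department": "sales", "on_time": False},
    {"name": "C", "department": "support", "on_time": True},
    {"name": "D", "department": "support", "on_time": True},
]


def aggregate_by(records, key, field):
    """Collapse individual records into one value per group: the share where field is true."""
    counts = defaultdict(lambda: [0, 0])  # group -> [true_count, total]
    for record in records:
        counts[record[key]][0] += bool(record[field])
        counts[record[key]][1] += 1
    return {group: hits / total for group, (hits, total) in counts.items()}


# Unit of analysis = the department, described by its members' aggregated data.
print(aggregate_by(employees, "department", "on_time"))
```

With the individual as the unit of analysis there are four observations; after aggregation there are two, one per department.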

Sociologists study groups, as do economists and businesspeople, who form teams to complete projects. They continually research groups and group behavior.

Organizations

The next level of the unit of analysis is organizations, which are groups of people set up formally. Organizations could include businesses, religious groups, parts of the military, colleges, academic departments, supermarkets, business groups, and so on.

Social organization includes things like sexual composition, styles of leadership, organizational structure, systems of communication, and so on (Susan & Wheelan, 2005; Chapais & Berman, 2004). Lim, Putnam, and Robert (2010) say that well-known social organizations and religious institutions are among them.

Moody, White, and Douglas (2003) say social organizations are hierarchical. Hasmath, Hildebrandt, and Hsu (2016) say social organizations can take different forms. For example, they can be made by institutions like schools or governments.

Sociology, economics, political science, psychology, management, and organizational communication are some social science fields that study organizations (Douma & Schreuder, 2013).

Organizations are different from groups in that they are more formal and have better organization. A researcher might want to study a company to generalize its results to the whole population of companies.

One way to look at an organization is by the number of employees, the net annual revenue, the net assets, the number of projects, and so on. A researcher might want to know if big companies hire more or fewer women than small companies.

Organization researchers might be interested in how companies like Reliance, Amazon, and HCL affect our social and economic lives. People who work in business often study business organizations.

3. Social Level

The social level has two types:

Social Artifacts Level

Things are studied alongside humans. Social artifacts are human-made objects from diverse communities. Social artifacts are items, representations, assemblages, institutions, knowledge, and conceptual frameworks used to convey, interpret, or achieve a goal (IGI Global, 2017).

Cultural artifacts are anything humans generate that reveals their culture (Watts, 1981).

Social artifacts include books, newspapers, advertising, websites, technical devices, films, photographs, paintings, clothes, poems, jokes, students’ late excuses, scientific breakthroughs, furniture, machines, structures, and so on. The list is practically endless.

Humans build social objects for social behavior. As people or groups suggest a population in business research, each social object implies a class of items.

Same-class goods include business books, magazines, articles, and case studies. A business magazine’s quantity of articles, frequency, price, content, and editor in a research study may be characterized.

Then, a linked magazine’s population might be evaluated for description and explanation. Marx W. Wartofsky (1979) defined artifacts as primary artifacts utilized in production (like a camera), secondary artifacts connected to primary artifacts (like a camera user manual), and tertiary objects related to representations of secondary artifacts (like a camera user-manual sculpture).

The scientific study of an artifact reveals its creators and users. The artifact researcher may be interested in advertising, marketing, distribution, buying, etc.

Social Interaction Level

Social artifacts include social interactions, such as:

- Eye contact with a coworker

- Buying something in a store

- Friendship decisions

- Road accidents

- Airline hijackings

- Professional counseling

- WhatsApp messaging

A researcher might study youthful employees’ smartphone addictions. Some addictions may involve social media, while others involve online games and movies that inhibit connection.

Smartphone addictions are examined as a societal phenomenon. Observation units are probably individuals (employees).

Anthropologists typically study social artifacts. They may be interested in the social order. A researcher who examines social interactions may be interested in how broader societal structures and factors impact daily behavior, festivals, and weddings.

Even though there is no perfect way to do research, it is generally agreed that researchers should try to find a unit of analysis that keeps the context needed to make sense of the data.

Researchers should consider the details of their research when deciding on the unit of analysis.

They should remember that consistent use of these units throughout the analysis process (from coding to developing categories and themes to interpreting the data) is essential to gaining insight from qualitative data and protecting the reliability of the results.

Thematic Analysis: A Step by Step Guide

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

What is Thematic Analysis?

Thematic analysis is a qualitative research method used to identify, analyze, and interpret patterns of shared meaning (themes) within a given data set, which can be in the form of interviews, focus group discussions, surveys, or other textual data.

Thematic analysis is a useful method for research seeking to understand people’s views, opinions, knowledge, experiences, or values from qualitative data.

This method is widely used in various fields, including psychology, sociology, and health sciences.

Thematic analysis minimally organizes and describes a data set in rich detail. Often, though, it goes further than this and interprets aspects of the research topic.

Key aspects of Thematic Analysis include:

- Flexibility: It can be adapted to suit the needs of various studies, providing a rich and detailed account of the data.

- Coding: The process involves assigning labels or codes to specific segments of the data that capture a single idea or concept relevant to the research question.

- Themes: Themes represent a broader level of analysis, encompassing multiple codes that share a common underlying meaning or pattern. They provide a more abstract and interpretive understanding of the data.

- Iterative process: Thematic analysis is a recursive process that involves constantly moving back and forth between the coded extracts, the entire data set, and the thematic analysis being produced.

- Interpretation: The researcher interprets the identified themes to make sense of the data and draw meaningful conclusions.

It’s important to note that the types of thematic analysis are not mutually exclusive, and researchers may adopt elements from different approaches depending on their research questions, goals, and epistemological stance.

The choice of approach should be guided by the research aims, the nature of the data, and the philosophical assumptions underpinning the study.

| Feature | Coding Reliability TA | Codebook TA | Reflexive TA |
|---|---|---|---|
| Conceptualization of themes | Conceptualized as topic summaries of the data | Typically conceptualized as topic summaries | Conceptualized as patterns of shared meaning that are underpinned by a central organizing concept |
| Coding process | Involves using a coding frame or codebook, which may be predetermined or generated from the data, to find evidence for themes or allocate data to predefined topics. Ideally, two or more researchers apply the coding frame separately to the data to avoid contamination | Typically involves early theme development and the use of a codebook and structured approach to coding | Involves an active process in which codes are developed from the data through the analysis. The researcher’s subjectivity shapes the coding and theme development process |
| Research values | Emphasizes securing the reliability and accuracy of data coding, reflecting (post)positivist research values. Prioritizes minimizing subjectivity and maximizing objectivity in the coding process | Combines elements of both coding reliability and reflexive TA, but qualitative values tend to predominate. For example, the “accuracy” or “reliability” of coding is not a primary concern | Emphasizes the role of the researcher in knowledge construction and acknowledges that their subjectivity shapes the research process and outcomes |
| Typical use | Often used in research where minimizing subjectivity and maximizing objectivity in the coding process are highly valued | Commonly employed in applied research, particularly when information needs are predetermined, deadlines are tight, and research teams are large and may include qualitative novices. Pragmatic concerns often drive its use | Well-suited for exploring complex research issues. Often used in research where the researcher’s active role in knowledge construction is acknowledged and valued. Can be used to analyze a wide range of data, including interview transcripts, focus groups, and policy documents |
| Timing of theme development | Themes are often predetermined or generated early in the analysis process, either prior to data analysis or following some familiarization with the data | Themes are typically developed early in the analysis process | Themes are developed later in the analytic process, emerging from the coded data |
| Researcher subjectivity | The researcher’s subjectivity is minimized, aiming for objectivity in coding | The researcher’s subjectivity is acknowledged, though structured coding methods are used | The researcher’s subjectivity is viewed as a valuable resource in the analytic process and is considered to inevitably shape the research findings |

1. Coding Reliability Thematic Analysis

Coding reliability TA emphasizes using coding techniques to achieve reliable and accurate data coding, which reflects (post)positivist research values.

This approach emphasizes the reliability and replicability of the coding process. It involves multiple coders independently coding the data using a predetermined codebook.

The goal is to achieve a high level of agreement among the coders, which is often measured using inter-rater reliability metrics.

This approach often involves a coding frame or codebook determined in advance or generated after familiarization with the data.

In this type of TA, two or more researchers apply a fixed coding frame to the data, ideally working separately.

Some researchers even suggest that at least some coders should be unaware of the research question or area of study to prevent bias in the coding process.

Statistical tests are used to assess the level of agreement between coders, or the reliability of coding. Any differences in coding between researchers are resolved through consensus.
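One widely used agreement measure is Cohen's kappa, which corrects the raw agreement between two coders for the agreement expected by chance. The text does not name a specific metric, so treating kappa as the measure is an assumption here; the code labels below are invented for illustration.

```python
from collections import Counter


def cohen_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labelled the same data segments.

    Undefined (division by zero) when chance agreement is 1, for example
    when both coders use a single identical label throughout.
    """
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: probability both coders pick the same code independently.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / n ** 2
    return (observed - expected) / (1 - expected)


coder_1 = ["stress", "stress", "support", "support"]
coder_2 = ["stress", "support", "support", "support"]
print(cohen_kappa(coder_1, coder_2))
```

A kappa of 1 indicates perfect agreement and 0 indicates agreement no better than chance; segments where the coders disagree are the ones to take to a consensus discussion.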

This approach is more suitable for research questions that require a more structured and reliable coding process, such as in content analysis or when comparing themes across different data sets.

2. Codebook Thematic Analysis

Codebook TA, such as template, framework, and matrix analysis, combines elements of coding reliability and reflexive TA.

Codebook TA, while employing structured coding methods like those used in coding reliability TA, generally prioritizes qualitative research values, such as reflexivity.

In this approach, the researcher develops a codebook based on their initial engagement with the data. The codebook contains a list of codes, their definitions, and examples from the data.

The codebook is then used to systematically code the entire data set. This approach allows for a more detailed and nuanced analysis of the data, as the codebook can be refined and expanded throughout the coding process.

It is particularly useful when the research aims to provide a comprehensive description of the data set.

Codebook TA is often chosen for pragmatic reasons in applied research, particularly when there are predetermined information needs, strict deadlines, and large teams with varying levels of qualitative research experience.

The use of a codebook in this context helps to map the developing analysis, which is thought to improve teamwork, efficiency, and the speed of output delivery.

3. Reflexive Thematic Analysis

This approach emphasizes the role of the researcher in the analysis process. It acknowledges that the researcher’s subjectivity, theoretical assumptions, and interpretative framework shape the identification and interpretation of themes.

In reflexive TA, analysis starts with coding after data familiarization. Unlike other TA approaches, there is no codebook or coding frame. Instead, researchers develop codes as they work through the data.

As their understanding grows, codes can change to reflect new insights—for example, they might be renamed, combined with other codes, split into multiple codes, or have their boundaries redrawn.

If multiple researchers are involved, differences in coding are explored to enhance understanding, not to reach a consensus. The finalized coding is always open to new insights and coding.

Reflexive thematic analysis involves a more organic and iterative process of coding and theme development. The researcher continuously reflects on their role in the research process and how their own experiences and perspectives might influence the analysis.

This approach is particularly useful for exploratory research questions and when the researcher aims to provide a rich and nuanced interpretation of the data.

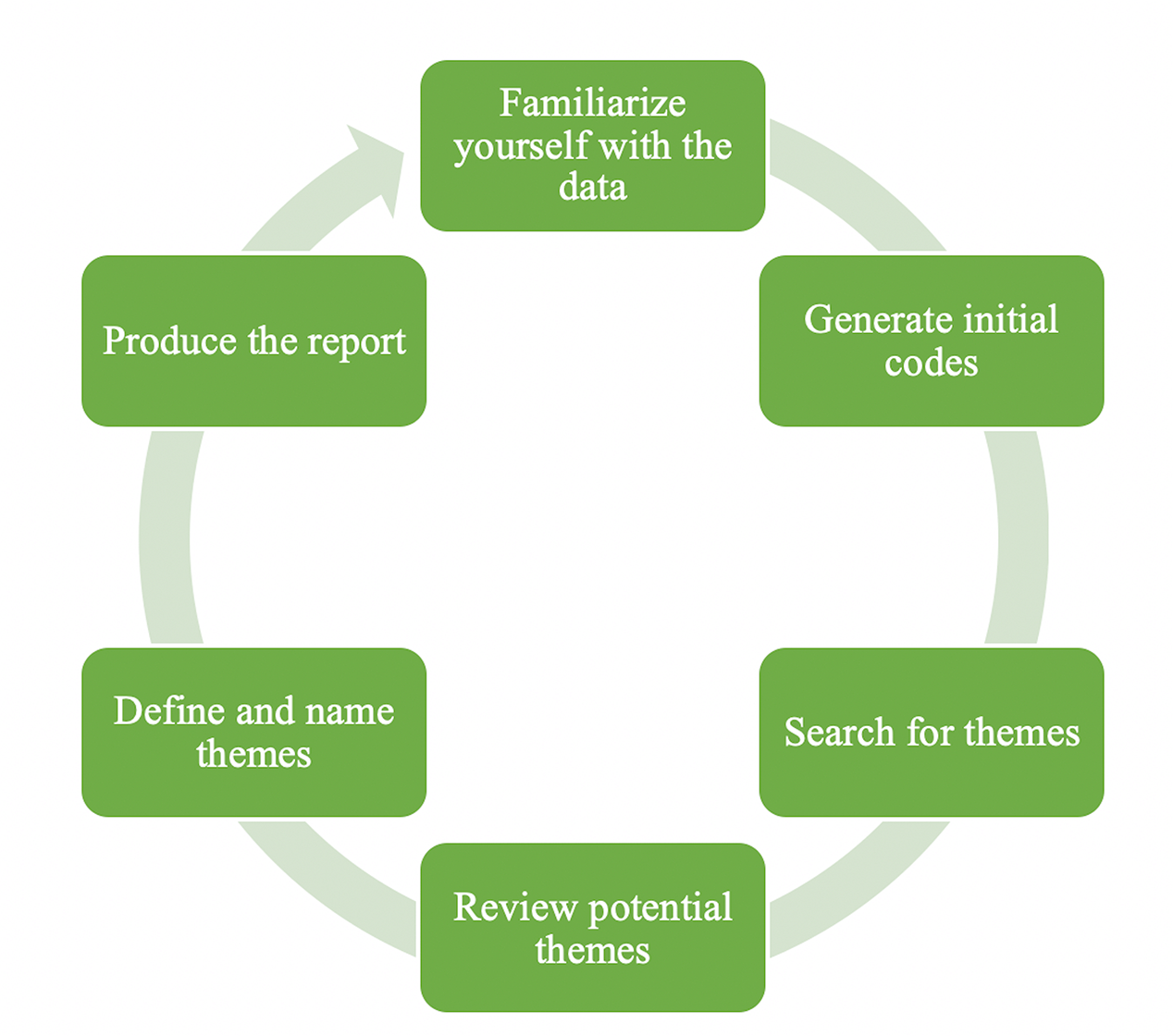

Six Steps Of Thematic Analysis

The process is characterized by a recursive movement between the different phases, rather than a strict linear progression.

This means that researchers might revisit earlier phases as their understanding of the data evolves, constantly refining their analysis.

For instance, during the reviewing and developing themes phase, researchers may realize that their initial codes don’t effectively capture the nuances of the data and might need to return to the coding phase.

This back-and-forth movement continues throughout the analysis, ensuring a thorough and evolving understanding of the data.

Step 1: Familiarization With the Data

Familiarization is crucial, as it helps researchers figure out the type (and number) of themes that might emerge from the data.

Familiarization involves immersing yourself in the data by reading and rereading textual data items, such as interview transcripts or survey responses.

You should read through the entire data set at least once, and possibly multiple times, until you feel intimately familiar with its content.

- Read and re-read the data: Read through the entire data set (e.g., interview transcripts, survey responses, or field notes) multiple times to gain a comprehensive understanding of the data’s breadth and depth. This helps you develop a holistic sense of the participants’ experiences, perspectives, and the overall narrative of the data.

- Listen to the audio recordings of the interviews: This helps to pick up on tone, emphasis, and emotional responses that may not be evident in the written transcripts. For instance, you might note a participant’s hesitation or excitement when discussing a particular topic. This is an important step if you didn’t collect the data or transcribe it yourself.

- Take notes on initial ideas and observations: Note-making at this stage should be observational and casual, not systematic and inclusive, as you aren’t coding yet. Think of the notes as memory aids and triggers for later coding and analysis. They are primarily for you, although they might be shared with research team members.

- Immerse yourself in the data to gain a deep understanding of its content: It’s not about just absorbing surface meaning like you would with a novel, but about thinking about what the data mean.

By the end of the familiarization step, the researcher should have a good grasp of the overall content of the data, the key issues and experiences discussed by the participants, and any initial patterns or themes that emerge.

This deep engagement with the data sets the stage for the subsequent steps of thematic analysis, where the researcher will systematically code and analyze the data to identify and interpret the central themes.

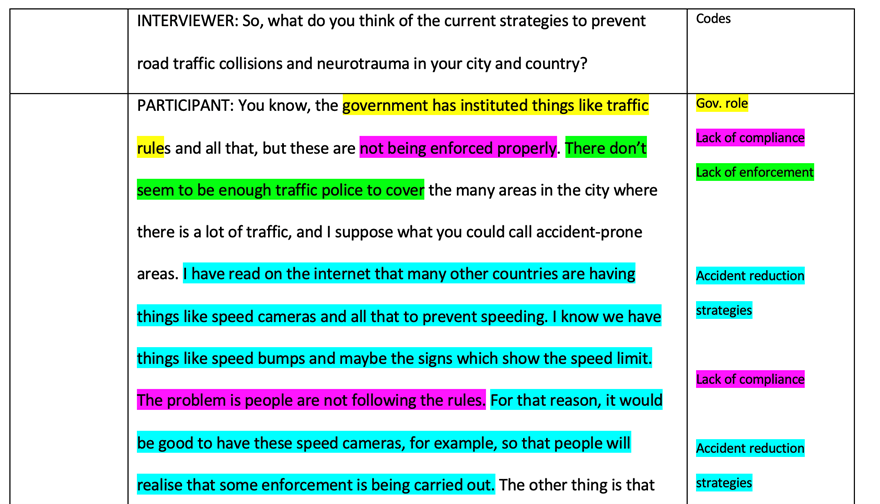

Step 2: Generating Initial Codes

Codes are concise labels or descriptions assigned to segments of the data that capture a specific feature or meaning relevant to the research question.

The process of qualitative coding helps the researcher organize and reduce the data into manageable chunks, making it easier to identify patterns and themes relevant to the research question.

Think of it this way: If your analysis is a house, themes are the walls and roof, while codes are the individual bricks and tiles.

Coding is an iterative process, with researchers refining and revising their codes as their understanding of the data evolves.

The ultimate goal is to develop a coherent and meaningful coding scheme that captures the richness and complexity of the participants’ experiences and helps answer the research questions.

Coding can be done manually (on printed transcripts with a pen or highlighter) or with software (e.g., NVivo, MAXQDA, or ATLAS.ti).

Decide On Your Coding Approach

- Will you use predefined deductive codes (based on theory or prior research), or let codes emerge from the data (inductive coding)?

- Will a piece of data have one code or multiple?

- Will you code everything or selectively? Broader research questions may warrant coding more comprehensively.

If you decide not to code everything, it’s crucial to:

- Have clear criteria for what you will and won’t code

- Be transparent about your selection process in research reports

- Remain open to revisiting uncoded data later in analysis

Do A First Round Of Coding

- Go through the data and assign initial codes to chunks that stand out

- Create a code name (a word or short phrase) that captures the essence of each chunk

- Keep a codebook – a list of your codes with descriptions or definitions

- Be open to adding, revising or combining codes as you go

After generating your first code, compare each new data extract against your existing codes to see whether one of them applies or a new code is needed.
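If you keep your codebook in a script rather than in dedicated software, the bookkeeping described above could be sketched roughly as follows. This is only an illustration: the `Codebook` class, its method names, and the sample extracts are all hypothetical, not part of any qualitative analysis package.

```python
from dataclasses import dataclass, field


@dataclass
class Codebook:
    """A list of codes with definitions, plus the extracts assigned to each code."""
    definitions: dict[str, str] = field(default_factory=dict)
    extracts: dict[str, list[str]] = field(default_factory=dict)

    def apply(self, code: str, extract: str, definition: str = "") -> bool:
        """Assign an extract to a code; return True if the code was newly created."""
        is_new = code not in self.definitions
        if is_new:
            self.definitions[code] = definition
            self.extracts[code] = []
        self.extracts[code].append(extract)
        return is_new

    def merge(self, old: str, into: str) -> None:
        """Combine two codes by moving the extracts of `old` under `into`."""
        self.extracts[into].extend(self.extracts.pop(old))
        del self.definitions[old]


# Invented extracts, for illustration only.
book = Codebook()
created_first = book.apply(
    "financial challenges",
    "I wasn't sure I could cover tuition.",
    definition="Worries about paying for college",
)
created_second = book.apply("money worries", "Rent was always on my mind.")

# On reflection the two codes capture the same idea, so combine them.
book.merge("money worries", into="financial challenges")
```

Keeping `merge` as an explicit operation mirrors the advice to stay open to revising or combining codes as you go.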

Coding can be done at two levels of meaning:

- Semantic: Provides a concise summary of a portion of data, staying close to the content and the participant’s meaning. For example, “Fear/anxiety about people’s reactions to his sexuality.”

- Latent: Goes beyond the participant’s meaning to provide a conceptual interpretation of the data. For example, “Coming out imperative” interprets the meaning behind a participant’s statement.

Most codes will be a mix of descriptive (semantic) and conceptual (latent). Novice coders tend to generate more descriptive codes initially, developing more conceptual approaches with experience.

This step ends when:

- All data is fully coded.

- Data relevant to each code has been collated.

- You have enough codes to capture the data’s diversity and patterns of meaning, with most codes appearing across multiple data items.

The number of codes you generate will depend on your topic, data set, and coding precision.
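As a rough illustration of these end-of-step checks, the sketch below collates extracts by code and flags codes that appear in only one data item. The tuple layout `(data item, code, extract)` and the sample data are assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical coded segments: (data item ID, code, extract).
coded_segments = [
    ("interview_01", "financial challenges", "I wasn't sure I could cover tuition."),
    ("interview_02", "financial challenges", "Books alone cost more than I expected."),
    ("interview_02", "working part-time", "I work evenings at a cafe."),
    ("interview_03", "working part-time", "My shifts clash with lectures."),
    ("interview_03", "scholarships", "The grant is why I'm here."),
]

extracts_by_code = defaultdict(list)  # code -> all extracts given that code
items_by_code = defaultdict(set)      # code -> data items in which the code appears

for item_id, code, extract in coded_segments:
    extracts_by_code[code].append(extract)
    items_by_code[code].add(item_id)

# Codes seen in only one data item may deserve a second look.
single_item_codes = sorted(
    code for code, items in items_by_code.items() if len(items) == 1
)
print(single_item_codes)  # ['scholarships']
```

A code that appears in a single data item is not necessarily wrong. The count is only a prompt to revisit it.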

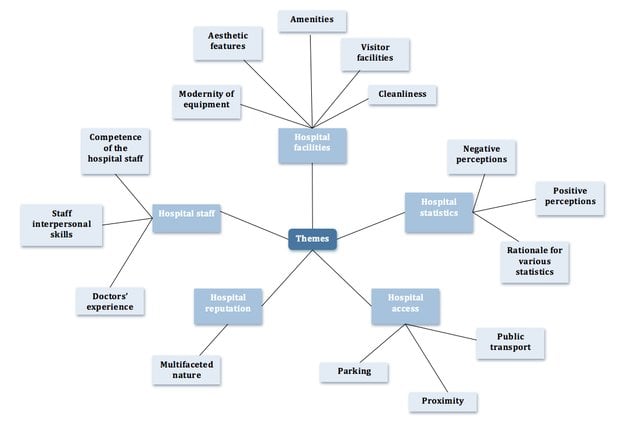

Step 3: Searching for Themes

Searching for themes begins after all data has been initially coded and collated, resulting in a comprehensive list of codes identified across the data set.

This step involves shifting from the specific, granular codes to a broader, more conceptual level of analysis.

Thematic analysis is not about “discovering” themes that already exist in the data, but rather actively constructing or generating themes through a careful and iterative process of examination and interpretation.

1. Collating codes into potential themes

The process of collating codes into potential themes involves grouping codes that share a unifying feature or represent a coherent and meaningful pattern in the data.

The researcher looks for patterns, similarities, and connections among the codes to develop overarching themes that capture the essence of the data.

By the end of this step, the researcher will have a collection of candidate themes and sub-themes, along with their associated data extracts.

However, these themes are still provisional and will be refined in the next step of reviewing the themes.

The searching for themes step helps the researcher move from a granular, code-level analysis to a more conceptual, theme-level understanding of the data.

This process is similar to sculpting, where the researcher shapes the “raw” data into a meaningful analysis.

In practice, collating codes into potential themes involves the following:

- Review the list of initial codes and their associated data extracts

- Look for codes that seem to share a common idea or concept

- Group related codes together to form potential themes

- Some codes may form main themes, while others may be sub-themes or may not fit into any theme

Thematic maps can help visualize the relationship between codes and themes. These visual aids provide a structured representation of the emerging patterns and connections within the data, aiding in understanding the significance of each theme and its contribution to the overall research question.

Example : Studying first-generation college students, the researcher might notice that the codes “financial challenges,” “working part-time,” and “scholarships” all relate to the broader theme of “Financial Obstacles and Support.”
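The grouping in this example can be recorded as a simple mapping from candidate themes to codes. The sketch below does that and lists any codes that have not yet been placed in a theme. The variable names and the extra code “campus food” are invented for illustration.

```python
# Candidate themes mapped to the codes grouped under them (still provisional).
candidate_themes = {
    "Financial Obstacles and Support": [
        "financial challenges",
        "working part-time",
        "scholarships",
    ],
}

# Every code from the first coding round; "campus food" is a made-up leftover.
all_codes = ["financial challenges", "working part-time", "scholarships", "campus food"]

# Invert the mapping so each code points to its candidate theme.
code_to_theme = {
    code: theme
    for theme, codes in candidate_themes.items()
    for code in codes
}

# Codes that do not (yet) fit into any theme.
unassigned = [code for code in all_codes if code not in code_to_theme]
print(unassigned)  # ['campus food']
```

Unassigned codes are not errors. As noted above, some codes may become sub-themes later or may not fit into any theme.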

Shared Meaning vs. Shared Topic in Thematic Analysis

Braun and Clarke distinguish between two different conceptualizations of themes: topic summaries and shared meaning.

- Topic summary themes , which they consider to be underdeveloped, are organized around a shared topic but not a shared meaning, and often resemble “buckets” into which data is sorted.

- Shared meaning themes are patterns of shared meaning underpinned by a central organizing concept.

When grouping codes into themes, it’s crucial to ensure they share a central organizing concept or idea, reflecting a shared meaning rather than just belonging to the same topic.

Thematic analysis aims to uncover patterns of shared meaning within the data that offer insights into the research question.

For example, codes centered around the concept of “Negotiating Sexual Identity” might not form one comprehensive theme, but rather two distinct themes: one related to “coming out and being out” and another exploring “different versions of being a gay man.”

Avoid : Themes as Topic Summaries (Shared Topic)

In this approach, themes simply summarize what participants mentioned about a particular topic, without necessarily revealing a unified meaning.

These themes are often underdeveloped and lack a central organizing concept.

It’s crucial to avoid creating themes that are merely summaries of data domains or directly reflect the interview questions.

Example : A theme titled “Incidents of homophobia” that merely describes various participant responses about homophobia without delving into deeper interpretations would be a topic summary theme.

Tip : Using interview questions as theme titles without further interpretation or relying on generic social functions (“social conflict”) or structural elements (“economics”) as themes often indicates a lack of shared meaning and thorough theme development. Such themes might lack a clear connection to the specific dataset.

Ensure : Themes as Shared Meaning

Instead, themes should represent a deeper level of interpretation, capturing the essence of the data and providing meaningful insights into the research question.

These themes go beyond summarizing a topic by identifying a central concept or idea that connects the codes.

They reflect a pattern of shared meaning across different data points, even if those points come from different topics.

Example : The theme “‘There’s always that level of uncertainty’: Compulsory heterosexuality at university” effectively captures the shared experience of fear and uncertainty among LGBT students, connecting various codes related to homophobia and its impact on their lives.

2. Gathering data relevant to each potential theme

Once a potential theme is identified, all coded data extracts associated with the codes grouped under that theme are collated. This ensures a comprehensive view of the data pertaining to each theme.

This involves reviewing the collated data extracts for each code and organizing them under the relevant themes.

For example, if you have a potential theme called “Student Strategies for Test Preparation,” you would gather all data extracts that have been coded with related codes, such as “Time Management for Test Preparation” or “Study Groups for Test Preparation”.

You can then begin reviewing the data extracts for each theme to see if they form a coherent pattern.

This step helps to ensure that your themes accurately reflect the data and are not based on your own preconceptions.

It’s important to remember that coding is an organic and ongoing process.

You may need to re-read your entire data set to see if you have missed any data that is relevant to your themes, or if you need to create any new codes or themes.

The researcher should ensure that the data extracts within each theme are coherent and meaningful.

Example : The researcher would gather all the data extracts related to “Financial Obstacles and Support,” such as quotes about struggling to pay for tuition, working long hours, or receiving scholarships.

Here’s a more detailed explanation of how to gather data relevant to each potential theme:

- Start by creating a visual representation of your potential themes, such as a thematic map or table

- List each potential theme and its associated sub-themes (if any)

- This will help you organize your data and see the relationships between themes

- Go through your coded data extracts (e.g., highlighted quotes or segments from interview transcripts)

- For each coded extract, consider which theme or sub-theme it best fits under

- If a coded extract seems to fit under multiple themes, choose the theme that it most closely aligns with in terms of shared meaning

- As you identify which theme each coded extract belongs to, copy and paste the extract under the relevant theme in your thematic map or table

- Include enough context around each extract to ensure its meaning is clear

- If using qualitative data analysis software, you can assign the coded extracts to the relevant themes within the software

- As you gather data extracts under each theme, continuously review the extracts to ensure they form a coherent pattern

- If some extracts do not fit well with the rest of the data in a theme, consider whether they might better fit under a different theme or if the theme needs to be refined
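If you work in a script rather than in qualitative analysis software, the gathering steps above might be sketched as follows. The function name `gather_by_theme`, the code “Test Anxiety,” and the sample extracts are hypothetical. The sketch also assumes each code has already been assigned to a single theme.

```python
from collections import defaultdict


def gather_by_theme(coded_segments, code_to_theme):
    """Collate (source, extract) pairs under the theme their code belongs to.

    Segments whose code has no theme yet are returned separately so they
    can be reviewed rather than silently dropped.
    """
    by_theme = defaultdict(list)
    unthemed = []
    for source, code, extract in coded_segments:
        theme = code_to_theme.get(code)
        if theme is None:
            unthemed.append((source, code, extract))
        else:
            # Keep the source alongside the extract to preserve context.
            by_theme[theme].append((source, extract))
    return dict(by_theme), unthemed


code_to_theme = {
    "Time Management for Test Preparation": "Student Strategies for Test Preparation",
    "Study Groups for Test Preparation": "Student Strategies for Test Preparation",
}

# Invented extracts, for illustration only.
coded_segments = [
    ("interview_01", "Time Management for Test Preparation",
     "I block out two hours every night the week before."),
    ("interview_02", "Study Groups for Test Preparation",
     "We quiz each other on Sundays."),
    ("interview_02", "Test Anxiety",
     "My mind goes blank in the exam hall."),
]

by_theme, unthemed = gather_by_theme(coded_segments, code_to_theme)
```

Returning the unthemed segments separately makes it easier to decide whether they belong under a different theme or whether a theme needs to be refined.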

3. Considering relationships between codes, themes, and different levels of themes

Once you have gathered all the relevant data extracts under each theme, review the themes to ensure they are meaningful and distinct.

This step involves analyzing how different codes combine to form overarching themes and exploring the hierarchical relationship between themes and sub-themes.

Themes can sit at different levels, often organized hierarchically as main themes and sub-themes.

- Main themes represent the most overarching or significant patterns found in the data. They provide a high-level understanding of the key issues or concepts present in the data.

- Sub-themes , as the name suggests, fall under main themes, offering a more nuanced and detailed understanding of a particular aspect of the main theme.

The process of developing these relationships is iterative and involves:

- Creating a Thematic Map : The relationship between codes, sub-themes and main themes can be visualized using a thematic map, diagram, or table. Refine the thematic map as you continue to review and analyze the data.

- Examine how the codes and themes relate to each other : Some themes may be more prominent or overarching (main themes), while others may be secondary or subsidiary (sub-themes).

- Refining Themes : The thematic map helps researchers review and refine themes, ensuring that each theme is internally consistent (homogeneous) and clearly distinct from the other themes (heterogeneous).

- Defining and Naming Themes : Finally, themes are given clear and concise names and definitions that accurately reflect the meaning they represent in the data.

Consider how the themes tell a coherent story about the data and address the research question.

If some themes seem to overlap or are not well-supported by the data, consider combining or refining them.

If a theme is too broad or diverse, consider splitting it into separate themes or sub-themes.

Example : The researcher might identify “Academic Challenges” and “Social Adjustment” as other main themes, with sub-themes like “Imposter Syndrome” and “Balancing Work and School” under “Academic Challenges.” They would then consider how these themes relate to each other and contribute to the overall understanding of first-generation college students’ experiences.
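A thematic map for this example can also be kept as a nested structure and printed as an indented outline. The sketch below is one simple way to do so. The `render` helper is hypothetical, and the theme names are taken from the first-generation student examples in this guide.

```python
# Main themes map to their sub-themes; an empty dict means no sub-themes yet.
thematic_map = {
    "Financial Obstacles and Support": {},
    "Academic Challenges": {
        "Imposter Syndrome": {},
        "Balancing Work and School": {},
    },
    "Social Adjustment": {},
}


def render(node, depth=0):
    """Return the map as a list of indented outline lines."""
    lines = []
    for name, children in node.items():
        lines.append("  " * depth + "- " + name)
        lines.extend(render(children, depth + 1))
    return lines


outline = render(thematic_map)
print("\n".join(outline))
```

Because the structure is nested, promoting a sub-theme to a main theme or splitting a theme only requires moving a key, which suits the iterative refinement described above.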

Step 4: Reviewing Themes

The researcher reviews, modifies, and develops the preliminary themes identified in the previous step.

This phase involves a recursive process of checking the themes against the coded data extracts and the entire data set to ensure they accurately reflect the meanings evident in the data.