empirical evidence

empirical evidence, information gathered directly or indirectly through observation or experimentation that may be used to confirm or disconfirm a scientific theory or to help justify, or establish as reasonable, a person’s belief in a given proposition. A belief may be said to be justified if there is sufficient evidence to make holding the belief reasonable.

The concept of evidence is the basis of philosophical evidentialism, an epistemological thesis according to which a person is justified in believing a given proposition p if and only if the person’s evidence for p is proper or sufficient. In this context, the Scottish Enlightenment philosopher David Hume (1711–76) famously asserted that the “wise man…proportions his belief to the evidence.” In a similar vein, the American astronomer Carl Sagan popularized the statement, “Extraordinary claims require extraordinary evidence.”

Foundationalists, however, defend the view that certain basic, or foundational, beliefs are either inherently justified or justified by something other than another belief (e.g., a sensation or perception) and that all other beliefs may be justified only if they are directly or indirectly supported by at least one foundational belief (that is, only if they are either supported by at least one foundational belief or supported by other beliefs that are themselves supported by at least one foundational belief). The most influential foundationalist of the modern period was the French philosopher and mathematician René Descartes (1596–1650), who attempted to establish a foundation for justified beliefs regarding an external world in his intuition that, for as long as he is thinking, he exists (“I think, therefore I am”; see cogito, ergo sum). A traditional argument in favour of foundationalism asserts that no other account of inferential justification (the act of justifying a given belief by inferring it from another belief that itself is justified) is possible. Thus, assume that one belief, Belief 1, is justified by another belief, Belief 2. How is Belief 2 justified? It cannot be justified by Belief 1, because the inference from Belief 2 to Belief 1 would then be circular and invalid. It cannot be justified by a third nonfoundational Belief 3, because the same question would then apply to that belief, leading to an infinite regress. And one cannot simply assume that Belief 2 is not justified, for then Belief 1 would not be justified through the inference from Belief 2. Accordingly, there must be some beliefs whose justification does not depend on other beliefs, and those justified beliefs must function as a foundation for the inferential justification of other beliefs.
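The regress argument can be pictured as a walk through a graph of supporting beliefs. The sketch below is purely illustrative: the belief names, the `supports` mapping, and the `is_justified` helper are assumptions made for this example and do not come from any philosophical formalism. A belief counts as justified if it is foundational or if at least one belief offered in its support is itself justified; circular chains are rejected.

```python
def is_justified(belief, supports, foundational, _path=frozenset()):
    """Return True if `belief` traces back to a foundational belief.

    supports: dict mapping a belief to the beliefs offered in its support.
    foundational: set of beliefs treated as justified without further support.
    """
    if belief in foundational:
        return True
    if belief in _path:
        # Circular support: the chain has returned to a belief already on it.
        return False
    path = _path | {belief}
    return any(
        is_justified(supporter, supports, foundational, path)
        for supporter in supports.get(belief, [])
    )


# Hypothetical belief structure, for illustration only.
supports = {
    "Belief 1": ["Belief 2"],
    "Belief 2": ["Perception"],
    "Belief A": ["Belief B"],
    "Belief B": ["Belief A"],  # a circular pair with no foundation
}
foundational = {"Perception"}

print(is_justified("Belief 1", supports, foundational))  # True
print(is_justified("Belief A", supports, foundational))  # False
```

In this toy model, a belief with no supporters that is not foundational is also unjustified, which mirrors the third horn of the argument.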

Empirical evidence can be quantitative or qualitative. Typically, numerical quantitative evidence can be represented visually by means of diagrams, graphs, or charts, reflecting the use of statistical or mathematical data and the researcher’s neutral noninteractive role. It can be obtained by methods such as experiments, surveys, correlational research (to study the relationship between variables), cross-sectional research (to compare different groups), causal-comparative research (to explore cause-effect relationships), and longitudinal studies (to test a subject during a given time period).

Qualitative evidence, on the other hand, can foster a deeper understanding of behaviour and related factors and is not typically expressed by using numbers. Often subjective and resulting from interaction between the researcher and participants, it can stem from the use of methods such as interviews (based on verbal interaction), observation (informing ethnographic research design), textual analysis (involving the description and interpretation of texts), focus groups (planned group discussions), and case studies (in-depth analyses of individuals or groups).

Empirical evidence is subject to assessments of its validity. Validity can be internal, involving the soundness of an experiment’s design and execution and the accuracy of subsequent data analysis, or external, involving generalizability to other research contexts (see ecological validity).

Empirical Research: Definition, Methods, Types and Examples

Empirical research: Definition

Empirical research is defined as any research in which the conclusions of the study are drawn strictly from concrete empirical evidence, that is, from “verifiable” evidence.

This empirical evidence can be gathered using quantitative market research and qualitative market research methods.

For example: Suppose research is being conducted to find out whether listening to happy music while working promotes creativity. An experiment is set up, using a music website survey, with one group of participants who are exposed to happy music and another group who do not listen to music at all, and the subjects are then observed. The results of such research provide empirical evidence on whether happy music promotes creativity or not.

Empirical research: Origin

You must have heard the saying “I will not believe it unless I see it.” This came from the ancient empiricists, a fundamental understanding that powered the emergence of science during the Renaissance period and laid the foundation of modern science as we know it today. The word itself has its roots in Greek: it is derived from the Greek word empeirikos, which means “experienced.”

In today’s world, the word empirical refers to the collection of data using evidence gathered through observation or experience or by using calibrated scientific instruments. All of the above origins have one thing in common: a dependence on observation and experiments to collect data and test them in order to reach conclusions.

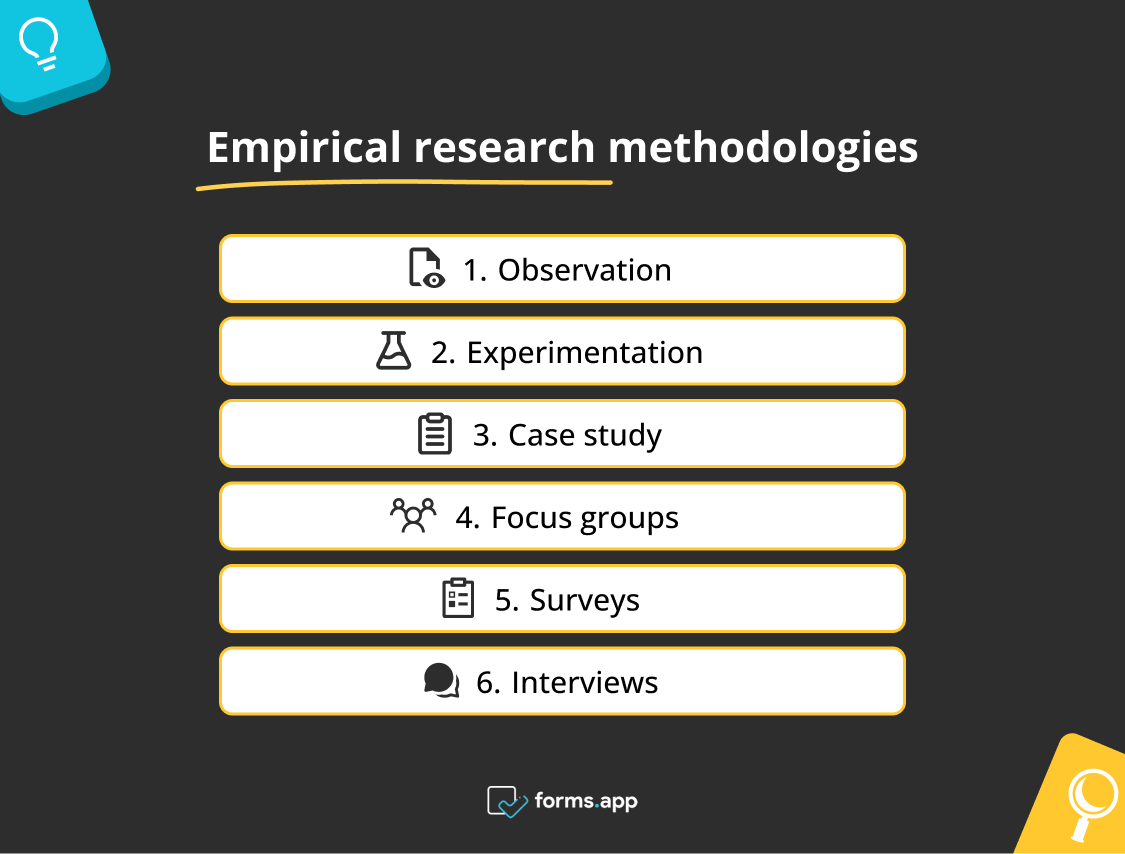

Types and methodologies of empirical research

Empirical research can be conducted and analysed using qualitative or quantitative methods.

- Quantitative research: Quantitative research methods are used to gather information through numerical data. They are used to quantify opinions, behaviors, or other defined variables. The questions are predetermined and follow a more structured format. Some of the commonly used methods are surveys, longitudinal studies, polls, etc.

- Qualitative research: Qualitative research methods are used to gather non-numerical data. They are used to find meanings, opinions, or the underlying reasons from the subjects. These methods are unstructured or semi-structured. The sample size for such research is usually small, and the method is conversational, providing more insight or in-depth information about the problem. Some of the most popular methods are focus groups, interviews, case studies, etc.

Data collected from these methods will need to be analysed. Empirical evidence can be analysed either quantitatively or qualitatively. Using this analysis, the researcher can answer empirical questions, which have to be clearly defined and answerable with the findings he has obtained. The type of research design used will vary depending on the field in which it is going to be used. Many researchers choose a combined approach involving both quantitative and qualitative methods to better answer questions that cannot be studied in a laboratory setting.

Quantitative research methods

Quantitative research methods aid in analyzing the empirical evidence gathered. By using these, a researcher can find out whether his hypothesis is supported or not.

- Survey research: Survey research generally involves a large audience in order to collect a large amount of data. It is a quantitative method with a predetermined set of closed questions that are easy to answer. Because of the simplicity of such a method, high response rates are achieved. It is one of the most commonly used methods for all kinds of research in today’s world.

Previously, surveys were conducted only face to face, perhaps with a recorder. However, with advances in technology and for ease of use, new mediums such as email and social media have emerged.

For example: Depletion of energy resources is a growing concern, and hence there is a need for awareness about renewable energy. According to recent studies, fossil fuels still account for around 80% of energy consumption in the United States. Even though the use of green energy rises every year, certain factors keep the general population from opting for it. To understand why, a survey can be conducted to gather the opinions of the general population about green energy and the factors that influence their choice of switching to renewable energy. Such a survey can help institutions or governing bodies to promote appropriate awareness and incentive schemes to push the use of greener energy.
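As a rough illustration of how closed-question survey answers become quantitative evidence, the sketch below tallies responses and converts them to percentages. The question wording, the answer options, and the response data are all hypothetical and invented for this example.

```python
from collections import Counter

# Hypothetical closed-ended responses to the question
# "What is the main reason you have not switched to green energy?"
responses = [
    "cost", "cost", "availability", "cost", "lack of information",
    "availability", "cost", "lack of information", "cost", "availability",
]


def tabulate(responses):
    """Return (answer, count, percentage) rows, most common answer first."""
    counts = Counter(responses)
    total = len(responses)
    return [(answer, n, 100 * n / total) for answer, n in counts.most_common()]


for answer, n, pct in tabulate(responses):
    print(f"{answer:<20} {n:>3} {pct:5.1f}%")
```

With real data, the same table would typically be broken down further, for example by region or age group, before drawing conclusions.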

- Experimental research: In experimental research, an experiment is set up and a hypothesis is tested by creating a situation in which one of the variables is manipulated. This method is also used to check cause and effect: the researcher observes what happens to the dependent variable when the independent variable is removed or altered. The process usually consists of proposing a hypothesis, experimenting on it, analyzing the findings, and reporting them to show whether they support the theory or not.

For example: A product company is trying to find out why it is unable to capture the market. The organisation makes changes in each of its processes, such as manufacturing, marketing, sales, and operations. Through the experiment it learns that sales training directly affects the market coverage of its product: if the salesperson is trained well, the product will have better coverage.

- Correlational research: Correlational research is used to find the relationship between two sets of variables. Regression analysis is generally used to predict outcomes with such a method. The correlation can be positive, negative, or neutral (no correlation).

For example: More highly educated individuals tend to get higher-paying jobs. This means that higher levels of education are associated with higher-paying jobs and lower levels of education with lower-paying jobs.
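A positive, negative, or neutral correlation is usually summarised with a correlation coefficient. The following sketch computes Pearson's r by hand for the education and salary example; the data values are hypothetical, and `pearson_r` is a helper written for this illustration rather than a library function.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences.

    The result is undefined (division by zero) if either sequence is constant.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: years of education and annual salary (in thousands).
years_of_education = [10, 12, 12, 14, 16, 16, 18, 20]
salary_thousands = [28, 35, 33, 42, 55, 50, 64, 70]

r = pearson_r(years_of_education, salary_thousands)
print(f"r = {r:.2f}")  # close to +1: a strong positive correlation
```

A value near +1 indicates a positive correlation, near -1 a negative one, and near 0 little or no linear relationship. A high r on its own does not show that one variable causes the other.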

- Longitudinal study: A longitudinal study is used to understand the traits or behavior of a subject under observation by repeatedly testing the subject over a period of time. Data collected by such a method can be qualitative or quantitative in nature.

For example: Consider research to find out the benefits of exercise. The participants are asked to exercise every day for a particular period of time, and the results show higher endurance, stamina, and muscle growth. This supports the claim that exercise benefits an individual’s body.
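What distinguishes longitudinal data is that the same subjects are measured repeatedly, so change can be computed per subject. The sketch below assumes a hypothetical exercise study with three measurement waves; the participant labels and scores are invented for illustration.

```python
from statistics import mean

# Hypothetical endurance scores (minutes of continuous running) for the
# same participants, measured at weeks 0, 4, and 8 of an exercise programme.
measurements = {
    "participant_1": [12, 15, 19],
    "participant_2": [20, 22, 27],
    "participant_3": [8, 8, 11],
}


def change_from_baseline(series):
    """Difference between the last and the first measurement."""
    return series[-1] - series[0]


# Per-subject change over the whole observation period.
changes = {who: change_from_baseline(s) for who, s in measurements.items()}

# Group average at each wave (zip regroups the scores wave by wave).
wave_means = [mean(wave) for wave in zip(*measurements.values())]

print(changes)
print(wave_means)
```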

- Cross-sectional study: A cross-sectional study is an observational method in which a set of people is observed at a given point in time. The people are chosen so that they are similar in all variables except the one being researched. This type of study does not enable the researcher to establish a cause-and-effect relationship, as the subjects are not observed over a continuous time period. It is mainly used in the healthcare sector and the retail industry.

For example: A medical study to find the prevalence of under-nutrition disorders in children of a given population. This will involve looking at a wide range of parameters such as age, ethnicity, location, income, and social background. If a significant number of children from poor families show under-nutrition disorders, the researcher can investigate further. Usually a cross-sectional study is followed by a longitudinal study to find out the exact reason.
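Prevalence in a cross-sectional study is simply the share of observed subjects who have the condition at the time of observation, usually compared across groups. A minimal sketch, assuming hypothetical records of the form (income group, has disorder):

```python
from collections import defaultdict

# Hypothetical records collected at a single point in time:
# (income_group, has_undernutrition_disorder)
records = [
    ("low", True), ("low", True), ("low", False), ("low", True),
    ("middle", False), ("middle", True), ("middle", False), ("middle", False),
    ("high", False), ("high", False), ("high", False), ("high", True),
]


def prevalence_by_group(records):
    """Return {group: share of records in that group with the condition}."""
    totals = defaultdict(int)
    cases = defaultdict(int)
    for group, has_condition in records:
        totals[group] += 1
        if has_condition:
            cases[group] += 1
    return {group: cases[group] / totals[group] for group in totals}


for group, share in prevalence_by_group(records).items():
    print(f"{group:<7} {share:.0%}")
```

A higher prevalence in one group flags it for further investigation; as noted above, the snapshot alone does not establish the cause.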

- Causal-comparative research: This method is based on comparison. It is mainly used to find out the cause-effect relationship between two or more variables.

For example: A researcher measured the productivity of employees in a company that gave its employees breaks during work and compared it with the productivity of employees in a company that did not give breaks at all.
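One common way to carry out the comparison in the example above is to compare group means and scale the difference by its standard error. The sketch below uses Welch's t statistic as one possible choice; the source does not prescribe a specific test, and the productivity scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic for the difference between two group means."""
    var_a, var_b = variance(sample_a), variance(sample_b)  # sample variances
    standard_error = sqrt(var_a / len(sample_a) + var_b / len(sample_b))
    return (mean(sample_a) - mean(sample_b)) / standard_error


# Hypothetical productivity scores (tasks completed per day).
with_breaks = [22, 25, 24, 27, 23, 26]
without_breaks = [20, 21, 19, 23, 22, 20]

difference = mean(with_breaks) - mean(without_breaks)
t = welch_t(with_breaks, without_breaks)
print(f"mean difference = {difference:.2f}, t = {t:.2f}")
```

A large t value suggests the difference is unlikely to be chance alone. Because the two groups already existed and were not randomly assigned, other differences between the companies could still explain the gap.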

Qualitative research methods

Some research questions need to be analysed qualitatively, as quantitative methods are not applicable there. In many cases, in-depth information is needed or a researcher may need to observe the behavior of a target audience, so the results are needed in a descriptive form. Qualitative research results are descriptive rather than predictive. They enable the researcher to build or support theories for potential future quantitative research. In such situations, qualitative research methods are used to derive a conclusion that supports the theory or hypothesis being studied.

- Case study: The case study method is used to find more information by carefully analyzing existing cases. It is very often used for business research or to gather empirical evidence for investigation purposes. It is a method of investigating a problem within its real-life context through existing cases. The researcher has to analyse carefully, making sure that the parameters and variables in the existing case are the same as in the case being investigated. Using the findings from the case study, conclusions can be drawn regarding the topic being studied.

For example: A report describing the solution a company provided to its client, including the challenges faced during initiation and deployment, the findings of the case, and the solutions offered for the problems. Such case studies are used by most companies, as they form empirical evidence that the company can promote in order to get more business.

- Observational method: The observational method is a process of observing and gathering data from its target. Since it is a qualitative method, it is time-consuming and very personal. Observational research can be said to be a part of ethnographic research, which is also used to gather empirical evidence. It is usually a qualitative form of research; however, in some cases it can be quantitative as well, depending on what is being studied.

For example: Setting up research to observe a particular animal in the rainforests of the Amazon. Such research usually takes a lot of time, as observation has to be done for a set amount of time to study the patterns or behavior of the subject. Another example widely used nowadays is observing people shopping in a mall to figure out the buying behavior of consumers.

- One-on-one interview: This method is purely qualitative and one of the most widely used, because it enables a researcher to get precise, meaningful data if the right questions are asked. It is a conversational method in which in-depth data can be gathered depending on where the conversation leads.

For example: A one-on-one interview with the finance minister to gather data on financial policies of the country and its implications on the public.

- Focus groups: Focus groups are used when a researcher wants to find answers to why, what, and how questions. A small group is generally chosen for such a method, and it is not necessary to interact with the group in person. A moderator is generally needed when the group is being addressed in person. This method is widely used by product companies to collect data about their brands and products.

For example: A mobile phone manufacturer wants feedback on the dimensions of one of its models that is yet to be launched. Such studies help the company meet customer demand and position the model appropriately in the market.

- Text analysis: The text analysis method is relatively new compared to the other types. It is used to analyse social life by going through the images or words used by individuals. In today’s world, with social media playing a major part in everyone’s life, such a method enables the researcher to follow the patterns that relate to his study.

For example: Many companies ask customers for detailed feedback on how satisfied they are with the customer support team. Such data enables the researcher to make appropriate decisions to improve the support team.
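A very simple first pass at text analysis is counting which terms recur across open-ended comments. The sketch below is a minimal illustration only: the comments and the short stop-word list are hypothetical, and real studies usually pair counts like these with careful manual reading and coding.

```python
import re
from collections import Counter

# Hypothetical open-ended feedback comments about a support team.
comments = [
    "The support team was slow to respond.",
    "Very helpful team, but the response was slow.",
    "Helpful and friendly support.",
]

# Small illustrative stop-word list; a real study would use a fuller one.
STOP_WORDS = {"the", "was", "to", "but", "and", "very"}


def term_frequencies(comments):
    """Count lower-cased words across all comments, skipping stop words."""
    words = []
    for comment in comments:
        words.extend(re.findall(r"[a-z']+", comment.lower()))
    return Counter(w for w in words if w not in STOP_WORDS)


freqs = term_frequencies(comments)
print(freqs.most_common(4))
```

Here "slow" and "helpful" each appear twice, hinting at two themes (speed and helpfulness) that a researcher could then examine in the full comments.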

Sometimes a combination of the methods is also needed for some questions that cannot be answered using only one type of method especially when a researcher needs to gain a complete understanding of complex subject matter.

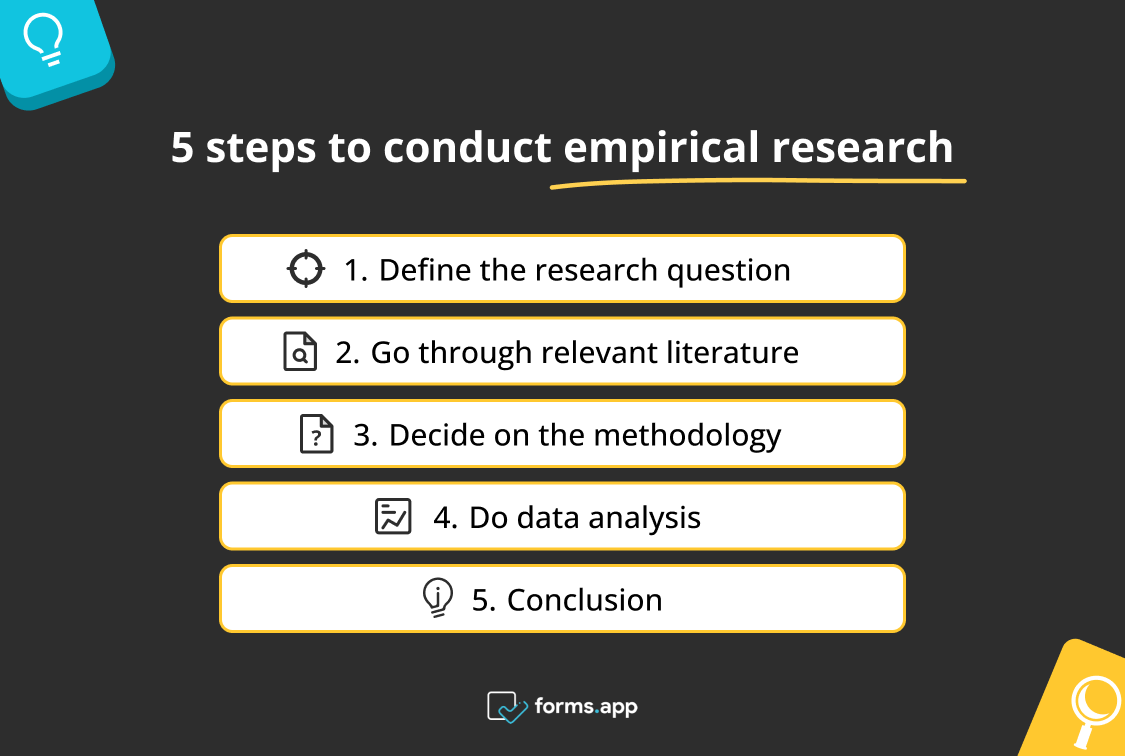

Steps for conducting empirical research

Since empirical research is based on observation and capturing experiences, it is important to plan the steps for conducting the experiment and analysing the results. This will enable the researcher to resolve problems or obstacles that can occur during the experiment.

Step #1: Define the purpose of the research

This is the step where the researcher has to answer questions such as: What exactly do I want to find out? What is the problem statement? Are there any issues in terms of the availability of knowledge, data, time, or resources? Will this research be more beneficial than what it will cost?

Before going ahead, a researcher has to clearly define his purpose for the research and set up a plan to carry out further tasks.

Step #2: Supporting theories and relevant literature

The researcher needs to find out whether there are theories that can be linked to his research problem and whether any theory can help support his findings. All kinds of relevant literature will help the researcher find out whether others have researched this before and what problems they faced. The researcher will also have to set up assumptions and find out whether there is any history regarding his research problem.

Step #3: Creation of Hypothesis and measurement

Before beginning the actual research, he needs to provide himself with a working hypothesis, that is, a guess at the probable result. The researcher has to set up variables, decide on the environment for the research, and find out how the variables relate to one another.

The researcher will also need to define the units of measurement and the tolerable degree of error, and find out whether the measurement chosen will be acceptable to others.

Step #4: Methodology, research design and data collection

In this step, the researcher has to define a strategy for conducting his research. He has to set up experiments to collect data that will enable him to test the hypothesis. The researcher will decide whether he needs an experimental or non-experimental method for conducting the research. The type of research design will vary depending on the field in which the research is being conducted. Last but not least, the researcher will have to identify the parameters that will affect the validity of the research design. Data collection will need to be done by choosing appropriate samples depending on the research question. To carry out the research, he can use one of the many sampling techniques. Once data collection is complete, the researcher will have empirical data that needs to be analysed.
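Two of the many sampling techniques mentioned above can be sketched briefly: simple random sampling and proportional stratified sampling. The population, the stratum labels, and both helper functions below are hypothetical and written only for this illustration.

```python
import random


def simple_random_sample(population, k, seed=None):
    """Draw k distinct units, each with the same chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, k)


def stratified_sample(population, stratum_of, fraction, seed=None):
    """Draw the same fraction from every stratum (at least one unit each)."""
    rng = random.Random(seed)
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for units in strata.values():
        k = max(1, round(len(units) * fraction))
        sample.extend(rng.sample(units, k))
    return sample


# Hypothetical population: 80 staff in sales and 20 in operations.
population = [("sales", i) for i in range(80)] + [
    ("operations", i) for i in range(20)
]

srs = simple_random_sample(population, 10, seed=1)
strat = stratified_sample(
    population, stratum_of=lambda unit: unit[0], fraction=0.1, seed=1
)
print(len(srs), len(strat))
```

The stratified draw always contains 8 sales and 2 operations staff, matching the population's proportions, whereas a simple random sample of 10 can over- or under-represent the smaller group by chance.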

Step #5: Data Analysis and result

Data analysis can be done in two ways: qualitatively and quantitatively. The researcher will need to find out which qualitative or quantitative method is needed, or whether he needs a combination of both. Depending on the analysis of his data, he will know whether his hypothesis is supported or rejected. Analyzing this data is the most important part of supporting his hypothesis.

Step #6: Conclusion

A report will need to be made with the findings of the research. The researcher can give the theories and literature that support his research. He can make suggestions or recommendations for further research on his topic.

Empirical research methodology cycle

A.D. de Groot, a famous Dutch psychologist and chess expert, conducted some of the most notable experiments using chess in the 1940s. During his study, he came up with a cycle that is consistent and now widely used to conduct empirical research. It consists of 5 phases, each as important as the next. The empirical cycle captures the process of coming up with hypotheses about how certain subjects work or behave and then testing these hypotheses against empirical data in a systematic and rigorous way. It can be said that it characterizes the deductive approach to science. Following is the empirical cycle.

- Observation: At this phase an idea is sparked for proposing a hypothesis. During this phase empirical data is gathered using observation. For example: A particular species of flower blooms in a different color only during a specific season.

- Induction: Inductive reasoning is then carried out to form a general conclusion from the data gathered through observation. For example: As stated above, it is observed that the species of flower blooms in a different color during a specific season. A researcher may ask, “Does the temperature in the season cause the color change in the flower?” He can assume that this is the case; however, it is mere conjecture, and hence an experiment needs to be set up to support this hypothesis. So he tags a few sets of flowers kept at a different temperature and observes whether they still change color.

- Deduction: This phase helps the researcher to deduce a conclusion from his experiment. It has to be based on logic and rationality to come up with specific, unbiased results. For example: In the experiment, if the tagged flowers in a different temperature environment do not change color, then it can be concluded that temperature plays a role in changing the color of the bloom.

- Testing: In this phase the researcher returns to empirical methods to put his hypothesis to the test. The researcher now needs to make sense of his data and hence needs to use statistical analysis plans to determine the relationship between temperature and bloom color. If the researcher finds that most flowers bloom in a different color when exposed to a certain temperature and do not when the temperature is different, he has found support for his hypothesis. Please note that this is not proof but only support for his hypothesis.

- Evaluation: This phase is often forgotten but is important for continuing to gain knowledge. During this phase the researcher puts forth the data he has collected, the supporting argument, and his conclusion. The researcher also states the limitations of the experiment and his hypothesis and offers suggestions so that others can pick it up and continue with more in-depth research in the future.
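The testing phase of the cycle above can be illustrated with a small contingency table for the flower example. The sketch uses a Pearson chi-square statistic as one possible statistical analysis plan; the source does not name a specific test, and the flower counts are hypothetical.

```python
def chi_square_2x2(table):
    """Pearson chi-square statistic for a 2x2 table of counts [[a, b], [c, d]].

    No continuity correction is applied; every expected count must be nonzero.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    return statistic


# Hypothetical counts of tagged flowers.
#         changed color, did not change
table = [
    [18, 2],   # kept at the seasonal temperature
    [3, 17],   # kept at a different temperature
]

stat = chi_square_2x2(table)
# 3.841 is the 5% critical value of chi-square with 1 degree of freedom.
print(f"chi-square = {stat:.2f}, supports hypothesis: {stat > 3.841}")
```

A statistic above the critical value counts as support for the hypothesis that temperature and bloom color are related, not as proof, in line with the caution in the testing phase.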

Advantages of empirical research

There is a reason why empirical research is one of the most widely used methods. There are a few advantages associated with it. Following are a few of them.

- It is used to authenticate traditional research through various experiments and observations.

- This research methodology makes the research being conducted more competent and authentic.

- It enables a researcher to understand the dynamic changes that can happen and change his strategy accordingly.

- The level of control in such research is high, so the researcher can control multiple variables.

- It plays a vital role in increasing internal validity.

Disadvantages of empirical research

Even though empirical research makes the research more competent and authentic, it does have a few disadvantages. Following are a few of them.

- Such research needs patience, as it can be very time-consuming. The researcher has to collect data from multiple sources, and many parameters are involved, which makes the research time-consuming.

- Most of the time, a researcher will need to conduct research at different locations or in different environments, which can be expensive.

- There are rules governing how experiments can be performed, and hence permissions are needed. It is often very difficult to get the permissions required to carry out certain methods of this research.

- Collection of data can sometimes be a problem, as it has to be collected from a variety of sources through different methods.

Why is there a need for empirical research?

Empirical research is important in today’s world because most people believe only in what they can see, hear, or experience. It is used to validate multiple hypotheses, increase human knowledge, and keep advancing various fields.

For example: Pharmaceutical companies use empirical research to try out a specific drug on controlled groups or random groups to study cause and effect. This way, they test the theories they had proposed for the specific drug. Such research is very important, as it can sometimes lead to finding a cure for a disease that has existed for many years. It is useful in science and many other fields such as history, social sciences, business, etc.

With the advancement in today’s world, empirical research has become critical and a norm in many fields to support hypotheses and gain more knowledge. The methods mentioned above are very useful for carrying out such research. However, new methods will keep emerging as the nature of investigative questions keeps changing.

Empirical Research: Defining, Identifying, & Finding

What Is Empirical Research?

Calfee & Chambliss (2005) describe empirical research as a "systematic approach for answering certain types of questions." Those questions are answered "[t]hrough the collection of evidence under carefully defined and replicable conditions" (p. 43).

The evidence collected during empirical research is often referred to as "data."

Characteristics of Empirical Research

Emerald Publishing's guide to conducting empirical research identifies a number of common elements to empirical research:

- A research question, which will determine research objectives.

- A particular and planned design for the research, which will depend on the question and which will find ways of answering it with appropriate use of resources.

- The gathering of primary data, which is then analysed.

- A particular methodology for collecting and analysing the data, such as an experiment or survey.

- The limitation of the data to a particular group, area or time scale, known as a sample [emphasis added]: for example, a specific number of employees of a particular company type, or all users of a library over a given time scale. The sample should be somehow representative of a wider population.

- The ability to recreate the study and test the results. This is known as reliability.

- The ability to generalize from the findings to a larger sample and to other situations.

If you see these elements in a research article, you can feel confident that you have found empirical research. Emerald's guide goes into more detail on each element.

Quantitative or Qualitative?

Empirical research methodologies can be described as quantitative, qualitative, or a mix of both (usually called mixed-methods).

Ruane (2016) gets at the basic differences in approach between quantitative and qualitative research:

- Quantitative research -- an approach to documenting reality that relies heavily on numbers both for the measurement of variables and for data analysis (p. 33).

- Qualitative research -- an approach to documenting reality that relies on words and images as the primary data source (p. 33).

Both quantitative and qualitative methods are empirical. If you can recognize that a research study is quantitative or qualitative, then you have also recognized that it is an empirical study.

Below is information on the characteristics of quantitative and qualitative research. A video from Scribbr (2019) also offers a good overall introduction to the two approaches to research methodology.

Characteristics of Quantitative Research

Researchers test hypotheses, or theories, based in assumptions about causality, i.e. we expect variable X to cause variable Y. Variables have to be controlled as much as possible to ensure validity. The results explain the relationship between the variables. Measures are based in pre-defined instruments.

Examples: experimental or quasi-experimental design, pretest & post-test, survey or questionnaire with closed-ended questions. Studies that identify factors that influence an outcome, assess the utility of an intervention, or seek to understand predictors of outcomes.

Characteristics of Qualitative Research

Researchers explore “meaning individuals or groups ascribe to social or human problems” (Creswell & Creswell, 2018, p. 3). Questions and procedures emerge rather than being prescribed. Complexity, nuance, and individual meaning are valued. Research is both inductive and deductive. Data sources are multiple and varied, e.g., interviews, observations, documents, and photographs. The researcher is a key instrument and must reflect on how their background, culture, and experiences influence the research.

Examples: open question interviews and surveys, focus groups, case studies, grounded theory, ethnography, discourse analysis, narrative, phenomenology, participatory action research.

Calfee, R. C. & Chambliss, M. (2005). The design of empirical research. In J. Flood, D. Lapp, J. R. Squire, & J. Jensen (Eds.), Methods of research on teaching the English language arts: The methodology chapters from the handbook of research on teaching the English language arts (pp. 43-78). Routledge. http://ezproxy.memphis.edu/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=nlebk&AN=125955&site=eds-live&scope=site .

Creswell, J. W., & Creswell, J. D. (2018). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). Thousand Oaks: Sage.

How to... conduct empirical research . (n.d.). Emerald Publishing. https://www.emeraldgrouppublishing.com/how-to/research-methods/conduct-empirical-research .

Scribbr. (2019). Quantitative vs. qualitative: The differences explained [video]. YouTube. https://www.youtube.com/watch?v=a-XtVF7Bofg .

Ruane, J. M. (2016). Introducing social research methods : Essentials for getting the edge . Wiley-Blackwell. http://ezproxy.memphis.edu/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=nlebk&AN=1107215&site=eds-live&scope=site .

- Last Updated: Apr 2, 2024 11:25 AM

- URL: https://libguides.memphis.edu/empirical-research

Empirical evidence: A definition

Empirical evidence is information that is acquired by observation or experimentation.

Empirical evidence is information acquired by observation or experimentation. Scientists record and analyze this data. The process is a central part of the scientific method , leading to the proving or disproving of a hypothesis and our better understanding of the world as a result.

Empirical evidence might be obtained through experiments that seek to provide a measurable or observable reaction, trials that repeat an experiment to test its efficacy (such as a drug trial, for instance) or other forms of data gathering against which a hypothesis can be tested and reliably measured.

"If a statement is about something that is itself observable, then the empirical testing can be direct. We just have a look to see if it is true. For example, the statement, 'The litmus paper is pink', is subject to direct empirical testing," wrote Peter Kosso in " A Summary of Scientific Method " (Springer, 2011).

"Science is most interesting and most useful to us when it is describing the unobservable things like atoms , germs , black holes , gravity , the process of evolution as it happened in the past, and so on," wrote Kosso. Scientific theories , meaning theories about nature that are unobservable, cannot be proven by direct empirical testing, but they can be tested indirectly, according to Kosso. "The nature of this indirect evidence, and the logical relation between evidence and theory, are the crux of scientific method," wrote Kosso.

The scientific method begins with scientists forming questions, or hypotheses , and then acquiring the knowledge through observations and experiments to either support or disprove a specific theory. "Empirical" means "based on observation or experience," according to the Merriam-Webster Dictionary . Empirical research is the process of finding empirical evidence. Empirical data is the information that comes from the research.

Before any pieces of empirical data are collected, scientists carefully design their research methods to ensure the accuracy, quality and integrity of the data. If there are flaws in the way that empirical data is collected, the research will not be considered valid.

The scientific method often involves lab experiments that are repeated over and over, and these experiments result in quantitative data in the form of numbers and statistics. However, that is not the only process used for gathering information to support or refute a theory.

This methodology mostly applies to the natural sciences. "The role of empirical experimentation and observation is negligible in mathematics compared to natural sciences such as psychology, biology or physics," wrote Mark Chang, an adjunct professor at Boston University, in " Principles of Scientific Methods " (Chapman and Hall, 2017).

"Empirical evidence includes measurements or data collected through direct observation or experimentation," said Jaime Tanner, a professor of biology at Marlboro College in Vermont. There are two research methods used to gather empirical measurements and data: qualitative and quantitative.

Qualitative research, often used in the social sciences, examines the reasons behind human behavior, according to the National Center for Biotechnology Information (NCBI) . It involves data that can be found using the human senses . This type of research is often done in the beginning of an experiment. "When combined with quantitative measures, qualitative study can give a better understanding of health related issues," wrote Dr. Sanjay Kalra for NCBI.

Quantitative research involves methods that are used to collect numerical data and analyze it using statistical methods. "Quantitative research methods emphasize objective measurements and the statistical, mathematical, or numerical analysis of data collected through polls, questionnaires, and surveys, or by manipulating pre-existing statistical data using computational techniques," according to LeTourneau University. This type of research is often used at the end of an experiment to refine and test the previous research.

Identifying empirical evidence in another researcher's experiments can sometimes be difficult. According to the Pennsylvania State University Libraries , there are some things one can look for when determining if evidence is empirical:

- Can the experiment be recreated and tested?

- Does the experiment have a statement about the methodology, tools and controls used?

- Is there a definition of the group or phenomena being studied?

The objective of science is that all empirical data that has been gathered through observation, experience and experimentation is without bias. The strength of any scientific research depends on the ability to gather and analyze empirical data in the most unbiased and controlled fashion possible.

However, in the 1960s, scientific historian and philosopher Thomas Kuhn promoted the idea that scientists can be influenced by prior beliefs and experiences, according to the Center for the Study of Language and Information .

"Missing observations or incomplete data can also cause bias in data analysis, especially when the missing mechanism is not random," wrote Chang.
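
Chang's point about non-random missingness can be illustrated with a small sketch. The ten readings below are invented for illustration, and the cutoff of 14 is an arbitrary assumption standing in for an instrument that fails to record high values. The sketch compares that case with data lost at random, averaged over every possible way of losing three readings.

```python
from itertools import combinations
from statistics import mean

# Hypothetical complete set of measurements.
true_values = [2, 4, 6, 8, 10, 12, 14, 16, 18, 20]
true_mean = mean(true_values)

# Non-random missingness: readings above 14 were never recorded,
# so the loss depends on the very quantity being measured.
observed_not_random = [v for v in true_values if v <= 14]
biased_mean = mean(observed_not_random)

# Random missingness: three readings are lost regardless of their value.
# Averaging over every possible set of seven survivors shows what
# happens "on average" when the loss is unrelated to the values.
random_means = [mean(subset) for subset in combinations(true_values, 7)]
average_random_mean = mean(random_means)

print("true mean:", true_mean)
print("mean when high readings are missing:", biased_mean)
print("average mean when readings are missing at random:", average_random_mean)
```

In this toy case the estimate drops from 11 to 8 when the high readings are systematically missing, while losing readings at random leaves the estimate centered on the true mean.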

Because scientists are human and prone to error, empirical data is often gathered by multiple scientists who independently replicate experiments. This also guards against scientists who unconsciously, or in rare cases consciously, veer from the prescribed research parameters, which could skew the results.

The recording of empirical data is also crucial to the scientific method, as science can only be advanced if data is shared and analyzed. Peer review of empirical data is essential to protect against bad science, according to the University of California .

Empirical laws and scientific laws are often the same thing. "Laws are descriptions — often mathematical descriptions — of natural phenomenon," Peter Coppinger, associate professor of biology and biomedical engineering at the Rose-Hulman Institute of Technology, told Live Science.

Empirical laws are scientific laws that can be proven or disproved using observations or experiments, according to the Merriam-Webster Dictionary . So, as long as a scientific law can be tested using experiments or observations, it is considered an empirical law.

Empirical, anecdotal and logical evidence should not be confused. They are separate types of evidence that can be used to try to prove or disprove an idea or claim.

Logical evidence is used to prove or disprove an idea using logic. Deductive reasoning may be used to come to a conclusion to provide logical evidence. For example, "All men are mortal. Harold is a man. Therefore, Harold is mortal."

Anecdotal evidence consists of stories that have been experienced by a person that are told to prove or disprove a point. For example, many people have told stories about their alien abductions to prove that aliens exist. Often, a person's anecdotal evidence cannot be proven or disproven.

There are some things in nature that science is still working to build evidence for, such as the hunt to explain consciousness .

Meanwhile, in other scientific fields, efforts are still being made to improve research methods, such as the plan by some psychologists to fix the science of psychology .

" A Summary of Scientific Method " by Peter Kosso (Springer, 2011)

"Empirical" Merriam-Webster Dictionary

" Principles of Scientific Methods " by Mark Chang (Chapman and Hall, 2017)

"Qualitative research" by Dr. Sanjay Kalra National Center for Biotechnology Information (NCBI)

"Quantitative Research and Analysis: Quantitative Methods Overview" LeTourneau University

"Empirical Research in the Social Sciences and Education" Pennsylvania State University Libraries

"Thomas Kuhn" Center for the Study of Language and Information

"Misconceptions about science" University of California

Research: Overview & Approaches

Introduction to Empirical Research

- Introductory Video This video covers what empirical research is, what kinds of questions and methods empirical researchers use, and some tips for finding empirical research articles in your discipline.

- Guided Search: Finding Empirical Research Articles This is a hands-on tutorial that will allow you to use your own search terms to find resources.

- Study on radiation transfer in human skin for cosmetics

- Long-Term Mobile Phone Use and the Risk of Vestibular Schwannoma: A Danish Nationwide Cohort Study

- Emissions Impacts and Benefits of Plug-In Hybrid Electric Vehicles and Vehicle-to-Grid Services

- Review of design considerations and technological challenges for successful development and deployment of plug-in hybrid electric vehicles

- Endocrine disrupters and human health: could oestrogenic chemicals in body care cosmetics adversely affect breast cancer incidence in women?

- Last Updated: Aug 13, 2024 12:18 PM

- URL: https://guides.lib.purdue.edu/research_approaches

Empirical Research

- Living reference work entry

- First Online: 22 May 2017

- Emeka Thaddues Njoku 2

The term “empirical” entails gathered data based on experience, observations, or experimentation. In empirical research, knowledge is developed from factual experience as opposed to theoretical assumption and usually involves the use of data sources like datasets or fieldwork, but can also be based on observations within a laboratory setting. Testing hypotheses or answering definite questions is a primary feature of empirical research. Empirical research, in other words, involves the process of employing working hypotheses that are tested through experimentation or observation. Hence, empirical research is a method of uncovering empirical evidence.

Through the process of gathering valid empirical data, scientists from a variety of fields, ranging from the social to the natural sciences, have to carefully design their methods. This helps to ensure quality and accuracy of data collection and treatment. However, any error in empirical data collection process could inevitably render such...

Author information

Authors and affiliations.

Department of Political Science, University of Ibadan, Ibadan, Oyo, 200284, Nigeria

Emeka Thaddues Njoku

Editor information

Editors and affiliations.

Rhinebeck, New York, USA

David A. Leeming

Copyright information

© 2017 Springer-Verlag GmbH Germany

About this entry

Cite this entry.

Njoku, E.T. (2017). Empirical Research. In: Leeming, D. (eds) Encyclopedia of Psychology and Religion. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-27771-9_200051-1

DOI : https://doi.org/10.1007/978-3-642-27771-9_200051-1

Received : 01 April 2017

Accepted : 08 May 2017

Published : 22 May 2017

Publisher Name : Springer, Berlin, Heidelberg

Print ISBN : 978-3-642-27771-9

Online ISBN : 978-3-642-27771-9

eBook Packages : Springer Reference Behavioral Science and Psychology Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences

Penn State University Libraries

Empirical research in the social sciences and education.

Introduction: What is Empirical Research?

Empirical research is based on observed and measured phenomena and derives knowledge from actual experience rather than from theory or belief.

How do you know if a study is empirical? Read the subheadings within the article, book, or report and look for a description of the research "methodology." Ask yourself: Could I recreate this study and test these results?

Key characteristics to look for:

- Specific research questions to be answered

- Definition of the population, behavior, or phenomena being studied

- Description of the process used to study this population or phenomena, including selection criteria, controls, and testing instruments (such as surveys)

Another hint: some scholarly journals use a specific layout, called the "IMRaD" format, to communicate empirical research findings. Such articles typically have 4 components:

- Introduction: sometimes called "literature review" -- what is currently known about the topic -- usually includes a theoretical framework and/or discussion of previous studies

- Methodology: sometimes called "research design" -- how to recreate the study -- usually describes the population, research process, and analytical tools used in the present study

- Results: sometimes called "findings" -- what was learned through the study -- usually appears as statistical data or as substantial quotations from research participants

- Discussion: sometimes called "conclusion" or "implications" -- why the study is important -- usually describes how the research results influence professional practices or future studies

Reading and Evaluating Scholarly Materials

Reading research can be a challenge. However, the tutorials and videos below can help. They explain what scholarly articles look like, how to read them, and how to evaluate them:

- CRAAP Checklist A frequently-used checklist that helps you examine the currency, relevance, authority, accuracy, and purpose of an information source.

- IF I APPLY A newer model of evaluating sources which encourages you to think about your own biases as a reader, as well as concerns about the item you are reading.

- Credo Video: How to Read Scholarly Materials (4 min.)

- Credo Tutorial: How to Read Scholarly Materials

- Credo Tutorial: Evaluating Information

- Credo Video: Evaluating Statistics (4 min.)

- Credo Tutorial: Evaluating for Diverse Points of View

- Last Updated: Aug 13, 2024 3:16 PM

- URL: https://guides.libraries.psu.edu/emp

- Review Article

- Published: 01 June 2023

Data, measurement and empirical methods in the science of science

- Lu Liu 1 , 2 , 3 , 4 ,

- Benjamin F. Jones ORCID: orcid.org/0000-0001-9697-9388 1 , 2 , 3 , 5 , 6 ,

- Brian Uzzi ORCID: orcid.org/0000-0001-6855-2854 1 , 2 , 3 &

- Dashun Wang ORCID: orcid.org/0000-0002-7054-2206 1 , 2 , 3 , 7

Nature Human Behaviour, volume 7, pages 1046–1058 (2023)

- Scientific community

The advent of large-scale datasets that trace the workings of science has encouraged researchers from many different disciplinary backgrounds to turn scientific methods into science itself, cultivating a rapidly expanding ‘science of science’. This Review considers this growing, multidisciplinary literature through the lens of data, measurement and empirical methods. We discuss the purposes, strengths and limitations of major empirical approaches, seeking to increase understanding of the field’s diverse methodologies and expand researchers’ toolkits. Overall, new empirical developments provide enormous capacity to test traditional beliefs and conceptual frameworks about science, discover factors associated with scientific productivity, predict scientific outcomes and design policies that facilitate scientific progress.

Scientific advances are a key input to rising standards of living, health and the capacity of society to confront grand challenges, from climate change to the COVID-19 pandemic 1 , 2 , 3 . A deeper understanding of how science works and where innovation occurs can help us to more effectively design science policy and science institutions, better inform scientists’ own research choices, and create and capture enormous value for science and humanity. Building on these key premises, recent years have witnessed substantial development in the ‘science of science’ 4 , 5 , 6 , 7 , 8 , 9 , which uses large-scale datasets and diverse computational toolkits to unearth fundamental patterns behind scientific production and use.

The idea of turning scientific methods into science itself is long-standing. Since the mid-20th century, researchers from different disciplines have asked central questions about the nature of scientific progress and the practice, organization and impact of scientific research. Building on these rich historical roots, the field of the science of science draws upon many disciplines, ranging from information science to the social, physical and biological sciences to computer science, engineering and design. The science of science closely relates to several strands and communities of research, including metascience, scientometrics, the economics of science, research on research, science and technology studies, the sociology of science, metaknowledge and quantitative science studies 5 . There are noticeable differences between some of these communities, mostly around their historical origins and the initial disciplinary composition of researchers forming these communities. For example, metascience has its origins in the clinical sciences and psychology, and focuses on rigour, transparency, reproducibility and other open science-related practices and topics. The scientometrics community, born in library and information sciences, places a particular emphasis on developing robust and responsible measures and indicators for science. Science and technology studies engage the history of science and technology, the philosophy of science, and the interplay between science, technology and society. The science of science, which has its origins in physics, computer science and sociology, takes a data-driven approach and emphasizes questions on how science works. Each of these communities has made fundamental contributions to understanding science. While they differ in their origins, these differences pale in comparison to the overarching, common interest in understanding the practice of science and its societal impact.

Three major developments have encouraged rapid advances in the science of science. The first is in data 9 : modern databases include millions of research articles, grant proposals, patents and more. This windfall of data traces scientific activity in remarkable detail and at scale. The second development is in measurement: scholars have used data to develop many new measures of scientific activities and examine theories that have long been viewed as important but difficult to quantify. The third development is in empirical methods: thanks to parallel advances in data science, network science, artificial intelligence and econometrics, researchers can study relationships, make predictions and assess science policy in powerful new ways. Together, new data, measurements and methods have revealed fundamental new insights about the inner workings of science and scientific progress itself.

With multiple approaches, however, comes a key challenge. As researchers adhere to norms respected within their disciplines, their methods vary, with results often published in venues with non-overlapping readership, fragmenting research along disciplinary boundaries. This fragmentation challenges researchers’ ability to appreciate and understand the value of work outside of their own discipline, much less to build directly on it for further investigations.

Recognizing these challenges and the rapidly developing nature of the field, this paper reviews the empirical approaches that are prevalent in this literature. We aim to provide readers with an up-to-date understanding of the available datasets, measurement constructs and empirical methodologies, as well as the value and limitations of each. Owing to space constraints, this Review does not cover the full technical details of each method, referring readers to related guides to learn more. Instead, we will emphasize why a researcher might favour one method over another, depending on the research question.

Beyond a positive understanding of science, a key goal of the science of science is to inform science policy. While this Review mainly focuses on empirical approaches, with its core audience being researchers in the field, the studies reviewed are also germane to key policy questions. For example, what is the appropriate scale of scientific investment, in what directions and through what institutions 10 , 11 ? Are public investments in science aligned with public interests 12 ? What conditions produce novel or high-impact science 13 , 14 , 15 , 16 , 17 , 18 , 19 , 20 ? How do the reward systems of science influence the rate and direction of progress 13 , 21 , 22 , 23 , 24 , and what governs scientific reproducibility 25 , 26 , 27 ? How do contributions evolve over a scientific career 28 , 29 , 30 , 31 , 32 , and how may diversity among scientists advance scientific progress 33 , 34 , 35 ? These are among the many questions relevant to science policy 36 , 37 .

Overall, this review aims to facilitate entry to science of science research, expand researcher toolkits and illustrate how diverse research approaches contribute to our collective understanding of science. Section 2 reviews datasets and data linkages. Section 3 reviews major measurement constructs in the science of science. Section 4 considers a range of empirical methods, focusing on one study to illustrate each method and briefly summarizing related examples and applications. Section 5 concludes with an outlook for the science of science.

Historically, data on scientific activities were difficult to collect and were available in limited quantities. Gathering data could involve manually tallying statistics from publications 38 , 39 , interviewing scientists 16 , 40 , or assembling historical anecdotes and biographies 13 , 41 . Analyses were typically limited to a specific domain or group of scientists. Today, massive datasets on scientific production and use are at researchers’ fingertips 42 , 43 , 44 . Armed with big data and advanced algorithms, researchers can now probe questions previously not amenable to quantification and with enormous increases in scope and scale, as detailed below.

Publication datasets cover papers from nearly all scientific disciplines, enabling analyses of both general and domain-specific patterns. Commonly used datasets include the Web of Science (WoS), PubMed, CrossRef, ORCID, OpenCitations, Dimensions and OpenAlex. Datasets incorporating papers’ text (CORE) 45 , 46 , 47 , data entities (DataCite) 48 , 49 and peer review reports (Publons) 33 , 50 , 51 have also become available. These datasets further enable novel measurement, for example, representations of a paper’s content 52 , 53 , novelty 15 , 54 and interdisciplinarity 55 .

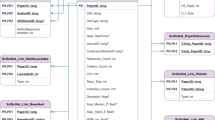

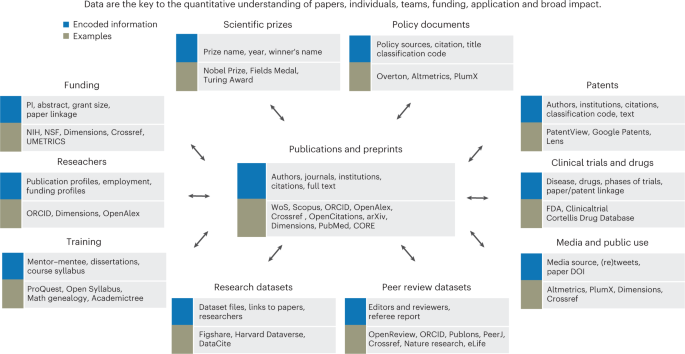

Notably, databases today capture more diverse aspects of science beyond publications, offering a richer and more encompassing view of research contexts and of researchers themselves (Fig. 1 ). For example, some datasets trace research funding to the specific publications these investments support 56 , 57 , allowing high-scale studies of the impact of funding on productivity and the return on public investment. Datasets incorporating job placements 58 , 59 , curriculum vitae 21 , 59 and scientific prizes 23 offer rich quantitative evidence on the social structure of science. Combining publication profiles with mentorship genealogies 60 , 61 , dissertations 34 and course syllabi 62 , 63 provides insights on mentoring and cultivating talent.

This figure presents commonly used data types in science of science research, information contained in each data type and examples of data sources. Datasets in the science of science research have not only grown in scale but have also expanded beyond publications to integrate upstream funding investments and downstream applications that extend beyond science itself.

Finally, today’s scope of data extends beyond science to broader aspects of society. Altmetrics 64 captures news media and social media mentions of scientific articles. Other databases incorporate marketplace uses of science, including through patents 10 , pharmaceutical clinical trials and drug approvals 65 , 66 . Policy documents 67 , 68 help us to understand the role of science in the halls of government 69 and policy making 12 , 68 .

While datasets of the modern scientific enterprise have grown exponentially, they are not without limitations. As is often the case for data-driven research, drawing conclusions from specific data sources requires scrutiny and care. Datasets are typically based on published work, which may favour easy-to-publish topics over important ones (the streetlight effect) 70 , 71 . The publication of negative results is also rare (the file drawer problem) 72 , 73 . Meanwhile, English language publications account for over 90% of articles in major data sources, with limited coverage of non-English journals 74 . Publication datasets may also reflect biases in data collection across research institutions or demographic groups. Despite the open science movement, many datasets require paid subscriptions, which can create inequality in data access. Creating more open datasets for the science of science, such as OpenAlex, may not only improve the robustness and replicability of empirical claims but also increase entry to the field.

As today’s datasets become larger in scale and continue to integrate new dimensions, they offer opportunities to unveil the inner workings and external impacts of science in new ways. They can enable researchers to reach beyond previous limitations while conducting original studies of new and long-standing questions about the sciences.

Measurement

Here we discuss prominent measurement approaches in the science of science, including their purposes and limitations.

Modern publication databases typically include data on which articles and authors cite other papers and scientists. These citation linkages have been used to engage core conceptual ideas in scientific research. Here we consider two common measures based on citation information: citation counts and knowledge flows.

First, citation counts are commonly used indicators of impact. The term ‘indicator’ implies that it only approximates the concept of interest. A citation count is defined as how many times a document is cited by subsequent documents and can proxy for the importance of research papers 75 , 76 as well as patented inventions 77 , 78 , 79 . Rather than treating each citation equally, measures may further weight the importance of each citation, for example by using the citation network structure to produce centrality 80 , PageRank 81 , 82 or Eigenfactor indicators 83 , 84 .
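As a rough illustration of the difference between raw and network-weighted indicators, the following sketch computes plain citation counts and a basic PageRank score on a toy citation list. The function names, the damping value of 0.85 and the five-paper dataset are assumptions made for illustration; they are not taken from the cited studies.

```python
from collections import Counter


def citation_counts(citations):
    """Count how many times each document is cited.

    citations: list of (citing_id, cited_id) pairs.
    """
    return Counter(cited for _, cited in citations)


def pagerank(citations, damping=0.85, iterations=100):
    """Basic PageRank by power iteration on a citation network."""
    nodes = sorted({node for pair in citations for node in pair})
    out_links = {node: [] for node in nodes}
    for citing, cited in citations:
        out_links[citing].append(cited)

    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Papers that cite nothing spread their score uniformly.
        dangling = sum(rank[v] for v in nodes if not out_links[v])
        new_rank = {v: (1 - damping) / n + damping * dangling / n for v in nodes}
        for v in nodes:
            for target in out_links[v]:
                new_rank[target] += damping * rank[v] / len(out_links[v])
        rank = new_rank
    return rank


# Made-up toy data: (citing paper, cited paper).
toy_citations = [("B", "A"), ("C", "A"), ("D", "B"), ("D", "C"), ("E", "D")]
counts = citation_counts(toy_citations)
ranks = pagerank(toy_citations)
```

In this toy network, papers B and C have the same citation count as D, but the weighted score also reflects who is doing the citing, which is the intuition behind centrality-style indicators.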

Citation-based indicators have also faced criticism 84 , 85 . Citation indicators necessarily oversimplify the construct of impact, often ignoring heterogeneity in the meaning and use of a particular reference, the variations in citation practices across fields and institutional contexts, and the potential for reputation and power structures in science to influence citation behaviour 86 , 87 . Researchers have started to understand more nuanced citation behaviours ranging from negative citations 86 to citation context 47 , 88 , 89 . Understanding what a citation actually measures matters in interpreting and applying many research findings in the science of science. Evaluations relying on citation-based indicators rather than expert judgements raise questions regarding misuse 90 , 91 , 92 . Given the importance of developing indicators that can reliably quantify and evaluate science, the scientometrics community has been working to provide guidance for responsible citation practices and assessment 85 .

Second, scientists use citations to trace knowledge flows. Each citation in a paper is a link to specific previous work from which we can proxy how new discoveries draw upon existing ideas 76 , 93 and how knowledge flows between fields of science 94 , 95 , research institutions 96 , regions and nations 97 , 98 , 99 , and individuals 81 . Combinations of citation linkages can also approximate novelty 15 , disruptiveness 17 , 100 and interdisciplinarity 55 , 95 , 101 , 102 . A rapidly expanding body of work further examines citations to scientific articles from other domains (for example, patents, clinical drug trials and policy documents) to understand the applied value of science 10 , 12 , 65 , 66 , 103 , 104 , 105 .
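A minimal sketch of how citation links might be aggregated into field-level knowledge flows is shown below. It assumes each paper carries a single field label, and it reads a citation from paper P to paper Q as knowledge flowing from Q's field to P's field. The names `knowledge_flows`, `cross_field_share` and the four-paper dataset are hypothetical.

```python
from collections import Counter


def knowledge_flows(citations, field_of):
    """Count citations by (source field, receiving field).

    A citation (citing, cited) is read as knowledge flowing from the
    cited paper's field to the citing paper's field.
    """
    flows = Counter()
    for citing, cited in citations:
        flows[(field_of[cited], field_of[citing])] += 1
    return flows


def cross_field_share(citations, field_of):
    """Share of citations that cross a field boundary."""
    if not citations:
        return 0.0
    cross = sum(1 for citing, cited in citations
                if field_of[citing] != field_of[cited])
    return cross / len(citations)


# Made-up toy data for illustration only.
field_of = {"p1": "physics", "p2": "physics", "b1": "biology", "b2": "biology"}
citations = [("p2", "p1"), ("b1", "p1"), ("b2", "b1"), ("b2", "p2")]

flows = knowledge_flows(citations, field_of)
share = cross_field_share(citations, field_of)
```

The same aggregation could be keyed on institutions, regions or individuals instead of fields. The cross-field share is only a crude stand-in for the interdisciplinarity measures in the cited literature.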

Individuals

Analysing individual careers allows researchers to answer questions such as: How do we quantify individual scientific productivity? What is a typical career lifecycle? How are resources and credits allocated across individuals and careers? A scholar’s career can be examined through the papers they publish 30 , 31 , 106 , 107 , 108 , with attention to career progression and mobility, publication counts and citation impact, as well as grant funding 24 , 109 , 110 and prizes 111 , 112 , 113 .

Studies of individual impact focus on output, typically approximated by the number of papers a researcher publishes and citation indicators. A popular measure for individual impact is the h-index 114 , which takes both volume and per-paper impact into consideration. Specifically, a scientist is assigned the largest value h such that they have h papers that were each cited at least h times. Later studies build on the idea of the h-index and propose variants to address its limitations 115 . These variants range from emphasizing highly cited papers in a career 116 , to accounting for field differences 117 and normalizations 118 , to weighting the relative contribution of an individual in collaborative works 119 .
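The definition above translates directly into a few lines of code. The sketch below is one straightforward way to compute it from a list of per-paper citation counts; the function name and the example numbers are illustrative assumptions.

```python
def h_index(citations_per_paper):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations_per_paper, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Made-up example: five papers with these citation counts.
example = [10, 8, 5, 4, 3]
example_h = h_index(example)
```

For the example list, four papers have at least four citations each, but there are not five papers with at least five, so the value is 4. A single very highly cited paper changes the result little, which is one of the limitations the later variants try to address.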

To study dynamics in output over the lifecycle, individuals can be studied according to age, career age or the sequence of publications. A long-standing literature has investigated the relationship between age and the likelihood of outstanding achievement 28 , 106 , 111 , 120 , 121 . Recent studies further decouple the relationship between age, publication volume and per-paper citation, and measure the likelihood of producing highly cited papers in the sequence of works one produces 30 , 31 .

As simple as it sounds, representing careers using publication records is difficult. Collecting the full publication list of a researcher is the foundation for studying individuals yet remains a key challenge, requiring name disambiguation techniques to match specific works to specific researchers. Although algorithms are increasingly capable of identifying millions of career profiles 122 , they vary in accuracy and robustness. ORCID can help to alleviate the problem by offering researchers the opportunity to create, maintain and update individual profiles themselves, and it goes beyond publications to collect broader outputs and activities 123 . A second challenge is survivorship bias. Empirical studies tend to focus on careers that are long enough to afford statistical analyses, which limits the applicability of the findings to scientific careers as a whole. A third challenge is the breadth of scientists’ activities, where focusing on publications ignores other important contributions such as mentorship and teaching, service (for example, refereeing papers, reviewing grant proposals and editing journals) or leadership within their organizations. Although researchers have begun exploring these dimensions by linking individual publication profiles with genealogical databases 61 , 124 , dissertations 34 , grants 109 , curriculum vitae 21 and acknowledgements 125 , scientific careers beyond publication records remain under-studied 126 , 127 . Lastly, citation-based indicators serve only as an approximation of individual performance, with limitations similar to those discussed above. The scientific community has called for more appropriate practices 85 , 128 , ranging from incorporating expert assessment of research contributions to broadening the measures of impact beyond publications.

Teams

Over many decades, science has exhibited a substantial and steady shift away from solo authorship towards coauthorship, especially among highly cited works 18 , 129 , 130 . In light of this shift, a research field, the science of team science 131 , 132 , has emerged to study the mechanisms that facilitate or hinder the effectiveness of teams. Team size can be proxied by the number of coauthors on a paper, which has been shown to predict distinctive types of advance: whereas larger teams tend to develop ideas, smaller teams tend to disrupt current ways of thinking 17 . Team characteristics can be inferred from coauthors’ backgrounds 133 , 134 , 135 , allowing quantification of a team’s diversity in terms of field, age, gender or ethnicity. Collaboration networks based on coauthorship 130 , 136 , 137 , 138 , 139 offer nuanced network-based indicators to understand individual and institutional collaborations.
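To make the coauthorship proxies concrete, the sketch below derives team sizes and a weighted collaboration network from author lists. It assumes author names are already disambiguated; the function names and the three toy papers are hypothetical.

```python
from collections import Counter
from itertools import combinations


def team_sizes(papers):
    """Team size proxied by the number of distinct coauthors per paper."""
    return {paper_id: len(set(authors)) for paper_id, authors in papers.items()}


def coauthor_edges(papers):
    """Weighted coauthorship network: number of joint papers per author pair."""
    edges = Counter()
    for authors in papers.values():
        for pair in combinations(sorted(set(authors)), 2):
            edges[pair] += 1
    return edges


def collaborator_counts(edges):
    """Number of distinct collaborators per author (network degree)."""
    neighbours = {}
    for a, b in edges:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    return {author: len(others) for author, others in neighbours.items()}


# Made-up toy data: paper id -> author list.
papers = {
    "paper1": ["Ada", "Ben", "Chen"],
    "paper2": ["Ada", "Ben"],
    "paper3": ["Dana"],
}
sizes = team_sizes(papers)
edges = coauthor_edges(papers)
degrees = collaborator_counts(edges)
```

Solo authors such as the hypothetical "Dana" never appear in the network, a small reminder that coauthorship data capture only part of how research is actually organized.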

However, there are limitations to using coauthorship alone to study teams 132 . First, coauthorship can obscure individual roles 140 , 141 , 142 , which has prompted institutional responses to help to allocate credit, including authorship order and individual contribution statements 56 , 143 . Second, coauthorship does not reflect the complex dynamics and interactions between team members that are often instrumental for team success 53 , 144 . Third, collaborative contributions can extend beyond coauthorship in publications to include members of a research laboratory 145 or co-principal investigators (co-PIs) on a grant 146 . Initiatives such as CRediT may help to address some of these issues by recording detailed roles for each contributor 147 .

Institutions

Research institutions, such as departments, universities, national laboratories and firms, encompass wider groups of researchers and their corresponding outputs. Institutional membership can be inferred from affiliations listed on publications or patents 148 , 149 , and the output of an institution can be aggregated over all its affiliated researchers 150 . Institutional current research information systems (CRIS) contain more comprehensive records of employees’ research outputs and activities.

Some research questions consider the institution as a whole, investigating the returns to research and development investment 104 , inequality of resource allocation 22 and the flow of scientists 21 , 148 , 149 . Other questions focus on institutional structures as sources of research productivity by looking into the role of peer effects 125 , 151 , 152 , 153 , how institutional policies impact research outcomes 154 , 155 and whether interdisciplinary efforts foster innovation 55 . Institution-oriented measurement faces limitations similar to those of analyses of individuals and teams, including name disambiguation for a given institution and the limited capacity of formal publication records to characterize the full range of relevant institutional outcomes. It is also unclear how to allocate credit among multiple institutions associated with a paper. Moreover, relevant institutional employees extend beyond publishing researchers: interns, technicians and administrators all contribute to research endeavours 130 .

In sum, measurements allow researchers to quantify scientific production and use across numerous dimensions, but they also raise questions of construct validity: Does the proposed metric really reflect what we want to measure? Testing the construct’s validity is important, as is understanding a construct’s limits. Where possible, using alternative measurement approaches, or qualitative methods such as interviews and surveys, can improve measurement accuracy and the robustness of findings.

Empirical methods

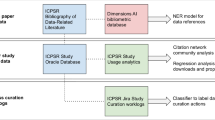

In this section, we review two broad categories of empirical approaches (Table 1 ), each with distinctive goals: (1) to discover, estimate and predict empirical regularities; and (2) to identify causal mechanisms. For each method, we give a concrete example to help to explain how the method works, summarize related work for interested readers, and discuss contributions and limitations.

Descriptive and predictive approaches

Empirical regularities and generalizable facts.

The discovery of empirical regularities in science has had a key role in driving conceptual developments and the directions of future research. By observing empirical patterns at scale, researchers unveil central facts that shape science and present core features that theories of scientific progress and practice must explain. For example, consider citation distributions. de Solla Price first proposed that citation distributions are fat-tailed 39 , indicating that a few papers have extremely high citations while most papers have relatively few or even no citations at all. de Solla Price proposed that the citation distribution follows a power law; researchers have since refined this view to show that the distribution appears log-normal, a nearly universal regularity across time and fields 156 , 157 . The fat-tailed nature of citation distributions and its universality across the sciences has in turn sparked substantial theoretical work that seeks to explain this key empirical regularity 20 , 156 , 158 , 159 .
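To give a feel for what "fat-tailed" means in practice, the sketch below draws synthetic values from a log-normal distribution, recovers its parameters from the logarithms, and measures how much of the total is held by the top 1% of items. The data are simulated rather than real citation counts (which are integers and include zeros), and the parameter values 1.5 and 1.2 are arbitrary assumptions.

```python
import math
import random


def lognormal_fit(values):
    """Estimate log-normal parameters (mu, sigma) from positive values."""
    logs = [math.log(v) for v in values if v > 0]
    mu = sum(logs) / len(logs)
    sigma = math.sqrt(sum((x - mu) ** 2 for x in logs) / len(logs))
    return mu, sigma


def top_share(values, fraction=0.01):
    """Share of the total held by the top `fraction` of items."""
    ranked = sorted(values, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)


# Synthetic "citation-like" values; seeded for reproducibility.
rng = random.Random(0)
synthetic = [rng.lognormvariate(1.5, 1.2) for _ in range(20000)]

mu_hat, sigma_hat = lognormal_fit(synthetic)
share_top_1pct = top_share(synthetic)
mean_value = sum(synthetic) / len(synthetic)
median_value = sorted(synthetic)[len(synthetic) // 2]
```

In a distribution of this kind the mean sits well above the median and a small fraction of items accounts for a disproportionate share of the total, which is the qualitative pattern described above. Distinguishing a log-normal from a power law on real data requires more careful statistical testing than this sketch provides.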

Empirical regularities are often surprising and can contest previous beliefs of how science works. For example, it has been shown that the age distribution of great achievements peaks in middle age across a wide range of fields 107 , 121 , 160 , rejecting the common belief that young scientists typically drive breakthroughs in science. A closer look at the individual careers also indicates that productivity patterns vary widely across individuals 29 . Further, a scholar’s highest-impact papers come at a remarkably constant rate across the sequence of their work 30 , 31 .

The discovery of empirical regularities has had important roles in shaping beliefs about the nature of science 10 , 45 , 161 , 162 , sources of breakthrough ideas 15 , 163 , 164 , 165 , scientific careers 21 , 29 , 126 , 127 , the network structure of ideas and scientists 23 , 98 , 136 , 137 , 138 , 139 , 166 , gender inequality 57 , 108 , 126 , 135 , 143 , 167 , 168 , and many other areas of interest to scientists and science institutions 22 , 47 , 86 , 97 , 102 , 105 , 134 , 169 , 170 , 171 . At the same time, care must be taken to ensure that findings are not merely artefacts due to data selection or inherent bias. To differentiate meaningful patterns from spurious ones, it is important to stress test the findings through different selection criteria or across non-overlapping data sources.

Regression analysis

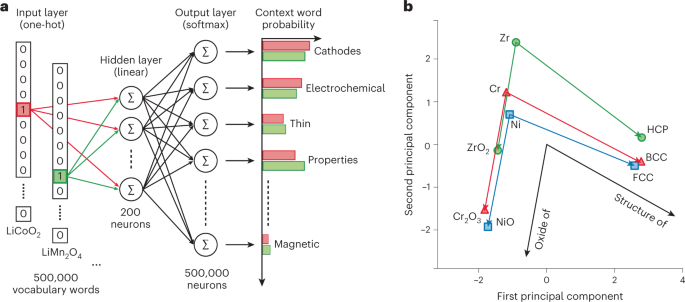

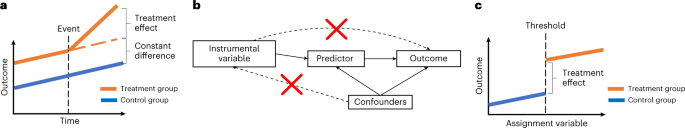

When investigating correlations among variables, a classic method is regression, which estimates how one set of variables explains variation in an outcome of interest. Regression can be used to test explicit hypotheses or predict outcomes. For example, researchers have investigated whether a paper’s novelty predicts its citation impact 172 . By adding control variables to the regression, one can further examine the robustness of the focal relationship.
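The role of control variables can be illustrated with a small ordinary least squares (OLS) sketch. The data below are fabricated so that the outcome equals 1 + 2 × novelty + 0.5 × team size exactly. The variable names, the numbers and the `ols` helper are assumptions for illustration and do not reproduce any cited analysis.

```python
def ols(rows, y):
    """Ordinary least squares via the normal equations.

    rows: list of predictor lists (without intercept); y: list of outcomes.
    Returns [intercept, coef_1, coef_2, ...].
    """
    X = [[1.0, *row] for row in rows]  # prepend intercept column
    k = len(X[0])
    # Augmented system [X'X | X'y].
    A = [
        [sum(r[i] * r[j] for r in X) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Fabricated toy data: impact = 1 + 2 * novelty + 0.5 * team_size.
novelty = [0.1, 0.4, 0.2, 0.8, 0.5, 0.9]
team_size = [2, 3, 5, 1, 4, 2]
impact = [1 + 2 * n + 0.5 * t for n, t in zip(novelty, team_size)]

# Focal relationship only, then with the control variable added.
without_control = ols([[n] for n in novelty], impact)
with_control = ols([[n, t] for n, t in zip(novelty, team_size)], impact)
```

Because novelty and team size are correlated in this toy sample, the novelty coefficient estimated without the control differs markedly from the value of 2 built into the data, while the model with the control recovers it. Real analyses would also report standard errors, which this sketch omits.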