Statistical Analysis in Research: Meaning, Methods and Types


The scientific method is an empirical approach to acquiring new knowledge by making skeptical observations and analyses to develop a meaningful interpretation. It is the basis of research and the primary pillar of modern science. Researchers seek to understand the relationships between factors associated with the phenomena of interest. In some cases, research works with datasets so large that it is difficult to observe or manipulate each data point. As a result, statistical analysis in research becomes a means of evaluating relationships and interconnections between variables, with tools and analytical techniques for working with large data. Because researchers can use statistical power analysis to estimate the probability of detecting an effect in such an investigation, they can judge in advance how reliable the results are likely to be. Hence, statistical analysis in research simplifies analysis by focusing on the quantifiable aspects of phenomena.

What is Statistical Analysis in Research? A Simplified Definition

Statistical analysis uses quantitative data to investigate patterns, relationships, and trends to understand real-life and simulated phenomena. The approach is a key analytical tool in various fields, including academia, business, government, and science in general. This definition of statistical analysis in research implies that the primary focus of the scientific method is quantitative research. Notably, the investigator targets the constructs developed from general concepts, because researchers can quantify their hypotheses and present their findings in simple statistics.

When a business needs to learn how to improve its product, it collects statistical data about the production line and customer satisfaction. Qualitative data is valuable and often identifies the most common themes in the stakeholders’ responses. Quantitative data, on the other hand, establishes a level of importance, comparing the themes based on their criticality to the affected persons. For instance, descriptive statistics highlight tendency, frequency, variation, and position information. While the mean shows the average rating respondents give a certain aspect, the variance indicates how widely the responses are spread around that average. In any case, statistical analysis creates simplified concepts used to understand the phenomenon under investigation. It is also a key component in academia as the primary approach to data representation, especially in research projects, term papers and dissertations.

Most Useful Statistical Analysis Methods in Research

Using statistical analysis methods in research is inevitable, especially in academic assignments, projects, and term papers. It’s always advisable to seek assistance from your professor, or to try research paper writing by CustomWritings, before you start your academic project or write the statistical analysis in a research paper. Consulting an expert when developing a topic for your thesis or short mid-term assignment increases your chances of getting a better grade. Most importantly, it improves your understanding of research methods with insights on how to enhance the originality and quality of personalized essays. Professional writers can also help select the most suitable statistical analysis method for your thesis, influencing the choice of data and type of study.

Descriptive Statistics

Descriptive statistics is a statistical method that summarizes quantitative figures to reveal critical details about the sample and population. A descriptive statistic is a figure that quantifies a specific aspect of the data. For instance, instead of analyzing the behavior of a thousand students one by one, a researcher can identify the most common actions among them. By doing this, the person utilizes statistical analysis in research, particularly descriptive statistics.

- Measures of central tendency. Central tendency measures are the mean, mode, and median, or the averages denoting specific data points. They assess the centrality of the probability distribution, hence the name. These measures describe the data in relation to the center.

- Measures of frequency. These statistics document the number of times an event happens. They include frequency, count, ratios, rates, and proportions. Measures of frequency can also show how often a score occurs.

- Measures of dispersion/variation. These descriptive statistics assess the intervals between the data points. The objective is to view the spread or disparity between the specific inputs. Measures of variation include the standard deviation, variance, and range. They indicate how the spread may affect other statistics, such as the mean.

- Measures of position. Sometimes researchers can investigate relationships between scores. Measures of position, such as percentiles, quartiles, and ranks, demonstrate this association. They are often useful when comparing the data to normalized information.
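The four groups of measures listed above can be illustrated with a short sketch. This is only a minimal example using Python's standard `statistics` module; the `scores` list is hypothetical data invented for illustration and does not come from any study.

```python
import statistics
from collections import Counter

# Hypothetical sample: exam scores for ten students (illustrative only).
scores = [62, 70, 70, 75, 78, 80, 84, 88, 91, 95]

# Measures of central tendency
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Measures of frequency: how often each score occurs
counts = Counter(scores)

# Measures of dispersion/variation
value_range = max(scores) - min(scores)
variance = statistics.variance(scores)  # sample variance (n - 1 denominator)
std_dev = statistics.stdev(scores)      # sample standard deviation

# Measures of position: the three quartile cut points
q1, q2, q3 = statistics.quantiles(scores, n=4)

print(f"mean={mean}, median={median}, mode={mode}")
print(f"range={value_range}, variance={variance:.2f}, sd={std_dev:.2f}")
print(f"quartiles: Q1={q1}, Q2={q2}, Q3={q3}")
```

Note that `statistics.variance` and `statistics.stdev` compute sample statistics; `pvariance` and `pstdev` would be the choice if the data were a whole population.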

Inferential Statistics

Inferential statistics is critical in statistical analysis in quantitative research. This approach uses statistical tests to draw conclusions about the population from a sample. Examples of inferential tests include t-tests, F-tests, ANOVA, the Mann-Whitney U test, and the Wilcoxon W test; each yields a p-value that is used to judge statistical significance.
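As a rough illustration of what one of these tests computes, the sketch below derives the statistic for Student's unpaired t-test by hand. It assumes equal variances in the two groups, and the function name `pooled_t_statistic` and both samples are hypothetical. Converting the statistic into a p-value needs the t distribution, which the standard library does not provide, so a statistics package would normally be used for that step.

```python
import math
import statistics


def pooled_t_statistic(group_a, group_b):
    """Return (t, degrees_of_freedom) for Student's unpaired t-test.

    Assumes both groups are independent samples with equal variances.
    """
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)

    df = n_a + n_b - 2
    # Pooled variance: the two sample variances weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / standard_error, df


# Hypothetical measurements for two independent groups (illustrative only).
treatment = [5.1, 4.9, 5.6, 5.8, 6.0]
control = [4.2, 4.8, 4.5, 5.0, 4.4]

t_stat, df = pooled_t_statistic(treatment, control)
print(f"t = {t_stat:.3f} with {df} degrees of freedom")
```

A larger absolute value of t, relative to the t distribution with the given degrees of freedom, corresponds to a smaller p-value.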

Common Statistical Analysis in Research Types

Although inferential and descriptive statistics can be classified as types of statistical analysis in research, they are mostly considered analytical methods. Types of research are distinguishable by the differences in the methodology employed in analyzing, assembling, classifying, manipulating, and interpreting data. The categories may also depend on the type of data used.

Predictive Analysis

Predictive research analyzes past and present data to assess trends and predict future events. An excellent example of predictive analysis is a market survey that seeks to understand customers’ spending habits to weigh the possibility of a repeat or future purchase. Such studies assess the likelihood of an action based on trends.

Prescriptive Analysis

On the other hand, a prescriptive analysis targets likely courses of action. It’s decision-making research designed to identify optimal solutions to a problem. Its primary objective is to test or assess alternative measures.

Causal Analysis

Causal research investigates the explanation behind the events. It explores the relationship between factors for causation. Thus, researchers use causal analyses to analyze root causes, possible problems, and unknown outcomes.

Mechanistic Analysis

This type of research investigates the mechanism of action. Instead of focusing only on the causes or possible outcomes, researchers may seek an understanding of the processes involved. In such cases, they use mechanistic analyses to document, observe, or learn the mechanisms involved.

Exploratory Data Analysis

Similarly, an exploratory study is extensive with a wider scope and minimal limitations. This type of research seeks insight into the topic of interest. An exploratory researcher does not try to generalize or predict relationships. Instead, they look for information about the subject before conducting an in-depth analysis.

The Importance of Statistical Analysis in Research

Statistical analysis provides critical information for decision-making. Decision-makers require past trends and predictive assumptions to inform their actions. In most cases, the raw data is too complex to yield meaningful inferences on its own. Statistical tools for analyzing such details help save time and money, deriving only valuable information for assessment. An excellent example of statistical analysis in research is a randomized controlled trial (RCT) for the Covid-19 vaccine. You can download a sample of such a document online to understand the significance such analyses have to the stakeholders. A vaccine RCT assesses the effectiveness, side effects, duration of protection, and other benefits. Hence, statistical analysis in research is a helpful tool for understanding data.


Copyright © 2022 Statistics and Data


A generalization to the log-inverse Weibull distribution and its applications in cancer research

In this paper we consider a generalization of a log-transformed version of the inverse Weibull distribution. Several theoretical properties of the distribution are studied in detail including expressions for i...


Approximations of conditional probability density functions in Lebesgue spaces via mixture of experts models

Mixture of experts (MoE) models are widely applied for conditional probability density estimation problems. We demonstrate the richness of the class of MoE models by proving denseness results in Lebesgue space...

Structural properties of generalised Planck distributions

A family of generalised Planck (GP) laws is defined and its structural properties explored. Sometimes subject to parameter restrictions, a GP law is a randomly scaled gamma law; it arises as the equilibrium la...

New class of Lindley distributions: properties and applications

A new generalized class of Lindley distribution is introduced in this paper. This new class is called the T-Lindley{Y} class of distributions, and it is generated by using the quantile functions of uniform, expon...

Tolerance intervals in statistical software and robustness under model misspecification

A tolerance interval is a statistical interval that covers at least 100ρ% of the population of interest with a 100(1−α)% confidence, where ρ and α are pre-specified values in (0, 1). In many scientific fields, su...

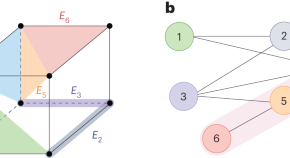

Combining assumptions and graphical network into gene expression data analysis

Analyzing gene expression data rigorously requires taking assumptions into consideration but also relies on using information about network relations that exist among genes. Combining these different elements ...

A comparison of zero-inflated and hurdle models for modeling zero-inflated count data

Counts data with excessive zeros are frequently encountered in practice. For example, the number of health services visits often includes many zeros representing the patients with no utilization during a follo...

A general stochastic model for bivariate episodes driven by a gamma sequence

We propose a new stochastic model describing the joint distribution of (X, N), where N is a counting variable while X is the sum of N independent gamma random variables. We present the main properties of this gene...

A flexible multivariate model for high-dimensional correlated count data

We propose a flexible multivariate stochastic model for over-dispersed count data. Our methodology is built upon mixed Poisson random vectors (Y_1,…,Y_d), where the {Y_i} are conditionally independent Poisson random...

Generalized fiducial inference on the mean of zero-inflated Poisson and Poisson hurdle models

Zero-inflated and hurdle models are widely applied to count data possessing excess zeros, where they can simultaneously model the process from how the zeros were generated and potentially help mitigate the eff...

Multivariate distributions of correlated binary variables generated by pair-copulas

Correlated binary data are prevalent in a wide range of scientific disciplines, including healthcare and medicine. The generalized estimating equations (GEEs) and the multivariate probit (MP) model are two of ...

On two extensions of the canonical Feller–Spitzer distribution

We introduce two extensions of the canonical Feller–Spitzer distribution from the class of Bessel densities, which comprise two distinct stochastically decreasing one-parameter families of positive absolutely ...

A new trivariate model for stochastic episodes

We study the joint distribution of stochastic events described by (X, Y, N), where N has a 1-inflated (or deflated) geometric distribution and X, Y are the sum and the maximum of N exponential random variables. Mod...

A flexible univariate moving average time-series model for dispersed count data

Al-Osh and Alzaid (1988) consider a Poisson moving average (PMA) model to describe the relation among integer-valued time series data; this model, however, is constrained by the underlying equi-dispersion assumpt...

Spatio-temporal analysis of flood data from South Carolina

To investigate the relationship between flood gage height and precipitation in South Carolina from 2012 to 2016, we built a conditional autoregressive (CAR) model using a Bayesian hierarchical framework. This ...

Affine-transformation invariant clustering models

We develop a cluster process which is invariant with respect to unknown affine transformations of the feature space without knowing the number of clusters in advance. Specifically, our proposed method can iden...

Distributions associated with simultaneous multiple hypothesis testing

We develop the distribution for the number of hypotheses found to be statistically significant using the rule from Simes (Biometrika 73: 751–754, 1986) for controlling the family-wise error rate (FWER). We fin...

New families of bivariate copulas via unit weibull distortion

This paper introduces a new family of bivariate copulas constructed using a unit Weibull distortion. Existing copulas play the role of the base or initial copulas that are transformed or distorted into a new f...

Generalized logistic distribution and its regression model

A new generalized asymmetric logistic distribution is defined. In some cases, existing three parameter distributions provide poor fit to heavy tailed data sets. The proposed new distribution consists of only t...

The spherical-Dirichlet distribution

Today, data mining and gene expressions are at the forefront of modern data analysis. Here we introduce a novel probability distribution that is applicable in these fields. This paper develops the proposed sph...

Item fit statistics for Rasch analysis: can we trust them?

To compare fit statistics for the Rasch model based on estimates of unconditional or conditional response probabilities.

Exact distributions of statistics for making inferences on mixed models under the default covariance structure

At this juncture when mixed models are heavily employed in applications ranging from clinical research to business analytics, the purpose of this article is to extend the exact distributional result of Wald (A...

A new discrete pareto type (IV) model: theory, properties and applications

Discrete analogue of a continuous distribution (especially in the univariate domain) is not new in the literature. The work of discretizing continuous distributions begun with the paper by Nakagawa and Osaki (197...

Density deconvolution for generalized skew-symmetric distributions

The density deconvolution problem is considered for random variables assumed to belong to the generalized skew-symmetric (GSS) family of distributions. The approach is semiparametric in that the symmetric comp...

The unifed distribution

We introduce a new distribution with support on (0,1) called unifed. It can be used as the response distribution for a GLM and it is suitable for data aggregation. We make a comparison to the beta regression. ...

On Burr III Marshal Olkin family: development, properties, characterizations and applications

In this paper, a flexible family of distributions with unimodel, bimodal, increasing, increasing and decreasing, inverted bathtub and modified bathtub hazard rate called Burr III-Marshal Olkin-G (BIIIMO-G) fam...

The linearly decreasing stress Weibull (LDSWeibull): a new Weibull-like distribution

Motivated by an engineering pullout test applied to a steel strip embedded in earth, we show how the resulting linearly decreasing force leads naturally to a new distribution, if the force under constant stress i...

Meta analysis of binary data with excessive zeros in two-arm trials

We present a novel Bayesian approach to random effects meta analysis of binary data with excessive zeros in two-arm trials. We discuss the development of likelihood accounting for excessive zeros, the prior, a...

On (p_1,…,p_k)-spherical distributions

The class of (p_1,…,p_k)-spherical probability laws and a method of simulating random vectors following such distributions are introduced using a new stochastic vector representation. A dynamic geometric disintegra...

A new class of survival distribution for degradation processes subject to shocks

Many systems experience gradual degradation while simultaneously being exposed to a stream of random shocks of varying magnitudes that eventually cause failure when a shock exceeds the residual strength of the...

A new extended normal regression model: simulations and applications

Various applications in natural science require models more accurate than well-known distributions. In this context, several generators of distributions have been recently proposed. We introduce a new four-par...

Multiclass analysis and prediction with network structured covariates

Technological advances associated with data acquisition are leading to the production of complex structured data sets. The recent development on classification with multiclass responses makes it possible to in...

High-dimensional star-shaped distributions

Stochastic representations of star-shaped distributed random vectors having heavy or light tail density generating function g are studied for increasing dimensions along with corresponding geometric measure repre...

A unified complex noncentral Wishart type distribution inspired by massive MIMO systems

The eigenvalue distributions from a complex noncentral Wishart matrix S = X^H X has been the subject of interest in various real world applications, where X is assumed to be complex matrix variate normally distribute...

Particle swarm based algorithms for finding locally and Bayesian D-optimal designs

When a model-based approach is appropriate, an optimal design can guide how to collect data judiciously for making reliable inference at minimal cost. However, finding optimal designs for a statistical model w...

Admissible Bernoulli correlations

A multivariate symmetric Bernoulli distribution has marginals that are uniform over the pair {0,1}. Consider the problem of sampling from this distribution given a prescribed correlation between each pair of v...

On p-generalized elliptical random processes

We introduce rank-k-continuous axis-aligned p-generalized elliptically contoured distributions and study their properties such as stochastic representations, moments, and density-like representations. Applying th...

Parameters of stochastic models for electroencephalogram data as biomarkers for child’s neurodevelopment after cerebral malaria

The objective of this study was to test statistical features from the electroencephalogram (EEG) recordings as predictors of neurodevelopment and cognition of Ugandan children after coma due to cerebral malari...

A new generalization of generalized half-normal distribution: properties and regression models

In this paper, a new extension of the generalized half-normal distribution is introduced and studied. We assess the performance of the maximum likelihood estimators of the parameters of the new distribution vi...

Analytical properties of generalized Gaussian distributions

The family of Generalized Gaussian (GG) distributions has received considerable attention from the engineering community, due to the flexible parametric form of its probability density function, in modeling ma...

A new Weibull-X family of distributions: properties, characterizations and applications

We propose a new family of univariate distributions generated from the Weibull random variable, called a new Weibull-X family of distributions. Two special sub-models of the proposed family are presented and t...

The transmuted geometric-quadratic hazard rate distribution: development, properties, characterizations and applications

We propose a five parameter transmuted geometric quadratic hazard rate (TG-QHR) distribution derived from mixture of quadratic hazard rate (QHR), geometric and transmuted distributions via the application of t...

A nonparametric approach for quantile regression

Quantile regression estimates conditional quantiles and has wide applications in the real world. Estimating high conditional quantiles is an important problem. The regular quantile regression (QR) method often...

Mean and variance of ratios of proportions from categories of a multinomial distribution

Ratio distribution is a probability distribution representing the ratio of two random variables, each usually having a known distribution. Currently, there are results when the random variables in the ratio fo...

The power-Cauchy negative-binomial: properties and regression

We propose and study a new compounded model to extend the half-Cauchy and power-Cauchy distributions, which offers more flexibility in modeling lifetime data. The proposed model is analytically tractable and c...

Families of distributions arising from the quantile of generalized lambda distribution

In this paper, the class of T-R{generalized lambda} families of distributions based on the quantile of generalized lambda distribution has been proposed using the T-R{Y} framework. In the development of the T-R{

Risk ratios and Scanlan’s HRX

Risk ratios are distribution function tail ratios and are widely used in health disparities research. Let A and D denote advantaged and disadvantaged populations with cdfs F ...

Joint distribution of k-tuple statistics in zero-one sequences of Markov-dependent trials

We consider a sequence of n, n ≥ 3, zero (0) - one (1) Markov-dependent trials. We focus on k-tuples of 1s; i.e. runs of 1s of length at least equal to a fixed integer number k, 1 ≤ k ≤ n. The statistics denoting the n...

Quantile regression for overdispersed count data: a hierarchical method

Generalized Poisson regression is commonly applied to overdispersed count data, and focused on modelling the conditional mean of the response. However, conditional mean regression models may be sensitive to re...

Describing the Flexibility of the Generalized Gamma and Related Distributions

The generalized gamma (GG) distribution is a widely used, flexible tool for parametric survival analysis. Many alternatives and extensions to this family have been proposed. This paper characterizes the flexib...

- ISSN: 2195-5832 (electronic)


Statistics articles from across Nature Portfolio

Statistics is the application of mathematical concepts to understanding and analysing large collections of data. A central tenet of statistics is to describe the variations in a data set or population using probability distributions. This analysis aids understanding of what underlies these variations and enables predictions of future changes.

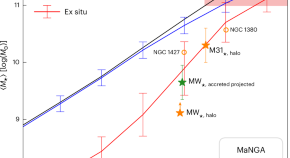

Machine learning reveals the merging history of nearby galaxies

A probabilistic machine learning method trained on cosmological simulations is used to determine whether stars in 10,000 nearby galaxies formed internally or were accreted from other galaxies during merging events. The model predicts that only 20% of the stellar mass in present day galaxies is the result of past mergers.

Latest Research and Reviews

Effective weight optimization strategy for precise deep learning forecasting models using EvoLearn approach

- Ashima Anand

- Rajender Parsad

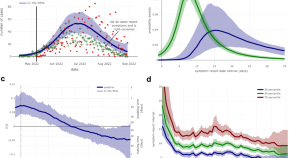

Quantification of the time-varying epidemic growth rate and of the delays between symptom onset and presenting to healthcare for the mpox epidemic in the UK in 2022

- Robert Hinch

- Jasmina Panovska-Griffiths

- Christophe Fraser

Investigating the causal relationship between wealth index and ICT skills: a mediation analysis approach

- Tarikul Islam

- Nabil Ahmed Uthso

Statistical analysis of the effect of socio-political factors on individual life satisfaction

- Ayman Alzaatreh

Improving the explainability of autoencoder factors for commodities through forecast-based Shapley values

- Roy Cerqueti

- Antonio Iovanella

- Saverio Storani

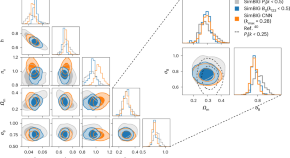

Cosmological constraints from non-Gaussian and nonlinear galaxy clustering using the SimBIG inference framework

By extracting non-Gaussian cosmological information on galaxy clustering at nonlinear scales, a framework for cosmic inference (SimBIG) provides more precise constraints for testing cosmological models.

- ChangHoon Hahn

- Pablo Lemos

- David Spergel

News and Comment

Efficient learning of many-body systems

The Hamiltonian describing a quantum many-body system can be learned using measurements in thermal equilibrium. Now, a learning algorithm applicable to many natural systems has been found that requires exponentially fewer measurements than existing methods.

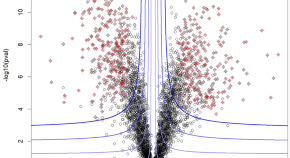

Fudging the volcano-plot without dredging the data

Selecting omic biomarkers using both their effect size and their differential status significance (i.e., selecting the “volcano-plot outer spray”) has long been equally biologically relevant and statistically troublesome. However, recent proposals are paving the way to resolving this dilemma.

- Thomas Burger

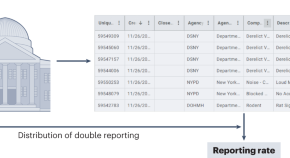

Disentangling truth from bias in naturally occurring data

A technique that leverages duplicate records in crowdsourcing data could help to mitigate the effects of biases in research and services that are dependent on government records.

- Daniel T. O’Brien

Sciama’s argument on life in a random universe and distinguishing apples from oranges

Dennis Sciama has argued that the existence of life depends on many quantities—the fundamental constants—so in a random universe life should be highly unlikely. However, without full knowledge of these constants, his argument implies a universe that could appear to be ‘intelligently designed’.

- Zhi-Wei Wang

- Samuel L. Braunstein

A method for generating constrained surrogate power laws

A paper in Physical Review X presents a method for numerically generating data sequences that are as likely to be observed under a power law as a given observed dataset.

- Zoe Budrikis


- Indian J Crit Care Med

- v.25(Suppl 2); 2021 May

An Introduction to Statistics: Choosing the Correct Statistical Test

Priya Ranganathan

1 Department of Anaesthesiology, Critical Care and Pain, Tata Memorial Centre, Homi Bhabha National Institute, Mumbai, Maharashtra, India

The choice of statistical test used for analysis of data from a research study is crucial in interpreting the results of the study. This article gives an overview of the various factors that determine the selection of a statistical test and lists some statistical tests used in common practice.

How to cite this article: Ranganathan P. An Introduction to Statistics: Choosing the Correct Statistical Test. Indian J Crit Care Med 2021;25(Suppl 2):S184–S186.

In a previous article in this series, we looked at different types of data and ways to summarise them. 1 At the end of the research study, statistical analyses are performed to test the hypothesis and either support or refute it. The choice of statistical test must be made carefully, since the use of incorrect tests could lead to misleading conclusions. Some key questions help us to decide the type of statistical test to be used for analysis of study data. 2

What is the Research Hypothesis?

Sometimes, a study may just describe the characteristics of the sample, e.g., a prevalence study. Here, the statistical analysis involves only descriptive statistics. For example, Sridharan et al. aimed to analyze the clinical profile, species distribution, and susceptibility pattern of patients with invasive candidiasis. 3 They used descriptive statistics to express the characteristics of their study sample, including mean (and standard deviation) for normally distributed data, median (with interquartile range) for skewed data, and percentages for categorical data.

Studies may be conducted to test a hypothesis and derive inferences from the sample results to the population. This is known as inferential statistics. The goal of inferential statistics may be to assess differences between groups (comparison), establish an association between two variables (correlation), predict one variable from another (regression), or look for agreement between measurements (agreement). Studies may also look at time to a particular event, analyzed using survival analysis.

Are the Comparisons Matched (Paired) or Unmatched (Unpaired)?

Observations made on the same individual (before–after or comparing two sides of the body) are usually matched or paired. Comparisons made between individuals are usually unpaired or unmatched. Data are considered paired if the values in one set of data are likely to be influenced by the other set (as can happen in before and after readings from the same individual). Examples of paired data include serial measurements of procalcitonin in critically ill patients or comparison of pain relief during sequential administration of different analgesics in a patient with osteoarthritis.

What is the Type of Data Being Measured?

The test chosen to analyze data will depend on whether the data are categorical (and whether nominal or ordinal) or numerical (and whether skewed or normally distributed). Tests used to analyze normally distributed data are known as parametric tests; each has a nonparametric (distribution-free) counterpart for data that are not normally distributed. 4 Parametric tests assume that the sample data are normally distributed and have the same characteristics as the population; nonparametric tests make no such assumptions. When their assumptions are met, parametric tests are more powerful and have a greater ability to pick up differences between groups (where they exist); in contrast, nonparametric tests are less efficient at identifying significant differences. Time-to-event data require a special type of analysis, known as survival analysis.

How Many Measurements are Being Compared?

The choice of the test differs depending on whether two or more than two measurements are being compared. This includes more than two groups (unmatched data) or more than two measurements in a group (matched data).

Tests for Comparison

Table 1 lists the tests commonly used for comparing unpaired data, depending on the number of groups and type of data. As an example, Megahed and colleagues evaluated the role of early bronchoscopy in mechanically ventilated patients with aspiration pneumonitis. 5 Patients were randomized to receive either early bronchoscopy or conventional treatment. Between groups, comparisons were made using the unpaired t-test for normally distributed continuous variables, the Mann–Whitney U test for non-normal continuous variables, and the chi-square test for categorical variables. Chowhan et al. compared the efficacy of left ventricular outflow tract velocity time integral (LVOTVTI) and carotid artery velocity time integral (CAVTI) as predictors of fluid responsiveness in patients with sepsis and septic shock. 6 Patients were divided into three groups: sepsis, septic shock, and controls. Since there were three groups, comparisons of numerical variables were done using analysis of variance (for normally distributed data) or Kruskal–Wallis test (for skewed data).

Table 1: Tests for comparison of unpaired data

| Type of data | Two groups | More than two groups |
|---|---|---|
| Nominal | Chi-square test or Fisher's exact test | Chi-square test or Fisher's exact test |
| Ordinal or skewed | Mann–Whitney U-test (Wilcoxon rank sum test) | Kruskal–Wallis test |
| Normally distributed | Unpaired t-test | Analysis of variance (ANOVA) |

A common error is to use multiple unpaired t-tests for comparing more than two groups; i.e., for a study with three treatment groups A, B, and C, it would be incorrect to run unpaired t-tests for group A vs B, B vs C, and C vs A, because each additional test increases the overall chance of a false-positive result. The correct technique of analysis is to run ANOVA and use post hoc tests (if ANOVA yields a significant result) to determine which group is different from the others.
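One reason multiple pairwise tests are problematic is that each test carries its own risk of a false-positive result. As a minimal sketch, assuming the tests are independent and each uses a significance level of 0.05 (both are simplifying assumptions, and the function name is hypothetical), the chance of at least one false positive across k tests is 1 − (1 − α)^k:

```python
def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Probability of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests


# Three pairwise comparisons (A vs B, B vs C, C vs A), each at alpha = 0.05.
print(round(familywise_error_rate(0.05, 3), 4))  # 0.1426
```

Under these assumptions, three pairwise tests carry roughly a 14% chance of a spurious finding rather than the nominal 5%.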

Table 2 lists the tests commonly used for comparing paired data, depending on the number of groups and type of data. As discussed above, it would be incorrect to use multiple paired t-tests to compare more than two measurements within a group. In the study by Chowhan, each parameter (LVOTVTI and CAVTI) was measured in the supine position and following passive leg raise. These represented paired readings from the same individual and comparison of prereading and postreading was performed using the paired t-test. 6 Verma et al. evaluated the role of physiotherapy on oxygen requirements and physiological parameters in patients with COVID-19. 7 Each patient had pretreatment and post-treatment data for heart rate and oxygen supplementation recorded on day 1 and day 14. Since data did not follow a normal distribution, they used Wilcoxon's matched pair test to compare the prevalues and postvalues of heart rate (numerical variable). McNemar's test was used to compare the presupplemental and postsupplemental oxygen status expressed as dichotomous data in terms of yes/no. In the study by Megahed, patients had various parameters such as sepsis-related organ failure assessment score, lung injury score, and clinical pulmonary infection score (CPIS) measured at baseline, on day 3 and day 7. 5 Within groups, comparisons were made using repeated measures ANOVA for normally distributed data and Friedman's test for skewed data.

Table 2: Tests for comparison of paired data

| Type of data | Two measurements | More than two measurements |
|---|---|---|
| Nominal | McNemar's test | Cochran's Q |
| Ordinal or skewed | Wilcoxon signed rank test | Friedman test |
| Normally distributed | Paired t-test | Repeated measures ANOVA |
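Tables 1 and 2 can be read as a simple lookup keyed on the type of data, whether the data are paired, and how many groups or measurements are compared. The sketch below encodes the table entries as a dictionary; the names `COMPARISON_TESTS` and `choose_comparison_test` are hypothetical and used only for illustration.

```python
# Lookup built from Tables 1 and 2.
# Key: (type of data, paired?, more than two groups or measurements?)
COMPARISON_TESTS = {
    ("nominal", False, False): "Chi-square test or Fisher's exact test",
    # Assumption: the source table lists this entry once for both columns.
    ("nominal", False, True): "Chi-square test or Fisher's exact test",
    ("ordinal_or_skewed", False, False): "Mann-Whitney U test",
    ("ordinal_or_skewed", False, True): "Kruskal-Wallis test",
    ("normal", False, False): "Unpaired t-test",
    ("normal", False, True): "Analysis of variance (ANOVA)",
    ("nominal", True, False): "McNemar's test",
    ("nominal", True, True): "Cochran's Q",
    ("ordinal_or_skewed", True, False): "Wilcoxon signed rank test",
    ("ordinal_or_skewed", True, True): "Friedman test",
    ("normal", True, False): "Paired t-test",
    ("normal", True, True): "Repeated measures ANOVA",
}


def choose_comparison_test(data_type: str, paired: bool, n_compared: int) -> str:
    """Return the tabulated test for the given data type and design."""
    if n_compared < 2:
        raise ValueError("A comparison needs at least two groups or measurements")
    return COMPARISON_TESTS[(data_type, paired, n_compared > 2)]


# Three unpaired groups with normally distributed data.
print(choose_comparison_test("normal", paired=False, n_compared=3))
```

A lookup like this only restates the tables; it does not check whether the assumptions of the chosen test actually hold for a given data set.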

Tests for Association between Variables

Table 3 lists the tests used to determine the association between variables. Correlation determines the strength of the relationship between two variables; regression allows the prediction of one variable from another. Tyagi examined the correlation between ETCO₂ and PaCO₂ in patients with chronic obstructive pulmonary disease with acute exacerbation, who were mechanically ventilated. 8 Since these were normally distributed variables, the linear correlation between ETCO₂ and PaCO₂ was determined by Pearson's correlation coefficient. Parajuli et al. compared the acute physiology and chronic health evaluation II (APACHE II) and acute physiology and chronic health evaluation IV (APACHE IV) scores to predict intensive care unit mortality, both of which were ordinal data. Correlation between APACHE II and APACHE IV score was tested using Spearman's coefficient. 9 A study by Roshan et al. identified risk factors for the development of aspiration pneumonia following rapid sequence intubation. 10 Since the outcome was categorical binary data (aspiration pneumonia: yes/no), they performed a bivariate analysis to derive unadjusted odds ratios, followed by a multivariable logistic regression analysis to calculate adjusted odds ratios for risk factors associated with aspiration pneumonia.

Table 3: Tests for assessing the association between variables

| Type of data | Test |
|---|---|
| Both variables normally distributed | Pearson's correlation coefficient |
| One or both variables ordinal or skewed | Spearman's or Kendall's correlation coefficient |
| Nominal data | Chi-square test; odds ratio or relative risk (for binary outcomes) |
| Continuous outcome | Linear regression analysis |
| Categorical outcome (binary) | Logistic regression analysis |
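The difference between Pearson's and Spearman's coefficients can be illustrated with a short sketch. It assumes plain Python lists of equal length and uses hypothetical data; Spearman's coefficient is computed here as Pearson's coefficient applied to ranks, with tied values given their average rank.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's coefficient: Pearson's r computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Hypothetical data: y rises with x, but not in a straight line.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
print(round(pearson_r(x, y), 3), spearman_rho(x, y))
```

Because the relationship is perfectly monotonic but curved, the rank-based coefficient reaches 1 while Pearson's coefficient stays below 1.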

Tests for Agreement between Measurements

Table 4 outlines the tests used for assessing agreement between measurements. Gunalan evaluated concordance between the National Healthcare Safety Network surveillance criteria and CPIS for the diagnosis of ventilator-associated pneumonia. 11 Since both the scores are examples of ordinal data, Kappa statistics were calculated to assess the concordance between the two methods. In the previously quoted study by Tyagi, the agreement between ETCO₂ and PaCO₂ (both numerical variables) was represented using the Bland–Altman method. 8

Table 4: Tests for assessing agreement between measurements

| Type of data | Test |
|---|---|
| Categorical data | Cohen's kappa |
| Numerical data | Intraclass correlation coefficient (numerical) and Bland–Altman plot (graphical display) |
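As a rough illustration of two of the measures in Table 4, the sketch below computes Cohen's kappa for two raters and the numbers behind a Bland–Altman plot (mean difference and 95% limits of agreement, taken as the mean difference ± 1.96 standard deviations). The data and function names are hypothetical, and the intraclass correlation coefficient is not covered here.

```python
from statistics import mean, stdev


def cohens_kappa(ratings_a, ratings_b):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(ratings_a)
    categories = set(ratings_a) | set(ratings_b)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    expected = sum(
        (ratings_a.count(c) / n) * (ratings_b.count(c) / n) for c in categories
    )
    return (observed - expected) / (1 - expected)


def bland_altman_limits(method_a, method_b):
    """Return (bias, lower limit, upper limit) of agreement."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


# Hypothetical yes/no diagnoses from two methods on 50 patients.
rater_a = ["yes"] * 25 + ["no"] * 25
rater_b = ["yes"] * 20 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 15
print(round(cohens_kappa(rater_a, rater_b), 2))  # 0.4

# Hypothetical paired numerical readings from two measurement methods.
print(bland_altman_limits([10, 12, 14, 16], [9, 12, 13, 14]))
```

In the first example the raters agree on 70% of patients, but half of that agreement would be expected by chance, which is why kappa is only 0.4.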

Tests for Time-to-Event Data (Survival Analysis)

Time-to-event data represent a unique type of data where some participants have not experienced the outcome of interest at the time of analysis. Such participants are considered to be “censored” but are allowed to contribute to the analysis for the period of their follow-up. A detailed discussion on the analysis of time-to-event data is beyond the scope of this article. For analyzing time-to-event data, we use survival analysis (with the Kaplan–Meier method) and compare groups using the log-rank test. The risk of experiencing the event is expressed as a hazard ratio. Cox proportional hazards regression model is used to identify risk factors that are significantly associated with the event.

Hasanzadeh evaluated the impact of zinc supplementation on the development of ventilator-associated pneumonia (VAP) in adult mechanically ventilated trauma patients. 12 Survival analysis (Kaplan–Meier technique) was used to calculate the median time to development of VAP after ICU admission. The Cox proportional hazards regression model was used to calculate hazard ratios to identify factors significantly associated with the development of VAP.
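To show how censored participants contribute to the analysis, the following is a minimal sketch of the Kaplan–Meier estimator on hypothetical follow-up data. The function names and the data are assumptions for illustration; the log-rank test and Cox regression are not covered here.

```python
def kaplan_meier(times, events):
    """Return [(time, survival probability)] at each distinct event time.

    events[i] is True if the event occurred at times[i] and False if the
    participant was censored at that time.
    """
    survival = 1.0
    curve = []
    for t in sorted({t for t, e in zip(times, events) if e}):
        # Censored participants still count as at risk until they drop out.
        at_risk = sum(1 for u in times if u >= t)
        n_events = sum(1 for u, e in zip(times, events) if u == t and e)
        survival *= 1 - n_events / at_risk
        curve.append((t, survival))
    return curve


def median_survival_time(curve):
    """First time at which estimated survival falls to 50% or below."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None  # median not reached during follow-up


# Hypothetical follow-up in days; False marks a censored participant.
times = [1, 2, 2, 3, 4]
events = [True, True, False, True, False]
curve = kaplan_meier(times, events)
print([(t, round(s, 2)) for t, s in curve])  # [(1, 0.8), (2, 0.6), (3, 0.3)]
print(median_survival_time(curve))  # 3
```

The participant censored on day 2 is counted in the risk set on days 1 and 2 but is not treated as having had the event.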

The choice of statistical test used to analyze research data depends on the study hypothesis, the type of data, the number of measurements, and whether the data are paired or unpaired. Reviews of articles published in medical specialties such as family medicine, cytopathology, and pain have found several errors related to the use of descriptive and inferential statistics. 12 – 15 The statistical technique needs to be carefully chosen and specified in the protocol prior to commencement of the study, to ensure that the conclusions of the study are valid. This article has outlined the principles for selecting a statistical test, along with a list of tests used commonly. Researchers should seek help from statisticians while writing the research study protocol, to formulate the plan for statistical analysis.

Priya Ranganathan https://orcid.org/0000-0003-1004-5264

Source of support: Nil

Conflict of interest: None



The Beginner's Guide to Statistical Analysis | 5 Steps & Examples

Statistical analysis means investigating trends, patterns, and relationships using quantitative data. It is an important research tool used by scientists, governments, businesses, and other organisations.

To draw valid conclusions, statistical analysis requires careful planning from the very start of the research process. You need to specify your hypotheses and make decisions about your research design, sample size, and sampling procedure.

After collecting data from your sample, you can organise and summarise the data using descriptive statistics. Then, you can use inferential statistics to formally test hypotheses and make estimates about the population. Finally, you can interpret and generalise your findings.

This article is a practical introduction to statistical analysis for students and researchers. We’ll walk you through the steps using two research examples. The first investigates a potential cause-and-effect relationship, while the second investigates a potential correlation between variables.

Table of contents

- Step 1: Write your hypotheses and plan your research design

- Step 2: Collect data from a sample

- Step 3: Summarise your data with descriptive statistics

- Step 4: Test hypotheses or make estimates with inferential statistics

- Step 5: Interpret your results

- Frequently asked questions about statistics

Step 1: Write your hypotheses and plan your research design

To collect valid data for statistical analysis, you first need to specify your hypotheses and plan out your research design.

Writing statistical hypotheses

The goal of research is often to investigate a relationship between variables within a population. You start with a prediction, and use statistical analysis to test that prediction.

A statistical hypothesis is a formal way of writing a prediction about a population. Every research prediction is rephrased into null and alternative hypotheses that can be tested using sample data.

While the null hypothesis always predicts no effect or no relationship between variables, the alternative hypothesis states your research prediction of an effect or relationship.

- Null hypothesis: A 5-minute meditation exercise will have no effect on math test scores in teenagers.

- Alternative hypothesis: A 5-minute meditation exercise will improve math test scores in teenagers.

- Null hypothesis: Parental income and GPA have no relationship with each other in college students.

- Alternative hypothesis: Parental income and GPA are positively correlated in college students.

Planning your research design

A research design is your overall strategy for data collection and analysis. It determines the statistical tests you can use to test your hypothesis later on.

First, decide whether your research will use a descriptive, correlational, or experimental design. Experiments directly influence variables, whereas descriptive and correlational studies only measure variables.

- In an experimental design, you can assess a cause-and-effect relationship (e.g., the effect of meditation on test scores) using statistical tests of comparison or regression.

- In a correlational design, you can explore relationships between variables (e.g., parental income and GPA) without any assumption of causality using correlation coefficients and significance tests.

- In a descriptive design, you can study the characteristics of a population or phenomenon (e.g., the prevalence of anxiety in U.S. college students) using statistical tests to draw inferences from sample data.

Your research design also concerns whether you’ll compare participants at the group level or individual level, or both.

- In a between-subjects design, you compare the group-level outcomes of participants who have been exposed to different treatments (e.g., those who performed a meditation exercise vs those who didn’t).

- In a within-subjects design, you compare repeated measures from participants who have participated in all treatments of a study (e.g., scores from before and after performing a meditation exercise).

- In a mixed (factorial) design, one variable is altered between subjects and another is altered within subjects (e.g., pretest and posttest scores from participants who either did or didn’t do a meditation exercise).

Example: Experimental research design

First, you’ll take baseline test scores from participants. Then, your participants will undergo a 5-minute meditation exercise. Finally, you’ll record participants’ scores from a second math test.

In this experiment, the independent variable is the 5-minute meditation exercise, and the dependent variable is the math test score from before and after the intervention.

Example: Correlational research design

In a correlational study, you test whether there is a relationship between parental income and GPA in graduating college students. To collect your data, you will ask participants to fill in a survey and self-report their parents’ incomes and their own GPA.

Measuring variables

When planning a research design, you should operationalise your variables and decide exactly how you will measure them.

For statistical analysis, it’s important to consider the level of measurement of your variables, which tells you what kind of data they contain:

- Categorical data represents groupings. These may be nominal (e.g., gender) or ordinal (e.g. level of language ability).

- Quantitative data represents amounts. These may be on an interval scale (e.g. test score) or a ratio scale (e.g. age).

Many variables can be measured at different levels of precision. For example, age data can be quantitative (8 years old) or categorical (young). If a variable is coded numerically (e.g., level of agreement from 1–5), it doesn’t automatically mean that it’s quantitative instead of categorical.

Identifying the measurement level is important for choosing appropriate statistics and hypothesis tests. For example, you can calculate a mean score with quantitative data, but not with categorical data.

In a research study, along with measures of your variables of interest, you’ll often collect data on relevant participant characteristics.

Example: Variables (experimental study)

| Variable | Type of data |
|---|---|
| Age | Quantitative (ratio) |
| Gender | Categorical (nominal) |
| Race or ethnicity | Categorical (nominal) |
| Baseline test scores | Quantitative (interval) |
| Final test scores | Quantitative (interval) |

Example: Variables (correlational study)

| Variable | Type of data |
|---|---|
| Parental income | Quantitative (ratio) |
| GPA | Quantitative (interval) |

Step 2: Collect data from a sample

In most cases, it’s too difficult or expensive to collect data from every member of the population you’re interested in studying. Instead, you’ll collect data from a sample.

Statistical analysis allows you to apply your findings beyond your own sample as long as you use appropriate sampling procedures. You should aim for a sample that is representative of the population.

Sampling for statistical analysis

There are two main approaches to selecting a sample.

- Probability sampling: every member of the population has a chance of being selected for the study through random selection.

- Non-probability sampling: some members of the population are more likely than others to be selected for the study because of criteria such as convenience or voluntary self-selection.

In theory, for highly generalisable findings, you should use a probability sampling method. Random selection reduces sampling bias and ensures that data from your sample is actually typical of the population. Parametric tests can be used to make strong statistical inferences when data are collected using probability sampling.

But in practice, it’s rarely possible to gather the ideal sample. While non-probability samples are more likely to be biased, they are much easier to recruit and collect data from. Non-parametric tests are more appropriate for non-probability samples, but they result in weaker inferences about the population.

If you want to use parametric tests for non-probability samples, you have to make the case that:

- your sample is representative of the population you’re generalising your findings to.

- your sample lacks systematic bias.

Keep in mind that external validity means that you can only generalise your conclusions to others who share the characteristics of your sample. For instance, results from Western, Educated, Industrialised, Rich and Democratic samples (e.g., college students in the US) aren’t automatically applicable to all non-WEIRD populations.

If you apply parametric tests to data from non-probability samples, be sure to elaborate on the limitations of how far your results can be generalised in your discussion section.

Create an appropriate sampling procedure

Based on the resources available for your research, decide on how you’ll recruit participants.

- Will you have resources to advertise your study widely, including outside of your university setting?

- Will you have the means to recruit a diverse sample that represents a broad population?

- Do you have time to contact and follow up with members of hard-to-reach groups?

Example: Sampling (experimental study)

Your participants are self-selected by their schools. Although you’re using a non-probability sample, you aim for a diverse and representative sample.

Example: Sampling (correlational study)

Your main population of interest is male college students in the US. Using social media advertising, you recruit senior-year male college students from a smaller subpopulation: seven universities in the Boston area.

Calculate sufficient sample size

Before recruiting participants, decide on your sample size either by looking at other studies in your field or using statistics. A sample that’s too small may be unrepresentative of the population, while a sample that’s too large will be more costly than necessary.

There are many sample size calculators online. Different formulas are used depending on whether you have subgroups or how rigorous your study should be (e.g., in clinical research). As a rule of thumb, a minimum of 30 units per subgroup is necessary.

To use these calculators, you have to understand and input these key components:

- Significance level (alpha): the risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Statistical power: the probability of your study detecting an effect of a certain size if there is one, usually 80% or higher.

- Expected effect size: a standardised indication of how large the expected result of your study will be, usually based on other similar studies.

- Population standard deviation: an estimate of the population parameter based on a previous study or a pilot study of your own.
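To see how these components interact, here is a minimal sketch of one common approximation: the sample size per group for a two-sided comparison of two independent group means, based on the normal distribution. The function name is hypothetical, the effect size is assumed to be standardised (difference in means divided by the standard deviation), and dedicated calculators that use the t distribution may return slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for comparing two independent means."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_power = std_normal.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# A medium standardised effect (0.5) at 5% significance and 80% power.
print(sample_size_per_group(0.5))  # 63
```

Halving the expected effect size roughly quadruples the required sample, which is why a realistic effect size estimate matters so much at the planning stage.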

Step 3: Summarise your data with descriptive statistics

Once you’ve collected all of your data, you can inspect them and calculate descriptive statistics that summarise them.

Inspect your data

There are various ways to inspect your data, including the following:

- Organising data from each variable in frequency distribution tables.

- Displaying data from a key variable in a bar chart to view the distribution of responses.

- Visualising the relationship between two variables using a scatter plot.

By visualising your data in tables and graphs, you can assess whether your data follow a skewed or normal distribution and whether there are any outliers or missing data.

A normal distribution means that your data are symmetrically distributed around a center where most values lie, with the values tapering off at the tail ends.

In contrast, a skewed distribution is asymmetric and has more values on one end than the other. The shape of the distribution is important to keep in mind because only some descriptive statistics should be used with skewed distributions.

Extreme outliers can also produce misleading statistics, so you may need a systematic approach to dealing with these values.

Calculate measures of central tendency

Measures of central tendency describe where most of the values in a data set lie. Three main measures of central tendency are often reported:

- Mode: the most popular response or value in the data set.

- Median: the value in the exact middle of the data set when ordered from low to high.

- Mean: the sum of all values divided by the number of values.

However, depending on the shape of the distribution and level of measurement, only one or two of these measures may be appropriate. For example, many demographic characteristics can only be described using the mode or proportions, while a variable like reaction time may not have a mode at all.

Calculate measures of variability

Measures of variability tell you how spread out the values in a data set are. Four main measures of variability are often reported:

- Range: the highest value minus the lowest value of the data set.

- Interquartile range: the range of the middle half of the data set.

- Standard deviation: the average distance between each value in your data set and the mean.

- Variance: the square of the standard deviation.

Once again, the shape of the distribution and level of measurement should guide your choice of variability statistics. The interquartile range is the best measure for skewed distributions, while standard deviation and variance provide the best information for normal distributions.
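The measures of central tendency and variability described above can be computed with Python's built-in `statistics` module. This is a minimal sketch on hypothetical data; the helper name `describe` is an assumption, the standard deviation and variance use the sample (n − 1) formulas, and other quartile conventions can give a slightly different interquartile range.

```python
import statistics


def describe(values):
    """Summarise a list of numbers with common descriptive statistics."""
    q1, _median, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    return {
        "mode": statistics.mode(values),
        "median": statistics.median(values),
        "mean": statistics.mean(values),
        "range": max(values) - min(values),
        "iqr": q3 - q1,
        "sd": statistics.stdev(values),           # sample standard deviation
        "variance": statistics.variance(values),  # sample variance
    }


# Hypothetical scores.
summary = describe([2, 4, 4, 5, 7, 9, 11])
for name, value in summary.items():
    print(f"{name}: {value}")
```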

Example: Descriptive statistics (experimental study)

Using your table, you should check whether the units of the descriptive statistics are comparable for pretest and posttest scores. For example, are the variance levels similar across the groups? Are there any extreme values? If there are, you may need to identify and remove extreme outliers in your data set or transform your data before performing a statistical test.

| | Pretest scores | Posttest scores |
|---|---|---|
| Mean | 68.44 | 75.25 |
| Standard deviation | 9.43 | 9.88 |
| Variance | 88.96 | 97.96 |
| Range | 36.25 | 45.12 |
| Sample size (n) | 30 | 30 |

From this table, we can see that the mean score increased after the meditation exercise, and the variances of the two scores are comparable. Next, we can perform a statistical test to find out if this improvement in test scores is statistically significant in the population.

Example: Descriptive statistics (correlational study)

After collecting data from 653 students, you tabulate descriptive statistics for annual parental income and GPA.

It’s important to check whether you have a broad range of data points. If you don’t, your data may be skewed towards some groups more than others (e.g., high academic achievers), and only limited inferences can be made about a relationship.

| | Parental income (USD) | GPA |
|---|---|---|
| Mean | 62,100 | 3.12 |
| Standard deviation | 15,000 | 0.45 |
| Variance | 225,000,000 | 0.16 |
| Range | 8,000–378,000 | 2.64–4.00 |
| Sample size (n) | 653 | 653 |

Step 4: Test hypotheses or make estimates with inferential statistics

A number that describes a sample is called a statistic, while a number describing a population is called a parameter. Using inferential statistics, you can make conclusions about population parameters based on sample statistics.

Researchers often use two main methods (simultaneously) to make inferences in statistics.

- Estimation: calculating population parameters based on sample statistics.

- Hypothesis testing: a formal process for testing research predictions about the population using samples.

You can make two types of estimates of population parameters from sample statistics:

- A point estimate: a value that represents your best guess of the exact parameter.

- An interval estimate: a range of values that represent your best guess of where the parameter lies.

If your aim is to infer and report population characteristics from sample data, it’s best to use both point and interval estimates in your paper.

You can consider a sample statistic a point estimate for the population parameter when you have a representative sample (e.g., in a wide public opinion poll, the proportion of a sample that supports the current government is taken as the population proportion of government supporters).

There’s always error involved in estimation, so you should also provide a confidence interval as an interval estimate to show the variability around a point estimate.

A confidence interval uses the standard error and the z score from the standard normal distribution to convey where you’d generally expect to find the population parameter most of the time.
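As a minimal sketch of that calculation, the interval can be written as the point estimate plus or minus the z score times the standard error. The function name is hypothetical, and the example reuses the posttest summary values from the descriptive statistics table, assuming a sample of 30. With a sample this small, an interval based on the t distribution would be slightly wider.

```python
from math import sqrt
from statistics import NormalDist


def confidence_interval(mean, sd, n, level=0.95):
    """z-based confidence interval for a mean: mean ± z * (sd / sqrt(n))."""
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    margin = z * sd / sqrt(n)  # z score times the standard error
    return mean - margin, mean + margin


# Posttest scores: mean 75.25, standard deviation 9.88, assumed n = 30.
low, high = confidence_interval(75.25, 9.88, 30)
print(round(low, 2), round(high, 2))  # 71.71 78.79
```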

Hypothesis testing

Using data from a sample, you can test hypotheses about relationships between variables in the population. Hypothesis testing starts with the assumption that the null hypothesis is true in the population, and you use statistical tests to assess whether the null hypothesis can be rejected or not.

Statistical tests determine where your sample data would lie on an expected distribution of sample data if the null hypothesis were true. These tests give two main outputs:

- A test statistic tells you how much your data differ from the null hypothesis of the test.

- A p value tells you the probability of obtaining results at least as extreme as yours if the null hypothesis is actually true in the population.

Statistical tests come in three main varieties:

- Comparison tests assess group differences in outcomes.

- Regression tests assess cause-and-effect relationships between variables.

- Correlation tests assess relationships between variables without assuming causation.

Your choice of statistical test depends on your research questions, research design, sampling method, and data characteristics.

Parametric tests

Parametric tests make powerful inferences about the population based on sample data. But to use them, some assumptions must be met, and only some types of variables can be used. If your data violate these assumptions, you can perform appropriate data transformations or use alternative non-parametric tests instead.

A regression models the extent to which changes in a predictor variable result in changes in outcome variable(s).

- A simple linear regression includes one predictor variable and one outcome variable.

- A multiple linear regression includes two or more predictor variables and one outcome variable.

Comparison tests usually compare the means of groups. These may be the means of different groups within a sample (e.g., a treatment and control group), the means of one sample group taken at different times (e.g., pretest and posttest scores), or a sample mean and a population mean.

- A t test is for exactly 1 or 2 groups when the sample is small (30 or less).

- A z test is for exactly 1 or 2 groups when the sample is large.

- An ANOVA is for 3 or more groups.

The z and t tests have subtypes based on the number and types of samples and the hypotheses:

- If you have only one sample that you want to compare to a population mean, use a one-sample test.

- If you have paired measurements (within-subjects design), use a dependent (paired) samples test.

- If you have completely separate measurements from two unmatched groups (between-subjects design), use an independent (unpaired) samples test.

- If you expect a difference between groups in a specific direction, use a one-tailed test.

- If you don’t have any expectations for the direction of a difference between groups, use a two-tailed test.
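For the dependent (paired) samples case, the test statistic is the mean of the within-participant differences divided by its standard error. The sketch below computes only the t value and degrees of freedom on hypothetical scores; the function name is an assumption, and the p value would normally come from a t distribution table or statistical software, since Python's standard library does not provide it.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(before, after):
    """Return (t value, degrees of freedom) for paired measurements."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical pretest and posttest scores from five participants.
t_value, df = paired_t_statistic([60, 65, 70, 75, 80], [64, 66, 75, 77, 85])
print(round(t_value, 2), df)  # 4.19 4
```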

The only parametric correlation test is Pearson’s r. The correlation coefficient (r) tells you the strength of a linear relationship between two quantitative variables.

However, to test whether the correlation in the sample is strong enough to be important in the population, you also need to perform a significance test of the correlation coefficient, usually a t test, to obtain a p value. This test uses your sample size to calculate how much the correlation coefficient differs from zero in the population.
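The t value for this significance test follows from the correlation coefficient and the sample size: t = r·√(n − 2) / √(1 − r²), with n − 2 degrees of freedom. The sketch below is an illustration with a hypothetical function name and a hypothetical r of 0.12, combined with the sample of 653 students from the correlational example.

```python
from math import sqrt


def correlation_t_statistic(r, n):
    """Return (t value, degrees of freedom) for testing whether r differs from zero."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * sqrt(n - 2) / sqrt(1 - r ** 2), n - 2


# Hypothetical r = 0.12 with n = 653 students.
t_value, df = correlation_t_statistic(0.12, 653)
print(round(t_value, 2), df)  # 3.08 651
```

Even a weak correlation can produce a large t value when the sample is big, which is why the effect size should be reported alongside the p value.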

You use a dependent-samples, one-tailed t test to assess whether the meditation exercise significantly improved math test scores. The test gives you:

- a t value (test statistic) of 3.00

- a p value of 0.0028

Although Pearson’s r is a test statistic, it doesn’t tell you anything about how significant the correlation is in the population. You also need to test whether this sample correlation coefficient is large enough to demonstrate a correlation in the population.

A t test can also determine how significantly a correlation coefficient differs from zero based on sample size. Since you expect a positive correlation between parental income and GPA, you use a one-sample, one-tailed t test. The t test gives you:

- a t value of 3.08

- a p value of 0.001

Step 5: Interpret your results

The final step of statistical analysis is interpreting your results.

Statistical significance

In hypothesis testing, statistical significance is the main criterion for forming conclusions. You compare your p value to a set significance level (usually 0.05) to decide whether your results are statistically significant or non-significant.

Statistically significant results are considered unlikely to have arisen solely due to chance. There is only a very low chance of such a result occurring if the null hypothesis is true in the population.

Example: Interpret your results (experimental study)

This means that you believe the meditation intervention, rather than random factors, directly caused the increase in test scores.

Example: Interpret your results (correlational study)

You compare your p value of 0.001 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This indicates a statistically significant correlation between parental income and GPA in male college students.

Note that correlation doesn’t always mean causation, because there are often many underlying factors contributing to a complex variable like GPA. Even if one variable is related to another, this may be because of a third variable influencing both of them, or indirect links between the two variables.

Effect size

A statistically significant result doesn’t necessarily mean that there are important real life applications or clinical outcomes for a finding.

In contrast, the effect size indicates the practical significance of your results. It’s important to report effect sizes along with your inferential statistics for a complete picture of your results. You should also report interval estimates of effect sizes if you’re writing an APA style paper.

Example: Effect size (experimental study)

With a Cohen’s d of 0.72, there’s medium to high practical significance to your finding that the meditation exercise improved test scores.

Example: Effect size (correlational study)

To determine the effect size of the correlation coefficient, you compare your Pearson’s r value to Cohen’s effect size criteria.
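As a rough sketch of both effect size checks, the code below computes Cohen's d from two means and standard deviations and labels d or r using Cohen's conventional benchmarks (0.2, 0.5, 0.8 for d; 0.1, 0.3, 0.5 for r). The function names and input values are hypothetical, and the pooled standard deviation used here is only one of several definitions; paired designs sometimes use a different denominator.

```python
from math import sqrt


def cohens_d(mean_1, sd_1, mean_2, sd_2):
    """Standardised mean difference using a simple pooled standard deviation."""
    pooled_sd = sqrt((sd_1 ** 2 + sd_2 ** 2) / 2)
    return (mean_2 - mean_1) / pooled_sd


def label_effect(value, small, medium, large):
    """Label an effect size against conventional benchmark values."""
    size = abs(value)
    if size >= large:
        return "large"
    if size >= medium:
        return "medium"
    if size >= small:
        return "small"
    return "below small"


d = cohens_d(50, 10, 55, 10)                # hypothetical group summaries
print(d, label_effect(d, 0.2, 0.5, 0.8))    # 0.5 medium
print(label_effect(0.12, 0.1, 0.3, 0.5))    # small (hypothetical r)
```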

Decision errors

Type I and Type II errors are mistakes made in research conclusions. A Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s false.

You can aim to minimise the risk of these errors by selecting an optimal significance level and ensuring high power. However, there’s a trade-off between the two errors, so a fine balance is necessary.
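The link between the significance level and the Type I error rate can be checked with a small simulation. This is an illustrative sketch under stated assumptions: every simulated study draws data for which the null hypothesis is true (population mean 0, known standard deviation 1), and a two-tailed z test is used for simplicity. The function name and default values are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist


def simulate_type_i_error_rate(alpha=0.05, n=30, n_studies=2000, seed=1):
    """Share of simulated studies that wrongly reject a true null hypothesis."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(n_studies):
        sample = [rng.gauss(0, 1) for _ in range(n)]
        z = (sum(sample) / n) * sqrt(n)          # z statistic with sigma = 1
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        if p_value < alpha:
            rejections += 1
    return rejections / n_studies


print(simulate_type_i_error_rate())  # close to the chosen alpha of 0.05
```

Lowering alpha reduces the share of false rejections, but with the sample size held fixed it also makes true effects harder to detect, which is the trade-off described above.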

Frequentist versus Bayesian statistics

Traditionally, frequentist statistics emphasises null hypothesis significance testing and always starts with the assumption of a true null hypothesis.

However, Bayesian statistics has grown in popularity as an alternative approach in the last few decades. In this approach, you use previous research to continually update your hypotheses based on your expectations and observations.

Bayes factor compares the relative strength of evidence for the null versus the alternative hypothesis rather than making a conclusion about rejecting the null hypothesis or not.

Frequently asked questions about statistics

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Statistical analysis is the main method for analyzing quantitative research data. It uses probabilities and models to test predictions about a population from sample data.


- Submit your COVID-19 Pandemic Research

- Research Leap Manual on Academic Writing

- Conduct Your Survey Easily

- Research Tools for Primary and Secondary Research

- Useful and Reliable Article Sources for Researchers

- Tips on writing a Research Paper

- Stuck on Your Thesis Statement?

- Out of the Box

- How to Organize the Format of Your Writing

- Argumentative Versus Persuasive. Comparing the 2 Types of Academic Writing Styles

- Very Quick Academic Writing Tips and Advices

- Top 4 Quick Useful Tips for Your Introduction

- Have You Chosen the Right Topic for Your Research Paper?

- Follow These Easy 8 Steps to Write an Effective Paper

- 7 Errors in your thesis statement

- How do I even Write an Academic Paper?

- Useful Tips for Successful Academic Writing

Immigrant Entrepreneurship in Europe: Insights from a Bibliometric Analysis

Enhancing employee job performance through supportive leadership, diversity management, and employee commitment: the mediating role of affective commitment, relationship management in sales – presentation of a model with which sales employees can build interpersonal relationships with customers.

- Promoting Digital Technologies to Enhance Human Resource Progress in Banking

- Enhancing Customer Loyalty in the E-Food Industry: Examining Customer Perspectives on Lock-In Strategies

- Self-Disruption As A Strategy For Leveraging A Bank’s Sustainability During Disruptive Periods: A Perspective from the Caribbean Financial Institutions

- Chinese Direct Investments in Germany

- Evaluation of German Life Insurers Based on Published Solvency and Financial Condition Reports: An Alternative Model

- Slide Share

Understanding statistical analysis: A beginner’s guide to data interpretation

Statistical analysis is a crucial part of research in many fields. It is used to analyze data and draw conclusions about the population being studied. However, statistical analysis can be complex and intimidating for beginners. In this article, we will provide a beginner’s guide to statistical analysis and data interpretation, with the aim of helping researchers understand the basics of statistical methods and their application in research.

What is Statistical Analysis?

Statistical analysis is a collection of methods used to analyze data. These methods are used to summarize data, make predictions, and draw conclusions about the population being studied. Statistical analysis is used in a variety of fields, including medicine, social sciences, economics, and more.

Statistical analysis can be broadly divided into two categories: descriptive statistics and inferential statistics. Descriptive statistics are used to summarize data, while inferential statistics are used to draw conclusions about the population based on a sample of data.

Descriptive Statistics

Descriptive statistics are used to summarize data. This includes measures such as the mean, median, mode, and standard deviation. These measures provide information about the central tendency and variability of the data. For example, the mean provides information about the average value of the data, while the standard deviation provides information about the variability of the data.
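As a small illustration, the measures named above can be computed with Python's standard `statistics` module. The data set is a made-up example, and the variable names are arbitrary.

```python
import statistics

# Hypothetical sample of eight observations.
data = [2, 4, 4, 4, 5, 5, 7, 9]

summary = {
    "mean": statistics.mean(data),      # central tendency: arithmetic average
    "median": statistics.median(data),  # central tendency: middle value
    "mode": statistics.mode(data),      # central tendency: most frequent value
    "stdev": statistics.stdev(data),    # variability: sample standard deviation (n - 1)
    "pstdev": statistics.pstdev(data),  # variability: population standard deviation (n)
}

for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

The sample and population standard deviations differ only in the divisor; which one applies depends on whether the data are a sample or the whole population of interest.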

Inferential Statistics

Inferential statistics are used to draw conclusions about a population based on a sample of data. For example, a researcher might use inferential statistics to test whether there is a significant difference between two groups in a study.

Statistical Analysis Techniques

There are many different statistical analysis techniques that can be used in research. Some of the most common techniques include:

Correlation Analysis: This involves analyzing the relationship between two or more variables.

Regression Analysis: This involves analyzing the relationship between a dependent variable and one or more independent variables.

T-Tests: These are statistical tests used to compare the means of two groups.

Analysis of Variance (ANOVA): This is a statistical test used to compare the means of three or more groups.

Chi-Square Test: This is a statistical test used to determine whether there is a significant association between two categorical variables.
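As one worked example from the list above, the sketch below runs a chi-square test of independence on a 2x2 table using only the standard library. The counts are invented, the function name `chi_square_2x2` is hypothetical, and no continuity correction is applied. For one degree of freedom, the p-value can be obtained from the complementary error function, because a chi-square variable with one degree of freedom is the square of a standard normal variable.

```python
from math import erfc, sqrt

def chi_square_2x2(table):
    """Chi-square statistic and p-value (1 degree of freedom) for a 2x2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    p_value = erfc(sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p_value

# Hypothetical counts: rows are two groups, columns are two outcome categories.
chi2, p_value = chi_square_2x2([[30, 10], [20, 40]])
print(f"chi-square = {chi2:.2f}, p = {p_value:.5f}")
```

In practice a statistics package would normally handle larger tables and small expected counts; this version is only meant to show where the statistic comes from.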

Data Interpretation

Once data has been analyzed, it must be interpreted: the researcher makes sense of the results and draws conclusions from them. Interpretation is a crucial part of statistical analysis because it is the basis for any recommendations made from the data.

When interpreting data, it is important to consider the context in which the data was collected. This includes factors such as the sample size, the sampling method, and the population being studied. It is also important to consider the limitations of the data and the statistical methods used.

Best Practices for Statistical Analysis

To ensure that statistical analysis is conducted correctly and effectively, there are several best practices that should be followed. These include:

Clearly define the research question: This is the foundation of the study and will guide the analysis.

Choose appropriate statistical methods: Different statistical methods are appropriate for different types of data and research questions.

Use reliable and valid data: The data used for analysis should be reliable and valid. This means that it should accurately represent the population being studied and be collected using appropriate methods.

Ensure that the data is representative: The sample used for analysis should be representative of the population being studied. This helps to ensure that the results of the analysis are applicable to the population.

Follow ethical guidelines: Researchers should follow ethical guidelines when conducting research. This includes obtaining informed consent from participants, protecting their privacy, and ensuring that the study does not cause harm.

Statistical analysis and data interpretation are essential tools for any researcher. Whether you are conducting research in the social sciences, natural sciences, or humanities, understanding statistical methods and interpreting data correctly is crucial to drawing accurate conclusions and making informed decisions. By following the best practices for statistical analysis and data interpretation outlined in this article, you can ensure that your research is based on sound statistical principles and is therefore more credible and reliable. Remember to start with a clear research question, use appropriate statistical methods, and always interpret your data in context. With these guidelines in mind, you can confidently approach statistical analysis and data interpretation and make meaningful contributions to your field of study.

Statistical Power and Power Calculations

- First Online: 21 August 2024

- Peter D. Fabricant MD, MPH

Summary take-home points for statistical power and power calculations:

The interplay among statistical power, performing power calculations, and the number of patients needed for a study is crucial in the design and planning of clinical research studies.

Statistical power measures the ability of a study to detect the existence of a true effect (difference between groups).

Prior to the start of a study, power calculations are conducted using pilot or historical data to determine the sample size needed to achieve a desired level of statistical power.

Power is influenced by several factors, including the effect size (magnitude of the effect being studied), the significance level, the sample size, and the variability of the data.

The larger the sample size, the greater the statistical power of the study, all else being equal.
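The relationship among these factors can be sketched with a common normal-approximation formula for comparing two group means: n per group = 2 × ((z for the significance level + z for the desired power) / standardized effect size)². This formula, the default values, and the function name `sample_size_per_group` are assumptions for illustration and are not taken from the chapter; exact calculations based on the t-distribution give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    # effect_size is the standardized difference (difference in means / standard deviation).
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

# Smaller effects need many more participants to reach the same power.
for d in (0.8, 0.5, 0.2):
    print(f"effect size {d}: about {sample_size_per_group(d)} per group")
```

Because the standardized effect size divides the difference in means by the standard deviation, greater variability in the data shrinks the effect size and pushes the required sample size up.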

Author information

Authors and affiliations.

Associate Professor of Orthopedic Surgery, Hospital for Special Surgery, New York, NY, USA

Peter D. Fabricant MD, MPH

Corresponding author

Correspondence to Peter D. Fabricant MD, MPH.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Fabricant, P.D. (2024). Statistical Power and Power Calculations. In: Practical Clinical Research Design and Application. Springer, Cham. https://doi.org/10.1007/978-3-031-58380-3_4

DOI: https://doi.org/10.1007/978-3-031-58380-3_4

Published: 21 August 2024

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-58379-7

Online ISBN: 978-3-031-58380-3

eBook Packages: Medicine, Medicine (R0)
