
Qualitative Data Analysis: What is it, Methods + Examples

In a world rich with information and narrative, understanding the deeper layers of human experiences requires a unique vision that goes beyond numbers and figures. This is where the power of qualitative data analysis comes to light.

In this blog, we’ll learn about qualitative data analysis, explore its methods, and provide real-life examples showcasing its power in uncovering insights.

What is Qualitative Data Analysis?

Qualitative data analysis is a systematic process of examining non-numerical data to extract meaning, patterns, and insights.

In contrast to quantitative analysis, which focuses on numbers and statistical metrics, qualitative analysis focuses on non-numerical data such as text, images, audio, and video. It seeks to understand human experiences, perceptions, and behaviors by examining the data’s richness.

Companies frequently conduct this analysis on customer feedback. You can collect qualitative data from reviews, complaints, chat messages, interactions with support centers, customer interviews, case notes, or even social media comments. This kind of data holds the key to understanding customer sentiments and preferences in a way that goes beyond mere numbers.

Importance of Qualitative Data Analysis

Qualitative data analysis plays a crucial role in your research and decision-making process across various disciplines. Let’s explore some key reasons that underline the significance of this analysis:

In-Depth Understanding

It enables you to explore complex and nuanced aspects of a phenomenon, delving into the ‘how’ and ‘why’ questions. This method provides you with a deeper understanding of human behavior, experiences, and contexts that quantitative approaches might not capture fully.

Contextual Insight

You can use this analysis to give context to numerical data. It will help you understand the circumstances and conditions that influence participants’ thoughts, feelings, and actions. This contextual insight becomes essential for generating comprehensive explanations.

Theory Development

You can generate or refine hypotheses via qualitative data analysis. As you analyze the data attentively, you can form hypotheses, concepts, and frameworks that will drive your future research and contribute to theoretical advances.

Participant Perspectives

When performing qualitative research, you can highlight participant voices and opinions. This approach is especially useful for understanding marginalized or underrepresented people, as it allows them to communicate their experiences and points of view.

Exploratory Research

The analysis is frequently used at the exploratory stage of your project. It assists you in identifying important variables, developing research questions, and designing quantitative studies that will follow.

Types of Qualitative Data

When conducting qualitative research, you can use several qualitative data collection methods, and you will come across many types of qualitative data that can provide unique insights into your study topic. Each data type adds new perspectives to your understanding and analysis.

Interviews and Focus Groups

Interviews and focus groups will be among your key methods for gathering qualitative data. Interviews are one-on-one talks in which participants can freely share their thoughts, experiences, and opinions.

Focus groups, on the other hand, are discussions in which members interact with one another, resulting in dynamic exchanges of ideas. Both methods provide rich qualitative data and direct access to participant perspectives.

Observations and Field Notes

Observations and field notes are another useful sort of qualitative data. You can immerse yourself in the research environment through direct observation, carefully documenting behaviors, interactions, and contextual factors.

These observations are recorded in your field notes, providing a complete picture of the environment and the behaviors you’re researching. This data type is especially important for understanding behaviors in their natural setting.

Textual and Visual Data

Textual and visual data include a wide range of resources that can be qualitatively analyzed. Documents, written narratives, and transcripts from various sources, such as interviews or speeches, are examples of textual data.

Photographs, films, and even artwork add a visual layer to your research. These forms of data let you investigate not only what is said but also the underlying emotions, details, and symbols expressed through language or images.

When to Choose Qualitative Data Analysis over Quantitative Data Analysis

As you begin your research journey, understanding why the analysis of qualitative data is important will guide your approach to understanding complex events. If you analyze qualitative data, it will provide new insights that complement quantitative methodologies, which will give you a broader understanding of your study topic.

It is critical to know when to use qualitative analysis over quantitative procedures. You can prefer qualitative data analysis when:

- Complexity Reigns: When your research questions involve deep human experiences, motivations, or emotions, qualitative research excels at revealing these complexities.

- Exploration is Key: Qualitative analysis is ideal for exploratory research. It will assist you in understanding a new or poorly understood topic before formulating quantitative hypotheses.

- Context Matters: If you want to understand how context affects behaviors or results, qualitative data analysis provides the depth needed to grasp these relationships.

- Unanticipated Findings: When your study provides surprising new viewpoints or ideas, qualitative analysis helps you to delve deeply into these emerging themes.

- Subjective Interpretation is Vital: When it comes to understanding people’s subjective experiences and interpretations, qualitative data analysis is the way to go.

You can make informed decisions regarding the right approach for your research objectives if you understand the importance of qualitative analysis and recognize the situations where it shines.

Qualitative Data Analysis Methods and Examples

Exploring various qualitative data analysis methods will give you a broad toolkit for making sense of your research findings. Once the data has been collected, you can choose from several analysis methods based on your research objectives and the type of data you’ve collected.

There are five main methods for analyzing qualitative data. Each method takes a distinct approach to identifying patterns, themes, and insights within your qualitative data. They are:

Method 1: Content Analysis

Content analysis is a methodical technique for analyzing textual or visual data in a structured manner. In this method, you categorize qualitative data by splitting it into manageable units and manually assigning codes to those units.

As you go, you’ll notice recurring codes and patterns that allow you to draw conclusions about the content. This method is very useful for detecting common ideas, concepts, or themes in your data without losing the context.

Steps to Do Content Analysis

Follow these steps when conducting content analysis:

- Collect and Immerse: Begin by collecting the necessary textual or visual data. Immerse yourself in this data to fully understand its content, context, and complexities.

- Assign Codes and Categories: Assign codes to relevant data sections that systematically represent major ideas or themes. Arrange comparable codes into groups that cover the major themes.

- Analyze and Interpret: Develop a structured framework from the categories and codes. Then, evaluate the data in the context of your research question, investigate relationships between categories, discover patterns, and draw meaning from these connections.

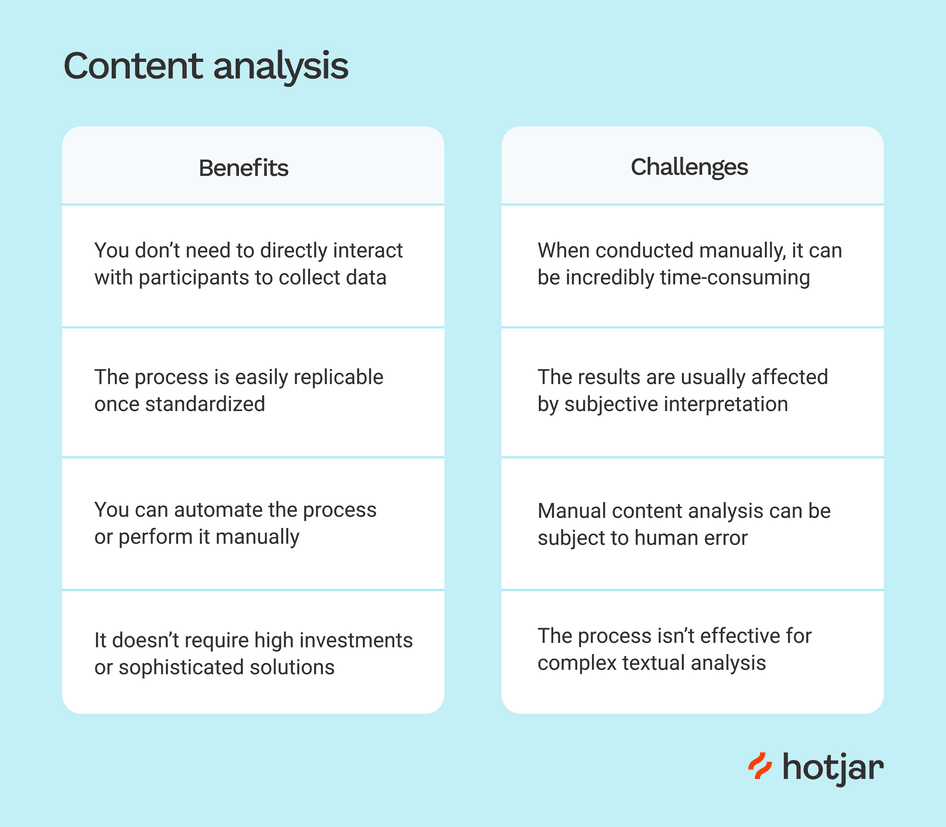

Benefits & Challenges

There are various advantages to using content analysis:

- Structured Approach: It offers a systematic approach to dealing with large data sets and ensures consistency throughout the research.

- Objective Insights: This method promotes objectivity, which helps to reduce potential biases in your study.

- Pattern Discovery: Content analysis can help uncover hidden trends, themes, and patterns that are not always obvious.

- Versatility: You can apply content analysis to various data formats, including text, internet content, images, etc.

However, keep in mind the challenges that arise:

- Subjectivity: Even with the best attempts, a certain bias may remain in coding and interpretation.

- Complexity: Analyzing huge data sets requires time and great attention to detail.

- Contextual Nuances: Content analysis may not capture all of the contextual richness that qualitative data analysis highlights.

Example of Content Analysis

Suppose you’re conducting market research and looking at customer feedback on a product. As you collect relevant data and analyze feedback, you’ll see repeating codes like “price,” “quality,” “customer service,” and “features.” These codes are organized into categories such as “positive reviews,” “negative reviews,” and “suggestions for improvement.”

According to your findings, themes such as “price” and “customer service” stand out and show that pricing and customer service greatly impact customer satisfaction. This example highlights the power of content analysis for obtaining significant insights from large textual data collections.
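To make the coding step concrete, here is a minimal Python sketch of keyword-based coding for the customer feedback example. The codebook keywords and the sample feedback are invented for illustration, and simple substring matching is an assumption: it will miss synonyms and can produce false matches, so treat it as a first pass that a human coder reviews, not as a replacement for manual coding.

```python
from collections import Counter

# Hypothetical codebook: each code maps to keywords that signal it.
CODEBOOK = {
    "price": ["price", "expensive", "cheap", "cost"],
    "quality": ["quality", "durable", "broke"],
    "customer service": ["support", "service", "agent"],
    "features": ["feature", "battery", "screen"],
}


def assign_codes(text, codebook=CODEBOOK):
    """Return the set of codes whose keywords appear in the text."""
    lowered = text.lower()
    return {
        code
        for code, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    }


def code_frequencies(responses, codebook=CODEBOOK):
    """Count how many responses received each code."""
    counts = Counter()
    for response in responses:
        counts.update(assign_codes(response, codebook))
    return counts


# Invented sample feedback.
feedback = [
    "Too expensive for what you get.",
    "Support agent was friendly and the price is fair.",
    "Battery life is a great feature.",
]

frequencies = code_frequencies(feedback)
for code, n in frequencies.most_common():
    print(f"{code}: {n}")
```

Counting responses per code (rather than raw keyword hits) keeps one long, repetitive review from dominating the tally.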

Method 2: Thematic Analysis

Thematic analysis is a well-structured procedure for identifying and analyzing recurring themes in your data. As you become more engaged in the data, you’ll generate codes or short labels representing key concepts. These codes are then organized into themes, providing a consistent framework for organizing and comprehending the substance of the data.

The analysis allows you to organize complex narratives and perspectives into meaningful categories, which will allow you to identify connections and patterns that may not be visible at first.

Steps to Do Thematic Analysis

Follow these steps when conducting a thematic analysis:

- Code and Group: Begin by immersing yourself in the data and assigning initial codes to notable segments. Then group related codes together to form initial themes.

- Analyze and Report: Analyze the data within each theme to derive relevant insights. Organize the topics into a consistent structure and explain your findings, along with data extracts that represent each theme.

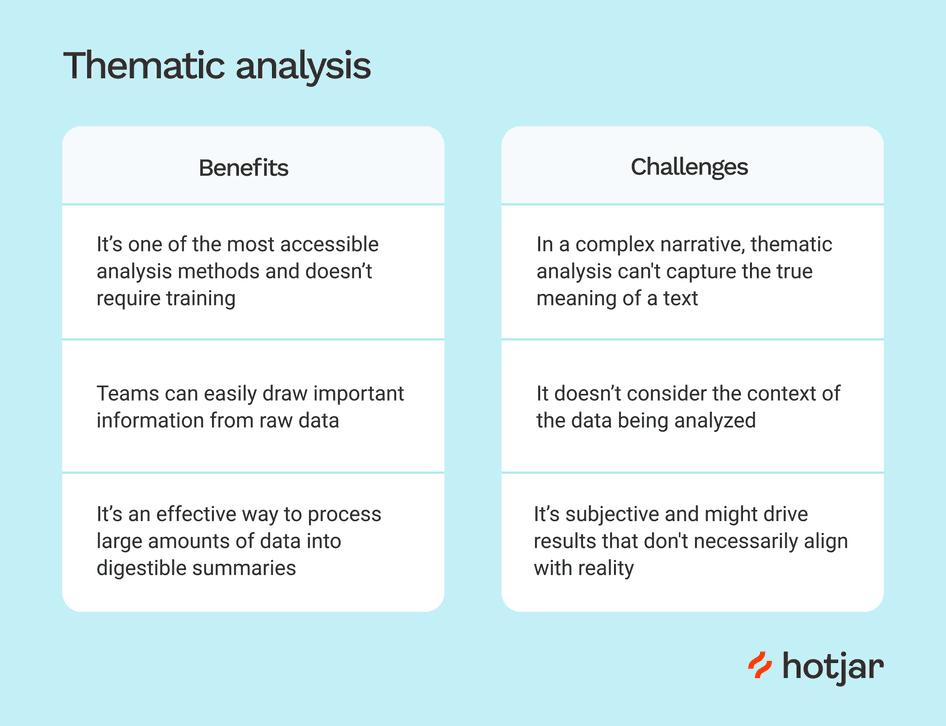

Thematic analysis has various benefits:

- Structured Exploration: It is a method for identifying patterns and themes in complex qualitative data.

- Comprehensive Knowledge: Thematic analysis promotes an in-depth understanding of the complexities and meanings of the data.

- Application Flexibility: This method can be adapted to various research situations and data types.

However, challenges may arise, such as:

- Interpretive Nature: Interpreting qualitative data in thematic analysis is vital, and it is critical to manage researcher bias.

- Time-consuming: The analysis can be time-consuming, especially with large data sets.

- Subjectivity: The selection of codes and topics might be subjective.

Example of Thematic Analysis

Assume you’re conducting a thematic analysis on job satisfaction interviews. Following your immersion in the data, you assign initial codes such as “work-life balance,” “career growth,” and “colleague relationships.” As you organize these codes, you’ll notice themes develop, such as “Factors Influencing Job Satisfaction” and “Impact on Work Engagement.”

Further investigation reveals the tales and experiences included within these themes and provides insights into how various elements influence job satisfaction. This example demonstrates how thematic analysis can reveal meaningful patterns and insights in qualitative data.
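The grouping of codes into themes can be sketched in a few lines of Python. The code-to-theme mapping, the extra "motivation" code, and the quotes below are all invented for illustration; in a real study, you would decide the mapping yourself after reading the data, and the script only does the bookkeeping.

```python
from collections import defaultdict

# Hypothetical mapping from initial codes to broader themes.
CODE_TO_THEME = {
    "work-life balance": "Factors Influencing Job Satisfaction",
    "career growth": "Factors Influencing Job Satisfaction",
    "colleague relationships": "Factors Influencing Job Satisfaction",
    "motivation": "Impact on Work Engagement",
}

# Hand-coded extracts as (participant, code, quote). Invented data.
coded_extracts = [
    ("P1", "work-life balance", "I can finally pick my kids up from school."),
    ("P2", "career growth", "There is no clear path to promotion."),
    ("P2", "motivation", "Some days I just go through the motions."),
    ("P3", "colleague relationships", "My team is why I stay."),
]


def group_by_theme(extracts, code_to_theme):
    """Collect extracts under the theme their code belongs to."""
    themes = defaultdict(list)
    for participant, code, quote in extracts:
        # Codes without a theme are kept visible instead of silently dropped.
        theme = code_to_theme.get(code, "Uncategorized")
        themes[theme].append((participant, code, quote))
    return dict(themes)


themes = group_by_theme(coded_extracts, CODE_TO_THEME)
for theme, items in themes.items():
    print(f"{theme} ({len(items)} extracts)")
    for participant, code, quote in items:
        print(f"  [{participant}] {code}: {quote}")
```

Keeping the quote alongside each code makes it easy to pull the data extracts you need when reporting each theme.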

Method 3: Narrative Analysis

Narrative analysis focuses on the stories that people share. You’ll investigate the narratives in your data, looking at how stories are constructed and the meanings they express. This method is excellent for learning how people make sense of their experiences through narrative.

Steps to Do Narrative Analysis

The following steps are involved in narrative analysis:

- Gather and Analyze: Start by collecting narratives, such as first-person tales, interviews, or written accounts. Analyze the stories, focusing on the plot, feelings, and characters.

- Find Themes: Look for recurring themes or patterns in various narratives. Think about the similarities and differences between these topics and personal experiences.

- Interpret and Extract Insights: Contextualize the narratives within their larger context. Accept the subjective nature of each narrative and analyze the narrator’s voice and style. Extract insights from the tales by diving into the emotions, motivations, and implications communicated by the stories.

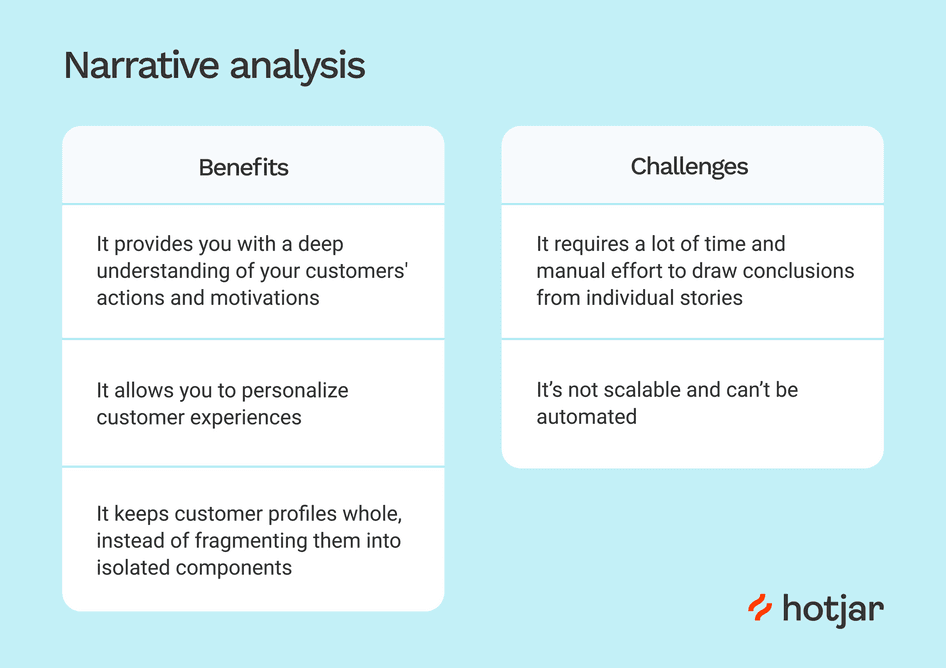

There are various advantages to narrative analysis:

- Deep Exploration: It lets you look deeply into people’s personal experiences and perspectives.

- Human-Centered: This method prioritizes the human perspective, allowing individuals to express themselves.

However, difficulties may arise, such as:

- Interpretive Complexity: Analyzing narratives requires dealing with the complexities of meaning and interpretation.

- Time-consuming: Because of the richness and complexities of tales, working with them can be time-consuming.

Example of Narrative Analysis

Assume you’re conducting narrative analysis on refugee interviews. As you read the stories, you’ll notice common themes of resilience, loss, and hope. The narratives provide insight into the obstacles that refugees face, their strengths, and the dreams that guide them.

The analysis can provide a deeper insight into the refugees’ experiences and the broader social context they navigate by examining the narratives’ emotional subtleties and underlying meanings. This example highlights how narrative analysis can reveal important insights into human stories.

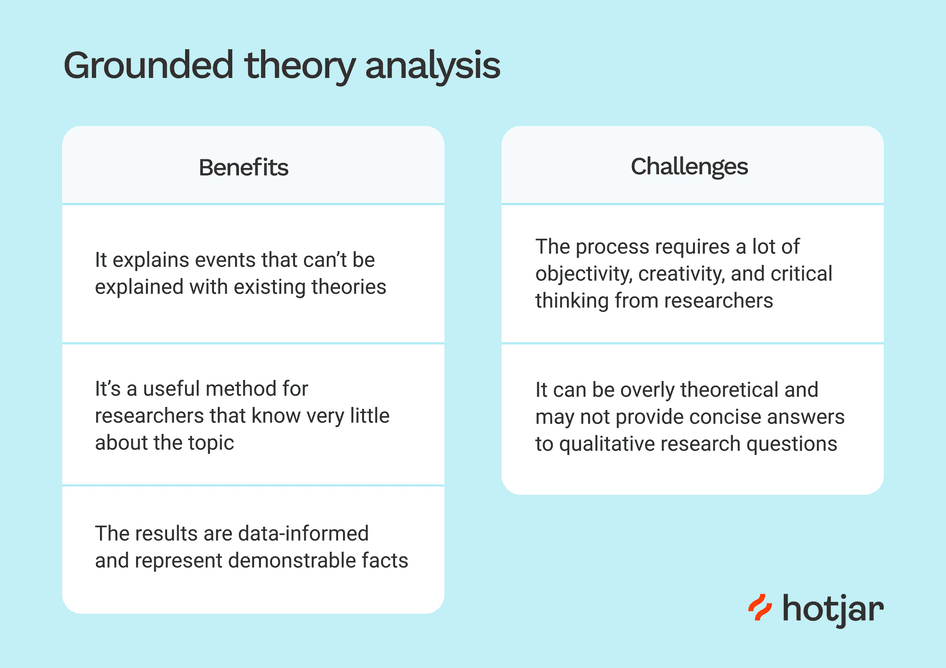

Method 4: Grounded Theory Analysis

Grounded theory analysis is an iterative and systematic approach that allows you to create theories directly from data without being limited by pre-existing hypotheses. With an open mind, you collect data and generate early codes and labels that capture essential ideas or concepts within the data.

As you progress, you refine these codes and increasingly connect them, eventually developing a theory based on the data. Grounded theory analysis is a dynamic process for developing new insights and hypotheses based on details in your data.

Steps to Do Grounded Theory Analysis

Grounded theory analysis requires the following steps:

- Initial Coding: First, immerse yourself in the data, producing initial codes that represent major concepts or patterns.

- Categorize and Connect: Using axial coding, organize the initial codes, which establish relationships and connections between topics.

- Build the Theory: Focus on creating a core category that connects the codes and themes. Regularly refine the theory by comparing and integrating new data, ensuring that it evolves organically from the data.

Grounded theory analysis has various benefits:

- Theory Generation: It provides a one-of-a-kind opportunity to generate hypotheses straight from data and promotes new insights.

- In-depth Understanding: The analysis allows you to deeply analyze the data and reveal complex relationships and patterns.

- Flexible Process: This method is customizable and ongoing, which allows you to enhance your research as you collect additional data.

However, challenges might arise with:

- Time and Resources: Because grounded theory analysis is a continuous process, it requires a large commitment of time and resources.

- Theoretical Development: Creating a grounded theory involves a thorough understanding of qualitative data analysis software and theoretical concepts.

- Interpretation of Complexity: Interpreting and incorporating a newly developed theory into existing literature can be intellectually hard.

Example of Grounded Theory Analysis

Assume you’re performing a grounded theory analysis on workplace collaboration interviews. As you open-code the data, you discover concepts such as “communication barriers,” “team dynamics,” and “leadership roles.” Axial coding reveals links between these concepts, emphasizing the significance of effective communication in developing collaboration.

Through selective coding, you create the core category “Integrated Communication Strategies,” which unifies the emerging concepts.

This theory-driven category serves as the framework for understanding how numerous aspects contribute to effective team collaboration. This example shows how grounded theory analysis allows you to generate a theory directly from the inherent nature of the data.
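One simple aid when looking for links between open codes is to count how often two codes are applied to the same interview segment. The Python sketch below assumes you have already open-coded your segments by hand; the segments are invented. Co-occurrence counts only suggest candidate relationships to examine, so the interpretive work of axial coding still rests with you.

```python
from collections import Counter
from itertools import combinations

# Hypothetical open-coded segments: each set holds the codes applied to one segment.
coded_segments = [
    {"communication barriers", "team dynamics"},
    {"communication barriers", "leadership roles"},
    {"communication barriers", "team dynamics", "leadership roles"},
    {"team dynamics"},
]


def code_cooccurrence(segments):
    """Count how often each pair of codes appears in the same segment."""
    pairs = Counter()
    for codes in segments:
        # Sorting gives each pair a single canonical order.
        for a, b in combinations(sorted(codes), 2):
            pairs[(a, b)] += 1
    return pairs


pairs = code_cooccurrence(coded_segments)
for (a, b), n in pairs.most_common():
    print(f"{a} <-> {b}: {n}")
```

In this toy data, "communication barriers" co-occurs with both other codes, which is the kind of pattern that might lead you toward a communication-centered core category.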

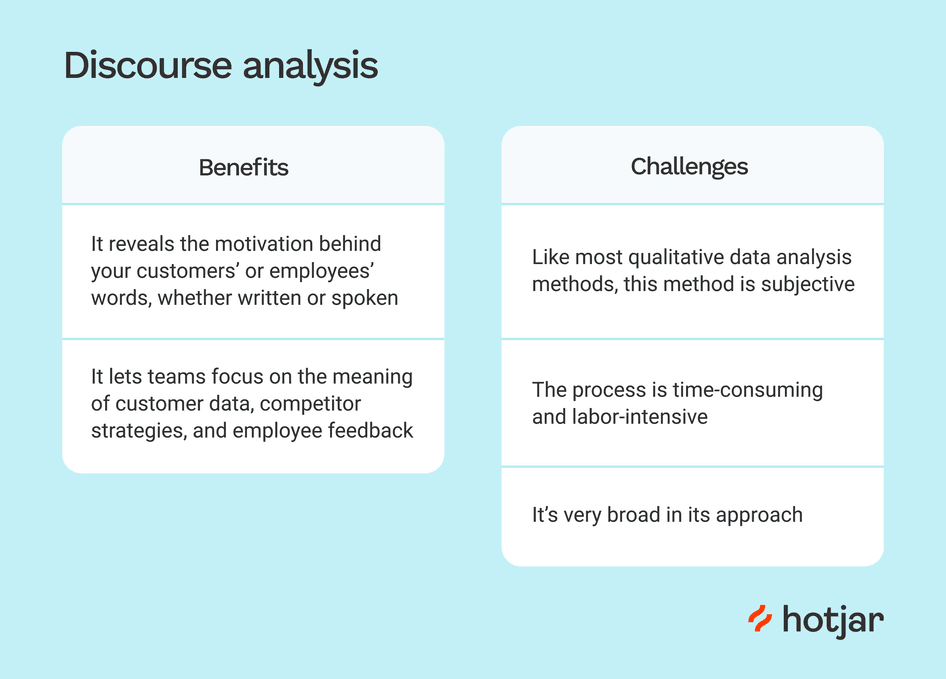

Method 5: Discourse Analysis

Discourse analysis focuses on language and communication. You’ll look at how language produces meaning and how it reflects power relations, identities, and cultural influences. This method examines both what is said and how it is said: the words, the phrasing, and the larger context of communication.

The analysis is especially valuable when investigating power dynamics, identities, and cultural influences encoded in language. By evaluating the language used in your data, you can identify underlying assumptions, cultural norms, and how individuals negotiate meaning through communication.

Steps to Do Discourse Analysis

Conducting discourse analysis entails the following steps:

- Select Discourse: For analysis, choose language-based data such as texts, speeches, or media content.

- Analyze Language: Immerse yourself in the conversation, examining language choices, metaphors, and underlying assumptions.

- Discover Patterns: Identify the discourse’s recurring themes, ideologies, and power dynamics. To fully understand the effects of these patterns, place them in their larger context.

There are various advantages of using discourse analysis:

- Understanding Language: It provides an extensive understanding of how language builds meaning and influences perceptions.

- Uncovering Power Dynamics: The analysis reveals how power dynamics appear via language.

- Cultural Insights: This method identifies cultural norms, beliefs, and ideologies stored in communication.

However, the following challenges may arise:

- Complexity of Interpretation: Language analysis involves navigating multiple levels of nuance and interpretation.

- Subjectivity: Interpretation can be subjective, so controlling researcher bias is important.

- Time-Intensive: Discourse analysis can take a lot of time because careful linguistic study is required in this analysis.

Example of Discourse Analysis

Consider doing discourse analysis on media coverage of a political event. You notice repeating linguistic patterns in news articles that depict the event as a conflict between opposing parties. Through deconstruction, you can expose how this framing supports particular ideologies and power relations.

By analyzing the discourse within the broader political and social context, you can illustrate how language choices influence public perceptions and help build the narrative around the event. This example shows how discourse analysis can reveal hidden power dynamics and cultural influences on communication.
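A keyword-in-context (KWIC) listing is one common way to spot repeating linguistic patterns such as conflict framing. The Python sketch below is a minimal version that assumes plain-text input and a simple word tokenizer; the sample sentence and the choice of "battle" as the keyword are invented for illustration.

```python
import re


def keyword_in_context(text, keyword, width=3):
    """Return (left, match, right) tuples with `width` words of context."""
    tokens = re.findall(r"\w+", text.lower())
    target = keyword.lower()
    hits = []
    for i, token in enumerate(tokens):
        if token == target:
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            hits.append((left, token, right))
    return hits


# Invented excerpt standing in for a news article.
article = (
    "The two parties clashed over the bill. Critics called the vote a battle, "
    "and the battle lines were drawn early."
)

for left, match, right in keyword_in_context(article, "battle"):
    print(f"{left:>30} [{match}] {right}")
```

Reading the surrounding words side by side makes it easier to judge how a term is being used, which a raw frequency count cannot tell you.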

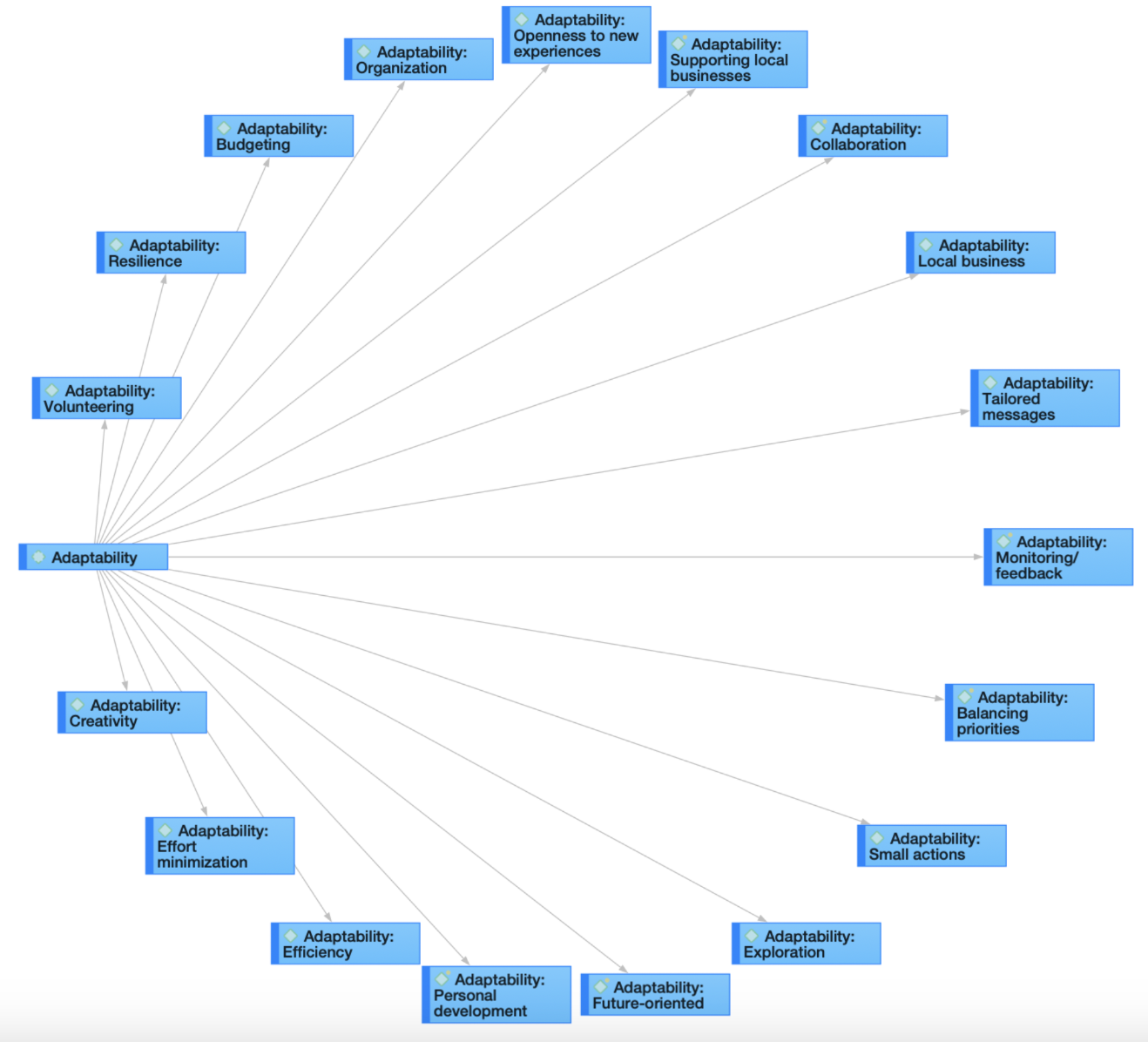

How to do Qualitative Data Analysis with the QuestionPro Research suite?

QuestionPro is a popular survey and research platform that offers tools for collecting and analyzing qualitative and quantitative data. Follow these general steps for conducting qualitative data analysis using the QuestionPro Research Suite:

- Collect Qualitative Data: Set up your survey to capture qualitative responses. It might involve open-ended questions, text boxes, or comment sections where participants can provide detailed responses.

- Export Qualitative Responses: Export the responses once you’ve collected qualitative data through your survey. QuestionPro typically allows you to export survey data in various formats, such as Excel or CSV.

- Prepare Data for Analysis: Review the exported data and clean it if necessary. Remove irrelevant or duplicate entries to ensure your data is ready for analysis.

- Code and Categorize Responses: Segment and label data, letting new patterns emerge naturally, then develop categories through axial coding to structure the analysis.

- Identify Themes: Analyze the coded responses to identify recurring themes, patterns, and insights. Look for similarities and differences in participants’ responses.

- Generate Reports and Visualizations: Utilize the reporting features of QuestionPro to create visualizations, charts, and graphs that help communicate the themes and findings from your qualitative research.

- Interpret and Draw Conclusions: Interpret the themes and patterns you’ve identified in the qualitative data. Consider how these findings answer your research questions or provide insights into your study topic.

- Integrate with Quantitative Data (if applicable): If you’re also conducting quantitative research using QuestionPro, consider integrating your qualitative findings with quantitative results to provide a more comprehensive understanding.
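The data preparation step above can be sketched in Python with the standard `csv` module. The column names (`respondent_id`, `response`) and the sample rows are assumptions for illustration, not QuestionPro's actual export layout, so adjust them to match your file. Note also that two respondents can legitimately give the same answer, so review duplicates before dropping them in a real study.

```python
import csv
import io


def clean_responses(csv_file, text_column="response"):
    """Read rows, dropping blank answers and case-insensitive duplicates."""
    seen = set()
    cleaned = []
    for row in csv.DictReader(csv_file):
        text = (row.get(text_column) or "").strip()
        key = text.lower()
        if not text or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Stand-in for an exported file; with a real export, use open(path, newline="").
sample_export = io.StringIO(
    "respondent_id,response\n"
    "1,Checkout was confusing\n"
    "2,\n"
    "3,checkout was confusing\n"
    "4,Love the new dashboard\n"
)

responses = clean_responses(sample_export)
print(responses)
```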

Qualitative data analysis is vital in uncovering various human experiences, views, and stories. If you’re ready to transform your research journey and apply the power of qualitative analysis, now is the moment to do it. Book a demo with QuestionPro today and begin your journey of exploration.


Qualitative Data Analysis Methods 101:

The “big 6” methods + examples.

By: Kerryn Warren (PhD) | Reviewed By: Eunice Rautenbach (D.Tech) | May 2020 (Updated April 2023)

Qualitative data analysis methods. Wow, that’s a mouthful.

If you’re new to the world of research, qualitative data analysis can look rather intimidating. So much bulky terminology and so many abstract, fluffy concepts. It certainly can be a minefield!

Don’t worry – in this post, we’ll unpack the most popular analysis methods, one at a time, so that you can approach your analysis with confidence and competence – whether that’s for a dissertation, thesis or really any kind of research project.

What (exactly) is qualitative data analysis?

To understand qualitative data analysis, we need to first understand qualitative data – so let’s step back and ask the question, “what exactly is qualitative data?”.

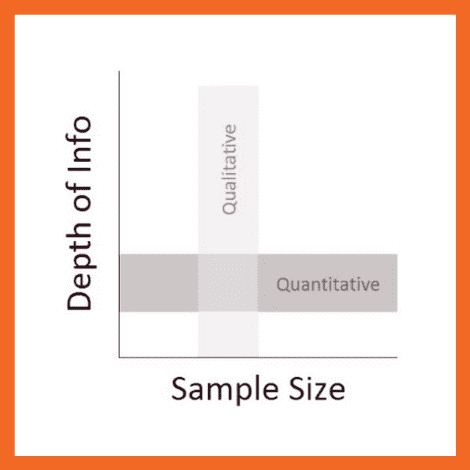

Qualitative data refers to pretty much any data that’s “not numbers”. In other words, it’s not the stuff you measure using a fixed scale or complex equipment, nor do you analyse it using complex statistics or mathematics.

So, if it’s not numbers, what is it?

Words, you guessed? Well… sometimes, yes. Qualitative data can, and often does, take the form of interview transcripts, documents and open-ended survey responses – but it can also involve the interpretation of images and videos. In other words, qualitative isn’t just limited to text-based data.

So, how’s that different from quantitative data, you ask?

Simply put, qualitative research focuses on words, descriptions, concepts or ideas – while quantitative research focuses on numbers and statistics. Qualitative research investigates the “softer side” of things to explore and describe, while quantitative research focuses on the “hard numbers”, to measure differences between variables and the relationships between them. If you’re keen to learn more about the differences between qual and quant, we’ve got a detailed post over here.

So, qualitative analysis is easier than quantitative, right?

Not quite. In many ways, qualitative data can be challenging and time-consuming to analyse and interpret. At the end of your data collection phase (which itself takes a lot of time), you’ll likely have many pages of text-based data or hours upon hours of audio to work through. You might also have subtle nuances of interactions or discussions that have danced around in your mind, or that you scribbled down in messy field notes. All of this needs to work its way into your analysis.

Making sense of all of this is no small task and you shouldn’t underestimate it. Long story short – qualitative analysis can be a lot of work! Of course, quantitative analysis is no piece of cake either, but it’s important to recognise that qualitative analysis still requires a significant investment in terms of time and effort.


In this post, we’ll explore qualitative data analysis by looking at some of the most common analysis methods we encounter. We’re not going to cover every possible qualitative method and we’re not going to go into heavy detail – we’re just going to give you the big picture. That said, we will of course include links to loads of extra resources so that you can learn more about whichever analysis method interests you.

Without further delay, let’s get into it.

The “Big 6” Qualitative Analysis Methods

There are many different types of qualitative data analysis, all of which serve different purposes and have unique strengths and weaknesses. We’ll start by outlining the analysis methods and then we’ll dive into the details for each.

The 6 most popular methods (or at least the ones we see at Grad Coach) are:

- Content analysis

- Narrative analysis

- Discourse analysis

- Thematic analysis

- Grounded theory (GT)

- Interpretive phenomenological analysis (IPA)

Let’s take a look at each of them…

QDA Method #1: Qualitative Content Analysis

Content analysis is possibly the most common and straightforward QDA method. At the simplest level, content analysis is used to evaluate patterns within a piece of content (for example, words, phrases or images) or across multiple pieces of content or sources of communication. For example, a collection of newspaper articles or political speeches.

With content analysis, you could, for instance, identify the frequency with which an idea is shared or spoken about – like the number of times a Kardashian is mentioned on Twitter. Or you could identify patterns of deeper underlying interpretations – for instance, by identifying phrases or words in tourist pamphlets that highlight India as an ancient country.

Because content analysis can be used in such a wide variety of ways, it’s important to go into your analysis with a very specific question and goal, or you’ll get lost in the fog. With content analysis, you’ll group large amounts of text into codes, summarise these into categories, and possibly even tabulate the data to calculate the frequency of certain concepts or variables. Because of this, content analysis provides a small splash of quantitative thinking within a qualitative method.
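To give a feel for the "tabulate the frequency" part, here’s a rough Python sketch that counts how often chosen terms appear in each source. The pamphlet snippets and the term list are invented for illustration, and the approach assumes single-word terms matched as whole words, so it’s a starting point rather than a full content analysis.

```python
import re
from collections import Counter


def term_frequencies(documents, terms):
    """Count whole-word occurrences of each term in each document."""
    table = {}
    for name, text in documents.items():
        words = Counter(re.findall(r"[a-z']+", text.lower()))
        table[name] = {term: words[term.lower()] for term in terms}
    return table


# Invented snippets standing in for tourist pamphlets.
documents = {
    "pamphlet_a": "Discover ancient temples and ancient traditions.",
    "pamphlet_b": "A modern city with timeless charm and ancient forts.",
}

table = term_frequencies(documents, ["ancient", "timeless", "modern"])
for name, counts in table.items():
    print(name, counts)
```

A table like this tells you where a concept shows up and how often, but you’d still need to read the passages to interpret what the wording implies.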

Naturally, while content analysis is widely useful, it’s not without its drawbacks. One of the main issues with content analysis is that it can be very time-consuming, as it requires lots of reading and re-reading of the texts. Also, because of its multidimensional focus on both qualitative and quantitative aspects, it is sometimes accused of losing important nuances in communication.

Content analysis also tends to concentrate on a very specific timeline and doesn’t take into account what happened before or after that timeline. This isn’t necessarily a bad thing though – just something to be aware of. So, keep these factors in mind if you’re considering content analysis. Every analysis method has its limitations, so don’t be put off by these – just be aware of them! If you’re interested in learning more about content analysis, the video below provides a good starting point.

QDA Method #2: Narrative Analysis

As the name suggests, narrative analysis is all about listening to people telling stories and analysing what that means. Since stories serve a functional purpose of helping us make sense of the world, we can gain insights into the ways that people deal with and make sense of reality by analysing their stories and the ways they’re told.

You could, for example, use narrative analysis to explore whether how something is being said is important. For instance, the narrative of a prisoner trying to justify their crime could provide insight into their view of the world and the justice system. Similarly, analysing the ways entrepreneurs talk about the struggles in their careers or cancer patients telling stories of hope could provide powerful insights into their mindsets and perspectives. Simply put, narrative analysis is about paying attention to the stories that people tell – and more importantly, the way they tell them.

Of course, the narrative approach has its weaknesses, too. Sample sizes are generally quite small due to the time-consuming process of capturing narratives. Because of this, along with the multitude of social and lifestyle factors which can influence a subject, narrative analysis can be quite difficult to reproduce in subsequent research. This means that it’s difficult to test the findings of some of this research.

Similarly, researcher bias can have a strong influence on the results here, so you need to be particularly careful about the potential biases you can bring into your analysis when using this method. Nevertheless, narrative analysis is still a very useful qualitative analysis method – just keep these limitations in mind and be careful not to draw broad conclusions. If you’re keen to learn more about narrative analysis, the video below provides a great introduction to this qualitative analysis method.

QDA Method #3: Discourse Analysis

Discourse is simply a fancy word for written or spoken language or debate. So, discourse analysis is all about analysing language within its social context. In other words, analysing language – such as a conversation, a speech, etc – within the culture and society it takes place. For example, you could analyse how a janitor speaks to a CEO, or how politicians speak about terrorism.

To truly understand these conversations or speeches, the culture and history of those involved in the communication are important factors to consider. For example, a janitor might speak more casually with a CEO in a company that emphasises equality among workers. Similarly, a politician might speak more about terrorism if there was a recent terrorist incident in the country.

So, as you can see, by using discourse analysis, you can identify how culture, history or power dynamics (to name a few) have an effect on the way concepts are spoken about. So, if your research aims and objectives involve understanding culture or power dynamics, discourse analysis can be a powerful method.

Because there are many social influences in terms of how we speak to each other, the potential use of discourse analysis is vast. Of course, this also means it’s important to have a very specific research question (or questions) in mind when analysing your data and looking for patterns and themes, or you might end up going down a winding rabbit hole.

Discourse analysis can also be very time-consuming as you need to sample the data to the point of saturation – in other words, until no new information and insights emerge. But this is, of course, part of what makes discourse analysis such a powerful technique. So, keep these factors in mind when considering this QDA method.
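The idea of sampling to the point of saturation can be made concrete with a small sketch. This is only an illustration under assumptions: the function name, the `patience` threshold and the example codes are hypothetical, and in practice judging saturation is an interpretive call rather than a mechanical count.

```python
def interviews_until_saturation(coded_interviews, patience=2):
    """Return the 1-based position of the interview at which saturation is
    reached, i.e. the last of `patience` consecutive interviews that added
    no new codes. Returns None if saturation is never reached."""
    seen = set()
    quiet_streak = 0
    for index, codes in enumerate(coded_interviews, start=1):
        new_codes = set(codes) - seen
        seen |= new_codes
        # Reset the streak whenever an interview contributes something new.
        quiet_streak = 0 if new_codes else quiet_streak + 1
        if quiet_streak >= patience:
            return index
    return None


# Hypothetical codes assigned by hand to five transcripts.
coded = [
    {"deference", "formality"},
    {"formality", "humour"},
    {"deference"},
    {"humour", "formality"},
    {"deference"},
]
print(interviews_until_saturation(coded))
```

Here the third and fourth transcripts add nothing new, so with a patience of two the function reports saturation at the fourth transcript. A stricter study might raise `patience` before deciding to stop sampling.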

QDA Method #4: Thematic Analysis

Thematic analysis looks at patterns of meaning in a data set – for example, a set of interviews or focus group transcripts. But what exactly does that… mean? Well, a thematic analysis takes bodies of data (which are often quite large) and groups them according to similarities – in other words, themes. These themes help us make sense of the content and derive meaning from it.

Let’s take a look at an example.

With thematic analysis, you could analyse 100 online reviews of a popular sushi restaurant to find out what patrons think about the place. By reviewing the data, you would then identify the themes that crop up repeatedly within the data – for example, “fresh ingredients” or “friendly wait staff”.
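To make the sushi example a little more tangible, here is a minimal sketch of tallying how many reviews touch on each theme. The keyword lists, function names and sample reviews are all hypothetical, and simple keyword matching is only a crude stand-in for a researcher actually reading and coding each review.

```python
from collections import Counter

# Hypothetical codebook: each theme maps to phrases that may signal it.
THEME_KEYWORDS = {
    "fresh ingredients": ["fresh", "quality fish"],
    "friendly wait staff": ["friendly", "welcoming"],
}


def tag_review(review):
    """Return the set of themes whose keywords appear in the review."""
    text = review.lower()
    return {
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    }


def count_themes(reviews):
    """Count the number of reviews in which each theme appears."""
    counts = Counter()
    for review in reviews:
        counts.update(tag_review(review))
    return counts


reviews = [
    "The salmon was so fresh and the staff were friendly.",
    "Friendly service, but the wait was long.",
    "Fresh tuna, great value.",
]
theme_counts = count_themes(reviews)
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count} of {len(reviews)} reviews")
```

Each review counts at most once per theme, so the totals describe how widespread a theme is rather than how often a word is repeated.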

So, as you can see, thematic analysis can be pretty useful for finding out about people’s experiences, views, and opinions. Therefore, if your research aims and objectives involve understanding people’s experience or view of something, thematic analysis can be a great choice.

Since thematic analysis is a bit of an exploratory process, it’s not unusual for your research questions to develop, or even change, as you progress through the analysis. While this is somewhat natural in exploratory research, it can also be seen as a disadvantage as it means that data needs to be re-reviewed each time a research question is adjusted. In other words, thematic analysis can be quite time-consuming – but for a good reason. So, keep this in mind if you choose to use thematic analysis for your project and budget extra time for unexpected adjustments.

QDA Method #5: Grounded theory (GT)

Grounded theory is a powerful qualitative analysis method where the intention is to create a new theory (or theories) using the data at hand, through a series of “tests” and “revisions”. Strictly speaking, GT is more a research design type than an analysis method, but we’ve included it here as it’s often referred to as a method.

What’s most important with grounded theory is that you go into the analysis with an open mind and let the data speak for itself – rather than dragging existing hypotheses or theories into your analysis. In other words, your analysis must develop from the ground up (hence the name).

Let’s look at an example of GT in action.

Assume you’re interested in developing a theory about what factors influence students to watch a YouTube video about qualitative analysis. Using grounded theory, you’d start with this general overarching question about the given population (i.e., graduate students). First, you’d approach a small sample – for example, five graduate students in a department at a university. Ideally, this sample would be reasonably representative of the broader population. You’d interview these students to identify what factors lead them to watch the video.

After analysing the interview data, a general pattern could emerge. For example, you might notice that graduate students are more likely to watch a video about qualitative methods if they are just starting on their dissertation journey, or if they have an upcoming test about research methods.

From here, you’ll look for another small sample – for example, five more graduate students in a different department – and see whether this pattern holds true for them. If not, you’ll look for commonalities and adapt your theory accordingly. As this process continues, the theory develops. As we mentioned earlier, what’s important with grounded theory is that the theory develops from the data – not from some preconceived idea.

So, what are the drawbacks of grounded theory? Well, some argue that there’s a tricky circularity to grounded theory. For it to work, in principle, you should know as little as possible regarding the research question and population, so that you reduce the bias in your interpretation. However, in many circumstances, it’s also thought to be unwise to approach a research question without knowledge of the current literature. In other words, it’s a bit of a “chicken or the egg” situation.

Regardless, grounded theory remains a popular (and powerful) option. Naturally, it’s a very useful method when you’re researching a topic that is completely new or has very little existing research about it, as it allows you to start from scratch and work your way from the ground up.

QDA Method #6: Interpretive Phenomenological Analysis (IPA)

Interpretive. Phenomenological. Analysis. IPA. Try saying that three times fast…

Let’s just stick with IPA, okay?

IPA is designed to help you understand the personal experiences of a subject (for example, a person or group of people) concerning a major life event, an experience or a situation. This event or experience is the “phenomenon” that makes up the “P” in IPA. Such phenomena may range from relatively common events – such as motherhood, or being involved in a car accident – to those which are extremely rare – for example, someone’s personal experience in a refugee camp. So, IPA is a great choice if your research involves analysing people’s personal experiences of something that happened to them.

It’s important to remember that IPA is subject-centred. In other words, it’s focused on the experiencer. This means that, while you’ll likely use a coding system to identify commonalities, it’s important not to lose the depth of experience or meaning by trying to reduce everything to codes. Also, keep in mind that since your sample size will generally be very small with IPA, you often won’t be able to draw broad conclusions about the generalisability of your findings. But that’s okay as long as it aligns with your research aims and objectives.

Another thing to be aware of with IPA is personal bias. While researcher bias can creep into all forms of research, self-awareness is critically important with IPA, as it can have a major impact on the results. For example, a researcher who was a victim of a crime himself could insert his own feelings of frustration and anger into the way he interprets the experience of someone who was kidnapped. So, if you’re going to undertake IPA, you need to be very self-aware or you could muddy the analysis.

How to choose the right analysis method

In light of all of the qualitative analysis methods we’ve covered so far, you’re probably asking yourself the question, “How do I choose the right one?”

Much like all the other methodological decisions you’ll need to make, selecting the right qualitative analysis method largely depends on your research aims, objectives and questions. In other words, the best tool for the job depends on what you’re trying to build. For example:

- Perhaps your research aims to analyse the use of words and what they reveal about the intention of the storyteller and the cultural context of the time,

- Perhaps your research aims to develop an understanding of the unique personal experiences of people that have experienced a certain event, or

- Perhaps your research aims to develop insight regarding the influence of a certain culture on its members.

As you can probably see, each of these research aims is distinctly different, and therefore different analysis methods would be suitable for each one. For example, narrative analysis would likely be a good option for the first aim, while grounded theory wouldn’t be as relevant.

It’s also important to remember that each method has its own set of strengths, weaknesses and general limitations. No single analysis method is perfect. So, depending on the nature of your research, it may make sense to adopt more than one method (this is called triangulation). Keep in mind, though, that this will be quite time-consuming.

As we’ve seen, all of the qualitative analysis methods we’ve discussed make use of coding and theme-generating techniques, but the intent and approach of each analysis method differ quite substantially. So, it’s very important to come into your research with a clear intention before you decide which analysis method (or methods) to use.

Start by reviewing your research aims, objectives and research questions to assess what exactly you’re trying to find out – then select a qualitative analysis method that fits. Never pick a method just because you like it or have experience using it – your analysis method (or methods) must align with your broader research aims and objectives.

Let’s recap on QDA methods…

In this post, we looked at six popular qualitative data analysis methods:

- First, we looked at content analysis, a straightforward method that blends a little bit of quant into a primarily qualitative analysis.

- Then we looked at narrative analysis, which is about analysing how stories are told.

- Next up was discourse analysis – which is about analysing conversations and interactions.

- Then we moved on to thematic analysis – which is about identifying themes and patterns.

- From there, we went south with grounded theory – which is about starting from scratch with a specific question and using the data alone to build a theory in response to that question.

- And finally, we looked at IPA – which is about understanding people’s unique experiences of a phenomenon.

Of course, these aren’t the only options when it comes to qualitative data analysis, but they’re a great starting point if you’re dipping your toes into qualitative research for the first time.




What Is Qualitative Research? | Methods & Examples

Published on June 19, 2020 by Pritha Bhandari . Revised on June 22, 2023.

Qualitative research involves collecting and analyzing non-numerical data (e.g., text, video, or audio) to understand concepts, opinions, or experiences. It can be used to gather in-depth insights into a problem or generate new ideas for research.

Qualitative research is the opposite of quantitative research, which involves collecting and analyzing numerical data for statistical analysis.

Qualitative research is commonly used in the humanities and social sciences, in subjects such as anthropology, sociology, education, health sciences, history, etc.

- How does social media shape body image in teenagers?

- How do children and adults interpret healthy eating in the UK?

- What factors influence employee retention in a large organization?

- How is anxiety experienced around the world?

- How can teachers integrate social issues into science curriculums?

Table of contents

- Approaches to qualitative research

- Qualitative research methods

- Qualitative data analysis

- Advantages of qualitative research

- Disadvantages of qualitative research

- Other interesting articles

- Frequently asked questions about qualitative research

Qualitative research is used to understand how people experience the world. While there are many approaches to qualitative research, they tend to be flexible and focus on retaining rich meaning when interpreting data.

Common approaches include grounded theory, ethnography, action research, phenomenological research, and narrative research. They share some similarities, but emphasize different aims and perspectives.

| Approach | What does it involve? |
|---|---|
| Grounded theory | Researchers collect rich data on a topic of interest and develop theories. |
| Ethnography | Researchers immerse themselves in groups or organizations to understand their cultures. |
| Action research | Researchers and participants collaboratively link theory to practice to drive social change. |
| Phenomenological research | Researchers investigate a phenomenon or event by describing and interpreting participants’ lived experiences. |
| Narrative research | Researchers examine how stories are told to understand how participants perceive and make sense of their experiences. |

Note that qualitative research is at risk for certain research biases including the Hawthorne effect, observer bias, recall bias, and social desirability bias. While not always totally avoidable, awareness of potential biases as you collect and analyze your data can prevent them from impacting your work too much.


Each of the research approaches involves using one or more data collection methods. These are some of the most common qualitative methods:

- Observations: recording what you have seen, heard, or encountered in detailed field notes.

- Interviews: personally asking people questions in one-on-one conversations.

- Focus groups: asking questions and generating discussion among a group of people.

- Surveys: distributing questionnaires with open-ended questions.

- Secondary research: collecting existing data in the form of texts, images, audio or video recordings, etc.

For example, to study a company’s culture, you might combine several of these methods:

- You take field notes with observations and reflect on your own experiences of the company culture.

- You distribute open-ended surveys to employees across all the company’s offices by email to find out if the culture varies across locations.

- You conduct in-depth interviews with employees in your office to learn about their experiences and perspectives in greater detail.

Qualitative researchers often consider themselves “instruments” in research because all observations, interpretations and analyses are filtered through their own personal lens.

For this reason, when writing up your methodology for qualitative research, it’s important to reflect on your approach and to thoroughly explain the choices you made in collecting and analyzing the data.

Qualitative data can take the form of texts, photos, videos and audio. For example, you might be working with interview transcripts, survey responses, fieldnotes, or recordings from natural settings.

Most types of qualitative data analysis share the same five steps:

- Prepare and organize your data. This may mean transcribing interviews or typing up fieldnotes.

- Review and explore your data. Examine the data for patterns or repeated ideas that emerge.

- Develop a data coding system. Based on your initial ideas, establish a set of codes that you can apply to categorize your data.

- Assign codes to the data. For example, in qualitative survey analysis, this may mean going through each participant’s responses and tagging them with codes in a spreadsheet. As you go through your data, you can create new codes to add to your system if necessary.

- Identify recurring themes. Link codes together into cohesive, overarching themes.
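The five steps above can be sketched in a few lines of code. This is a simplified illustration under assumptions: the survey responses, the codes and the code-to-theme mapping are all hypothetical, and the coding itself is shown as already done by hand, since that judgment is the researcher's job rather than the script's.

```python
from collections import defaultdict

# Steps 1-2: prepared and reviewed data (hypothetical open-ended answers).
responses = {
    "P1": "My manager listens, but the workload is too high.",
    "P2": "Too many meetings and not enough time.",
    "P3": "I feel heard by my team lead.",
}

# Steps 3-4: a coding system applied by the researcher to each response.
assigned_codes = {
    "P1": ["supportive manager", "heavy workload"],
    "P2": ["heavy workload", "meeting overload"],
    "P3": ["supportive manager"],
}

# Step 5: related codes linked into overarching themes.
code_to_theme = {
    "supportive manager": "leadership",
    "heavy workload": "time pressure",
    "meeting overload": "time pressure",
}


def participants_by_theme(assigned, mapping):
    """Group participants under every theme that their codes belong to."""
    themes = defaultdict(set)
    for participant, codes in assigned.items():
        for code in codes:
            themes[mapping[code]].add(participant)
    return {theme: sorted(people) for theme, people in themes.items()}


themes = participants_by_theme(assigned_codes, code_to_theme)
for theme, people in themes.items():
    print(theme)
    for participant in people:
        # Keep the original wording next to the theme so context is not lost.
        print(f"  {participant}: {responses[participant]}")
```

Printing the original responses under each theme is a deliberate choice: it keeps the participants' own words visible instead of reducing the analysis to labels.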

There are several specific approaches to analyzing qualitative data. Although these methods share similar processes, they emphasize different concepts.

| Approach | When to use | Example |
|---|---|---|
| Content analysis | To describe and categorize common words, phrases, and ideas in qualitative data. | A market researcher could perform content analysis to find out what kind of language is used in descriptions of therapeutic apps. |
| Thematic analysis | To identify and interpret patterns and themes in qualitative data. | A psychologist could apply thematic analysis to travel blogs to explore how tourism shapes self-identity. |
| Textual analysis | To examine the content, structure, and design of texts. | A media researcher could use textual analysis to understand how news coverage of celebrities has changed in the past decade. |
| Discourse analysis | To study communication and how language is used to achieve effects in specific contexts. | A political scientist could use discourse analysis to study how politicians generate trust in election campaigns. |
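As a rough illustration of the content analysis row, the sketch below counts common words across a handful of app descriptions. The descriptions, the stopword list and the function name are hypothetical, and a real content analysis would categorize phrases and ideas as well as single words.

```python
import re
from collections import Counter

# A tiny, hypothetical stopword list; real projects use a fuller one.
STOPWORDS = {"the", "a", "and", "to", "your", "of", "for", "with"}


def term_frequencies(documents):
    """Count how often each non-stopword appears across all documents."""
    counts = Counter()
    for document in documents:
        words = re.findall(r"[a-z']+", document.lower())
        counts.update(word for word in words if word not in STOPWORDS)
    return counts


# Hypothetical descriptions of therapeutic apps.
descriptions = [
    "Calm your mind with guided meditation.",
    "Track your mood and calm anxiety.",
    "Guided breathing for a calm mind.",
]
freq = term_frequencies(descriptions)
print(freq.most_common(3))
```

The counts only show which words recur; deciding what that language means for the research question remains an interpretive step.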

Qualitative research often tries to preserve the voice and perspective of participants and can be adjusted as new research questions arise. Qualitative research is good for:

- Flexibility

The data collection and analysis process can be adapted as new ideas or patterns emerge. They are not rigidly decided beforehand.

- Natural settings

Data collection occurs in real-world contexts or in naturalistic ways.

- Meaningful insights

Detailed descriptions of people’s experiences, feelings and perceptions can be used in designing, testing or improving systems or products.

- Generation of new ideas

Open-ended responses mean that researchers can uncover novel problems or opportunities that they wouldn’t have thought of otherwise.


Researchers must consider practical and theoretical limitations in analyzing and interpreting their data. Qualitative research suffers from:

- Unreliability

The real-world setting often makes qualitative research unreliable because of uncontrolled factors that affect the data.

- Subjectivity

Due to the researcher’s primary role in analyzing and interpreting data, qualitative research cannot be replicated. The researcher decides what is important and what is irrelevant in data analysis, so interpretations of the same data can vary greatly.

- Limited generalizability

Small samples are often used to gather detailed data about specific contexts. Despite rigorous analysis procedures, it is difficult to draw generalizable conclusions because the data may be biased and unrepresentative of the wider population.

- Labor-intensive

Although software can be used to manage and record large amounts of text, data analysis often has to be checked or performed manually.


Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

There are five common approaches to qualitative research:

- Grounded theory involves collecting data in order to develop new theories.

- Ethnography involves immersing yourself in a group or organization to understand its culture.

- Narrative research involves interpreting stories to understand how people make sense of their experiences and perceptions.

- Phenomenological research involves investigating phenomena through people’s lived experiences.

- Action research links theory and practice in several cycles to drive innovative changes.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

There are various approaches to qualitative data analysis, but they all share five steps in common:

- Prepare and organize your data.

- Review and explore your data.

- Develop a data coding system.

- Assign codes to the data.

- Identify recurring themes.

The specifics of each step depend on the focus of the analysis. Some common approaches include textual analysis, thematic analysis, and discourse analysis.

Cite this Scribbr article


Bhandari, P. (2023, June 22). What Is Qualitative Research? | Methods & Examples. Scribbr. Retrieved August 21, 2024, from https://www.scribbr.com/methodology/qualitative-research/


The Ultimate Guide to Qualitative Research - Part 2: Handling Qualitative Data


Qualitative data analysis

Analyzing qualitative data is the next step after you have completed the use of qualitative data collection methods. The qualitative analysis process aims to identify themes and patterns that emerge across the data.

In simplified terms, qualitative research methods involve non-numerical data collection followed by an explanation based on the attributes of the data. For example, if you are asked to explain in qualitative terms a thermal image displayed in multiple colors, then you would explain the color differences rather than the heat's numerical value. If you have a large amount of data (e.g., of group discussions or observations of real-life situations), the next step is to transcribe and prepare the raw data for subsequent analysis.

Researchers can conduct studies fully based on qualitative methodology, or researchers can preface a quantitative research study with a qualitative study to identify issues that were not originally envisioned but are important to the study. Quantitative researchers may also collect and analyze qualitative data following their quantitative analyses to better understand the meanings behind their statistical results.

Conducting qualitative research can especially help build an understanding of how and why certain outcomes were achieved (in addition to what was achieved). For example, qualitative data analysis is often used for policy and program evaluation research since it can answer certain important questions more efficiently and effectively than quantitative approaches.

Qualitative data analysis can also answer important questions about the relevance, unintended effects, and impact of programs, such as:

- Were expectations reasonable?

- Did processes operate as expected?

- Were key players able to carry out their duties?

- Were there any unintended effects of the program?

The importance of qualitative data analysis

Qualitative approaches have the advantage of allowing for more diversity in responses and the capacity to adapt to new developments or issues during the research process itself. While qualitative analysis of data can be demanding and time-consuming to conduct, many fields of research utilize qualitative software tools that have been specifically developed to provide more succinct, cost-efficient, and timely results.

Qualitative data analysis is an important part of research and building greater understanding across fields for a number of reasons. First, cases for qualitative data analysis can be selected purposefully according to whether they typify certain characteristics or contextual locations. In other words, qualitative data permits deep immersion into a topic, phenomenon, or area of interest. Rather than seeking generalizability to the population the sample of participants represent, qualitative research aims to construct an in-depth and nuanced understanding of the research topic.

Secondly, the role or position of the researcher in qualitative analysis of data is given greater critical attention. This is because, in qualitative data analysis, the possibility of the researcher taking a ‘neutral' or transcendent position is seen as more problematic in practical and/or philosophical terms. Hence, qualitative researchers are often exhorted to reflect on their role in the research process and make this clear in the analysis.

Thirdly, while qualitative data analysis can take a wide variety of forms, it largely differs from quantitative research in the focus on language, signs, experiences, and meaning. In addition, qualitative approaches to analysis are often holistic and contextual rather than analyzing the data in a piecemeal fashion or removing the data from its context. Qualitative approaches thus allow researchers to explore inquiries from directions that could not be accessed with only numerical quantitative data.

Establishing research rigor

Systematic and transparent approaches to the analysis of qualitative data are essential for rigor. For example, many qualitative research methods require researchers to carefully code data and discern and document themes in a consistent and credible way.

Perhaps the most traditional division in the way qualitative and quantitative research have been used in the social sciences is for qualitative methods to be used for exploratory purposes (e.g., to generate new theory or propositions) or to explain puzzling quantitative results, while quantitative methods are used to test hypotheses.

After you’ve collected relevant data, what is the best way to look at your data? As always, it will depend on your research question. For instance, if you employed an observational research method to learn about a group’s shared practices, an ethnographic approach could be appropriate to explain the various dimensions of culture. If you collected textual data to understand how people talk about something, then a discourse analysis approach might help you generate key insights about language and communication.

The qualitative data coding process involves iterative categorization and recategorization, ensuring the evolution of the analysis to best represent the data. The procedure typically concludes with the interpretation of patterns and trends identified through the coding process.

To start off, let’s look at two broad approaches to data analysis.

Deductive analysis

Deductive analysis is guided by pre-existing theories or ideas. It starts with a theoretical framework, which is then used to code the data. The researcher can thus use this theoretical framework to interpret their data and answer their research question.

The key steps include coding the data based on the predetermined concepts or categories and using the theory to guide the interpretation of patterns among the codings. Deductive analysis is particularly useful when researchers aim to verify or extend an existing theory within a new context.
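As a rough illustration of deductive coding, the sketch below applies a predetermined codebook to text segments by keyword matching. The codebook, its keywords, and the sample segments are all hypothetical, and keyword matching is only a crude stand-in for a researcher's judgment about whether a segment fits a concept.

```python
import re

# Hypothetical codebook derived from a prior theoretical framework:
# each code maps to indicator keywords chosen before reading the data.
CODEBOOK = {
    "price": ["expensive", "cheap", "cost", "price"],
    "service": ["support", "staff", "helpful", "rude"],
}


def apply_codebook(segment, codebook=CODEBOOK):
    """Return the sorted list of codes whose keywords appear in the segment."""
    words = set(re.findall(r"[a-z']+", segment.lower()))
    return sorted(
        code for code, keywords in codebook.items() if words & set(keywords)
    )


# Made-up example segments, for illustration only.
segments = [
    "The product is too expensive for what it does.",
    "Support staff were helpful and quick.",
    "I like the colour.",
]

coded = {segment: apply_codebook(segment) for segment in segments}
for segment, codes in coded.items():
    print(codes, "<-", segment)
```

Segments that receive no code, like the third one here, are worth a second look: they may point to concepts the existing theory does not cover.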

Inductive analysis

Inductive analysis involves the generation of new theories or ideas based on the data. The process starts without any preconceived theories or codes, and patterns, themes, and categories emerge out of the data.

The researcher codes the data to capture any concepts or patterns that seem interesting or important to the research question. These codes are then compared and linked, leading to the formation of broader categories or themes. The main goal of inductive analysis is to allow the data to 'speak for itself' rather than imposing pre-existing expectations or ideas onto the data.

Deductive and inductive approaches can be seen as the opposite poles of a spectrum, with all research falling somewhere between them. Most often, qualitative analysis approaches blend both deductive and inductive elements to contribute to the existing conversation around a topic while remaining open to potential unexpected findings. To help you make informed decisions about which qualitative data analysis approach fits with your research objectives, let's look at some of the common approaches for qualitative data analysis.

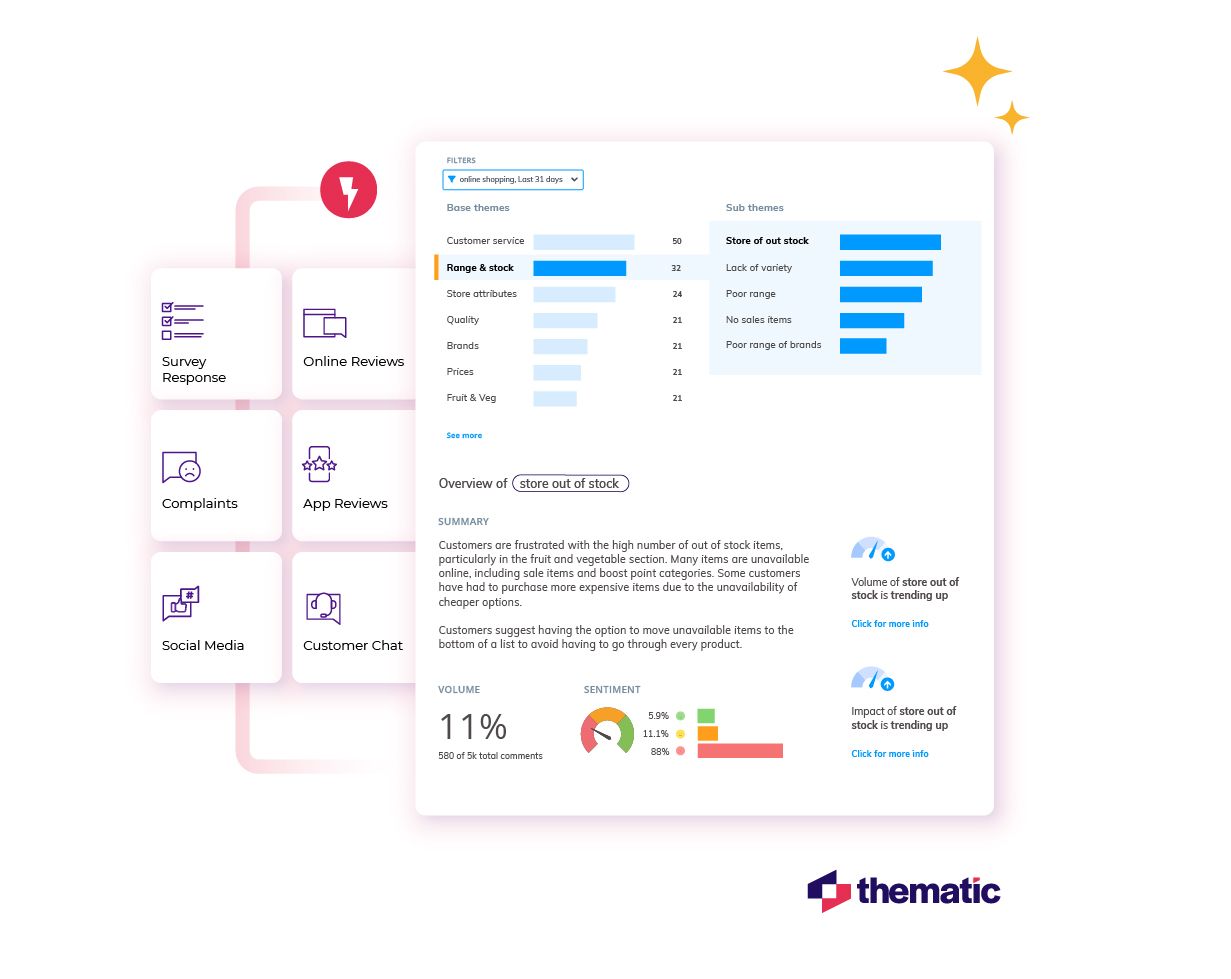

Content analysis is a research method used to identify patterns and themes within qualitative data. This approach involves systematically coding and categorizing specific aspects of the content in the data to uncover trends and patterns. An often important part of content analysis is quantifying frequencies and patterns of words or characteristics present in the data.

It is a highly flexible technique that can be adapted to various data types, including text, images, and audiovisual content. While content analysis can be exploratory in nature, it is also common to use pre-established theories and follow a more deductive approach to categorizing and quantifying the qualitative data.
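The quantifying side of content analysis can be sketched with a simple word-frequency count. This is a minimal example that assumes plain-text responses; the stop-word list and the sample responses are invented for illustration, and a real study would use a fuller stop-word list and likely count coded categories rather than raw words.

```python
import re
from collections import Counter

# Illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "a", "and", "is", "was", "to", "of", "it", "but"}


def word_frequencies(texts):
    """Count how often each non-stop-word occurs across all texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts


# Made-up survey responses.
responses = [
    "The delivery was slow but the support was friendly.",
    "Slow delivery, friendly staff.",
    "Delivery is fast and the staff is friendly.",
]

freq = word_frequencies(responses)
for word, count in freq.most_common(4):
    print(f"{word}: {count}")
```

Frequencies like these only show what is mentioned often, not what it means, so they usually serve as a starting point for closer reading.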

Thematic analysis is a method used to identify, analyze, and report patterns or themes within the data. This approach moves beyond counting explicit words or phrases and focuses on also identifying implicit concepts and themes within the data.

Researchers conduct detailed coding of the data to ascertain repeated themes or patterns of meaning. Codes can be categorized into themes, and the researcher can analyze how the themes relate to one another. Thematic analysis is flexible in terms of the research framework, allowing for both inductive (data-driven) and deductive (theory-driven) approaches. The outcome is a rich, detailed, and complex account of the data.

Grounded theory is a systematic qualitative research methodology that is used to inductively generate theory that is 'grounded' in the data itself. Analysis takes place simultaneously with data collection, and researchers iterate between data collection and analysis until a comprehensive theory is developed.

Grounded theory is characterized by simultaneous data collection and analysis, the development of theoretical codes from the data, purposeful sampling of participants, and the constant comparison of data with emerging categories and concepts. The ultimate goal is to create a theoretical explanation that fits the data and answers the research question.

Discourse analysis is a qualitative research approach that emphasizes the role of language in social contexts. It involves examining communication and language use beyond the level of the sentence, considering larger units of language such as texts or conversations.

Discourse analysts typically investigate how social meanings and understandings are constructed in different contexts, emphasizing the connection between language and power. It can be applied to texts of all kinds, including interviews, documents, case studies, and social media posts.

Phenomenological research focuses on exploring how human beings make sense of an experience and delves into the essence of this experience. It strives to understand people's perceptions, perspectives, and understandings of a particular situation or phenomenon.

It involves in-depth engagement with participants, often through interviews or conversations, to explore their lived experiences. The goal is to derive detailed descriptions of the essence of the experience and to interpret what insights or implications this may bear on our understanding of this phenomenon.


Now that we've summarized the major approaches to data analysis, let's look at the broader process of research and data analysis. To answer any kind of research question, be it an academic inquiry, business problem, or policy decision, you need to collect some data. There are many methods of collecting data: you can collect primary data yourself by conducting interviews, focus groups, or a survey, for instance. Another option is to use secondary data sources. These are data previously collected for other projects, historical records, reports, and statistics; in short, anything that already exists and can be relevant to your research.

The data you collect should always be a good fit for your research question. For example, if you are interested in how many people in your target population like your brand compared to others, it is of little use to conduct interviews or a few focus groups. The sample will be too small to get a representative picture of the population. If your questions are about "how many…", "what is the spread…", etc., you need to conduct quantitative research. If you are interested in why people like different brands, their motives, and their experiences, then conducting qualitative research can provide you with the answers you are looking for.

Let's describe the important steps involved in conducting research.

Step 1: Planning the research

As the saying goes: "Garbage in, garbage out." Suppose you find out after you have collected data that

- you talked to the wrong people,

- you asked the wrong questions,

- a couple of focus group sessions would have yielded better results because of the group interaction, or

- a survey including a few open-ended questions sent to a larger group of people would have been sufficient and required less effort.

At that point, the problem is costly to fix, so think thoroughly beforehand about sampling, the questions you will be asking, and in which form. If you conduct a focus group or an interview, you are the research instrument, and your data collection will only be as good as you are. If you have never done it before, seek some training and practice. If you have other people do it, make sure they have the skills.

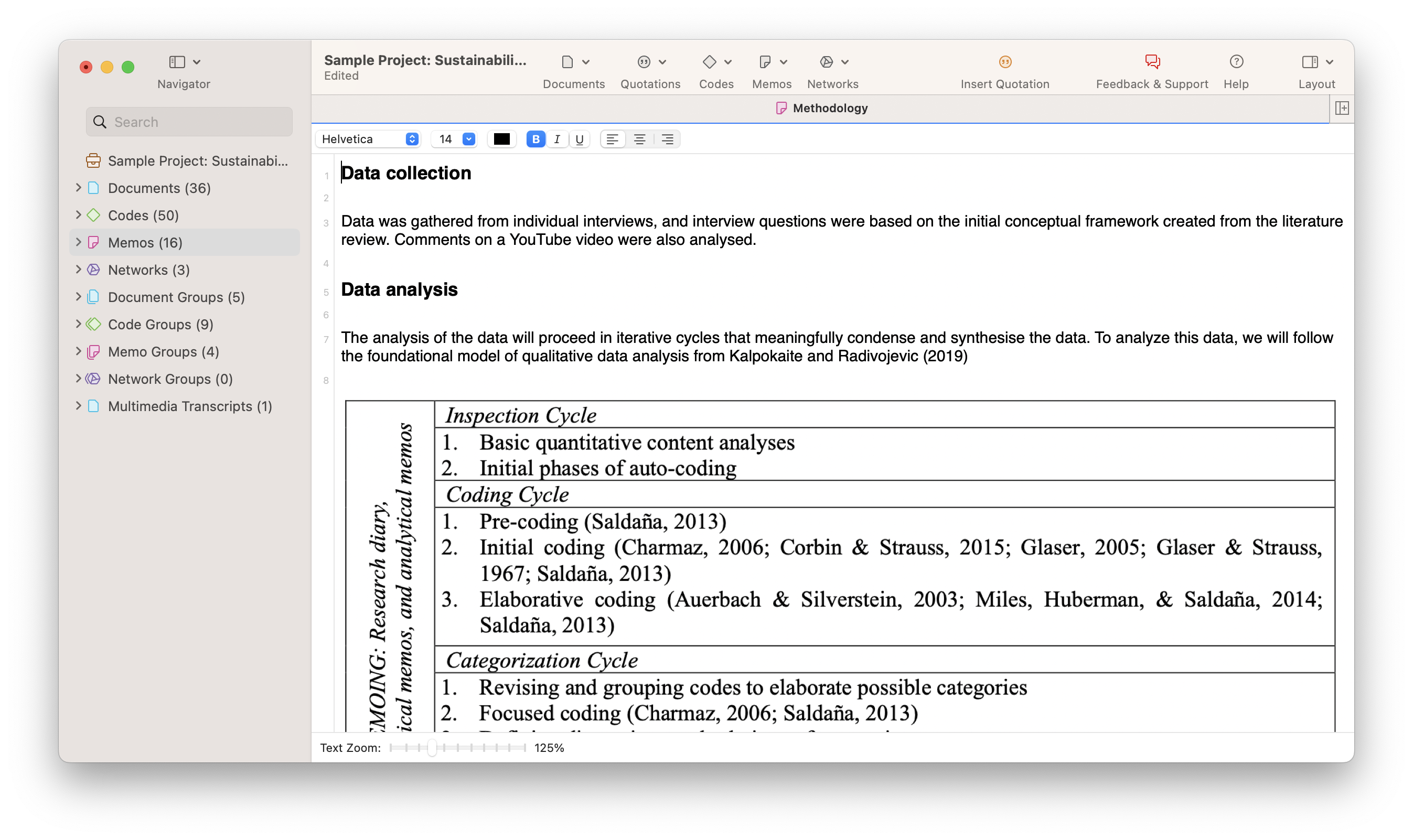

Step 2: Preparing the data

When you conduct focus groups or interviews, think about how to transcribe them. Do you want to run them online or offline? If online, check out which tools can serve your needs, both in terms of functionality and cost. For any audio or video recordings, you can consider using automatic transcription software or services. Automatically generated transcripts can save you time and money, but they still need to be checked. If you don't do this yourself, make sure that you instruct the person doing it on how to prepare the data.

- How should the final transcript be formatted for later analysis?

- Which names and locations should be anonymized?

- What kind of speaker IDs should be used?
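The preparation questions above can be made concrete with a small sketch that replaces names and locations with pseudonyms, which also yields consistent speaker IDs. The pseudonym mapping and the transcript lines are hypothetical, and simple string matching will miss misspellings, nicknames, and indirect identifiers, so the result still needs a manual check.

```python
import re

# Hypothetical mapping from real names and places to pseudonyms.
PSEUDONYMS = {"Maria": "P01", "Springfield": "[CITY]"}


def anonymize(line, pseudonyms=PSEUDONYMS):
    """Replace each whole-word occurrence of a real name with its pseudonym."""
    for real, alias in pseudonyms.items():
        pattern = rf"\b{re.escape(real)}\b"
        # A function replacement avoids any special handling of the alias text.
        line = re.sub(pattern, lambda _match, a=alias: a, line)
    return line


# Made-up transcript lines in "Speaker: utterance" format.
raw = [
    "Interviewer: Thanks for joining, Maria.",
    "Maria: I moved to Springfield in 2019.",
]

clean = [anonymize(line) for line in raw]
for line in clean:
    print(line)
```

Keeping the mapping in a separate, access-restricted file makes it possible to apply the same pseudonyms across all transcripts of a project.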

What about survey data? Some survey data programs will immediately provide basic descriptive-level analysis of the responses. ATLAS.ti will support you with the analysis of the open-ended questions. For this, you need to export your data as an Excel file. ATLAS.ti's survey import wizard will guide you through the process.

Other kinds of data such as images, videos, audio recordings, text, and more can be imported to ATLAS.ti. You can organize all your data into groups and write comments on each source of data to maintain a systematic organization and documentation of your data.

Step 3: Exploratory data analysis

You can run a few simple exploratory analyses to get to know your data. For instance, you can create a word list or word cloud of all your text data or compare and contrast the words in different documents. You can also let ATLAS.ti find relevant concepts for you. There are many tools available that can automatically code your text data, so you can also use these codings to explore your data and refine your coding.

For instance, you can get a feeling for the sentiments expressed in the data. Who is more optimistic, pessimistic, or neutral in their responses? ATLAS.ti can auto-code the positive, negative, and neutral sentiments in your data. Naturally, you can also simply browse through your data and highlight relevant segments that catch your attention or attach codes to begin condensing the data.
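To give a feel for what automatic sentiment coding does in principle, here is a deliberately simple lexicon-based sketch. It is not how ATLAS.ti or any particular tool implements sentiment analysis; the word lists and responses are invented, and the approach ignores negation, sarcasm, and context.

```python
import re

# Toy sentiment lexicons (assumptions for illustration only).
POSITIVE = {"good", "great", "love", "helpful", "easy"}
NEGATIVE = {"bad", "slow", "hate", "confusing", "broken"}


def sentiment(text):
    """Label a text by comparing counts of positive and negative words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Made-up responses keyed by respondent ID.
responses = {
    "R1": "I love the new dashboard, it is easy to use.",
    "R2": "Checkout is slow and the form is confusing.",
    "R3": "I used it twice last week.",
}

labels = {rid: sentiment(text) for rid, text in responses.items()}
print(labels)
```

Automatic labels like these are best treated as a first pass to be reviewed and corrected while reading the data.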

Step 4: Build a code system

Whether you start with auto-coding or manual coding, after having generated some first codes, you need to get some order in your code system to develop a cohesive understanding. You can build your code system by sorting codes into groups and creating categories and subcodes. As this process requires reading and re-reading your data, you will become very familiar with your data. A tool like ATLAS.ti qualitative data analysis software can support you in this process by making it easier to review your data, modify codings if necessary, change code labels, and write operational definitions to explain what each code means.
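One way to picture a code system with categories, subcodes, and operational definitions is as a small tree. The sketch below is a generic illustration, not the data model of any specific software; the `Code` class, the category name, and the definitions are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Code:
    """A code with an operational definition and optional subcodes."""
    name: str
    definition: str = ""
    subcodes: list = field(default_factory=list)

    def add(self, name, definition=""):
        """Create a subcode under this code and return it."""
        child = Code(name, definition)
        self.subcodes.append(child)
        return child

    def paths(self, prefix=""):
        """Yield 'Category: subcode' style labels for every code in the tree."""
        full = f"{prefix}{self.name}"
        yield full
        for child in self.subcodes:
            yield from child.paths(prefix=f"{full}: ")


# Hypothetical category with two subcodes.
barriers = Code("Barriers", "Anything participants say hinders adoption.")
barriers.add("cost", "Mentions of price or budget limits.")
barriers.add("time", "Mentions of lacking time to learn the tool.")

# Renaming a code only changes its label; its definition and position stay.
barriers.subcodes[1].name = "time pressure"

labels = list(barriers.paths())
print(labels)
```

Writing the definition next to each code, as here, makes it easier to apply codes consistently when several people work on the same project.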

Step 5: Query your coded data and write up the analysis

Once you have coded your data, it is time to take the analysis a step further. When using software for qualitative data analysis, it is easy to compare and contrast subsets in your data, such as groups of participants or sets of themes.
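Comparing subsets of coded data often comes down to a cross-tabulation of codes by participant group. The following sketch builds such a table by hand; the groups, codes, and counts are invented, and dedicated software would typically also let you jump from each cell back to the underlying quotations.

```python
from collections import Counter

# Hypothetical coded segments as (participant group, code) pairs.
coded_segments = [
    ("managers", "cost"),
    ("managers", "cost"),
    ("managers", "training"),
    ("staff", "training"),
    ("staff", "training"),
    ("staff", "workload"),
]


def code_by_group(segments):
    """Count how often each code was applied within each group."""
    table = {}
    for group, code in segments:
        table.setdefault(group, Counter())[code] += 1
    return table


table = code_by_group(coded_segments)
codes = sorted({code for _, code in coded_segments})

# Print one row per group with a column for every code.
for group, counts in table.items():
    print(group, {code: counts[code] for code in codes})
```

Differences between rows, such as one group mentioning a code that another never does, indicate where to go back and read the coded segments in context.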