
How to Write a Literature Review | Guide, Examples, & Templates

Published on January 2, 2023 by Shona McCombes. Revised on September 11, 2023.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research that you can later apply to your paper, thesis, or dissertation topic.

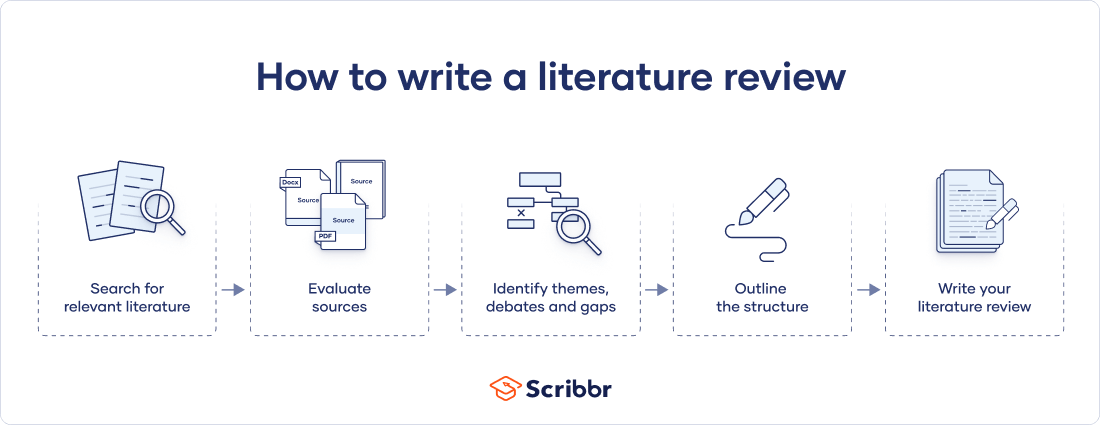

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates, and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarize sources; it analyzes, synthesizes, and critically evaluates them to give a clear picture of the state of knowledge on the subject.


What is the purpose of a literature review?

When you write a thesis, dissertation, or research paper, you will likely have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and its scholarly context

- Develop a theoretical framework and methodology for your research

- Position your work in relation to other researchers and theorists

- Show how your research addresses a gap or contributes to a debate

- Evaluate the current state of research and demonstrate your knowledge of the scholarly debates around your topic.

Writing literature reviews is a particularly important skill if you want to apply for graduate school or pursue a career in research. We’ve written a step-by-step guide that you can follow below.


Examples of literature reviews

Writing a literature review can be challenging! A good starting point is to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)


Step 1 – Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research problem and questions.

Make a list of keywords

Start by creating a list of keywords related to your research question. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list as you discover new keywords in the process of your literature search. For example, for a research question about the impact of social media on body image among Generation Z, your keyword list might include:

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some useful databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project Muse (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can also use Boolean operators (such as AND, OR, and NOT) to refine your search.
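To make the Boolean approach concrete, here is a minimal Python sketch that turns keyword lists like the ones above into a single search string: synonyms within each concept are joined with OR (which broadens the search), and the concepts are joined with AND (which narrows it). The function name `build_boolean_query` is hypothetical, and the quoting and parenthesis conventions are assumptions. Each database has its own search syntax, so check its help pages before pasting the result.

```python
def build_boolean_query(concepts: list[list[str]]) -> str:
    """Join synonyms with OR inside each concept group, then join the groups with AND.

    Assumes the target database accepts double-quoted phrases and parentheses.
    """
    groups = []
    for terms in concepts:
        # Quote multi-word terms so they are searched as exact phrases
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Illustrative keyword groups, one list per key concept
keywords = [
    ["social media", "Facebook", "Instagram", "Twitter", "Snapchat", "TikTok"],
    ["body image", "self-perception", "self-esteem", "mental health"],
    ["Generation Z", "teenagers", "adolescents", "youth"],
]

print(build_boolean_query(keywords))
```

Running the script prints `("social media" OR Facebook OR Instagram OR Twitter OR Snapchat OR TikTok) AND ("body image" OR self-perception OR self-esteem OR "mental health") AND ("Generation Z" OR teenagers OR adolescents OR youth)`. A result then has to match at least one term from every concept group. You could add a NOT clause to exclude an unwanted term, but use it sparingly, because it can also remove relevant sources.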

Make sure to read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

Step 2 – Evaluate and select sources

You likely won’t be able to read everything that has been written on your topic, so you will need to evaluate which sources are most relevant to your research question.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models, and methods?

- Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.


Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It is important to keep track of your sources with citations to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full citation information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.


Step 3 – Identify themes, debates, and gaps

To begin organizing your literature review’s argument and structure, be sure you understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

For example, in reviewing the literature on social media and body image, you might notice that:

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly visual platforms like Instagram and Snapchat—this is a gap that you could address in your own research.

Step 4 – Outline your literature review’s structure

There are various approaches to organizing the body of a literature review. Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarizing sources in order.

Try to analyze patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organize your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5 – Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, you can follow these tips:

- Summarize and synthesize: give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: don’t just paraphrase other researchers — add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: use transition words and topic sentences to draw connections, comparisons and contrasts

In the conclusion, you should summarize the key findings you have taken from the literature and emphasize their significance.

When you’ve finished writing and revising your literature review, don’t forget to proofread thoroughly before submitting.


Frequently asked questions

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarize yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your thesis or dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.

Cite this Scribbr article


McCombes, S. (2023, September 11). How to Write a Literature Review | Guide, Examples, & Templates. Scribbr. Retrieved August 26, 2024, from https://www.scribbr.com/dissertation/literature-review/


What is a Literature Review? How to Write It (with Examples)

A literature review is a critical analysis and synthesis of existing research on a particular topic. It provides an overview of the current state of knowledge, identifies gaps, and highlights key findings in the literature.[1] The purpose of a literature review is to situate your own research within the context of existing scholarship, demonstrating your understanding of the topic and showing how your work contributes to the ongoing conversation in the field. Knowing how to write a literature review is a critical skill for successful research. Your ability to summarize and synthesize prior research on a topic demonstrates your grasp of the subject and supports your own learning.


What is a literature review?

A well-conducted literature review demonstrates the researcher’s familiarity with the existing literature, establishes the context for their own research, and contributes to scholarly conversations on the topic. One of the purposes of a literature review is also to help researchers avoid duplicating previous work and ensure that their research is informed by and builds upon the existing body of knowledge.

What is the purpose of literature review?

A literature review serves several important purposes within academic and research contexts. Here are some key objectives and functions of a literature review[2]:

1. Contextualizing the Research Problem: The literature review provides a background and context for the research problem under investigation. It helps to situate the study within the existing body of knowledge.

2. Identifying Gaps in Knowledge: By identifying gaps, contradictions, or areas requiring further research, the researcher can shape the research question and justify the significance of the study. This is crucial for ensuring that the new research contributes something novel to the field.


3. Understanding Theoretical and Conceptual Frameworks: Literature reviews help researchers gain an understanding of the theoretical and conceptual frameworks used in previous studies. This aids in the development of a theoretical framework for the current research.

4. Providing Methodological Insights: Literature reviews also allow researchers to learn about the methodologies employed in previous studies. This can help in choosing appropriate research methods for the current study and avoiding pitfalls that others may have encountered.

5. Establishing Credibility: A well-conducted literature review demonstrates the researcher’s familiarity with existing scholarship, establishing their credibility and expertise in the field. It also helps in building a solid foundation for the new research.

6. Informing Hypotheses or Research Questions: The literature review guides the formulation of hypotheses or research questions by highlighting relevant findings and areas of uncertainty in existing literature.

Literature review example

Consider an example: suppose your literature review is about the impact of climate change on biodiversity. You might organize your literature review into sections such as the effects of climate change on habitat loss and species extinction, phenological changes, and marine biodiversity. Each section would then summarize and analyze relevant studies in those areas, highlighting key findings and identifying gaps in the research. The review would conclude by emphasizing the need for further research on specific aspects of the relationship between climate change and biodiversity. The following example illustrates the recommended structure and content of a literature review, showing how research findings are organized around specific themes within a broader topic.

Literature Review on Climate Change Impacts on Biodiversity:

Climate change is a global phenomenon with far-reaching consequences, including significant impacts on biodiversity. This literature review synthesizes key findings from various studies:

a. Habitat Loss and Species Extinction:

Climate change-induced alterations in temperature and precipitation patterns contribute to habitat loss, affecting numerous species (Thomas et al., 2004). The review discusses how these changes increase the risk of extinction, particularly for species with specific habitat requirements.

b. Range Shifts and Phenological Changes:

Observations of range shifts and changes in the timing of biological events (phenology) are documented in response to changing climatic conditions (Parmesan & Yohe, 2003). These shifts affect ecosystems and may lead to mismatches between species and their resources.

c. Ocean Acidification and Coral Reefs:

The review explores the impact of climate change on marine biodiversity, emphasizing ocean acidification’s threat to coral reefs (Hoegh-Guldberg et al., 2007). Changes in pH levels negatively affect coral calcification, disrupting the delicate balance of marine ecosystems.

d. Adaptive Strategies and Conservation Efforts:

Recognizing the urgency of the situation, the literature review discusses various adaptive strategies adopted by species and conservation efforts aimed at mitigating the impacts of climate change on biodiversity (Hannah et al., 2007). It emphasizes the importance of interdisciplinary approaches for effective conservation planning.


Writing a literature review involves summarizing and synthesizing existing research on a particular topic. A well-structured literature review typically includes the following elements.

Introduction: The introduction sets the stage for your literature review, providing context and introducing the main focus of your review.

- Opening Statement: Begin with a general statement about the broader topic and its significance in the field.

- Scope and Purpose: Clearly define the scope of your literature review. Explain the specific research question or objective you aim to address.

- Organizational Framework: Briefly outline the structure of your literature review, indicating how you will categorize and discuss the existing research.

- Significance of the Study: Highlight why your literature review is important and how it contributes to the understanding of the chosen topic.

- Thesis Statement: Conclude the introduction with a concise thesis statement that outlines the main argument or perspective you will develop in the body of the literature review.

Body: The body of the literature review is where you provide a comprehensive analysis of existing literature, grouping studies based on themes, methodologies, or other relevant criteria.

- Organize by Theme or Concept: Group studies that share common themes, concepts, or methodologies. Discuss each theme or concept in detail, summarizing key findings and identifying gaps or areas of disagreement.

- Critical Analysis: Evaluate the strengths and weaknesses of each study. Discuss the methodologies used, the quality of evidence, and the overall contribution of each work to the understanding of the topic.

- Synthesis of Findings: Synthesize the information from different studies to highlight trends, patterns, or areas of consensus in the literature.

- Identification of Gaps: Discuss any gaps or limitations in the existing research and explain how your review contributes to filling these gaps.

- Transition between Sections: Provide smooth transitions between different themes or concepts to maintain the flow of your literature review.


Conclusion: The conclusion of your literature review should summarize the main findings, highlight the contributions of the review, and suggest avenues for future research.

- Summary of Key Findings: Recap the main findings from the literature and restate how they contribute to your research question or objective.

- Contributions to the Field: Discuss the overall contribution of your literature review to the existing knowledge in the field.

- Implications and Applications: Explore the practical implications of the findings and suggest how they might impact future research or practice.

- Recommendations for Future Research: Identify areas that require further investigation and propose potential directions for future research in the field.

- Final Thoughts: Conclude with a final reflection on the importance of your literature review and its relevance to the broader academic community.

Conducting a literature review

Conducting a literature review is an essential step in research that involves reviewing and analyzing existing literature on a specific topic. To do a literature review effectively, follow these steps[1]:

Choose a Topic and Define the Research Question:

- Select a topic that is relevant to your field of study.

- Clearly define your research question or objective. Determine which specific aspect of the topic you want to explore.

Decide on the Scope of Your Review:

- Determine the timeframe for your literature review. Are you focusing on recent developments, or do you want a historical overview?

- Consider the geographical scope. Is your review global, or are you focusing on a specific region?

- Define the inclusion and exclusion criteria. What types of sources will you include? Are there specific types of studies or publications you will exclude?

Select Databases for Searches:

- Identify relevant databases for your field. Examples include PubMed, IEEE Xplore, Scopus, Web of Science, and Google Scholar.

- Consider searching in library catalogs, institutional repositories, and specialized databases related to your topic.

Conduct Searches and Keep Track:

- Develop a systematic search strategy using keywords, Boolean operators (AND, OR, NOT), and other search techniques.

- Record and document your search strategy for transparency and replicability.

- Keep track of the articles, including publication details, abstracts, and links. Use citation management tools like EndNote, Zotero, or Mendeley to organize your references.

Review the Literature:

- Evaluate the relevance and quality of each source. Consider the methodology, sample size, and results of studies.

- Organize the literature by themes or key concepts. Identify patterns, trends, and gaps in the existing research.

- Summarize key findings and arguments from each source. Compare and contrast different perspectives.

- Identify areas where there is a consensus in the literature and where there are conflicting opinions.

- Provide critical analysis and synthesis of the literature. What are the strengths and weaknesses of existing research?

Organize and Write Your Literature Review:

- Base your literature review outline on themes, chronological order, or methodological approaches.

- Write a clear and coherent narrative that synthesizes the information gathered.

- Use proper citations for each source and ensure consistency in your citation style (APA, MLA, Chicago, etc.).

- Conclude your literature review by summarizing key findings, identifying gaps, and suggesting areas for future research.


The literature review example and the detailed advice on conducting and writing a review will help you produce a well-structured report. But remember that a good literature review is an ongoing process, and you may need to revisit and update it as your research progresses.

Frequently asked questions

A literature review is a critical and comprehensive analysis of existing literature (published and unpublished works) on a specific topic or research question and provides a synthesis of the current state of knowledge in a particular field. A well-conducted literature review is crucial for researchers to build upon existing knowledge, avoid duplication of efforts, and contribute to the advancement of their field. It also helps researchers situate their work within a broader context and facilitates the development of a sound theoretical and conceptual framework for their studies.

A literature review is a crucial component of research writing, providing a solid background for a research paper’s investigation. Its aim is to keep professionals up to date by providing an understanding of ongoing developments within a specific field, including the research methods and experimental techniques used in that field, and to present that knowledge in the form of a written report. The depth and breadth of a literature review also demonstrate the scholar’s credibility in their field.

Before writing a literature review, it’s essential to undertake several preparatory steps to ensure that your review is well-researched, organized, and focused. These include choosing a topic of general interest to you, doing exploratory research on that topic, writing an annotated bibliography, and noting major points, especially those that relate to the position you have taken on the topic.

Literature reviews and academic research papers are essential components of scholarly work but serve different purposes within the academic realm.[3] A literature review aims to provide a foundation for understanding the current state of research on a particular topic, identify gaps or controversies, and lay the groundwork for future research. Therefore, it draws heavily from existing academic sources, including books, journal articles, and other scholarly publications. In contrast, an academic research paper aims to present new knowledge, contribute to the academic discourse, and advance the understanding of a specific research question. Therefore, it involves a mix of existing literature (in the introduction and literature review sections) and original data or findings obtained through research methods.

Literature reviews are essential components of academic and research papers, and various strategies can be employed to conduct them effectively. If you want to know how to write a literature review for a research paper, here are four common approaches that are often used by researchers:

- Chronological Review: This strategy involves organizing the literature based on the chronological order of publication. It helps to trace the development of a topic over time, showing how ideas, theories, and research have evolved.

- Thematic Review: Thematic reviews focus on identifying and analyzing themes or topics that cut across different studies. Instead of organizing the literature chronologically, it is grouped by key themes or concepts, allowing for a comprehensive exploration of various aspects of the topic.

- Methodological Review: This strategy involves organizing the literature based on the research methods employed in different studies. It helps to highlight the strengths and weaknesses of various methodologies and allows the reader to evaluate the reliability and validity of the research findings.

- Theoretical Review: A theoretical review examines the literature based on the theoretical frameworks used in different studies. This approach helps to identify the key theories that have been applied to the topic and assess their contributions to the understanding of the subject.

It’s important to note that these strategies are not mutually exclusive, and a literature review may combine elements of more than one approach. The choice of strategy depends on the research question, the nature of the literature available, and the goals of the review. Additionally, other strategies, such as integrative reviews or systematic reviews, may be employed depending on the specific requirements of the research.

The literature review format can vary depending on the specific publication guidelines. However, some common elements and structures are often followed. Here is a general guideline for the format of a literature review:

- Introduction: Provide an overview of the topic. Define the scope and purpose of the literature review. State the research question or objective.

- Body: Organize the literature by themes, concepts, or chronology. Critically analyze and evaluate each source. Discuss the strengths and weaknesses of the studies. Highlight any methodological limitations or biases. Identify patterns, connections, or contradictions in the existing research.

- Conclusion: Summarize the key points discussed in the literature review. Highlight the research gap. Address the research question or objective stated in the introduction. Highlight the contributions of the review and suggest directions for future research.

Both annotated bibliographies and literature reviews involve the examination of scholarly sources. While annotated bibliographies focus on individual sources with brief annotations, literature reviews provide a more in-depth, integrated, and comprehensive analysis of existing literature on a specific topic. The key differences are as follows:

| | Annotated Bibliography | Literature Review |
| --- | --- | --- |
| Purpose | List of citations of books, articles, and other sources with a brief description (annotation) of each source. | Comprehensive and critical analysis of existing literature on a specific topic. |
| Focus | Summary and evaluation of each source, including its relevance, methodology, and key findings. | Overview of the current state of knowledge on a particular subject, identifying gaps, trends, and patterns in existing literature. |
| Structure | Each citation is followed by a concise paragraph (annotation) that describes the source's content, methodology, and contribution to the topic. | Organized thematically or chronologically; synthesizes the findings from different sources to build a narrative or argument. |
| Length | Typically 100-200 words per annotation | From a few pages to several chapters |
| Independence | Each source is treated separately, with less emphasis on synthesizing the information across sources. | The writer synthesizes information from multiple sources to present a cohesive overview of the topic. |

References

- Denney, A. S., & Tewksbury, R. (2013). How to write a literature review. Journal of Criminal Justice Education, 24(2), 218-234.

- Pan, M. L. (2016). Preparing literature reviews: Qualitative and quantitative approaches. Taylor & Francis.

- Cantero, C. (2019). How to write a literature review. San José State University Writing Center.


Learning and Teaching: Literature Review


Literature Reviews: An Overview for Graduate Students

1. Definition

Not to be confused with a book review, a literature review surveys scholarly articles, books and other sources (e.g. dissertations, conference proceedings) relevant to a particular issue, area of research, or theory, providing a description, summary, and critical evaluation of each work. The purpose is to offer an overview of significant literature published on a topic.

2. Components

Similar to primary research, development of the literature review requires four stages:

- Problem formulation—which topic or field is being examined and what are its component issues?

- Literature search—finding materials relevant to the subject being explored

- Data evaluation—determining which literature makes a significant contribution to the understanding of the topic

- Analysis and interpretation—discussing the findings and conclusions of pertinent literature

Literature reviews should comprise the following elements:

- An overview of the subject, issue or theory under consideration, along with the objectives of the literature review

- Division of works under review into categories (e.g. those in support of a particular position, those against, and those offering alternative theses entirely)

- Explanation of how each work is similar to and how it varies from the others

- Conclusions as to which works are the best argued and most convincing, and which make the greatest contribution to the understanding and development of their area of research

In assessing each piece, consideration should be given to:

- Provenance—What are the author's credentials? Are the author's arguments supported by evidence (e.g. primary historical material, case studies, narratives, statistics, recent scientific findings)?

- Objectivity—Is the author's perspective even-handed or prejudicial? Is contrary data considered or is certain pertinent information ignored to prove the author's point?

- Persuasiveness—Which of the author's theses are most/least convincing?

- Value—Are the author's arguments and conclusions convincing? Does the work ultimately contribute in any significant way to an understanding of the subject?

3. Definition and Use/Purpose

A literature review may constitute an essential chapter of a thesis or dissertation, or may be a self-contained review of writings on a subject. In either case, its purpose is to:

- Place each work in the context of its contribution to the understanding of the subject under review

- Describe the relationship of each work to the others under consideration

- Identify new ways to interpret, and shed light on any gaps in, previous research

- Resolve conflicts amongst seemingly contradictory previous studies

- Identify areas of prior scholarship to prevent duplication of effort

- Point the way forward for further research

- Place one's original work (in the case of theses or dissertations) in the context of existing literature

The literature review itself, however, does not present new primary scholarship.

All of the above information was obtained from UC Santa Cruz University Library http://guides.library.ucsc.edu/write-a-literature-review

Selected LitReview Resources at Copley


- Last Updated: May 22, 2024 2:28 PM

- URL: https://libguides.sandiego.edu/educ

Literature Reviews

What Is a Literature Review?

A literature review is a review and synthesis of existing research on a topic or research question. A literature review is meant to analyze the scholarly literature, make connections across writings and identify strengths, weaknesses, trends, and missing conversations. A literature review should address different aspects of a topic as it relates to your research question. A literature review goes beyond a description or summary of the literature you have read.

Learning More about How to Do a Literature Review

- Sage Research Methods Core: SAGE Research Methods supports research at all levels by providing material to guide users through every step of the research process. SAGE Research Methods is the ultimate methods library with more than 1000 books, reference works, journal articles, and instructional videos by world-leading academics from across the social sciences, including the largest collection of qualitative methods books available online from any scholarly publisher. – Publisher

- Last Updated: Jul 8, 2024 11:22 AM

- URL: https://libguides.northwestern.edu/literaturereviews


- Clinics (Sao Paulo)

Approaching literature review for academic purposes: The Literature Review Checklist

Debora F. B. Leite

I Departamento de Ginecologia e Obstetricia, Faculdade de Ciencias Medicas, Universidade Estadual de Campinas, Campinas, SP, BR

II Universidade Federal de Pernambuco, Pernambuco, PE, BR

III Hospital das Clinicas, Universidade Federal de Pernambuco, Pernambuco, PE, BR

Maria Auxiliadora Soares Padilha

Jose G. Cecatti

A sophisticated literature review (LR) can result in a robust dissertation/thesis by scrutinizing the main problem examined by the academic study; anticipating research hypotheses, methods and results; and maintaining the interest of the audience in how the dissertation/thesis will provide solutions for the current gaps in a particular field. Unfortunately, little guidance is available on developing LRs, and writing an LR chapter is not a linear process. An LR reflects students' abilities in information literacy, the language domain, and critical writing, and students in postgraduate programs should be systematically trained in these skills. This paper therefore first discusses the purposes of LRs in dissertations and theses. Second, it considers five steps for developing a review: defining the main topic, searching the literature, analyzing the results, writing the review and reflecting on the writing. Finally, it proposes a twelve-item LR checklist. By clearly stating the desired achievements, this checklist allows Master's and Ph.D. students to continuously assess their own progress in developing an LR. Institutions aiming to strengthen students' skills in critical academic writing should also use this tool.

INTRODUCTION

Writing the literature review (LR) is often viewed as a difficult task that can be a point of writer’s block and procrastination ( 1 ) in postgraduate life. Disagreements on the definitions or classifications of LRs ( 2 ) may confuse students about their purpose and scope, as well as how to perform an LR. Interestingly, at many universities, the LR is still an important element in any academic work, despite the more recent trend of producing scientific articles rather than classical theses.

The LR is not an isolated section of the thesis/dissertation or a copy of the background section of a research proposal. It identifies the state-of-the-art knowledge in a particular field, clarifies information that is already known, elucidates implications of the problem being analyzed, links theory and practice ( 3 - 5 ), highlights gaps in the current literature, and places the dissertation/thesis within the research agenda of that field. Additionally, by writing the LR, postgraduate students will comprehend the structure of the subject and develop their cognitive connections ( 3 ) while analyzing and synthesizing data with increasing maturity.

At the same time, the LR transforms the student and hints at the contents of other chapters for the reader. First, the LR explains the research question; second, it supports the hypothesis, objectives, and methods of the research project; and finally, it facilitates a description of the student’s interpretation of the results and his/her conclusions. For scholars, the LR is an introductory chapter ( 6 ). If it is well written, it demonstrates the student’s understanding of and maturity in a particular topic. A sound and sophisticated LR can indicate a robust dissertation/thesis.

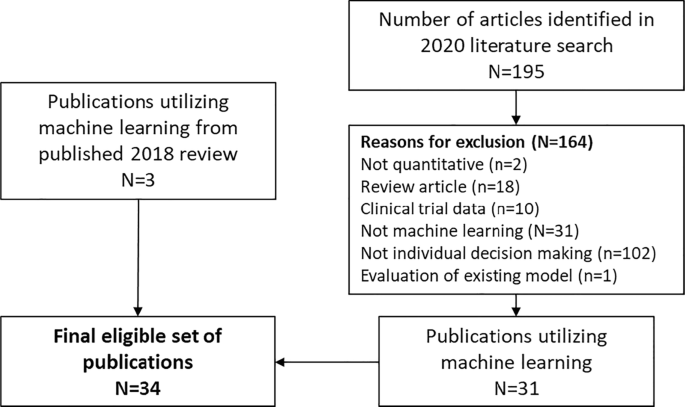

A consensus on the best method to structure a dissertation/thesis has not been achieved. The LR can be a distinct chapter or included in different sections; it can be part of the introduction chapter, part of each research topic, or part of each published paper ( 7 ). However, scholars view the LR as an integral part of the main body of an academic work because it is intrinsically connected to other sections ( Figure 1 ) and is frequently present. The structure of the LR depends on the conventions of a particular discipline, the rules of the department, and the student's and supervisor's areas of expertise, needs and interests.

Interestingly, many postgraduate students choose to submit their LR to peer-reviewed journals. As LRs are critical evaluations of current knowledge, they are indeed publishable material, whether as narrative or systematic reviews. However, systematic reviews follow specific patterns¹ ( 8 ) that may not entirely fit the questions posed in the dissertation/thesis. Additionally, the scope of a systematic review may be too narrow, and the strict criteria for study inclusion may omit important information from the dissertation/thesis. Therefore, this essay discusses what an LR is and how to develop one in the context of an academic dissertation/thesis. Finally, we suggest a checklist to evaluate an LR.

WHAT IS A LITERATURE REVIEW IN A THESIS?

Conducting research and writing a dissertation/thesis call for both rational thinking and enthusiasm ( 9 ). While a substantial body of literature instructs students on research methodology, data analysis and writing scientific papers, little guidance on performing LRs is available. The LR is a unique opportunity to assess and contrast various arguments and theories, not just summarize them. The research results should not be discussed within the LR, but postgraduate students tend to write a comprehensive LR while reflecting on their own findings ( 10 ).

Many people believe that writing an LR is a lonely and linear process. Supervisors or institutions often assume that the Ph.D. student has mastered the relevant techniques and vocabulary associated with his/her subject and can reflect independently on previously published findings. Indeed, while developing the LR, the student must combine diverse skills whose mastery depends mainly on his/her own commitment; thus, less supervision should be required ( 11 ). However, these assumptions may not hold for many students ( 11 , 12 ), and the lack of formal and systematic training on writing LRs is an important concern ( 11 ).

An institutional environment devoted to active learning will provide students the opportunity to continuously reflect on LRs, which will form a dialogue between the postgraduate student and the current literature in a particular field ( 13 ). Postgraduate students will be interpreting studies by other researchers, and, according to Hart (1998) ( 3 ), the outcomes of the LR in a dissertation/thesis include the following:

- To identify what research has been performed and what topics require further investigation in a particular field of knowledge;

- To determine the context of the problem;

- To recognize the main methodologies and techniques that have been used in the past;

- To place the current research project within the historical, methodological and theoretical context of a particular field;

- To identify significant aspects of the topic;

- To elucidate the implications of the topic;

- To offer an alternative perspective;

- To discern how the studied subject is structured;

- To improve the student’s subject vocabulary in a particular field; and

- To characterize the links between theory and practice.

A sound LR reflects the postgraduate student's expertise in academic and scientific writing: it expresses his/her level of comfort with synthesizing ideas ( 11 ). The LR reveals how far the postgraduate student has progressed in three domains: an effective literature search, the language domain, and critical writing.

Effective literature search

All students should be trained in gathering appropriate data for specific purposes, and information literacy skills are a cornerstone. These skills are defined as “an individual’s ability to know when they need information, to identify information that can help them address the issue or problem at hand, and to locate, evaluate, and use that information effectively” ( 14 ). Librarian support is of vital importance in coaching the appropriate use of Boolean logic (AND, OR, NOT) and other tools for highly efficient literature searches (e.g., quotation marks and truncation), as is the appropriate management of electronic databases.
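As a rough illustration of how Boolean operators, quotation marks and truncation combine in a search string, the sketch below builds a query from groups of synonyms: synonyms within one concept are joined with OR, concepts are joined with AND, and unwanted terms are appended with NOT. The helper names (`build_query`, `quote_if_phrase`) and the example terms are hypothetical. The `*` truncation symbol, phrase syntax and operator precedence all vary between databases, so any generated string should be checked against each database's own search help, ideally with a librarian.

```python
from __future__ import annotations


def quote_if_phrase(term: str) -> str:
    """Wrap multi-word terms in quotation marks so they are searched as exact phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concepts: list[list[str]], exclude: list[str] | None = None) -> str:
    """Hypothetical helper (not a database API): OR the synonyms inside each
    concept, AND the concepts together, then append NOT for excluded terms."""
    groups = []
    for synonyms in concepts:
        joined = " OR ".join(quote_if_phrase(t) for t in synonyms)
        groups.append(f"({joined})")
    query = " AND ".join(groups)
    for term in exclude or []:
        query += f" NOT {quote_if_phrase(term)}"
    return query


if __name__ == "__main__":
    concepts = [
        ["literature review", "narrative review"],
        ["postgraduate", "doctoral", "thesis"],
        ["writ*"],  # truncation: in databases that use *, matches write, writing, written
    ]
    print(build_query(concepts, exclude=["systematic review"]))
    # ("literature review" OR "narrative review") AND (postgraduate OR doctoral OR thesis) AND (writ*) NOT "systematic review"
```

Keeping each concept's synonyms in its own parenthesized OR group is what stops the AND from binding to only one synonym.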

Language domain

Academic writing must be concise and precise: unnecessary words distract the reader from the essential content ( 15 ). In this context, reading about issues distant from the research topic ( 16 ) may increase students’ general vocabulary and familiarity with grammar. Ultimately, reading diverse materials facilitates and encourages the writing process itself.

Critical writing

Critical judgment includes critical reading, thinking and writing. It requires the student's analytical reflection on what he/she has read. The student should delineate the basic elements of the topic, characterize the most relevant claims, identify relationships, and finally contrast those relationships ( 17 ). Each scientific document highlights the perspective of the author, and as students read more articles, they will become more confident in judging the supporting evidence and underlying premises of a study and in constructing their own counterarguments. A paucity of integration or contradictory perspectives indicates lower levels of cognitive complexity ( 12 ).

Thus, while developing an LR, the postgraduate student should achieve the highest category of Bloom's cognitive skills: evaluation ( 12 ). The writer should not only summarize data and understand each topic but also be able to make judgments based on objective criteria, compare resources and findings, identify discrepancies due to methodology, and construct his/her own argument ( 12 ). As a result, the student will be sufficiently confident to show his/her own voice.

Writing a sound LR is an intense and complex activity that reveals the training and long-lasting academic skills of a writer. It is not a lonely or linear process. However, students are unlikely to be prepared to write an LR if they have not mastered the aforementioned domains ( 10 ). An institutional environment that supports student learning is crucial.

Different institutions employ distinct methods to promote students' learning processes. First, many universities offer modules with behind-the-scenes activities that enhance self-reflection about general skills (e.g., the skills we have mastered and the skills we need to develop further), behaviors that should be incorporated (e.g., self-criticism about one's own thoughts), and each student's role in the advancement of his/her field. Lectures or workshops about LRs themselves are useful because they describe the purposes of the LR and how it fits into the whole picture of a student's work. These activities may explain what type of discussion an LR must involve, the importance of defining the correct scope, the reasons to include a particular resource, and the main role of critical reading.

Some pedagogic services that promote a continuous improvement in study and academic skills are equally important. Examples include workshops about time management, the accomplishment of personal objectives, active learning, and foreign languages for nonnative speakers. Additionally, opportunities to converse with other students promote an awareness of others' experiences and difficulties. Ultimately, the supervisor's role in providing feedback and setting deadlines is crucial in developing students' abilities and in strengthening students' writing quality ( 12 ).

HOW SHOULD A LITERATURE REVIEW BE DEVELOPED?

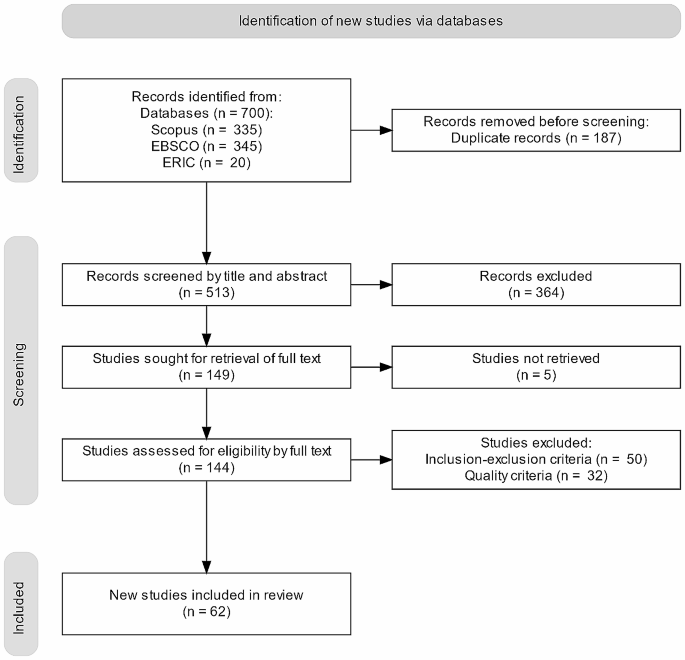

A consensus on the appropriate method for elaborating an LR is not available, but four main steps are generally accepted: defining the main topic, searching the literature, analyzing the results, and writing ( 6 ). We suggest a fifth step: reflecting on the information that has been written in previous publications ( Figure 2 ).

First step: Defining the main topic

Planning an LR is directly linked to the main research question of the thesis and occurs in parallel with students' training in the three domains discussed above. The planning stage helps organize ideas, delimit the scope of the LR ( 11 ), and avoid wasting time in the process. Planning includes the following steps:

- Reflecting on the scope of the LR: postgraduate students will have assumptions about what material must be addressed and what information is not essential to an LR ( 13 , 18 ). Cooper's Taxonomy of Literature Reviews² systematizes the writing process through six characteristics, each with nonmutually exclusive categories. The focus refers to the reviewer's most important points of interest, while the goals concern what students want to achieve with the LR. The perspective reflects the student's own view of the LR and how he/she presents a particular issue. The coverage defines how comprehensive the student is in presenting the literature, and the organization determines the sequence of arguments. The audience is the group for whom the LR is written.

- Designating sections and subsections: Headings and subheadings should be specific, explanatory and have a coherent sequence throughout the text ( 4 ). They resemble an inverted pyramid, with an increasing level of reflection and depth of argument.

- Identifying keywords: The relevant keywords for each LR section should be listed to guide the literature search. This list should mirror what Hart (1998) ( 3 ) advocates as subject vocabulary. The keywords will also be useful when the student is writing the LR since they guide the reader through the text.

- Delineating the time interval and language: The time interval and language of the documents to be retrieved in the second step should be defined. The most recently published documents should be considered, but relevant texts published before a predefined cutoff year can be included if they are classic documents in that field. Extra care should be employed when translating documents.
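The time-interval and language decisions above can also be written down as an explicit screening rule, which makes them easier to report and to apply consistently. This minimal sketch assumes each retrieved record has hypothetical `year`, `language` and `is_classic` fields, with the classic flag set by the student's own judgment; the cutoff year and accepted languages in the example are placeholders, not recommendations.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Record:
    """A retrieved document; the fields are hypothetical, chosen for illustration."""
    title: str
    year: int
    language: str
    is_classic: bool = False  # flagged by the student as a classic text in the field


def within_scope(rec: Record, cutoff_year: int, languages: set[str]) -> bool:
    """Keep documents in an accepted language that were published in or after
    the cutoff year, or that are flagged as classics regardless of age."""
    if rec.language not in languages:
        return False
    return rec.year >= cutoff_year or rec.is_classic


records = [
    Record("Recent study", 2021, "en"),
    Record("Old study", 1995, "en"),
    Record("Classic text", 1998, "en", is_classic=True),
    Record("Recent study in Portuguese", 2020, "pt"),
]

kept = [r.title for r in records if within_scope(r, cutoff_year=2010, languages={"en"})]
print(kept)  # ['Recent study', 'Classic text']
```

Stating the rule this explicitly also makes it easy to rerun when the search is repeated before submission.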

Second step: Searching the literature

The ability to gather adequate information from the literature must be addressed in postgraduate programs. Librarian support is important, particularly for accessing difficult texts. This step comprises the following components:

- Searching the literature itself: This process consists of defining which databases (electronic or dissertation/thesis repositories), official documents, and books will be searched and then actively conducting the search. Information literacy skills have a central role in this stage. While searching electronic databases, controlled vocabulary (e.g., Medical Subject Headings, or MeSH, for the PubMed database) or specific standardized syntax rules may need to be applied.

In addition, two other approaches are suggested. First, a review of the reference list of each document might be useful for identifying relevant publications to be included and important opinions to be assessed. This step is also relevant for referencing the original studies and leading authors in that field. Moreover, students can directly contact the experts on a particular topic to consult with them regarding their experience or use them as a source of additional unpublished documents.

Before submitting a dissertation/thesis, the electronic search strategy should be repeated. This process will ensure that the most recently published papers will be considered in the LR.

- Selecting documents for inclusion: Generally, the most recent literature will be included in the form of published peer-reviewed papers. Books and unpublished material, such as conference abstracts, academic texts and government reports, are also important to assess, since this gray literature also offers valuable information. However, since these materials are not peer-reviewed, we recommend adding them to the LR with care.

This task is an important exercise in time management. First, students should read the title and abstract to understand whether that document suits their purposes, addresses the research question, and helps develop the topic of interest. Then, they should scan the full text, determine how it is structured, group it with similar documents, and verify whether other arguments might be considered ( 5 ).

Third step: Analyzing the results

Critical reading and thinking skills are important in this step. This step consists of the following components:

- Reading documents: The student may read various texts in depth according to LR sections and subsections ( defining the main topic ), which is not a passive activity ( 1 ). Some questions should be asked to practice critical analysis skills, as listed below. Is the research question evident and articulated with previous knowledge? What are the authors’ research goals and theoretical orientations, and how do they interact? Are the authors’ claims related to other scholars’ research? Do the authors consider different perspectives? Was the research project designed and conducted properly? Are the results and discussion plausible, and are they consistent with the research objectives and methodology? What are the strengths and limitations of this work? How do the authors support their findings? How does this work contribute to the current research topic? ( 1 , 19 )

- Taking notes: Students who systematically take notes on each document are more readily able to establish similarities or differences with other documents and to highlight personal observations. This approach reinforces the student's ideas about the next step and helps develop his/her own academic voice ( 1 , 13 ). Voice recognition software ( 16 ), mind maps ( 5 ), flowcharts, tables, spreadsheets, personal comments on the referenced texts, and note-taking apps are all available tools for managing these observations, and each student should use the tool that best supports his/her learning. Additionally, when a student is considering submitting an LR to a peer-reviewed journal, notes should be taken on the activities performed in all five steps so that they can be replicated.
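For students who keep notes in a table or spreadsheet, a simple per-document record makes it easy to group sources by theme (or by method) and to spot similarities and differences. The sketch below shows one possible layout, not a prescribed one: the column names, the `group_by` and `to_csv` helpers, and every entry are hypothetical placeholders rather than real sources.

```python
from __future__ import annotations

import csv
import io
from collections import defaultdict

# Hypothetical notes: one row per document, as a student might keep in a spreadsheet.
notes = [
    {"source": "Source A (2018)", "theme": "supervision", "method": "survey", "comment": "example note"},
    {"source": "Source B (2020)", "theme": "writing process", "method": "interviews", "comment": "example note"},
    {"source": "Source C (2021)", "theme": "supervision", "method": "case study", "comment": "example note"},
]


def group_by(rows: list[dict[str, str]], key: str) -> dict[str, list[str]]:
    """Collect source names under each value of `key` (e.g. theme or method)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["source"])
    return dict(groups)


def to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize the notes as CSV text that a spreadsheet program can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(group_by(notes, "theme"))
# {'supervision': ['Source A (2018)', 'Source C (2021)'], 'writing process': ['Source B (2020)']}
print(to_csv(notes))
```

Grouping by `"method"` instead of `"theme"` gives a methodological view of the same notes.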

Fourth step: Writing

Recognizing when one is able and ready to write, after a sufficient period of reading and thinking, can be difficult. Some students can produce a review in a single long work session. However, as discussed above, writing is not a linear process, and students do not need to write LRs according to a specific sequence of sections. Writing an LR is a time-consuming task, and some scholars believe that a period of at least six months is needed ( 6 ). An LR, and academic writing in general, expresses the writer's own thoughts, conclusions about others' work ( 6 , 10 , 13 , 16 ), and decisions about how to progress in the chosen field of knowledge. Thus, each student is expected to follow a different learning and writing trajectory.

In this step, writing methods should be considered; then, editing, citing and correct referencing should complete this stage, at least temporarily. Freewriting techniques may be a good starting point for brainstorming ideas and improving the understanding of the information that has been read ( 1 ). Students should consider the following parameters when creating an agenda for writing the LR: two-hour writing blocks (at minimum), with prespecified tasks that are possible to complete in one section; short (minutes) and long breaks (days or weeks) to allow sufficient time for mental rest and reflection; and short- and long-term goals to motivate the writing itself ( 20 ). With increasing experience, this scheme can vary widely; it is not a fixed rule. Importantly, each discipline has a different way of writing ( 1 ), and each department has its own preferred styles for citations and references.

Fifth step: Reflecting on the writing

In this step, the postgraduate student should ask him/herself the same questions as in the analyzing the results step, which can take more time than anticipated. Ambiguities, repeated ideas, and a lack of coherence may not be noted when the student is immersed in the writing task for long periods. The whole effort will likely be a work in progress, and continuous refinements in the written material will occur once the writing process has begun.

LITERATURE REVIEW CHECKLIST

In contrast to review papers, the LR of a dissertation/thesis should not be a standalone piece of work. Instead, it should present the student as a scholar and should maintain the interest of the audience in how that dissertation/thesis will provide solutions for the current gaps in a particular field.

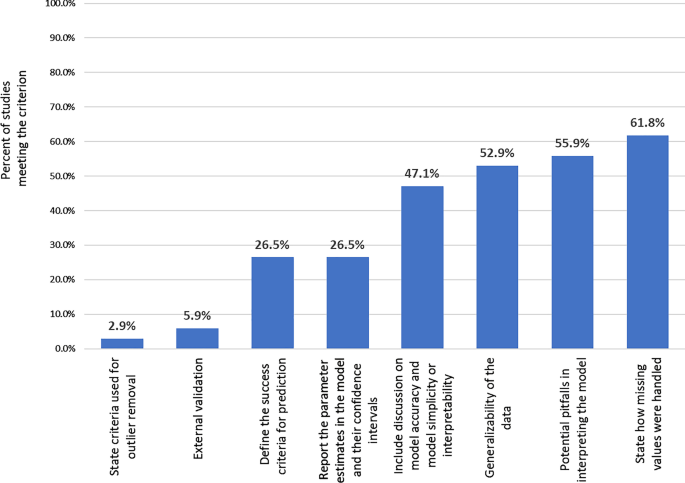

A checklist for evaluating an LR is convenient for students’ continuous academic development and research transparency: it clearly states the desired achievements for the LR of a dissertation/thesis. Here, we present an LR checklist developed from an LR scoring rubric ( 11 ). For a critical analysis of an LR, we maintain the five categories but offer twelve criteria that are not scaled ( Figure 3 ). The criteria all have the same importance and are not mutually exclusive.

First category: Coverage

1. Justified criteria exist for the inclusion and exclusion of literature in the review.

This criterion builds on the main topic and areas covered by the LR ( 18 ). While experts may be confident in retrieving and selecting literature, postgraduate students must convince their audience about the adequacy of their search strategy and their reasons for intentionally selecting what material to cover ( 11 ). References from different fields of knowledge provide distinct perspectives, but narrowing the scope of coverage may be important in areas with a large body of existing knowledge.

Second category: Synthesis

2. A critical examination of the state of the field exists.

A critical examination is an assessment of distinct aspects in the field ( 1 ) along with a constructive argument. It is not a negative critique but an expression of the student's understanding of how other scholars have added to the topic ( 1 ), and the student should analyze and contextualize contradictory statements. A writer's personal bias (beliefs or political involvement) has been shown to influence the structure and writing of a document; therefore, the writer's cultural and paradigmatic background guides how theories are revised and presented ( 13 ). However, an honest judgment is important when considering different perspectives.

3. The topic or problem is clearly placed in the context of the broader scholarly literature

The broader scholarly literature should be related to the chosen main topic of the LR (see "How to develop the literature review section"). The LR can cover the literature from one or more disciplines, depending on its scope, but it should always offer a new perspective. In addition, students should be careful when citing and referencing previous publications. As a rule, original studies and primary references should be included. Systematic and narrative reviews present summarized data, and it may be important to cite them, particularly for issues that should be understood but do not require a detailed description. Similarly, quotations reproduce the exact statement from another publication. However, excessive referencing may indicate a lower level of analysis and synthesis by the student.

4. The LR is critically placed in the historical context of the field

Situating the LR in its historical context shows how comfortably the student handles a particular topic. Instead of merely presenting statements and theories chronologically, which occasionally follows a linear timeline, the LR should authentically situate the student's academic work within the state-of-the-art techniques of their particular field of knowledge. Thus, the LR should reinforce why the dissertation/thesis represents original work in the chosen research field.

5. Ambiguities in definitions are considered and resolved

Distinct theories on the same topic may exist in different disciplines, and one discipline may use multiple concepts to explain one topic. These ambiguities should be acknowledged and addressed. The LR should not attempt to synthesize all theories or concepts at once. Although this approach might demonstrate in-depth reading on a particular topic, it can reveal a student's inability to comprehend and synthesize his/her research problem.

6. Important variables and phenomena relevant to the topic are articulated

The LR is a unique opportunity to articulate ideas and arguments and to propose new relationships between them ( 10 , 11 ). More importantly, a sound LR will outline to the audience how these important variables and phenomena will be addressed in the current academic work. Indeed, the LR should build a bidirectional link with the remaining sections and ground the connections between all of the sections ( Figure 1 ).

7. A synthesized new perspective on the literature has been established

The LR is a ‘creative inquiry’ ( 13 ) in which the student elaborates his/her own discourse, builds on previous knowledge in the field, and describes his/her own perspective while interpreting others’ work ( 13 , 17 ). Thus, students should articulate the current knowledge, not accept the results at face value ( 11 , 13 , 17 ), and improve their own cognitive abilities ( 12 ).

Third category: Methodology

8. The main methodologies and research techniques that have been used in the field are identified, and their advantages and disadvantages are discussed

The LR is expected to distinguish the research that has been completed from investigations that remain to be performed, address the benefits and limitations of the main methods applied to date, and consider strategies for addressing the expected limitations described above. By placing the student's research within the methodological context of a particular topic, the LR justifies the methodology of the study and substantiates the student's interpretations.

9. Ideas and theories in the field are related to research methodologies

The audience expects the writer to analyze and synthesize methodological approaches in the field. The findings should be explained according to the strengths and limitations of previous research methods, and students must avoid interpretations that are not supported by the analyzed literature. This criterion reflects the student's comprehension of the applicability of different research methodologies and the types of answers they provide, whether they follow a quantitative or a qualitative research approach.

Fourth category: Significance

10. The scholarly significance of the research problem is rationalized

The LR is an introductory section of a dissertation/thesis and will present the postgraduate student as a scholar in a particular field ( 11 ). Therefore, the LR should discuss how the research problem is currently addressed in the discipline being investigated or in different disciplines, depending on the scope of the LR. The LR explains the academic paradigms in the topic of interest ( 13 ) and methods to advance the field from these starting points. However, an excessive number of self-citations, whether referencing the student's own research or studies by his/her research team, may reflect a narrow literature search and a lack of comprehensive synthesis of ideas and arguments.

11. The practical significance of the research problem is rationalized

The practical significance indicates a student's comprehensive understanding of research terminology (e.g., risk versus associated factor), methodology (e.g., efficacy versus effectiveness) and plausible interpretations in the context of the field. Notably, the academic argument about a topic may not always reflect the debate in real-life terms. For example, in quantitative epidemiological research, statistically significant differences between groups do not explain all of the factors involved in a particular problem ( 21 ). Therefore, excessive faith in p-values may reflect a lower level of critical evaluation of the context and implications of a research problem by the student.

Fifth category: Rhetoric

12. The LR was written with a coherent, clear structure that supported the review

This category relates strictly to the language domain: the text should be coherent and presented in a logical sequence, regardless of which organizational approach ( 18 ) is chosen. The beginning of each section/subsection should state what themes will be addressed, paragraphs should be carefully linked to each other ( 10 ), and the first sentence of each paragraph should generally summarize its content. Additionally, the student's statements should be clear, sound, and linked to other scholars' works, and the language should be precise, concise, and consistent with standardized writing conventions (e.g., in terms of active/passive voice and verb tenses). Attention to grammar, orthography, and punctuation indicates prudence and supports a robust dissertation/thesis. Ultimately, all of these strategies give the text fluency and consistency.

Although the scoring rubric was initially proposed for postgraduate programs in education research, we are convinced that this checklist is a valuable tool for all academic areas. It makes it possible to monitor students' learning curves and to concentrate effort on any criteria that are not yet achieved. For institutions, the checklist is a guide to support supervisors' feedback, improve students' writing skills, and highlight the learning goals of each program. These criteria do not form a linear sequence, but ideally, all twelve achievements should be evident in the LR.

CONCLUSIONS

A single correct method to classify, evaluate and guide the elaboration of an LR has not been established. In this essay, we have suggested directions for planning, structuring and critically evaluating an LR. Planning the scope of an LR and the approach to completing it is a valuable effort, and the five steps represent a rational starting point. An institutional environment devoted to active learning will support students in continuously reflecting on LRs, which will form a dialogue between the writer and the current literature in a particular field ( 13 ).

The completion of an LR is a challenging and necessary process for understanding one’s own field of expertise. Knowledge is always transitory, but our responsibility as scholars is to provide a critical contribution to our field, allowing others to think through our work. Good researchers are grounded in sophisticated LRs, which reveal a writer’s training and long-lasting academic skills. We recommend using the LR checklist as a tool for strengthening the skills necessary for critical academic writing.

AUTHOR CONTRIBUTIONS

Leite DFB conceived the idea and wrote the first draft of this review. Padilha MAS and Cecatti JG supervised data interpretation and critically reviewed the manuscript. All authors have read the draft and agreed with this submission. The authors are responsible for all aspects of this academic piece.

ACKNOWLEDGMENTS

We are grateful to all of the professors of the 'Getting Started with Graduate Research and Generic Skills' module at University College Cork, Cork, Ireland, for suggesting and supporting this article. Funding: DFBL was granted a scholarship from the Brazilian Federal Agency for Support and Evaluation of Graduate Education (CAPES) to undertake part of her Ph.D. studies in Ireland (process number 88881.134512/2016-01). The sponsors had no role in the authors' decision to write or submit this manuscript.

No potential conflict of interest was reported.

1 The questions posed in systematic reviews usually follow the ‘PICOS’ acronym: Population, Intervention, Comparison, Outcomes, Study design.

2 In 1988, Cooper proposed a taxonomy that aims to facilitate students’ and institutions’ understanding of literature reviews. Six characteristics with specific categories are briefly described: Focus: research outcomes, research methodologies, theories, or practices and applications; Goals: integration (generalization, conflict resolution, and linguistic bridge-building), criticism, or identification of central issues; Perspective: neutral representation or espousal of a position; Coverage: exhaustive, exhaustive with selective citations, representative, central or pivotal; Organization: historical, conceptual, or methodological; and Audience: specialized scholars, general scholars, practitioners or policymakers, or the general public.


Literature review

A general guide on how to conduct and write a literature review.

Please check course or programme information and materials provided by teaching staff, including your project supervisor, for subject-specific guidance.

What is a literature review?

A literature review is a piece of academic writing demonstrating knowledge and understanding of the academic literature on a specific topic placed in context. A literature review also includes a critical evaluation of the material; this is why it is called a literature review rather than a literature report. It is a process of reviewing the literature, as well as a form of writing.

To illustrate the difference between reporting and reviewing, think about television or film review articles. These articles include content such as a brief synopsis or the key points of the film or programme, plus the critic's own evaluation. Similarly, a literature review has two main components: first, content covering existing research, theories and evidence; and second, your own critical evaluation and discussion of this content.

Usually a literature review forms a section or part of a dissertation, research project or long essay. However, it can also be set and assessed as a standalone piece of work.

What is the purpose of a literature review?

…your task is to build an argument, not a library. Rudestam, K.E. and Newton, R.R. (1992) Surviving your dissertation: A comprehensive guide to content and process. California: Sage, p. 49.

In a larger piece of written work, such as a dissertation or project, a literature review is usually one of the first tasks carried out after deciding on a topic. Reading combined with critical analysis can help to refine a topic and frame research questions. Conducting a literature review establishes your familiarity with and understanding of current research in a particular field before carrying out a new investigation. After doing a literature review, you should know what research has already been done and be able to identify what is unknown within your topic.

When doing and writing a literature review, it is good practice to:

- summarise and analyse previous research and theories;

- identify areas of controversy and contested claims;

- highlight any gaps that may exist in research to date.

Conducting a literature review