About Clinical Research

Research participants are partners in discovery at the NIH Clinical Center, the largest research hospital in America. Clinical research is medical research involving people. The Clinical Center provides hope through pioneering clinical research to improve human health. We rapidly translate scientific observations and laboratory discoveries into new ways to diagnose, treat and prevent disease. More than 500,000 people from around the world have participated in clinical research since the hospital opened in 1953. We do not charge patients for participation and treatment in clinical studies at NIH. In certain emergency circumstances, you may qualify for help with travel and other expenses. Read more to see if clinical studies are for you.

Medical Information Disclaimer

Emailed inquiries/requests

Email sent to the National Institutes of Health Clinical Center may be forwarded to appropriate NIH or outside experts for response. We do not collect your name and email address for any purpose other than to respond to your query. Nevertheless, email is not necessarily secure against interception. This statement applies to the NIH Clinical Center Studies website. For additional inquiries regarding studies at the National Institutes of Health, please call the Office of Patient Recruitment at 1-800-411-1222.

Find NIH Clinical Center Trials

The National Institutes of Health (NIH) Clinical Center Search the Studies site is a registry of publicly supported clinical studies conducted mostly in Bethesda, MD.

NIH Clinical Research Trials and You

Finding a Clinical Trial

Around the Nation and Worldwide

NIH conducts clinical research trials for many diseases and conditions, including cancer, Alzheimer’s disease, allergy and infectious diseases, and neurological disorders. To search for other diseases and conditions, you can visit ClinicalTrials.gov.

ClinicalTrials.gov is a searchable registry and results database of federally and privately supported clinical trials conducted in the United States and around the world. It gives you information about a trial's purpose, who may participate, locations, and phone numbers for more details. This information should be used in conjunction with advice from health care professionals.

Listing a study does not mean it has been evaluated by the U.S. Federal Government. Read the disclaimer on ClinicalTrials.gov for details.

Before participating in a study, talk to your health care provider and learn about the risks and potential benefits.

At the NIH Clinical Center in Bethesda, Maryland

Search NIH Clinical Research Studies: The NIH maintains an online database of clinical research studies taking place at its Clinical Center, which is located on the NIH campus in Bethesda, Maryland. Studies are conducted by most of the institutes and centers across the NIH. The Clinical Center hosts a wide range of studies, from rare diseases to chronic health conditions, as well as studies for healthy volunteers. Visitors can search by diagnosis, sign, symptom or other keywords.

Join a National Registry of Research Volunteers

ResearchMatch is an NIH-funded initiative to connect 1) people who are trying to find research studies, and 2) researchers seeking people to participate in their studies. It is a free, secure registry that makes it easier for the public to volunteer and to become involved in clinical research studies that contribute to improved health in the future.

This page last reviewed on November 6, 2018

Free Databases

EBSCO provides free research databases covering a variety of subjects for students, researchers and librarians.

Exploring Race in Society

This free research database offers essential content covering important issues related to race in society today. Essays, articles, reports and other reliable sources provide an in-depth look at the history of race and provide critical context for learning more about topics associated with race, ethnicity, diversity and inclusiveness.

EBSCO Open Dissertations

EBSCO Open Dissertations is a collaboration between EBSCO and BiblioLabs to increase traffic and discoverability of ETD research. You can join the movement and add your theses and dissertations to the database, making them freely available to researchers everywhere.

GreenFILE is a free research database covering all aspects of human impact to the environment. Its collection of scholarly, government and general-interest titles includes content on global warming, green building, pollution, sustainable agriculture, renewable energy, recycling, and more.

Library, Information Science and Technology Abstracts

Library, Information Science & Technology Abstracts (LISTA) is a free research database for library and information science studies. LISTA provides indexing and abstracting for hundreds of key journals, books, research reports. It is EBSCO's intention to provide access to this resource on a continual basis.

Teacher Reference Center

A complimentary research database for teachers, Teacher Reference Center (TRC) provides indexing and abstracts for more than 230 peer-reviewed journals.

European Views of the Americas: 1493 to 1750

European Views of the Americas: 1493 to 1750 is a free archive of indexed publications related to the Americas and written in Europe before 1750. It includes thousands of valuable primary source records covering the history of European exploration as well as portrayals of Native American peoples.

Expertly curated abstract & citation database

Your brilliance, connected.

Scopus uniquely combines a comprehensive, expertly curated abstract and citation database with enriched data and linked scholarly literature across a wide variety of disciplines.

Scopus quickly finds relevant and authoritative research, identifies experts and provides access to reliable data, metrics and analytical tools. Be confident in progressing research, teaching or research direction and priorities — all from one database and with one subscription.

Scopus indexes content from more than 25,000 active titles and 7,000 publishers—all rigorously vetted and selected by an independent review board.

2021 CiteScore metrics are available on our free layer of Scopus.com.

Academic Institutions

Scopus is designed to serve the information needs of researchers, educators, students, administrators and librarians across the entire academic community.

Government & Funding Agencies

Agencies and government bodies can rely on Scopus to inform their overall strategic direction, identify funding resources, measure researcher performance and more.

Research & Development

Scopus helps industry researchers track market innovations, identify key contributors and collaborators, and develop competitive benchmarking.

How Scopus works

Scopus indexes content that is rigorously vetted and selected by an independent review board of experts in their fields. The rich metadata architecture on which Scopus is built connects people, published ideas and institutions.

Using sophisticated tools and analytics, Scopus generates precise citation results, detailed researcher profiles, and insights that drive better decisions, actions and outcomes.

What our customers say

Many of today’s research questions have to do with public health, particularly with public policy and informatics. This creates an overlap between computer science, informatics and health sciences ... having a database as inclusive and interdisciplinary as Scopus is invaluable to us.

— Bruce Abbott, Health Sciences Librarian, University of California, Davis Health Care (USA)

Scopus really plays a vital role in helping our researchers, particularly early investigators and people who are getting ready to become early-career postgraduates, better understand the scholarly communications landscape.

— Emily Glenn, Associate Dean, University of Nebraska Medical Center Library (USA) Read the full customer story

When it comes to measuring success, you can’t compare other products to Scopus — no other output metrics offer the same kind of depth and coverage ... faculty, department chairs, college deans, they are always amazed when they discover what’s possible.

— Hector R. Perez-Gilbe, Research Librarian for the Health Sciences, University of California, Irvine (USA) Read the full customer story

Why choose Scopus

Scopus brings together superior data quality and coverage, sophisticated analytics and advanced technology in one solution that is ready to combat predatory publishing, optimize analytic powers and researcher workflows, and empower better decision making.

Other helpful resources

To learn more about using and administering Scopus, how to contact us and how to request corrections to Scopus profiles and content, please visit our support center.

To find Scopus fact sheets, case studies, user guides, title lists and more, please visit our resource center.

Explore millions of high-quality primary sources and images from around the world, including artworks, maps, photographs, and more.

Explore migration issues through a variety of media types

Harness the power of visual materials—explore more than 3 million images now on JSTOR.

Enhance your scholarly research with underground newspapers, magazines, and journals.

Explore collections in the arts, sciences, and literature from the world’s leading museums, archives, and scholars.

The top list of research databases for medicine and healthcare

Web of Science and Scopus are interdisciplinary research databases and have a broad scope. For biomedical research, medicine, and healthcare there are a couple of outstanding academic databases that provide true value in your daily research.

Scholarly databases can help you find scientific articles, research papers , conference proceedings, reviews and much more. We have compiled a list of the top 5 research databases with a special focus on healthcare and medicine.

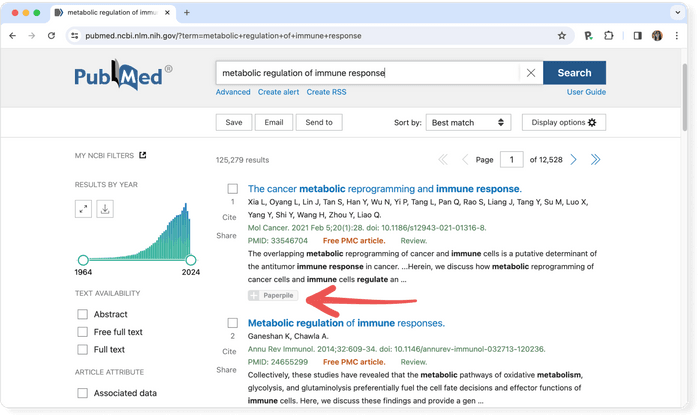

PubMed is the number one source for medical and healthcare research. It is hosted by the National Institutes of Health (NIH) and provides bibliographic information, including abstracts and links to the full text on publisher websites, for more than 35 million items.

- Coverage: around 35 million items

- Abstracts: ✔

- Related articles: ✔

- References: ✘

- Cited by: ✘

- Links to full text: ✔

- Export formats: XML, NBIB

Pro tip: Use a reference manager like Paperpile to keep track of all your sources. Paperpile integrates with PubMed and many popular databases. You can save references and PDFs directly to your library using the Paperpile buttons and later cite them in thousands of citation styles:

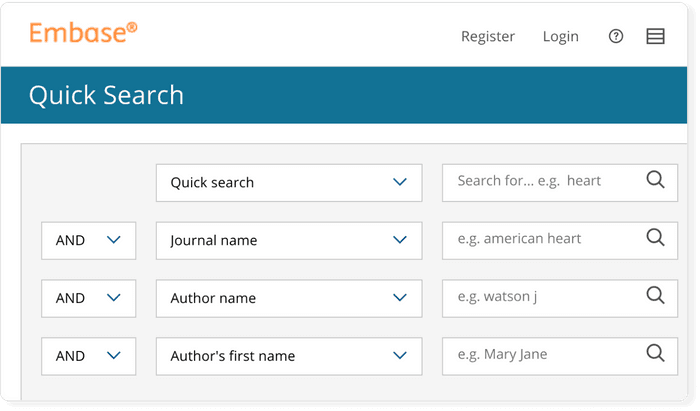

EMBASE (Excerpta Medica Database) is a proprietary research database whose corpus also includes PubMed. It can also be accessed through other database providers such as Ovid.

- Coverage: 38 million articles

- References: ✔

- Cited by: ✔

- Full text: ✔ (requires institutional subscription to EMBASE and individual publishers)

- Export formats: RIS

The Cochrane Library is best known for its systematic reviews. There are 53 review groups around the world that ensure that the published reviews are high-quality and evidence-based. Articles are updated over time to reflect new research.

- Coverage: several thousand high quality reviews

- Full text: ✔

- Export formats: RIS, BibTeX

PubMed Central is the free, open access branch of PubMed. It includes full-text versions for all indexed papers. You might also want to check out its sister site Europe PMC.

- Coverage: more than 8 million articles

- Export formats: APA, MLA, AMA, RIS, NBIB

Like the Cochrane Library, UpToDate provides detailed reviews for clinical topics. Reviews are constantly updated to provide an up-to-date view.

- Coverage: several thousand articles from over 420 peer-reviewed journals

- Related articles: ✘

- Full text: ✔ (requires institutional subscription)

- Export formats: ✘

APA PsycInfo ®

The premier abstracting and indexing database covering the behavioral and social sciences from the authority in psychology.

Support research goals

Institutional access to APA PsycInfo provides a single source of vetted, authoritative research for users across the behavioral and social sciences. Students and researchers enjoy seamless access to cutting-edge and historical content with citations in APA Style ® .

The newest features available within APA PsycInfo leverage artificial intelligence and machine learning to equip users with a personalized research assistant that helps monitor trends, explore content analytics, and gain one-click access to full text within a centralized, essential source of credible psychology research.

Celebrating 55 years

For over 55 years, APA PsycInfo has been the most trusted index of psychological science in the world. With more than 5,000,000 interdisciplinary bibliographic records, our database delivers targeted discovery of credible and comprehensive research across the full spectrum of behavioral and social sciences. This indispensable resource continues to enhance the discovery and usage of essential psychological research to support students, scientists, and educators. Explore the past, present, and future of psychology research .

APA PsycInfo at a glance

- Over 5,000,000 peer-reviewed records

- 144 million cited references

- Spanning 600 years of content

- Updated twice-weekly

- Research in 30 languages from 50 countries

- Records from 2,400 journals

- Content from journal articles, book chapters, and dissertations

- AI and machine learning-powered research assistance

Support your campus community beyond psychology with APA PsycInfo’s broad subject coverage:

- Artificial Intelligence

- Linguistics

- Neuroscience

- Pharmacology

- Political science

- Social work

Institutional trial

Evaluate this resource free for 30 days to determine if it meets your library’s research needs.

Access options

Select from individual subscriptions or institutional licenses on your platform of choice.

Find webinars, tutorials, and guides to help promote your library’s subscription.

Key benefits of APA PsycInfo

Learn what’s new

AI-powered research tools

Join a webinar on new features courtesy of your access to APA PsycInfo

APA PsycInfo webinars

Help users search smarter this semester from APA training experts

Browse APA Databases and electronic products by publication type or subscriber type.

View Product Guide

More about APA PsycInfo

- APA PsycInfo FAQs

- Sample records

- Coverage List

- Full-Text Options

- APA PsycInfo Publishers

APA PsycInfo research services

Simplify the research process for your users with this personalized research assistant, a free tool courtesy of your institution's subscription to APA PsycInfo. This service leverages AI and machine learning to ease access to full text, content analytics, and discovery of the latest behavioral sciences research.

Find full-text articles, book chapters, and more using APA PsycNet ® , the only search platform designed specially to deliver APA content.

APA Publishing Blog

The blog is your source for training materials and information about APA’s databases and electronic resources. Stay up-to-date on training sessions, new features and content, and resources to support your subscription.

An official website of the United States government

Here’s how you know

Official websites use .gov. A .gov website belongs to an official government organization in the United States.

Secure .gov websites use HTTPS. A lock icon or https:// means you’ve safely connected to the .gov website. Share sensitive information only on official, secure websites.

MEDLINE is the National Library of Medicine's (NLM) premier bibliographic database that contains references to journal articles in life sciences, with a concentration on biomedicine. See the MEDLINE Overview page for more information about MEDLINE.

MEDLINE content is searchable via PubMed and constitutes the primary component of PubMed, a literature database developed and maintained by the NLM National Center for Biotechnology Information (NCBI).
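As a rough illustration of what "searchable via PubMed" can look like programmatically, the sketch below builds a query URL for the NCBI E-utilities ESearch endpoint. The helper name `build_pubmed_search_url`, the example search term, and the choice of JSON output are assumptions made for this example; the block only constructs the URL and sends no request.

```python
from urllib.parse import urlencode

# Base URL of the NCBI E-utilities ESearch endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_search_url(term: str, max_results: int = 20) -> str:
    """Return an ESearch URL that queries PubMed for `term` (hypothetical helper)."""
    params = {
        "db": "pubmed",         # search the PubMed database
        "term": term,           # query string in PubMed search syntax
        "retmax": max_results,  # maximum number of record IDs to return
        "retmode": "json",      # ask for a JSON response
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


# The medline[sb] subset filter restricts results to MEDLINE-indexed citations.
url = build_pubmed_search_url("statins AND medline[sb]", max_results=5)
print(url)
```

Fetching the URL (for example with `urllib.request.urlopen`) would return a list of matching PubMed IDs; that step is left out here so the example runs without network access.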

Last Reviewed: February 5, 2024

Database Search

What is Database Search?

Harvard Library licenses hundreds of online databases, giving you access to academic and news articles, books, journals, primary sources, streaming media, and much more.

The contents of these databases are only partially included in HOLLIS. To make sure you're really seeing everything, you need to search in multiple places. Use Database Search to identify and connect to the best databases for your topic.

In addition to digital content, you will find specialized search engines used in specific scholarly domains.

Related Services & Tools

Research and clinical trial databases

This non-exhaustive collection of research databases allows you to locate multiple study datasets as well as information on clinical trials free of charge.

Research Databases

- ArrayExpress: ArrayExpress is a database of functional genomics experiments that can be queried and the data downloaded.

- Cancer Cell Line Encyclopedia (CCLE): The CCLE provides public access to genomic data, analysis and visualization for about 1,000 cell lines.

- European Genome-phenome Archive (EGA): EGA allows you to explore datasets from genomic studies, provided by a range of data providers.

- Gene Expression Omnibus (GEO): GEO is a public functional genomics data repository supporting MIAME-compliant data submissions.

- International Cancer Genomics Consortium (ICGC): The ICGC Data Portal provides tools for visualizing, querying and downloading the data released quarterly by the consortium's member projects.

- The Cancer Genome Atlas (TCGA): The TCGA Data Portal provides a platform for researchers to search, download, and analyze data sets generated by TCGA.

Clinical Trial Databases

- ClinicalTrials.gov: ClinicalTrials.gov is a U.S. registry and results database of publicly and privately supported clinical studies of human participants conducted around the world.

- EORTC Clinical Trials Database: The EORTC Clinical Trials Database contains information about EORTC clinical trials and clinical trials from other organisations with EORTC participation.

- EORTC Investigator's Area: This section gives access to the clinical trials applications designed for the investigators.

- EU Clinical Trials Register (EU CTR): The EU CTR contains information on interventional clinical trials on medicines conducted in the European Union (EU) or the European Economic Area (EEA) which started after 1 May 2004. The Register enables you to search for information in the EudraCT database.

- WHO International Clinical Trials Registry Platform: The mission of the WHO International Clinical Trials Registry Platform is to ensure that a complete view of research is accessible to all those involved in health care decision making.

National Center for Biotechnology Information

Welcome to NCBI

The National Center for Biotechnology Information advances science and health by providing access to biomedical and genomic information.

Deposit data or manuscripts into NCBI databases

Transfer NCBI data to your computer

Find help documents, attend a class or watch a tutorial

Use NCBI APIs and code libraries to build applications

Identify an NCBI tool for your data analysis task

Explore NCBI research and collaborative projects

Connect with NLM

National Library of Medicine 8600 Rockville Pike Bethesda, MD 20894

Web Policies FOIA HHS Vulnerability Disclosure

Help Accessibility Careers

Reevaluating statin trials

Latest news and events.

EMBL-EBI’s open data resources for biodiversity and climate research

More Technology and innovation

The EMBL-EBI File Replication Archive hits 100 petabytes 5 June 2024

AlphaMissense data integrated into Ensembl, UniProt, DECIPHER and AlphaFold DB 21 May 2024

AI annotations increase patent data in SureCheMBL 13 March 2024

ProteomicsML: empowering proteomics research with machine learning 26 January 2024

Data-Driven Hypothesis Generation in Clinical Research: What We Learned from a Human Subject Study?

Affiliations.

- 1 Department of Public Health Sciences, College of Behavioral, Social and Health Sciences, Clemson University, Clemson, SC.

- 2 Informatics Institute, School of Medicine, University of Alabama, Birmingham, Birmingham, AL.

- 3 Cognitive Studies in Medicine and Public Health, The New York Academy of Medicine, New York City, NY.

- 4 Department of Educational Studies, Patton College of Education, Ohio University, Athens, OH.

- 5 Department of Clinical Sciences and Community Health, Touro University California College of Osteopathic Medicine, Vallejo, CA.

- 6 Department of Electrical Engineering and Computer Science, Russ College of Engineering and Technology, Ohio University, Athens, OH.

- 7 Department of Health Science, California State University Channel Islands, Camarillo, CA.

- PMID: 39211055

- PMCID: PMC11361316

- DOI: 10.18103/mra.v12i2.5132

Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve the process goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first provide a literature review on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study to explore scientific thinking and discovery. Over the years, scientific thinking has shown excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals the lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the time participants need, on average, to generate a hypothesis and also requires fewer cognitive events to generate each hypothesis. As a counterpoint, this exploration also indicates that hypotheses generated with VIADS receive significantly lower ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study directly to explore the hypothesis generation process in clinical research. This study provides supporting evidence to conduct a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn can potentially improve clinical research productivity and the overall clinical research enterprise.

Keywords: Clinical research; data-driven hypothesis generation; medical informatics; scientific hypothesis generation; translational research; visualization.

Conceptual model showing the relationships among scientific thinking, hypothesis generation, and their contributing…

How to manage your research data

When undertaking a dissertation or final project you may collect material to help you explore your research questions and which will inform your conclusions. This is your research data. Learn how to collect, store and manage this data in a secure and appropriate way.

Research Data Management

Research data is any material used to inform or support your research conclusions. It can take many different forms including recordings, transcripts, questionnaires, photographs and code. While a taught course research project may be relatively limited in time and scope, it is important to collect, store and manage data in a secure and appropriate way.

Research Data Management for postgraduate taught course students

- Open access

- Published: 12 September 2024

An open-source framework for end-to-end analysis of electronic health record data

- Lukas Heumos 1 , 2 , 3 ,

- Philipp Ehmele 1 ,

- Tim Treis 1 , 3 ,

- Julius Upmeier zu Belzen ORCID: orcid.org/0000-0002-0966-4458 4 ,

- Eljas Roellin 1 , 5 ,

- Lilly May 1 , 5 ,

- Altana Namsaraeva 1 , 6 ,

- Nastassya Horlava 1 , 3 ,

- Vladimir A. Shitov ORCID: orcid.org/0000-0002-1960-8812 1 , 3 ,

- Xinyue Zhang ORCID: orcid.org/0000-0003-4806-4049 1 ,

- Luke Zappia ORCID: orcid.org/0000-0001-7744-8565 1 , 5 ,

- Rainer Knoll 7 ,

- Niklas J. Lang 2 ,

- Leon Hetzel 1 , 5 ,

- Isaac Virshup 1 ,

- Lisa Sikkema ORCID: orcid.org/0000-0001-9686-6295 1 , 3 ,

- Fabiola Curion 1 , 5 ,

- Roland Eils 4 , 8 ,

- Herbert B. Schiller 2 , 9 ,

- Anne Hilgendorff 2 , 10 &

- Fabian J. Theis ORCID: orcid.org/0000-0002-2419-1943 1 , 3 , 5

Nature Medicine (2024)

- Epidemiology

- Translational research

With progressive digitalization of healthcare systems worldwide, large-scale collection of electronic health records (EHRs) has become commonplace. However, an extensible framework for comprehensive exploratory analysis that accounts for data heterogeneity is missing. Here we introduce ehrapy, a modular open-source Python framework designed for exploratory analysis of heterogeneous epidemiology and EHR data. ehrapy incorporates a series of analytical steps, from data extraction and quality control to the generation of low-dimensional representations. Complemented by rich statistical modules, ehrapy facilitates associating patients with disease states, differential comparison between patient clusters, survival analysis, trajectory inference, causal inference and more. Leveraging ontologies, ehrapy further enables data sharing and training EHR deep learning models, paving the way for foundational models in biomedical research. We demonstrate ehrapy’s features in six distinct examples. We applied ehrapy to stratify patients affected by unspecified pneumonia into finer-grained phenotypes. Furthermore, we reveal biomarkers for significant differences in survival among these groups. Additionally, we quantify medication-class effects of pneumonia medications on length of stay. We further leveraged ehrapy to analyze cardiovascular risks across different data modalities. We reconstructed disease state trajectories in patients with severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) based on imaging data. Finally, we conducted a case study to demonstrate how ehrapy can detect and mitigate biases in EHR data. ehrapy, thus, provides a framework that we envision will standardize analysis pipelines on EHR data and serve as a cornerstone for the community.

Electronic health records (EHRs) are becoming increasingly common due to standardized data collection 1 and digitalization in healthcare institutions. EHRs collected at medical care sites serve as efficient storage and sharing units of health information 2 , enabling the informed treatment of individuals using the patient’s complete history 3 . Routinely collected EHR data are approaching genomic-scale size and complexity 4 , posing challenges in extracting information without quantitative analysis methods. The application of such approaches to EHR databases 1 , 5 , 6 , 7 , 8 , 9 has enabled the prediction and classification of diseases 10 , 11 , study of population health 12 , determination of optimal treatment policies 13 , 14 , simulation of clinical trials 15 and stratification of patients 16 .

However, current EHR datasets suffer from serious limitations, such as data collection issues, inconsistencies and lack of data diversity. EHR data collection and sharing problems often arise due to non-standardized formats, with disparate systems using exchange protocols, such as Health Level Seven International (HL7) and Fast Healthcare Interoperability Resources (FHIR) 17 . In addition, EHR data are stored in various on-disk formats, including, but not limited to, relational databases and CSV, XML and JSON formats. These variations pose challenges with respect to data retrieval, scalability, interoperability and data sharing.

Beyond format variability, inherent biases of the collected data can compromise the validity of findings. Selection bias stemming from non-representative sample composition can lead to skewed inferences about disease prevalence or treatment efficacy 18 , 19 . Filtering bias arises through inconsistent criteria for data inclusion, obscuring true variable relationships 20 . Surveillance bias exaggerates associations between exposure and outcomes due to differential monitoring frequencies 21 . EHR data are further prone to missing data 22 , 23 , which can be broadly classified into three categories: missing completely at random (MCAR), where missingness is unrelated to the data; missing at random (MAR), where missingness depends on observed data; and missing not at random (MNAR), where missingness depends on unobserved data 22 , 23 . Information and coding biases, related to inaccuracies in data recording or coding inconsistencies, respectively, can lead to misclassification and unreliable research conclusions 24 , 25 . Data may even contradict itself, such as when measurements were reported for deceased patients 26 , 27 . Technical variation and differing data collection standards lead to distribution differences and inconsistencies in representation and semantics across EHR datasets 28 , 29 . Attrition and confounding biases, resulting from differential patient dropout rates or unaccounted external variable effects, can significantly skew study outcomes 30 , 31 , 32 . The diversity of EHR data that comprise demographics, laboratory results, vital signs, diagnoses, medications, x-rays, written notes and even omics measurements amplifies all the aforementioned issues.
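The three missingness mechanisms can be made concrete with a small simulation. The following is a minimal sketch on synthetic data, assuming a made-up lab value that rises with age; the function names (`mask_mcar`, `mask_mar`, `mask_mnar`, `mean_impute`) and all probabilities are illustrative assumptions and are not part of ehrapy.

```python
import random
import statistics

random.seed(0)

# Synthetic cohort (assumption): age is always observed, the lab value rises with age.
n = 20000
ages = [random.uniform(20, 80) for _ in range(n)]
labs = [0.2 * a + random.gauss(0, 3) for a in ages]

def mask_mcar(values, p):
    """MCAR: every value is dropped with the same probability p."""
    return [None if random.random() < p else v for v in values]

def mask_mar(values, covariate, threshold, p_high, p_low):
    """MAR: the drop probability depends only on an observed covariate."""
    return [
        None if random.random() < (p_high if c > threshold else p_low) else v
        for v, c in zip(values, covariate)
    ]

def mask_mnar(values, threshold, p_high, p_low):
    """MNAR: the drop probability depends on the value that goes missing."""
    return [
        None if random.random() < (p_high if v > threshold else p_low) else v
        for v in values
    ]

def mean_impute(values):
    """Replace missing entries with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    fill = statistics.fmean(observed)
    return [fill if v is None else v for v in values]

def summarize(values):
    """Return (mean, population standard deviation)."""
    return statistics.fmean(values), statistics.pstdev(values)

full_mean, full_sd = summarize(labs)
mcar_mean, mcar_sd = summarize(mean_impute(mask_mcar(labs, 0.3)))
mar_mean, mar_sd = summarize(mean_impute(mask_mar(labs, ages, 50, 0.6, 0.05)))
mnar_mean, mnar_sd = summarize(mean_impute(mask_mnar(labs, 10, 0.6, 0.05)))

for label, mean, sd in [
    ("complete", full_mean, full_sd),
    ("MCAR + mean imputation", mcar_mean, mcar_sd),
    ("MAR + mean imputation", mar_mean, mar_sd),
    ("MNAR + mean imputation", mnar_mean, mnar_sd),
]:
    print(f"{label:>24}: mean={mean:.2f} sd={sd:.2f}")
```

In this toy setting, mean imputation under MCAR leaves the mean roughly unchanged but shrinks the spread, whereas under MAR and MNAR the mean itself shifts because high values are preferentially lost.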

Addressing these challenges requires rigorous study design, careful data pre-processing and continuous bias evaluation through exploratory data analysis. Several EHR data pre-processing and analysis workflows were previously developed 4 , 33 , 34 , 35 , 36 , 37 , but none of them enables the analysis of heterogeneous data, provides in-depth documentation, is available as a software package or allows for exploratory visual analysis. Current EHR analysis pipelines, therefore, differ considerably in their approaches and are often commercial, vendor-specific solutions 38 . This is in contrast to strategies using community standards for the analysis of omics data, such as Bioconductor 39 or scverse 40 . As a result, EHR data frequently remain underexplored and are commonly investigated only for a particular research question 41 . Even in such cases, EHR data are then frequently input into machine learning models with serious data quality issues that greatly impact prediction performance and generalizability 42 .

To address this lack of analysis tooling, we developed the EHR Analysis in Python framework, ehrapy, which enables exploratory analysis of diverse EHR datasets. The ehrapy package is purpose-built to organize, analyze, visualize and statistically compare complex EHR data. ehrapy can be applied to datasets of different data types, sizes, diseases and origins. To demonstrate this versatility, we applied ehrapy to datasets obtained from EHR and population-based studies. Using the Pediatric Intensive Care (PIC) EHR database 43 , we stratified patients diagnosed with ‘unspecified pneumonia’ into distinct clinically relevant groups, extracted clinical indicators of pneumonia through statistical analysis and quantified medication-class effects on length of stay (LOS) with causal inference. Using the UK Biobank 44 (UKB), a population-scale cohort comprising over 500,000 participants from the United Kingdom, we employed ehrapy to explore cardiovascular risk factors using clinical predictors, metabolomics, genomics and retinal imaging-derived features. Additionally, we performed image analysis to project disease progression through fate mapping in patients affected by coronavirus disease 2019 (COVID-19) using chest x-rays. Finally, we demonstrate how exploratory analysis with ehrapy unveils and mitigates biases in over 100,000 visits by patients with diabetes across 130 US hospitals. We provide online links to additional use cases that demonstrate ehrapy’s usage with further datasets, including MIMIC-II (ref. 45 ), and for various medical conditions, such as patients subject to indwelling arterial catheter usage. ehrapy is compatible with any EHR dataset that can be transformed into vectors and is accessible as a user-friendly open-source software package hosted at https://github.com/theislab/ehrapy and installable from PyPI. It comes with comprehensive documentation, tutorials and further examples, all available at https://ehrapy.readthedocs.io .

ehrapy: a framework for exploratory EHR data analysis

The foundation of ehrapy is a robust and scalable data storage backend that is combined with a series of pre-processing and analysis modules. In ehrapy, EHR data are organized as a data matrix where observations are individual patient visits (or patients, in the absence of follow-up visits), and variables represent all measured quantities ( Methods ). These data matrices are stored together with metadata of observations and variables. By leveraging the AnnData (annotated data) data structure that implements this design, ehrapy builds upon established standards and is compatible with analysis and visualization functions provided by the omics scverse 40 ecosystem. AnnData readers are also available in R, Julia and JavaScript 46 . We additionally provide a dataset module with more than 20 publicly available EHR datasets that can be loaded in AnnData format to kickstart analysis and development with ehrapy.
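To illustrate the layout described above, here is a minimal sketch of a visits-by-variables matrix stored alongside per-row and per-column metadata. The `VisitMatrix` class is a hypothetical stand-in written for this example; it mirrors the idea of an annotated data matrix but is not the AnnData API, and the values are invented.

```python
from dataclasses import dataclass, field

@dataclass
class VisitMatrix:
    """Toy annotated matrix: rows are patient visits, columns are variables."""
    X: list                                   # list of rows, one per visit
    obs: list                                 # one metadata dict per visit (row)
    var: list                                 # one metadata dict per variable (column)
    uns: dict = field(default_factory=dict)   # unstructured results of analyses

    def __post_init__(self):
        # Keep matrix and annotations aligned.
        if len(self.X) != len(self.obs):
            raise ValueError("X needs one row per entry in obs")
        if any(len(row) != len(self.var) for row in self.X):
            raise ValueError("each row of X needs one value per entry in var")

    def subset_obs(self, predicate):
        """Return a new matrix with only the visits whose metadata match."""
        keep = [i for i, meta in enumerate(self.obs) if predicate(meta)]
        return VisitMatrix(
            X=[self.X[i] for i in keep],
            obs=[self.obs[i] for i in keep],
            var=self.var,
            uns=dict(self.uns),
        )

    def column(self, name):
        """Return all values of the variable called `name`."""
        j = [v["name"] for v in self.var].index(name)
        return [row[j] for row in self.X]

matrix = VisitMatrix(
    X=[[54, 12.0], [7, 48.5], [130, 3.1]],
    obs=[
        {"visit_id": "v1", "unit": "PICU"},
        {"visit_id": "v2", "unit": "GICU"},
        {"visit_id": "v3", "unit": "PICU"},
    ],
    var=[
        {"name": "age_months", "unit": "months"},
        {"name": "crp", "unit": "mg/L"},
    ],
)
picu = matrix.subset_obs(lambda meta: meta["unit"] == "PICU")
print(picu.column("crp"))
```

Keeping the annotations next to the matrix means that filtering visits cannot silently desynchronize values from their metadata, which is the main design benefit of this kind of structure.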

For standardized analysis of EHR data, it is crucial that these data are encoded and stored in consistent, reusable formats. Thus, ehrapy requires that input data are organized in structured vectors. Readers for common formats, such as CSV, OMOP 47 or SQL databases, are available in ehrapy. Data loaded into AnnData objects can be mapped against several hierarchical ontologies 48 , 49 , 50 , 51 ( Methods ). Clinical keywords of free text notes can be automatically extracted ( Methods ).

Powered by scanpy, which scales to millions of observations 52 ( Methods and Supplementary Table 1 ), and the machine learning library scikit-learn 53 , ehrapy provides more than 100 composable analysis functions organized in modules from which custom analysis pipelines can be built. Each function directly interacts with the AnnData object and stores all intermediate results in it for simple access and reuse. To facilitate setting up these pipelines, ehrapy guides analysts through a general analysis pipeline (Fig. 1 ). At any step of an analysis pipeline, community software packages can be integrated without any vendor lock-in. Because ehrapy is built on open standards, it can be purposefully extended to solve new challenges, such as the development of foundational models ( Methods ).

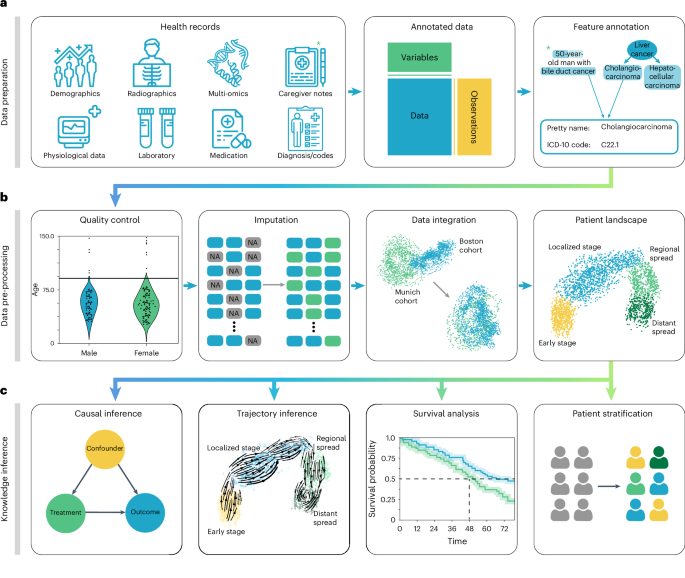

a , Heterogeneous health data are first loaded into memory as an AnnData object with patient visits as observational rows and variables as columns. Next, the data can be mapped against ontologies, and key terms are extracted from free text notes. b , The EHR data are subject to quality control where low-quality or spurious measurements are removed or imputed. Subsequently, numerical data are normalized, and categorical data are encoded. Data from different sources with data distribution shifts are integrated, embedded, clustered and annotated in a patient landscape. c , Further downstream analyses depend on the question of interest and can include the inference of causal effects and trajectories, survival analysis or patient stratification.

In the ehrapy analysis pipeline, EHR data are initially inspected for quality issues by analyzing feature distributions that may skew results and by detecting visits and features with high missing rates that ehrapy can then impute ( Methods ). ehrapy tracks all filtering steps while keeping track of population dynamics to highlight potential selection and filtering biases ( Methods ). Subsequently, ehrapy’s normalization and encoding functions ( Methods ) are applied to achieve a uniform numerical representation that facilitates data integration and corrects for dataset shift effects ( Methods ). Calculated lower-dimensional representations can subsequently be visualized, clustered and annotated to obtain a patient landscape ( Methods ). Such annotated groups of patients can be used for statistical comparisons to find differences in features among them to ultimately learn markers of patient states.
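The quality control, imputation, normalization and encoding steps described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: a plain dictionary of columns stands in for the data matrix, mean imputation and z-scoring stand in for whichever methods an analyst would choose, and the helper names and the 50% missingness threshold are hypothetical rather than ehrapy defaults.

```python
import statistics

def missing_rate(column):
    """Fraction of entries that are missing (None)."""
    return sum(v is None for v in column) / len(column)

def mean_impute(column):
    """Fill missing numeric entries with the mean of the observed ones."""
    observed = [v for v in column if v is not None]
    fill = statistics.fmean(observed)
    return [fill if v is None else v for v in column]

def zscore(column):
    """Scale a numeric column to zero mean and unit variance."""
    mu = statistics.fmean(column)
    sd = statistics.pstdev(column)
    return [(v - mu) / sd if sd > 0 else 0.0 for v in column]

def one_hot(name, column):
    """Encode a categorical column as one 0/1 column per category."""
    labels = ["missing" if v is None else v for v in column]
    return {
        f"{name}={category}": [1.0 if v == category else 0.0 for v in labels]
        for category in sorted(set(labels))
    }

def preprocess(table, numeric, categorical, max_missing=0.5):
    """Drop sparse features, then impute, normalize and encode the rest."""
    features, dropped = {}, []
    for name, column in table.items():
        if missing_rate(column) > max_missing:
            dropped.append(name)          # recorded so the filtering stays traceable
        elif name in numeric:
            features[name] = zscore(mean_impute(column))
        elif name in categorical:
            features.update(one_hot(name, column))
    return features, dropped

# Invented toy visits: CRP has one gap, PCT is mostly missing.
visits = {
    "crp": [5.0, None, 50.0, 20.0],
    "pct": [None, None, None, 1.2],
    "unit": ["PICU", "GICU", "PICU", "SICU"],
}
features, dropped = preprocess(visits, numeric={"crp", "pct"}, categorical={"unit"})
print(sorted(features), dropped)
```

Returning the list of dropped features alongside the result is a small nod to the point made above: filtering decisions should be tracked so that selection effects remain visible.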

As analysis goals can differ between users and datasets, the ehrapy analysis pipeline is customizable during the final knowledge inference step. ehrapy provides statistical methods for group comparison and extensive support for survival analysis ( Methods ), enabling the discovery of biomarkers. Furthermore, ehrapy offers functions for causal inference to go from statistically determined associations to causal relations ( Methods ). Moreover, patient visits in aggregated EHR data can be regarded as snapshots where individual measurements taken at specific timepoints might not adequately reflect the underlying progression of disease and may instead result from unrelated variation due to, for example, day-to-day differences 54 , 55 , 56 . Therefore, disease progression models should rely on analysis of the underlying clinical data, as disease progression in an individual patient may not be monotonic in time. ehrapy allows for the use of advanced trajectory inference methods to overcome sparse measurements 57 , 58 , 59 . We show that this approach can order snapshots to calculate a pseudotime that can adequately reflect the progression of the underlying clinical process. Given a sufficient number of snapshots, ehrapy increases the potential to understand disease progression, which is likely not robustly captured within a single EHR but, rather, across several.

ehrapy enables patient stratification in pneumonia cases

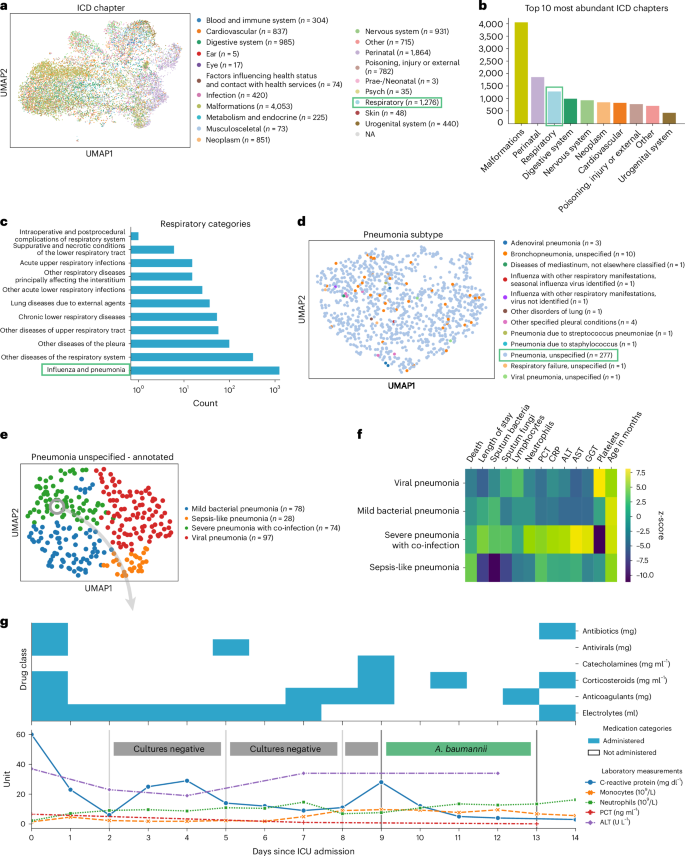

To demonstrate ehrapy’s capability to analyze heterogeneous datasets from a broad patient set across multiple care units, we applied our exploratory strategy to the PIC 43 database. The PIC database is a single-center database hosting information on children admitted to critical care units at the Children’s Hospital of Zhejiang University School of Medicine in China. It contains 13,499 distinct hospital admissions of 12,881 individual pediatric patients admitted between 2010 and 2018 for whom demographics, diagnoses, doctors’ notes, vital signs, laboratory and microbiology tests, medications, fluid balances and more were collected (Extended Data Figs. 1 and 2a and Methods ). After missing data imputation and subsequent pre-processing (Extended Data Figs. 2b,c and 3 and Methods ), we generated a uniform manifold approximation and projection (UMAP) embedding to visualize variation across all patients using ehrapy (Fig. 2a ). This visualization of the low-dimensional patient manifold shows the heterogeneity of the collected data in the PIC database, with malformations, perinatal and respiratory being the most abundant International Classification of Diseases (ICD) chapters (Fig. 2b ). The most common respiratory disease categories (Fig. 2c ) were labeled pneumonia and influenza ( n = 984). We focused on pneumonia to apply ehrapy to a challenging, broad-spectrum disease that affects all age groups. Pneumonia is a prevalent respiratory infection that poses a substantial burden on public health 60 and is characterized by inflammation of the alveoli and distal airways 60 . Individuals with pre-existing chronic conditions are particularly vulnerable, as are children under the age of 5 (ref. 61 ). Pneumonia can be caused by a range of microorganisms, encompassing bacteria, respiratory viruses and fungi.

a , UMAP of all patient visits in the ICU with primary discharge diagnosis grouped by ICD chapter. b , The prevalence of respiratory diseases prompted us to investigate them further. c , Respiratory categories show the abundance of influenza and pneumonia diagnoses that we investigated more closely. d , We observed the ‘unspecified pneumonia’ subgroup, which led us to investigate and annotate it in more detail. e , The previously ‘unspecified pneumonia’-labeled patients were annotated using several clinical features (Extended Data Fig. 5 ), of which the most important ones are shown in the heatmap ( f ). g , Example disease progression of an individual child with pneumonia illustrating pharmacotherapy over time until positive A. baumannii swab.

We selected the age group ‘youths’ (13 months to 18 years of age) for further analysis, addressing a total of 265 patients who dominated the pneumonia cases and were diagnosed with ‘unspecified pneumonia’ (Fig. 2d and Extended Data Fig. 4 ). Neonates (0–28 d old) and infants (29 d to 12 months old) were excluded from the analysis as the disease context is significantly different in these age groups due to distinct anatomical and physical conditions. Patients were 61% male, had a total of 277 admissions, had a mean age at admission of 54 months (median, 38 months) and had an average LOS of 15 d (median, 7 d). Of these, 152 patients were admitted to the pediatric intensive care unit (PICU), 118 to the general ICU (GICU), four to the surgical ICU (SICU) and three to the cardiac ICU (CICU). Laboratory measurements typically had 12–14% missing data, except for serum procalcitonin (PCT), a marker for bacterial infections, with 24.5% missing, and C-reactive protein (CRP), a marker of inflammation, with 16.8% missing. Measurements assigned as ‘vital signs’ contained between 44% and 54% missing values. Stratifying patients with unspecified pneumonia further enables a more nuanced understanding of the disease, potentially facilitating tailored approaches to treatment.

To deepen clinical phenotyping for the disease group ‘unspecified pneumonia’, we calculated a k -nearest neighbor graph to cluster patients into groups and visualize these in UMAP space ( Methods ). Leiden clustering 62 identified four patient groupings with distinct clinical features that we annotated (Fig. 2e ). To identify the laboratory values, medications and pathogens that were most characteristic for these four groups (Fig. 2f ), we applied t -tests for numerical data and g -tests for categorical data between the identified groups using ehrapy (Extended Data Fig. 5 and Methods ). Based on this analysis, we identified patient groups with ‘sepsis-like’, ‘severe pneumonia with co-infection’, ‘viral pneumonia’ and ‘mild pneumonia’ phenotypes. The ‘sepsis-like’ group of patients ( n = 28) was characterized by rapid disease progression as exemplified by an increased number of deaths (adjusted P ≤ 5.04 × 10 −3 , 43% ( n = 28), 95% confidence interval (CI): 23%, 62%); indication of multiple organ failure, such as elevated creatinine (adjusted P ≤ 0.01, 52.74 ± 23.71 μmol L −1 ) or reduced albumin levels (adjusted P ≤ 2.89 × 10 −4 , 33.40 ± 6.78 g L −1 ); and increased expression levels and peaks of inflammation markers, including PCT (adjusted P ≤ 3.01 × 10 −2 , 1.42 ± 2.03 ng ml −1 ), whole blood cell count, neutrophils, lymphocytes, monocytes and lower platelet counts (adjusted P ≤ 6.3 × 10 −2 , 159.30 ± 142.00 × 10 9 per liter) and changes in electrolyte levels, that is, lower potassium levels (adjusted P ≤ 0.09 × 10 −2 , 3.14 ± 0.54 mmol L −1 ). 
Patients whom we associated with the term ‘severe pneumonia with co-infection’ ( n = 74) were characterized by prolonged ICU stays (adjusted P ≤ 3.59 × 10 −4 , 15.01 ± 29.24 d); organ affection, such as higher levels of creatinine (adjusted P ≤ 1.10 × 10 −4 , 52.74 ± 23.71 μmol L −1 ) and lower platelet count (adjusted P ≤ 5.40 × 10 −23 , 159.30 ± 142.00 × 10 9 per liter); increased inflammation markers, such as peaks of PCT (adjusted P ≤ 5.06 × 10 −5 , 1.42 ± 2.03 ng ml −1 ), CRP (adjusted P ≤ 1.40 × 10 −6 , 50.60 ± 37.58 mg L −1 ) and neutrophils (adjusted P ≤ 8.51 × 10 −6 , 13.01 ± 6.98 × 10 9 per liter); detection of bacteria in combination with additional fungal pathogens in sputum samples (adjusted P ≤ 1.67 × 10 −2 , 26% ( n = 74), 95% CI: 16%, 36%); and increased application of medication, including antifungals (adjusted P ≤ 1.30 × 10 −4 , 15% ( n = 74), 95% CI: 7%, 23%) and catecholamines (adjusted P ≤ 2.0 × 10 −2 , 45% ( n = 74), 95% CI: 33%, 56%). Patients in the ‘mild pneumonia’ group were characterized by positive sputum cultures in the presence of relatively lower inflammation markers, such as PCT (adjusted P ≤ 1.63 × 10 −3 , 1.42 ± 2.03 ng ml −1 ) and CRP (adjusted P ≤ 0.03 × 10 −1 , 50.60 ± 37.58 mg L −1 ), while receiving antibiotics more frequently (adjusted P ≤ 1.00 × 10 −5 , 80% ( n = 78), 95% CI: 70%, 89%) and additional medications (electrolytes, blood thinners and circulation-supporting medications) (adjusted P ≤ 1.00 × 10 −5 , 82% ( n = 78), 95% CI: 73%, 91%). 
Finally, patients in the ‘viral pneumonia’ group were characterized by shorter LOSs (adjusted P ≤ 8.00 × 10 −6 , 15.01 ± 29.24 d), a lack of non-viral pathogen detection in combination with higher lymphocyte counts (adjusted P ≤ 0.01, 4.11 ± 2.49 × 10 9 per liter), lower levels of PCT (adjusted P ≤ 0.03 × 10 −2 , 1.42 ± 2.03 ng ml −1 ) and reduced application of catecholamines (adjusted P ≤ 5.96 × 10 −7 , 15% (n = 97), 95% CI: 8%, 23%), antibiotics (adjusted P ≤ 8.53 × 10 −6 , 41% ( n = 97), 95% CI: 31%, 51%) and antifungals (adjusted P ≤ 5.96 × 10 −7 , 0% ( n = 97), 95% CI: 0%, 0%).
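The group comparisons above rest on two kinds of test statistics: a t-statistic for numerical features and a G-statistic for categorical ones. The sketch below shows both on invented counts and values. It assumes Welch's unequal-variance form of the t-test (the text does not say which variant was used), restricts the G-test to a 2×2 table so that the P value can be obtained from the chi-squared distribution with one degree of freedom via `math.erfc`, and omits multiple-testing adjustment.

```python
import math
import statistics

def welch_t(a, b):
    """Welch's t-statistic for two independent samples (no P value computed)."""
    standard_error = math.sqrt(
        statistics.variance(a) / len(a) + statistics.variance(b) / len(b)
    )
    if standard_error == 0.0:
        return 0.0
    return (statistics.fmean(a) - statistics.fmean(b)) / standard_error

def g_test_2x2(table):
    """G-test of independence for a 2x2 table [[a, b], [c, d]].

    Returns (G, P), where P uses the chi-squared distribution with 1 degree
    of freedom, whose survival function is erfc(sqrt(G / 2)).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    g = 0.0
    for i in range(2):
        for j in range(2):
            observed = table[i][j]
            expected = row_totals[i] * col_totals[j] / total
            if observed > 0:              # 0 * log(0) is taken as 0
                g += 2.0 * observed * math.log(observed / expected)
    g = max(g, 0.0)                       # guard against tiny negative rounding
    return g, math.erfc(math.sqrt(g / 2.0))

# Invented example: medication given (yes/no) in two patient clusters.
g_stat, p_value = g_test_2x2([[20, 5], [5, 20]])
# Invented example: a lab value in two clusters.
t_stat = welch_t([4.0, 5.0, 6.0], [1.0, 2.0, 3.0])
print(f"G={g_stat:.2f}, P={p_value:.2e}, t={t_stat:.2f}")
```

A P value for the t-statistic would additionally need the t-distribution's cumulative distribution function, which the standard library does not provide; a statistics package would normally supply it.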

To demonstrate the ability of ehrapy to examine EHR data from different levels of resolution, we additionally reconstructed a case from the ‘severe pneumonia with co-infection’ group (Fig. 2g ). In this case, the analysis revealed that CRP levels remained elevated despite broad-spectrum antibiotic treatment until a positive Acinetobacter baumannii result led to a change in medication and a subsequent decrease in CRP and monocyte levels.

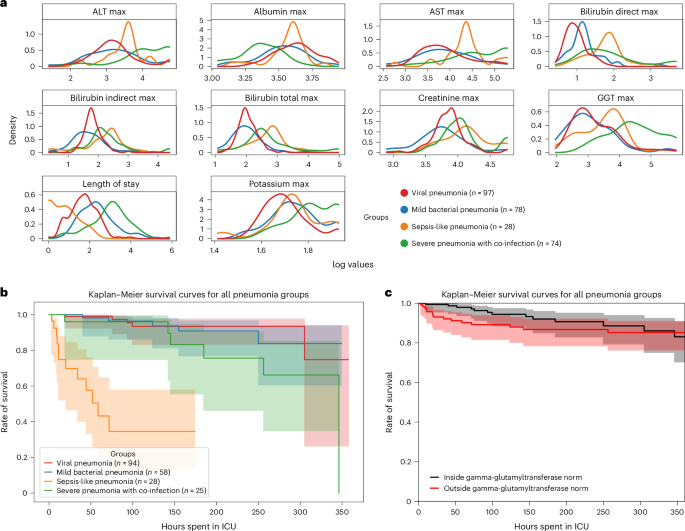

ehrapy facilitates extraction of pneumonia indicators

ehrapy’s survival analysis module allowed us to identify clinical indicators of disease stages that could be used as biomarkers through Kaplan–Meier analysis. We found strong variance in overall aspartate aminotransferase (AST), alanine aminotransferase (ALT), gamma-glutamyl transferase (GGT) and bilirubin levels (Fig. 3a ), including changes over time (Extended Data Fig. 6a,b ), in all four ‘unspecified pneumonia’ groups. AST, ALT and GGT are routinely used to assess liver function, and studies provide evidence that their levels are elevated during respiratory infections 63 , including severe pneumonia 64 , and can guide diagnosis and management of pneumonia in children 63 . We confirmed reduced survival in more severely affected children (‘sepsis-like pneumonia’ and ‘severe pneumonia with co-infection’) using Kaplan–Meier curves and a multivariate log-rank test (Fig. 3b ; P ≤ 1.09 × 10 −18 ) through ehrapy. To verify the association of this trajectory with altered AST, ALT and GGT levels, we further grouped all patients based on liver enzyme reference ranges ( Methods and Supplementary Table 2 ). By Kaplan–Meier survival analysis, cases with peaks of GGT ( P ≤ 1.4 × 10 −2 , 58.01 ± 2.03 U L −1 ), ALT ( P ≤ 2.9 × 10 −2 , 43.59 ± 38.02 U L −1 ) and AST ( P ≤ 4.8 × 10 −4 , 78.69 ± 60.03 U L −1 ) ‘outside the norm’ were found to correlate with lower survival in all groups (Fig. 3c and Extended Data Fig. 6 ), in line with previous studies 63 , 65 . Bilirubin was not found to significantly affect survival ( P ≤ 2.1 × 10 −1 , 12.57 ± 21.22 mg dl −1 ).
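For readers unfamiliar with the two survival tools named above, the following sketch implements the Kaplan–Meier product-limit estimator and a two-group log-rank test from first principles. It is an illustration on invented follow-up times, not ehrapy's survival module; the analysis above used a multivariate log-rank test across four groups, whereas this sketch covers only the two-group case, with the P value taken from the chi-squared distribution with one degree of freedom.

```python
import math

def kaplan_meier(durations, events):
    """Return [(t, S(t))] at each distinct event time.

    durations: follow-up time per subject; events: 1 if the event was
    observed at that time, 0 if the subject was censored.
    """
    event_times = sorted({t for t, e in zip(durations, events) if e})
    survival, curve = 1.0, []
    for t in event_times:
        at_risk = sum(1 for d in durations if d >= t)
        deaths = sum(1 for d, e in zip(durations, events) if d == t and e)
        survival *= 1.0 - deaths / at_risk
        curve.append((t, survival))
    return curve

def logrank_test(durations_a, events_a, durations_b, events_b):
    """Two-group log-rank test; returns (chi-squared statistic, P value)."""
    event_times = sorted(
        {t for t, e in zip(durations_a, events_a) if e}
        | {t for t, e in zip(durations_b, events_b) if e}
    )
    observed_minus_expected, variance = 0.0, 0.0
    for t in event_times:
        n_a = sum(1 for d in durations_a if d >= t)
        n_b = sum(1 for d in durations_b if d >= t)
        d_a = sum(1 for d, e in zip(durations_a, events_a) if d == t and e)
        d_b = sum(1 for d, e in zip(durations_b, events_b) if d == t and e)
        n, d = n_a + n_b, d_a + d_b
        observed_minus_expected += d_a - d * n_a / n
        if n > 1:
            variance += d * (n_a / n) * (n_b / n) * (n - d) / (n - 1)
    if variance == 0.0:
        return 0.0, 1.0
    chi2 = observed_minus_expected ** 2 / variance
    return chi2, math.erfc(math.sqrt(chi2 / 2.0))

# Invented cohort: five patients, two of them censored (event flag 0).
curve = kaplan_meier([2, 3, 3, 5, 8], [1, 1, 0, 1, 0])
# Invented groups: early events in group A, late events in group B.
chi2, p = logrank_test([1, 2, 3, 4, 5], [1] * 5, [6, 7, 8, 9, 10], [1] * 5)
print(curve, round(chi2, 2), round(p, 4))
```

Censored subjects still count as "at risk" up to their last follow-up, which is why the estimator can use incomplete records instead of discarding them.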

a , Line plots of major hepatic system laboratory measurements per group show variance in the measurements per pneumonia group. b , Kaplan–Meier survival curves demonstrate lower survival for ‘sepsis-like’ and ‘severe pneumonia with co-infection’ groups. c , Kaplan–Meier survival curves for children with GGT measurements outside the norm range display lower survival.

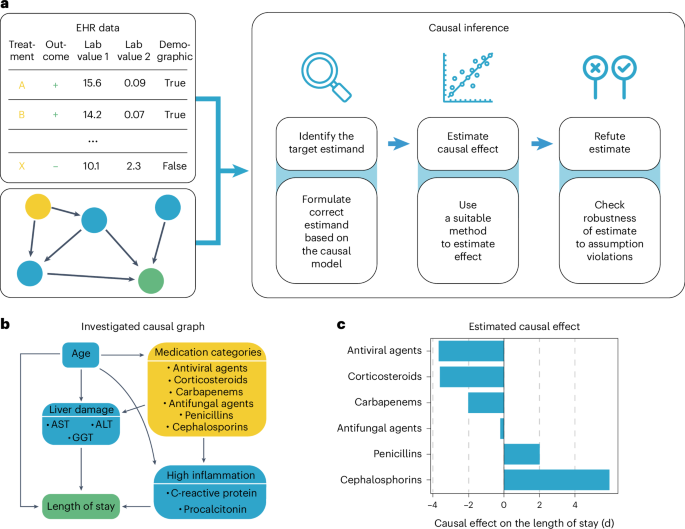

ehrapy quantifies medication class effect on LOS

Pneumonia requires case-specific medications due to its diverse causes. To demonstrate the potential of ehrapy’s causal inference module, we quantified the effect of medication on ICU LOS to evaluate case-specific administration of medication. In contrast to causal discovery that attempts to find a causal graph reflecting the causal relationships, causal inference is a statistical process used to investigate possible effects when altering a provided system, as represented by a causal graph and observational data (Fig. 4a ) 66 . This approach allows identifying and quantifying the impact of specific interventions or treatments on outcome measures, thereby providing insight for evidence-based decision-making in healthcare. Causal inference relies on datasets incorporating interventions to accurately quantify effects.

a , ehrapy’s causal module is based on the strategy of the tool ‘dowhy’. Here, EHR data containing treatment, outcome and measurements and a causal graph serve as input for causal effect quantification. The process includes the identification of the target estimand based on the causal graph, the estimation of causal effects using various models and, finally, refutation where sensitivity analyses and refutation tests are performed to assess the robustness of the results and assumptions. b , Curated causal graph using age, liver damage and inflammation markers as disease progression proxies together with medications as interventions to assess the causal effect on length of ICU stay. c , Determined causal effect strength on LOS in days of administered medication categories.

We manually constructed a minimal causal graph with ehrapy (Fig. 4b ) on records of treatment with corticosteroids, carbapenems, penicillins, cephalosporins and antifungal and antiviral medications as interventions (Extended Data Fig. 7 and Methods ). We assumed that the medications affect disease progression proxies, such as inflammation markers and markers of organ function. The selection of ‘interventions’ is consistent with current treatment standards for bacterial pneumonia and respiratory distress 67 , 68 . Based on the approach of the tool ‘dowhy’ 69 (Fig. 4a ), ehrapy’s causal module identified the application of corticosteroids, antivirals and carbapenems to be associated with shorter LOSs, in line with current evidence 61 , 70 , 71 , 72 . In contrast, penicillins and cephalosporins were associated with longer LOSs, whereas antifungal medication did not strongly influence LOS (Fig. 4c ).
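Why a causal graph matters for such estimates can be seen in a toy example. The sketch below assumes a single binary confounder (disease severity) that influences both the chance of receiving a medication and the LOS, and compares a naive difference in means with a backdoor-adjusted estimate obtained by stratifying on the confounder. The records are invented, the function names are hypothetical, and stratification is only one simple estimator; it is not a reproduction of the dowhy-based workflow or of the graph in Fig. 4b.

```python
from collections import defaultdict
from statistics import fmean

def naive_effect(records):
    """Difference in mean LOS between treated and untreated visits."""
    treated = [r["los"] for r in records if r["treated"]]
    control = [r["los"] for r in records if not r["treated"]]
    return fmean(treated) - fmean(control)

def adjusted_effect(records, confounder):
    """Backdoor adjustment: average the within-stratum effects,
    weighting each stratum by its share of the cohort.

    Requires treated and untreated visits in every stratum (positivity).
    """
    strata = defaultdict(list)
    for r in records:
        strata[r[confounder]].append(r)
    total = len(records)
    return sum(len(rows) / total * naive_effect(rows) for rows in strata.values())

def make_records(severity, treated, los, count):
    """Build `count` identical toy visit records."""
    return [{"severity": severity, "treated": treated, "los": los}] * count

# Invented data: treatment shortens LOS by 2 d in both strata, but severe
# cases (longer LOS) are treated far more often than mild ones.
records = (
    make_records(severity=0, treated=False, los=10, count=8)
    + make_records(severity=0, treated=True, los=8, count=2)
    + make_records(severity=1, treated=False, los=15, count=2)
    + make_records(severity=1, treated=True, los=13, count=8)
)

print("naive effect on LOS (d):   ", naive_effect(records))
print("adjusted effect on LOS (d):", adjusted_effect(records, "severity"))
```

In this constructed case the naive comparison suggests that treatment lengthens the stay, while the adjusted estimate recovers the shortening that was built into the data; a real analysis would add refutation tests to probe how sensitive the estimate is to the assumed graph.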

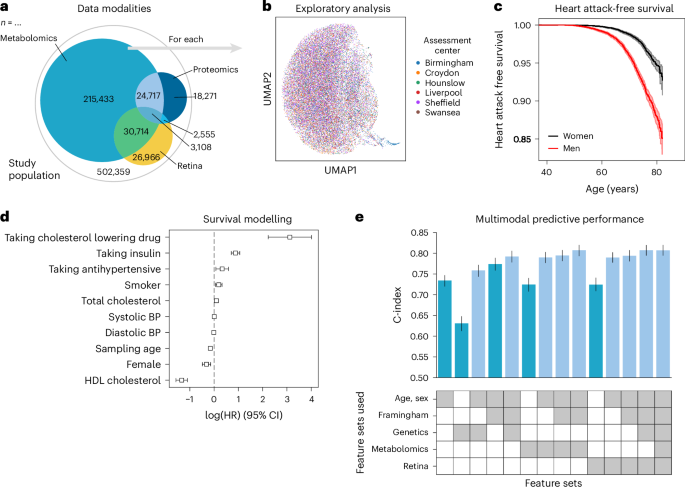

ehrapy enables deriving population-scale risk factors

To illustrate the advantages of using a unified data management and quality control framework, such as ehrapy, we modeled myocardial infarction risk using Cox proportional hazards models on UKB 44 data. Large population cohort studies, such as the UKB, enable the investigation of common diseases across a wide range of modalities, including genomics, metabolomics, proteomics, imaging data and common clinical variables (Fig. 5a,b ). From these, we used a publicly available polygenic risk score for coronary heart disease 73 comprising 6.6 million variants, 80 nuclear magnetic resonance (NMR) spectroscopy-based metabolomics 74 features, 81 features derived from retinal optical coherence tomography 75 , 76 and the Framingham Risk Score 77 feature set, which includes known clinical predictors, such as age, sex, body mass index, blood pressure, smoking behavior and cholesterol levels. We excluded features with more than 10% missingness and imputed the remaining missing values ( Methods ). Furthermore, individuals with events up to 1 year after the sampling time were excluded from the analyses, ultimately selecting 29,216 individuals for whom all mentioned data types were available (Extended Data Figs. 8 and 9 and Methods ). Myocardial infarction, as defined by our mapping to the phecode nomenclature 51 , was defined as the endpoint (Fig. 5c ). We modeled the risk for myocardial infarction 1 year after either the metabolomic sample was obtained or imaging was performed.

a , The UKB includes 502,359 participants from 22 assessment centers. Most participants have genetic data (97%) and physical measurement data (93%), but fewer have data for complex measures, such as metabolomics, retinal imaging or proteomics. b , We found a distinct cluster of individuals (bottom right) from the Birmingham assessment center in the retinal imaging data, which is an artifact of the image acquisition process and was, thus, excluded. c , Myocardial infarctions are recorded for 15% of the male and 7% of the female study population. Kaplan–Meier estimators with 95% CIs are shown. d , For every modality combination, a linear Cox proportional hazards model was fit to determine the prognostic potential of these for myocardial infarction. Cardiovascular risk factors show expected positive log hazard ratios (log (HRs)) for increased blood pressure or total cholesterol and negative ones for sampling age and systolic blood pressure (BP). log (HRs) with 95% CIs are shown. e , Combining all features yields a C-index of 0.81. c – e , Error bars indicate 95% CIs ( n = 29,216).

Predictive performance for each modality was assessed by fitting Cox proportional hazards (Fig. 5c ) models on each of the feature sets using ehrapy (Fig. 5d ). The age of the first occurrence served as the time to event; alternatively, date of death or date of the last record in the EHR served as censoring times. Models were evaluated using the concordance index (C-index) ( Methods ). The combination of multiple modalities successfully improved the predictive performance for coronary heart disease by increasing the C-index from 0.63 (genetic) to 0.76 (genetics, age and sex) and to 0.77 (clinical predictors) with 0.81 (imaging and clinical predictors) for combinations of feature sets (Fig. 5e ). Our finding is in line with previous observations of complementary effects between different modalities, where a broader ‘major adverse cardiac event’ phenotype was modeled in the UKB achieving a C-index of 0.72 (ref. 78 ). Adding genetic data improves predictive potential, as it is independent of sampling age and has limited prediction of other modalities 79 . The addition of metabolomic data did not improve predictive power (Fig. 5e ).
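The C-index used above measures how well predicted risks rank patients by time to event. The sketch below computes Harrell's concordance index directly from its definition; it assumes higher scores mean higher risk, counts tied scores as half-concordant, and uses invented inputs. Production analyses would normally rely on a survival analysis library rather than this quadratic-time loop.

```python
def concordance_index(times, events, risk_scores):
    """Harrell's C-index.

    A pair (i, j) is comparable when subject i had an observed event
    strictly before subject j's follow-up time. It is concordant when the
    model assigned the higher risk score to subject i.
    """
    concordant, comparable = 0.0, 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue                      # censored subjects cannot anchor a pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5     # ties in the score count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable

# Invented example: four patients, all with observed events.
times = [1, 2, 3, 4]
events = [1, 1, 1, 1]
print(concordance_index(times, events, [4, 3, 2, 1]))  # risk falls as time rises
print(concordance_index(times, events, [1, 2, 3, 4]))  # ranking fully reversed
print(concordance_index(times, events, [1, 1, 1, 1]))  # uninformative scores
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which puts the reported range of 0.63 to 0.81 in context.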

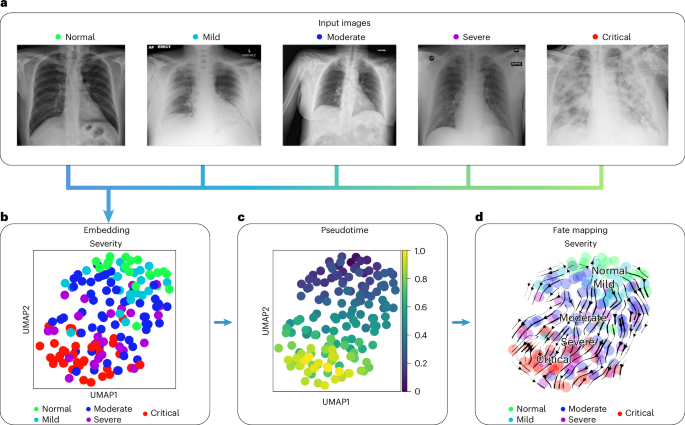

Imaging-based disease severity projection via fate mapping

To demonstrate ehrapy’s ability to handle diverse image data and recover disease stages, we embedded pulmonary imaging data obtained from patients with COVID-19 into a lower-dimensional space and computationally inferred disease progression trajectories using pseudotemporal ordering. This describes a continuous trajectory or ordering of individual points based on feature similarity 80 . Continuous trajectories enable mapping the fate of new patients onto precise states to potentially predict their future condition.
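The idea of ordering points by feature similarity can be sketched with a deliberately simple stand-in: build a k-nearest-neighbor graph over embedded points and use the shortest-path distance from a chosen root as pseudotime. This geodesic ordering is an assumption made for illustration, and it is much simpler than the trajectory inference and fate mapping methods applied in the study; the points, the root and `k` are invented.

```python
import heapq
import math

def knn_graph(points, k):
    """Symmetric k-nearest-neighbor graph with Euclidean edge weights."""
    graph = {i: {} for i in range(len(points))}
    for i, p in enumerate(points):
        neighbors = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        for distance, j in neighbors[:k]:
            graph[i][j] = distance
            graph[j][i] = distance
    return graph

def pseudotime(points, root, k=2):
    """Shortest-path distance from `root` along the graph, scaled to [0, 1].

    Points that cannot be reached from the root receive infinity.
    """
    graph = knn_graph(points, k)
    distance = {root: 0.0}
    heap = [(0.0, root)]
    while heap:                           # Dijkstra's algorithm
        d, u = heapq.heappop(heap)
        if d > distance.get(u, math.inf):
            continue
        for v, weight in graph[u].items():
            candidate = d + weight
            if candidate < distance.get(v, math.inf):
                distance[v] = candidate
                heapq.heappush(heap, (candidate, v))
    longest = max(distance.values())
    if longest == 0.0:
        return [0.0 if i == root else math.inf for i in range(len(points))]
    return [distance.get(i, math.inf) / longest for i in range(len(points))]

# Invented 2D embedding of five snapshots along a bent path, stored out of order.
embedding = [(2, 0), (0, 0), (4, 2), (1, 0), (3, 1)]
times = pseudotime(embedding, root=1, k=2)
ordering = sorted(range(len(embedding)), key=times.__getitem__)
print([embedding[i] for i in ordering])
```

Following the graph rather than measuring straight-line distance lets the ordering track a curved progression through the embedding, which is the property that makes pseudotime useful for snapshot data.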

In COVID-19, a highly contagious respiratory illness caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), symptoms range from mild flu-like symptoms to severe respiratory distress. Chest x-rays typically show opacities (bilateral patchy, ground glass) associated with disease severity 81 .

We used COVID-19 chest x-ray images from the BrixIA 82 dataset consisting of 192 images (Fig. 6a ) with expert annotations of disease severity. We used the BrixIA database scores, which are based on six regions annotated by radiologists, to classify disease severity ( Methods ). We embedded raw image features using a pre-trained DenseNet model ( Methods ) and further processed this embedding into a nearest-neighbors-based UMAP space using ehrapy (Fig. 6b and Methods ). Fate mapping based on imaging information ( Methods ) determined a severity ordering from mild to critical cases (Fig. 6b–d ). Images labeled as ‘normal’ are projected to stay within the healthy group, illustrating the robustness of our approach. Images of diseased patients were ordered by disease severity, highlighting clear trajectories from ‘normal’ to ‘critical’ states despite the heterogeneity of the x-ray images stemming from, for example, different zoom levels (Fig. 6a ).

a , Randomly selected chest x-ray images from the BrixIA dataset demonstrate its variance. b , UMAP visualization of the BrixIA dataset embedding shows a separation of disease severity classes. c , Calculated pseudotime for all images increases with distance to the ‘normal’ images. d , Stream projection of fate mapping in UMAP space showcases disease severity trajectory of the COVID-19 chest x-ray images.

Detecting and mitigating biases in EHR data with ehrapy

To showcase how exploratory analysis using ehrapy can reveal and mitigate biases, we analyzed the Fairlearn 83 version of the Diabetes 130-US Hospitals 84 dataset. The dataset covers 10 years (1999–2008) of clinical records from 130 US hospitals, detailing 47 features of diabetes diagnoses, laboratory tests, medications and additional data from up to 14 d of inpatient care of 101,766 diagnosed patient visits ( Methods ). It was originally collected to explore the link between the measurement of hemoglobin A1c (HbA1c) and early readmission.

The cohort primarily consists of White and African American individuals, with only a minority of cases from Asian or Hispanic backgrounds (Extended Data Fig. 10a ). ehrapy’s cohort tracker unveiled selection and surveillance biases when filtering for Medicare recipients for further analysis, resulting in a shift of age distribution toward an age of over 60 years in addition to an increasing ratio of White participants. Using ehrapy’s visualization modules, our analysis showed that HbA1c was measured in only 18.4% of inpatients, with a higher frequency in emergency admissions compared to referral cases (Extended Data Fig. 10b ). Normalization biases can skew data relationships when standardization techniques ignore subgroup variability or assume incorrect distributions. The choice of normalization strategy must be carefully considered to avoid obscuring important factors. When normalizing the number of applied medications individually, differences in distributions between age groups remained. However, when normalizing both distributions jointly with age group as an additional group variable, differences between age groups were masked (Extended Data Fig. 10c ). To investigate missing data and imputation biases, we introduced missingness for the number of applied medications according to an MCAR mechanism, which we verified using ehrapy’s implementation of Little’s test ( P ≤ 0.01 × 10 −2 ), and an MAR mechanism ( Methods ). Whereas imputing the mean in the MCAR case did not affect the overall location of the distribution, it led to an underestimation of the variance, with the standard deviation dropping from 8.1 in the original data to 6.8 in the imputed data (Extended Data Fig. 10d ). Mean imputation in the MAR case skewed both location and variance, shifting the mean from 16.02 to 14.66 and yielding a standard deviation of only 5.72 (Extended Data Fig. 10d ). 
Using ehrapy’s multiple-imputation-based MissForest 85 imputation on the MAR data resulted in a mean of 16.04 and a standard deviation of 6.45. To predict patient readmission in fewer than 30 d, we merged the three smallest race groups, ‘Asian’, ‘Hispanic’ and ‘Other’. Furthermore, we dropped the gender group ‘Unknown/Invalid’ because its small sample size made meaningful assessment impossible, and we performed balanced random undersampling, resulting in 5,677 cases from each condition. We observed an overall balanced accuracy of 0.59 using a logistic regression model. However, the false-negative rate was highest for the races ‘Other’ and ‘Unknown’, whereas their selection rate was lowest, and this model was, therefore, biased (Extended Data Fig. 10e). Using ehrapy’s compatibility with existing machine learning packages, we used Fairlearn’s ThresholdOptimizer (Methods), which improved the selection rates for ‘Other’ from 0.32 to 0.38 and for ‘Unknown’ from 0.23 to 0.42 and the false-negative rates for ‘Other’ from 0.48 to 0.42 and for ‘Unknown’ from 0.61 to 0.45 (Extended Data Fig. 10e).
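For readers unfamiliar with the metrics reported above, the sketch below shows how balanced accuracy, per-group selection rate (the fraction of cases predicted positive) and per-group false-negative rate can be computed. Fairlearn provides these metrics directly; this is a dependency-free illustration in which the function names `group_rates` and `balanced_accuracy` and the toy labels are assumptions, not the study's predictions or code.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and false-negative rate for binary labels."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += p                   # predicted positive
        c["pos"] += t                        # truly positive
        c["fn"] += int(t == 1 and p == 0)    # missed positive
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else float("nan"),
        }
        for g, c in counts.items()
    }


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity; assumes both classes are present."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


# Toy labels (1 = readmitted in fewer than 30 d) for two hypothetical groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
overall = balanced_accuracy(y_true, y_pred)
print(rates)
print(overall)
```

A group with a low selection rate and a high false-negative rate, like group B in this toy example, is the pattern that the analysis above flags as biased.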

Clustering offers a hypothesis-free alternative to supervised classification when clear hypotheses or labels are missing. It has enabled the identification of heart failure subtypes 86, progression pathways 87 and COVID-19 severity states 88. This concept, which is central to ehrapy, further allowed us to identify fine-grained groups of ‘unspecified pneumonia’ cases in the PIC dataset while discovering biomarkers and quantifying effects of medications on LOS. Such retroactive characterization showcases ehrapy’s ability to put complex evidence into context. This approach supports feedback loops to improve diagnostic and therapeutic strategies, leading to more efficiently allocated resources in healthcare.

ehrapy’s flexible data structures enabled us to integrate the heterogeneous UKB data for predictive performance in myocardial infarction. The different data types and distributions posed a challenge for predictive models that was overcome with ehrapy’s pre-processing modules. Our analysis underscores the potential of combining phenotypic and health data at population scale through ehrapy to enhance risk prediction.

By adapting pseudotime approaches that are commonly used in other omics domains, we successfully recovered disease trajectories from raw imaging data with ehrapy. The determined pseudotime, however, only orders data but does not necessarily provide a future projection per patient. Understanding the driver features for fate mapping in image-based datasets is challenging. The incorporation of image segmentation approaches could mitigate this issue and provide a deeper insight into the spatial and temporal dynamics of disease-related processes.

Limitations of our analyses include the lack of control for informative missingness where the absence of information represents information in itself 89 . Translation from Chinese to English in the PIC database can cause information loss and inaccuracies because the Chinese ICD-10 codes are seven characters long compared to the five-character English codes. Incompleteness of databases, such as the lack of radiology images in the PIC database, low sample sizes, underrepresentation of non-White ancestries and participant self-selection, cannot be accounted for and limit generalizability. This restricts deeper phenotyping of, for example, all ‘unspecified pneumonia’ cases with respect to their survival, which could be overcome by the use of multiple databases. Our causal inference use case is limited by unrecorded variables, such as Sequential Organ Failure Assessment (SOFA) scores, and pneumonia-related pathogens that are missing in the causal graph due to dataset constraints, such as high sparsity and substantial missing data, which risk overfitting and can lead to overinterpretation. We counterbalanced this by employing several refutation methods that statistically reject the causal hypothesis, such as a placebo treatment, a random common cause or an unobserved common cause. The longer hospital stays associated with penicillins and cephalosporins may be dataset specific and stem from higher antibiotic resistance, their use as first-line treatments, more severe initial cases, comorbidities and hospital-specific protocols.

Most analysis steps can introduce algorithmic biases where results are misleading or unfavorably affect specific groups. This is particularly relevant in the context of missing data 22, where determining the type of missing data is necessary to handle it correctly. ehrapy includes an implementation of Little’s test 90, which tests whether data are MCAR, to discern missing data types. For MCAR data, single-imputation approaches, such as mean, median or mode imputation, can suffice, but these methods are known to reduce variability 91, 92. Multiple imputation strategies, such as Multiple Imputation by Chained Equations (MICE) 93 and MissForest 85, as implemented in ehrapy, are effective for both MCAR and MAR data 22, 94, 95. MNAR data require pattern-mixture or shared-parameter models that explicitly incorporate the mechanism by which data are missing 96. Because MNAR involves unobserved data, the assumptions about the missingness mechanism cannot be directly verified, making sensitivity analysis crucial 21. ehrapy’s wide range of normalization functions and grouping functionality makes it possible to account for intrinsic variability within subgroups, and its compatibility with Fairlearn 83 can potentially mitigate predictor biases. Generally, we recommend assessing all pre-processing in an iterative manner with respect to downstream applications, such as patient stratification. Moreover, sensitivity analysis can help verify the robustness of all inferred knowledge 97.
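How grouping interacts with normalization can be illustrated with a small z-scoring example. This is a minimal sketch under stated assumptions: the helper names `zscore`, `zscore_by_group` and `group_means` and the toy values are hypothetical and do not correspond to ehrapy's normalization functions.

```python
import statistics


def zscore(values):
    """Standardize values using their overall mean and standard deviation."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mu) / sd for v in values]


def zscore_by_group(values, groups):
    """Standardize values separately within each group."""
    out = [0.0] * len(values)
    for g in set(groups):
        idx = [i for i, x in enumerate(groups) if x == g]
        scaled = zscore([values[i] for i in idx])
        for i, s in zip(idx, scaled):
            out[i] = s
    return out


def group_means(values, groups):
    """Mean of the values within each group."""
    return {
        g: statistics.fmean(v for v, x in zip(values, groups) if x == g)
        for g in set(groups)
    }


# Toy medication counts for two hypothetical age groups.
meds = [8.0, 10.0, 12.0, 18.0, 20.0, 22.0]
age_group = ["<60", "<60", "<60", ">=60", ">=60", ">=60"]

global_means = group_means(zscore(meds), age_group)
grouped_means = group_means(zscore_by_group(meds, age_group), age_group)
print(global_means)
print(grouped_means)
```

Scaling over the whole cohort preserves the gap between the two groups, whereas scaling within each group centers both groups at zero and removes it. Whether that removal is desirable depends on the downstream question, which is why the choice should be assessed iteratively.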

These diverse use cases illustrate ehrapy’s potential to address the need for a computationally efficient, extendable, reproducible and easy-to-use framework. ehrapy is compatible with major standards, such as the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM) 47, HL7, FHIR or openEHR, with flexible support for common tabular data formats. Once data are loaded into an AnnData object, subsequent sharing of analysis results is easy because AnnData objects can be stored and read platform independently. ehrapy’s rich documentation of the application programming interface (API) and extensive hands-on tutorials make EHR analysis accessible to both novices and experienced analysts.

As ehrapy remains under active development, users can expect ehrapy to continuously evolve. We are improving support for the joint analysis of EHR, genetics and molecular data where ehrapy serves as a bridge between the EHR and the omics communities. We further anticipate the generation of EHR-specific reference datasets, so-called atlases 98 , to enable query-to-reference mapping where new datasets get contextualized by transferring annotations from the reference to the new dataset. To promote the sharing and collective analysis of EHR data, we envision adapted versions of interactive single-cell data explorers, such as CELLxGENE 99 or the UCSC Cell Browser 100 , for EHR data. Such web interfaces would also include disparity dashboards 20 to unveil trends of preferential outcomes for distinct patient groups. Additional modules specifically for high-frequency time-series data, natural language processing and other data types are currently under development. With the widespread availability of code-generating large language models, frameworks such as ehrapy are becoming accessible to medical professionals without coding expertise who can leverage its analytical power directly. Therefore, ehrapy, together with a lively ecosystem of packages, has the potential to enhance the scientific discovery pipeline to shape the era of EHR analysis.

All datasets that were used during the development of ehrapy and the use cases were used according to their terms of use as indicated by each provider.

Design and implementation of ehrapy

A unified pipeline as provided by our ehrapy framework streamlines the analysis of EHR data by providing an efficient, standardized approach, which reduces the complexity and variability in data pre-processing and analysis. This consistency ensures reproducibility of results and facilitates collaboration and sharing within the research community. Additionally, the modular structure allows for easy extension and customization, enabling researchers to adapt the pipeline to their specific needs while building on a solid foundational framework.

ehrapy was designed from the ground up as an open-source effort with community support. The package, as well as all associated tutorials and dataset preparation scripts, is open source. Development takes place publicly on GitHub, where the developers discuss feature requests and issues directly with users. This tight interaction between both groups ensures that we implement the most pressing needs to cater to the most important use cases and can guide users when difficulties arise. The open-source nature, extensive documentation and modular structure of ehrapy are designed for other developers to build upon and extend ehrapy’s functionality where necessary. This allows us to focus ehrapy on the most important features and to keep the number of dependencies to a minimum.

ehrapy was implemented in the Python programming language and builds upon numerous existing numerical and scientific open-source libraries, specifically matplotlib 101, seaborn 102, NumPy 103, numba 104, SciPy 105, scikit-learn 53 and pandas 106. Although ehrapy takes considerable advantage of these packages, it also shares their limitations, such as a lack of GPU support or small performance losses due to the translation layer cost for operations between the Python interpreter and the lower-level C language for matrix operations. However, by building on very widely used open-source software, we ensure seamless integration and compatibility with a broad range of tools and platforms to promote community contributions. Additionally, by doing so, we enhance security by allowing a larger pool of developers to identify and address vulnerabilities 107. All functions are grouped into task-specific modules whose implementation is complemented with additional dependencies.

Data preparation

Dataloaders.

ehrapy is compatible with any type of vectorized data, where vectorized refers to the data being stored in structured tables in either on-disk or database form. The input and output module of ehrapy provides readers for common formats, such as OMOP, CSV tables or SQL databases through pandas. When reading in such datasets, the data are stored in the appropriate slots in a new AnnData 46 object. ehrapy’s data module provides access to more than 20 public EHR datasets that feature diseases including, but not limited to, Parkinson’s disease, breast cancer and chronic kidney disease. All dataloaders return AnnData objects to allow for immediate analysis.

AnnData for EHR data

Our framework required a versatile data structure capable of handling various matrix formats, including NumPy 103 for general use cases and interoperability, SciPy 105 sparse matrices for efficient storage, Dask 108 matrices for larger-than-memory analysis and Awkward Array 109 for irregular time-series data. We needed a single data structure that not only stores data but also includes comprehensive annotations for thorough contextual analysis. It was essential for this structure to be widely used and supported, which ensures robustness and continual updates. Interoperability with other analytical packages was a key criterion to facilitate seamless integration within existing tools and workflows. Finally, the data structure had to support both in-memory operations and on-disk storage using formats such as HDF5 (ref. 110) and Zarr 111, ensuring efficient handling and accessibility of large datasets and the ability to easily share them with collaborators.
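The idea of a data matrix stored alongside aligned annotations, filled by a reader that sorts columns into the appropriate slots, can be sketched in a few lines. The class `ToyAnnotatedTable`, the function `read_table` and the CSV content below are hypothetical and serve only to illustrate the layout; they are not the AnnData or ehrapy API, which should be consulted directly for real analyses.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class ToyAnnotatedTable:
    """Hypothetical stand-in for a matrix with aligned annotations."""

    X: list    # n_obs x n_vars numeric matrix, one row per patient visit
    obs: list  # one dict of non-numeric annotations per row
    var: list  # one feature name per column of X

    def __post_init__(self):
        # Annotations must stay aligned with the matrix dimensions.
        if len(self.X) != len(self.obs):
            raise ValueError("obs must have one entry per row of X")
        if any(len(row) != len(self.var) for row in self.X):
            raise ValueError("var must have one entry per column of X")


def read_table(text, obs_columns):
    """Split CSV columns into a numeric matrix and per-row annotations."""
    reader = csv.DictReader(io.StringIO(text))
    var = [c for c in reader.fieldnames if c not in obs_columns]
    X, obs = [], []
    for row in reader:
        # Empty cells are kept as NaN so that missingness stays visible.
        X.append([float(row[c]) if row[c] != "" else float("nan") for c in var])
        obs.append({c: row[c] for c in obs_columns})
    return ToyAnnotatedTable(X=X, obs=obs, var=var)


CSV_TEXT = """patient_id,sex,age,hba1c
p1,F,64,7.1
p2,M,58,
p3,F,71,6.4
"""

table = read_table(CSV_TEXT, obs_columns=["patient_id", "sex"])
print(table.var)
print(table.X)
print(table.obs)
```

Keeping the numeric matrix separate from the annotations is what allows numerical routines to operate on `X` while cohort filters and groupings operate on `obs`.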