Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started


Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e. what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks as important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Criteria cannot be weighted within the rubric

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, only the “proficient” level is described. Feedback space is provided for instructors to give individualized comments to help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantage of single-point rubrics: Requires more work from instructors writing feedback

Step 3 (Optional): Look for templates and examples.

You might search Google for “rubric for persuasive essay at the college level” to see whether any publicly available examples could serve as a starting point. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point, but work through steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives, and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment or assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc. for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Check each criterion against these questions:
  - Can it be observed and measured?
  - Is it important and essential?
  - Is it distinct from other criteria?
  - Is it phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some are more important than others, and how you will weight them.
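If you decide to weight criteria, one common approach is a weighted average of the level each criterion earns. The Python sketch below is a minimal illustration, not a feature of Moodle or any other tool: the criterion names, the 40/30/30 weights, and the 4-level scale are hypothetical assumptions chosen for the example.

```python
# Sketch: weighted score for an analytic rubric.
# Assumptions (hypothetical, for illustration only): a 4-level scale and
# three criteria whose weights are percentages summing to 100.
WEIGHTS = {"thesis": 40, "sequencing": 30, "grammar": 30}
MAX_LEVEL = 4  # highest level on the rating scale


def weighted_score(levels, weights=WEIGHTS, max_level=MAX_LEVEL):
    """Return a 0-100 score from per-criterion levels (1..max_level)."""
    if set(levels) != set(weights):
        raise ValueError("rate every criterion exactly once")
    for criterion, level in levels.items():
        if not 1 <= level <= max_level:
            raise ValueError(f"{criterion}: level {level} is off the scale")
    # Each criterion contributes weight * (level / max_level).
    earned = sum(weights[c] * levels[c] for c in weights)
    possible = max_level * sum(weights.values())
    return 100 * earned / possible


print(weighted_score({"thesis": 4, "sequencing": 3, "grammar": 2}))  # 77.5
```

With these weights, dropping one level on the thesis criterion costs 10 points, while the same drop on grammar costs 7.5, which is how weighting expresses relative importance.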

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels mean more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (for example 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria may need more levels than others. The rubric needs to be comprehensible and organized. Choose a number of columns that lets each criterion progress logically and naturally across the levels.

Step 6: Write descriptions for each level of the rating scale

AI tools such as ChatGPT can be useful for drafting a rubric. Write your prompt carefully so the AI assistant produces what you need: for example, include the assignment description, the criteria you consider important, and the number of performance levels you want. Use the results as a starting point, and revise the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient,” i.e., B-level work, and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs. absence, complete vs. incomplete, many vs. none, major vs. minor, consistent vs. inconsistent, always vs. never. If you have an indicator described in one level, it will need to be described in each level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for each/every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each criterion so that every cell in the table is filled. These descriptions help students understand your expectations and their performance relative to those expectations.
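One way to check that no cell is left empty is to store the rubric as a mapping from each criterion to its level descriptors and scan for gaps. This Python sketch assumes that structure, which is a hypothetical choice for illustration; the abbreviated descriptors are adapted from the final-paper example in this guide, with one cell deliberately left blank.

```python
# Sketch: find empty cells in an analytic rubric.
# The rubric is modeled (hypothetically) as {criterion: {level: descriptor}}.
LEVELS = [4, 3, 2, 1]

rubric = {
    "Thesis": {
        4: "Central purpose is clear; ideas are always well-focused.",
        3: "Central purpose is clear; ideas are almost always focused.",
        2: "Central purpose is identified; ideas are mostly focused.",
        1: "Purpose is not well-defined; thoughts appear disconnected.",
    },
    "Sequencing": {
        4: "Logical sequence that flows naturally.",
        3: "Logical sequence the reader follows with little difficulty.",
        2: "",  # left blank on purpose to show the check
        1: "Poorly sequenced; the audience has difficulty following.",
    },
}


def missing_cells(rubric, levels=LEVELS):
    """Return (criterion, level) pairs whose descriptor is absent or blank."""
    gaps = []
    for criterion, cells in rubric.items():
        for level in levels:
            if not cells.get(level, "").strip():
                gaps.append((criterion, level))
    return gaps


print(missing_cells(rubric))  # [('Sequencing', 2)]
```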

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it to Moodle by typing it in. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: Rubistar, iRubric

Step 8: Pilot-test your rubric

Prior to implementing your rubric in a live course, obtain feedback from:

- Teaching assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is appropriate to students' level. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying “uses excellent sources,” describe what makes a source excellent so that students will know. You might also reduce the reliance on quantity, such as a number of allowable misspelled words, and focus instead on, for example, how distracting any spelling errors are.

Example of an analytic rubric for a final paper

| Criterion | Above Average (4) | Sufficient (3) | Developing (2) | Needs improvement (1) |
|---|---|---|---|---|
| Thesis supported by relevant information and ideas | The central purpose of the student work is clear and supporting ideas are always well-focused. Details are relevant and enrich the work. | The central purpose of the student work is clear and ideas are almost always focused in a way that supports the thesis. Relevant details illustrate the author’s ideas. | The central purpose of the student work is identified. Ideas are mostly focused in a way that supports the thesis. | The purpose of the student work is not well-defined. A number of central ideas do not support the thesis. Thoughts appear disconnected. |
| Sequencing of elements/ideas | Information and ideas are presented in a logical sequence which flows naturally and is engaging to the audience. | Information and ideas are presented in a logical sequence that the reader can follow with little or no difficulty. | Information and ideas are presented in an order that the audience can mostly follow. | Information and ideas are poorly sequenced. The audience has difficulty following the thread of thought. |
| Correctness of grammar and spelling | Minimal to no distracting errors in grammar and spelling. | The readability of the work is only slightly interrupted by spelling and/or grammatical errors. | Grammatical and/or spelling errors distract from the work. | The readability of the work is seriously hampered by spelling and/or grammatical errors. |

Example of a holistic rubric for a final paper

| Score | Description |
|---|---|
| 4 | The audience is able to easily identify the central message of the work and is engaged by the paper’s clear focus and relevant details. Information is presented logically and naturally. There are minimal to no distracting errors in grammar and spelling. |
| 3 | The audience is easily able to identify the focus of the student work, which is supported by relevant ideas and supporting details. Information is presented in a logical manner that is easily followed. The readability of the work is only slightly interrupted by errors. |
| 2 | The audience can identify the central purpose of the student work with little difficulty, and supporting ideas are present and clear. The information is presented in an orderly fashion that can be followed with little difficulty. Grammatical and spelling errors distract from the work. |
| 1 | The audience cannot clearly or easily identify the central ideas or purpose of the student work. Information is presented in a disorganized fashion causing the audience to have difficulty following the author’s ideas. The readability of the work is seriously hampered by errors. |

Single-Point Rubric

| Advanced (evidence of exceeding standards) | Criteria described at a proficient level | Concerns (things that need work) |
|---|---|---|
|  | Criterion #1: Description reflecting achievement of proficient level of performance |  |
|  | Criterion #2: Description reflecting achievement of proficient level of performance |  |
|  | Criterion #3: Description reflecting achievement of proficient level of performance |  |
|  | Criterion #4: Description reflecting achievement of proficient level of performance |  |
| 90-100 points | 80-89 points | <80 points |
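The point bands in the last row work as a simple lookup from a total score to a column. The Python sketch below assumes totals out of 100 and treats each band's lower bound as inclusive, so a score of exactly 90 counts as Advanced and exactly 80 as proficient; the function name is hypothetical.

```python
# Sketch: map a single-point rubric total (out of 100) to the column bands
# in the example: 90-100 Advanced, 80 up to 90 Proficient, below 80 Concerns.
# Assumption: lower bounds are inclusive and totals fall between 0 and 100.
def band(total):
    if not 0 <= total <= 100:
        raise ValueError("total must be between 0 and 100")
    if total >= 90:
        return "Advanced"
    if total >= 80:
        return "Proficient"
    return "Concerns"


for score in (95, 90, 85, 80, 72):
    print(score, band(score))
```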

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Other resources

- DePaul University (n.d.). Rubrics.

- Gonzalez, J. (2014). Know your terms: Holistic, Analytic, and Single-Point Rubrics. Cult of Pedagogy.

- Goodrich, H. (1996). Understanding rubrics. Teaching for Authentic Student Performance, 54(4), 14-17.

- Miller, A. (2012). Tame the beast: tips for designing and using rubrics.

- Ragupathi, K., Lee, A. (2020). Beyond Fairness and Consistency in Grading: The Role of Rubrics in Higher Education. In: Sanger, C., Gleason, N. (eds) Diversity and Inclusion in Global Higher Education. Palgrave Macmillan, Singapore.

Assessment Rubrics

A rubric is commonly defined as a tool that articulates the expectations for an assignment by listing criteria and, for each criterion, describing levels of quality (Andrade, 2000; Arter & Chappuis, 2007; Stiggins, 2001). Criteria are used to determine the level at which student work meets expectations. Markers of quality give students a clear idea of what must be done to demonstrate a certain level of mastery, understanding, or proficiency (e.g., "Exceeds Expectations" does xyz, "Meets Expectations" does only xy or yz, "Developing" does only x or y or z). Rubrics can be used for any assignment in a course, or for any way in which students are asked to demonstrate what they've learned. They can also be used to facilitate self- and peer-review of student work.

Rubrics aren't just for summative evaluation. They can be used as a teaching tool as well. When used as part of a formative assessment, they can help students understand the overall learning expected, its specific components, and the level of learning expected, and then judge their current level of learning to inform revision and improvement (Reddy & Andrade, 2010).

Why use rubrics?

Rubrics help instructors:

- Provide students with feedback that is clear, directed, and focused on ways to improve learning.

- Demystify assignment expectations so students can focus on the work instead of guessing "what the instructor wants."

- Reduce time spent on grading and evaluate student learning consistently across students and throughout a course.

Rubrics help students:

- Focus their efforts on completing assignments in line with clearly set expectations.

- Reflect on their own and their peers' learning, making informed changes to achieve the desired learning level.

Developing a Rubric

When developing a rubric, you might:

- Select an assignment for your course, ideally one you find time-intensive to grade or one that students report as having unclear expectations.

- Decide what you want students to demonstrate about their learning through that assignment. These are your criteria.

- Identify the markers of quality you feel comfortable using to evaluate students’ level of learning, often paired with a numerical scale or descriptive labels (e.g., "Accomplished," "Emerging," "Beginning" for a developmental approach).

- Give students the rubric ahead of time. Advise them to use it to guide their completion of the assignment.

It can be overwhelming to create a rubric for every assignment in a class at once, so start by creating one rubric for one assignment. See how it goes and develop more from there! Also, do not reinvent the wheel. Rubric templates and examples exist all over the Internet, or consider asking colleagues if they have developed rubrics for similar assignments.

Sample Rubrics

Examples of holistic and analytic rubrics: see Tables 2 & 3 in “Rubrics: Tools for Making Learning Goals and Evaluation Criteria Explicit for Both Teachers and Learners” (Allen & Tanner, 2006)

Examples across assessment types: see “Creating and Using Rubrics,” Carnegie Mellon Eberly Center for Teaching Excellence & Educational Innovation

“VALUE Rubrics”: see the Association of American Colleges and Universities’ set of free, downloadable rubrics, with foci including creative thinking, problem solving, and information literacy.

- Andrade, H. (2000). Using rubrics to promote thinking and learning. Educational Leadership, 57(5), 13-18.

- Arter, J., & Chappuis, J. (2007). Creating and recognizing quality rubrics. Upper Saddle River, NJ: Pearson/Merrill Prentice Hall.

- Reddy, Y., & Andrade, H. (2010). A review of rubric use in higher education. Assessment & Evaluation in Higher Education, 35(4), 435-448.

- Stiggins, R. J. (2001). Student-involved classroom assessment (3rd ed.). Upper Saddle River, NJ: Prentice-Hall.


15 Helpful Scoring Rubric Examples for All Grades and Subjects

In the end, they actually make grading easier.

When it comes to student assessment and evaluation, there are a lot of methods to consider. In some cases, testing is the best way to assess a student’s knowledge, and the answers are either right or wrong. But often, assessing a student’s performance is much less clear-cut. In these situations, a scoring rubric is often the way to go, especially if you’re using standards-based grading. Here’s what you need to know about this useful tool, along with lots of rubric examples to get you started.

What is a scoring rubric?

In the United States, a rubric is a guide that lays out the performance expectations for an assignment. It helps students understand what’s required of them, and guides teachers through the evaluation process. (Note that in other countries, the term “rubric” may instead refer to the set of instructions at the beginning of an exam. To avoid confusion, some people use the term “scoring rubric” instead.)

A rubric generally has three parts:

- Performance criteria: These are the various aspects on which the assignment will be evaluated. They should align with the desired learning outcomes for the assignment.

- Rating scale: This could be a number system (often 1 to 4) or words like “exceeds expectations, meets expectations, below expectations,” etc.

- Indicators: These describe the qualities needed to earn a specific rating for each of the performance criteria. The level of detail may vary depending on the assignment and the purpose of the rubric itself.

Rubrics take more time to develop up front, but they help ensure more consistent assessment, especially when the skills being assessed are more subjective. A well-developed rubric can actually save teachers a lot of time when it comes to grading. What’s more, sharing your scoring rubric with students in advance often helps improve performance. This way, students have a clear picture of what’s expected of them and what they need to do to achieve a specific grade or performance rating.


Types of Rubrics

There are three basic rubric categories, each with its own purpose.

Holistic Rubric

Source: Cambrian College

This type of rubric combines all the scoring criteria in a single scale. They’re quick to create and use, but they have drawbacks. If a student’s work spans different levels, it can be difficult to decide which score to assign. They also make it harder to provide feedback on specific aspects.

Traditional letter grades are a type of holistic rubric. So are the popular “hamburger rubric” and “cupcake rubric” examples.

Analytic Rubric

Source: University of Nebraska

Analytic rubrics are much more complex and generally take a great deal more time up front to design. They include specific details of the expected learning outcomes, and descriptions of what criteria are required to meet various performance ratings in each. Each rating is assigned a point value, and the total number of points earned determines the overall grade for the assignment.

Though they’re more time-intensive to create, analytic rubrics actually save time while grading. Teachers can simply circle or highlight any relevant phrases in each rating, and add a comment or two if needed. They also help ensure consistency in grading, and make it much easier for students to understand what’s expected of them.


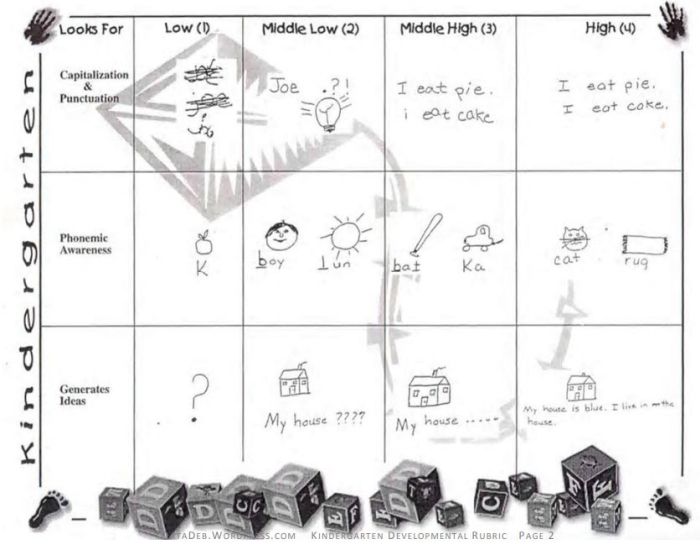

Developmental Rubric

Source: Deb’s Data Digest

A developmental rubric is a subset of analytic rubrics, but it’s used to assess progress along the way rather than to determine a final score on an assignment. It leaves off the point values and focuses instead on giving feedback using the criteria and indicators of performance. The details help students understand their achievements and highlight the specific skills they still need to improve.


Ready to create your own rubrics? Check out these examples across all grades and subjects for inspiration.

Elementary School Rubric Examples

These elementary school rubric examples come from real teachers who use them with their students. Adapt them to fit your needs and grade level.

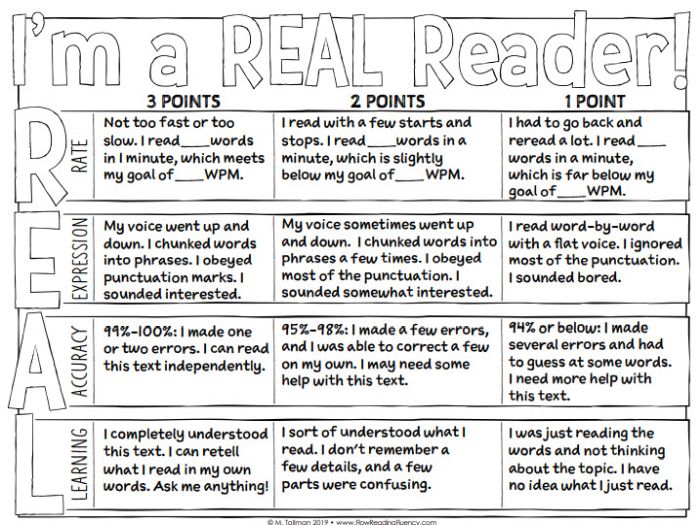

Reading Fluency Rubric

You can use this one as an analytic rubric by counting up points to earn a final score, or just to provide developmental feedback. There’s a second rubric page available specifically to assess prosody (reading with expression).

Learn more: Teacher Thrive

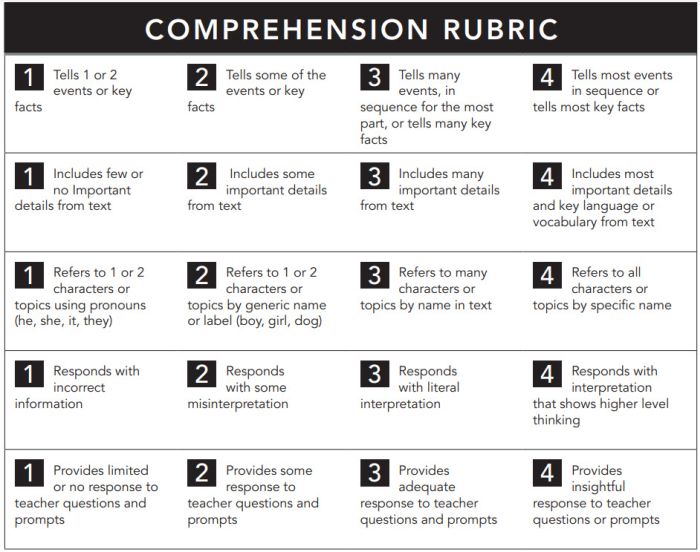

Reading Comprehension Rubric

The nice thing about this rubric is that you can use it at any grade level, for any text. If you like this style, a matching reading fluency rubric is also available.

Learn more: Pawprints Resource Center

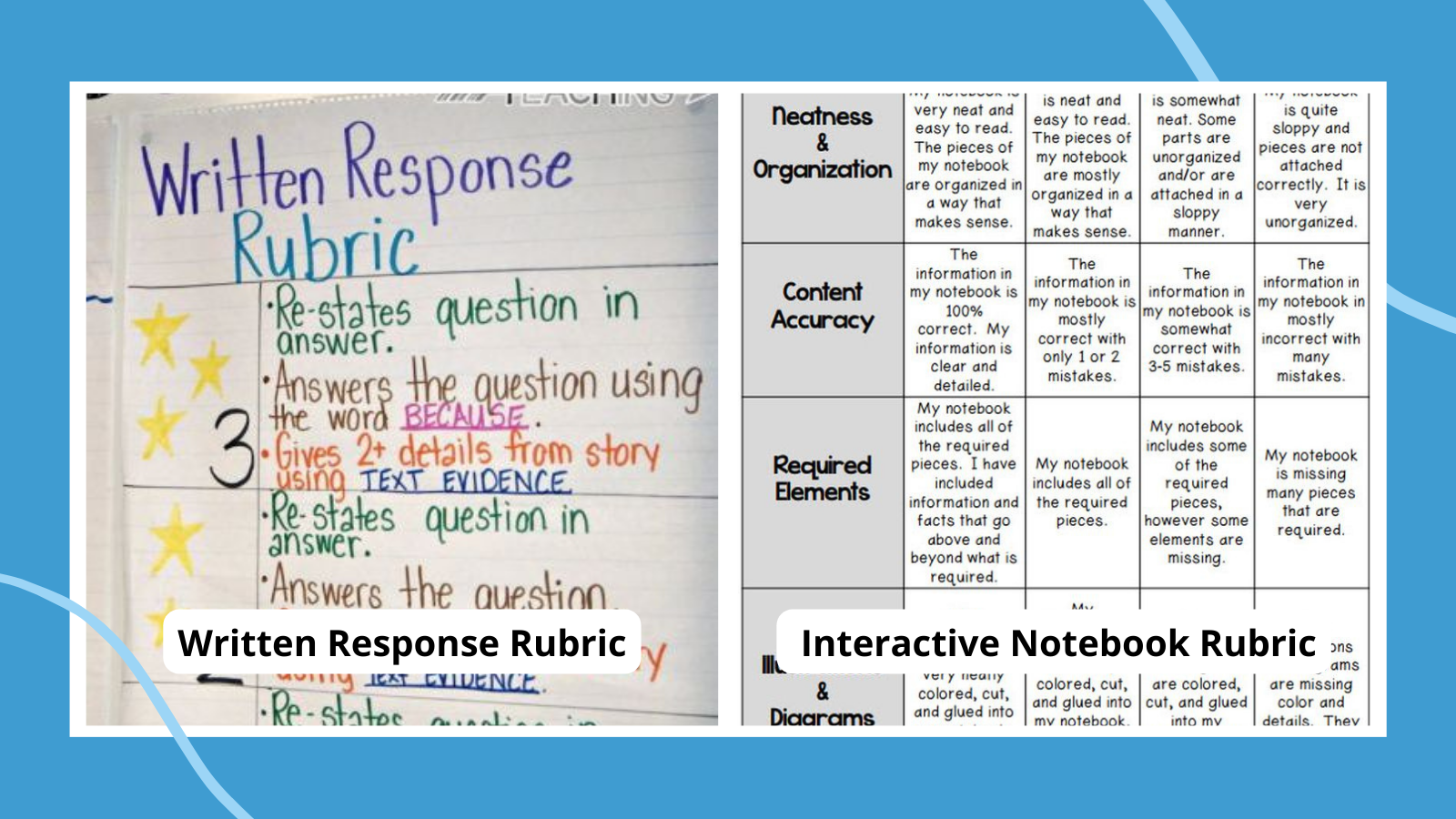

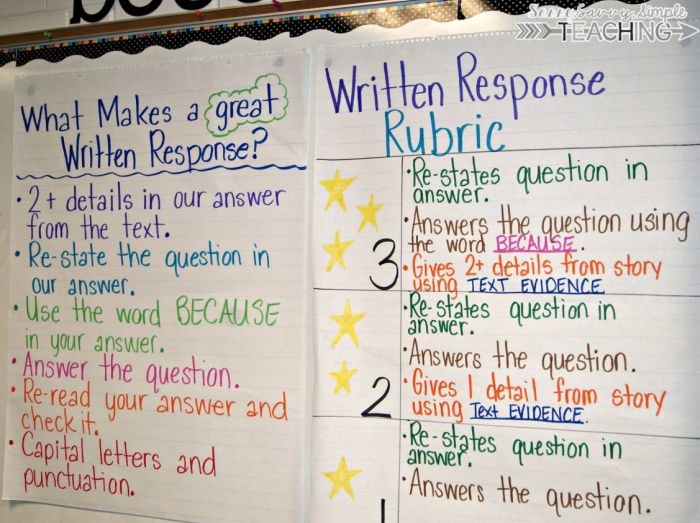

Written Response Rubric

Rubrics aren’t just for huge projects. They can also help kids work on very specific skills, like this one for improving written responses on assessments.

Learn more: Dianna Radcliffe: Teaching Upper Elementary and More

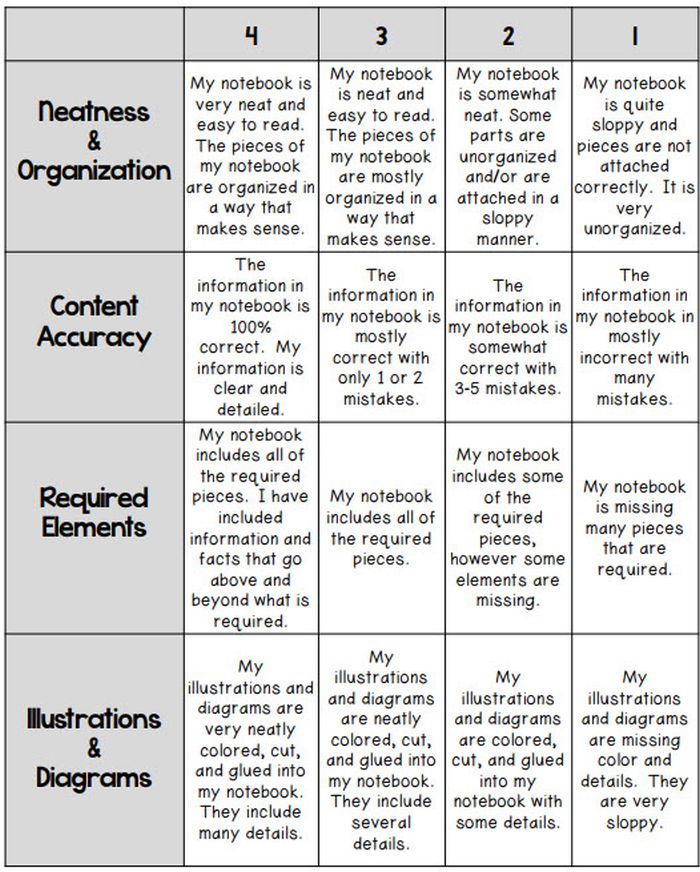

Interactive Notebook Rubric

If you use interactive notebooks as a learning tool, this rubric can help kids stay on track and meet your expectations.

Learn more: Classroom Nook

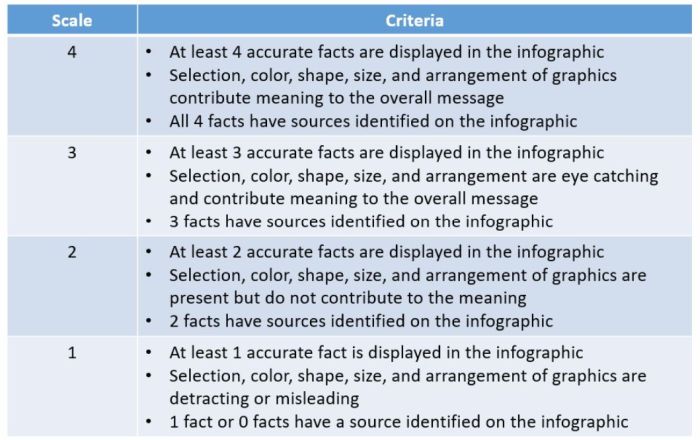

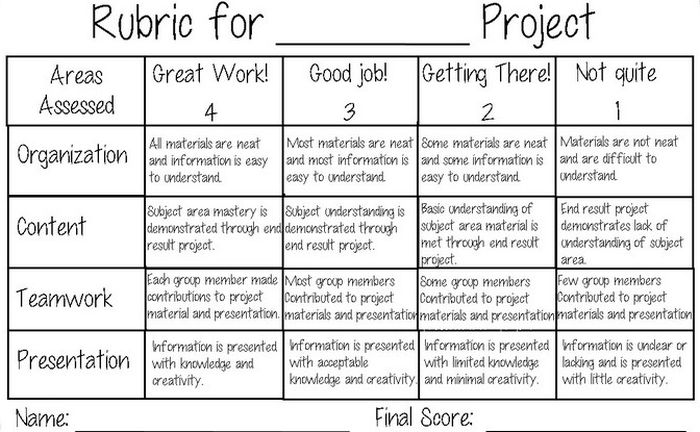

Project Rubric

Use this simple rubric as it is, or tweak it to include more specific indicators for the project you have in mind.

Learn more: Tales of a Title One Teacher

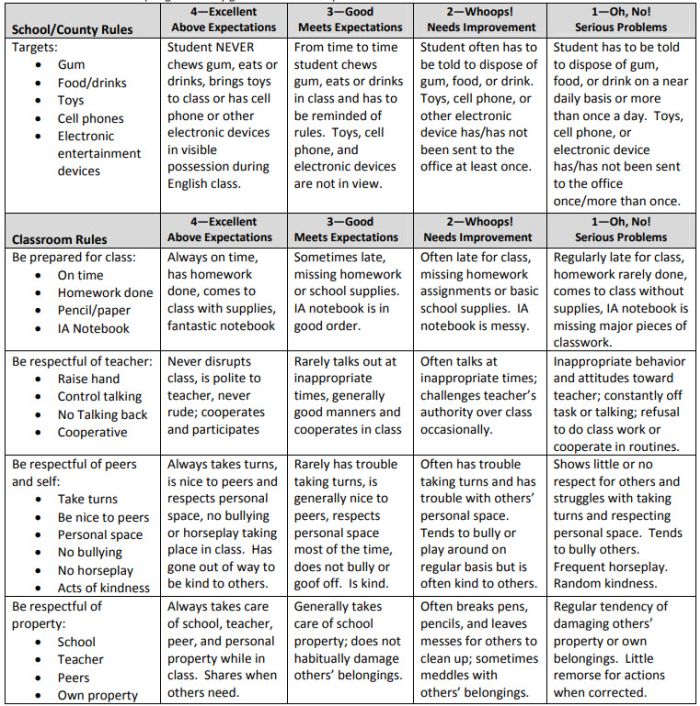

Behavior Rubric

Developmental rubrics are perfect for assessing behavior and helping students identify opportunities for improvement. Send these home regularly to keep parents in the loop.

Learn more: Teachers.net Gazette

Middle School Rubric Examples

In middle school, use rubrics to offer detailed feedback on projects, presentations, and more. Be sure to share them with students in advance, and encourage them to use them as they work so they’ll know if they’re meeting expectations.

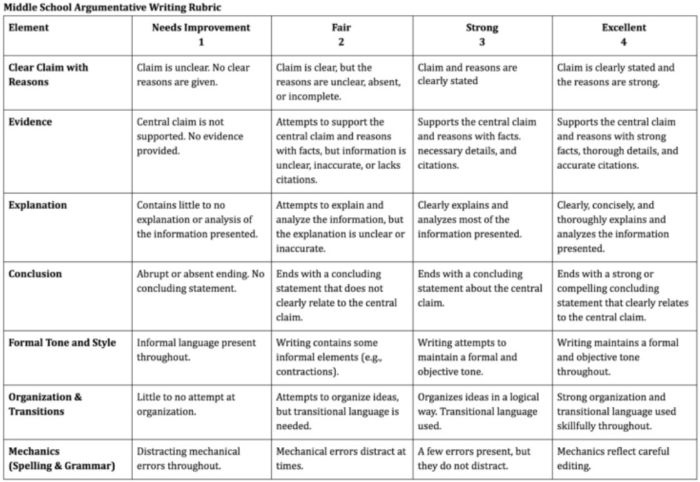

Argumentative Writing Rubric

Argumentative writing is a part of language arts, social studies, science, and more. That makes this rubric especially useful.

Learn more: Dr. Caitlyn Tucker

Role-Play Rubric

Role-plays can be really useful when teaching social and critical thinking skills, but it’s hard to assess them. Try a rubric like this one to evaluate and provide useful feedback.

Learn more: A Question of Influence

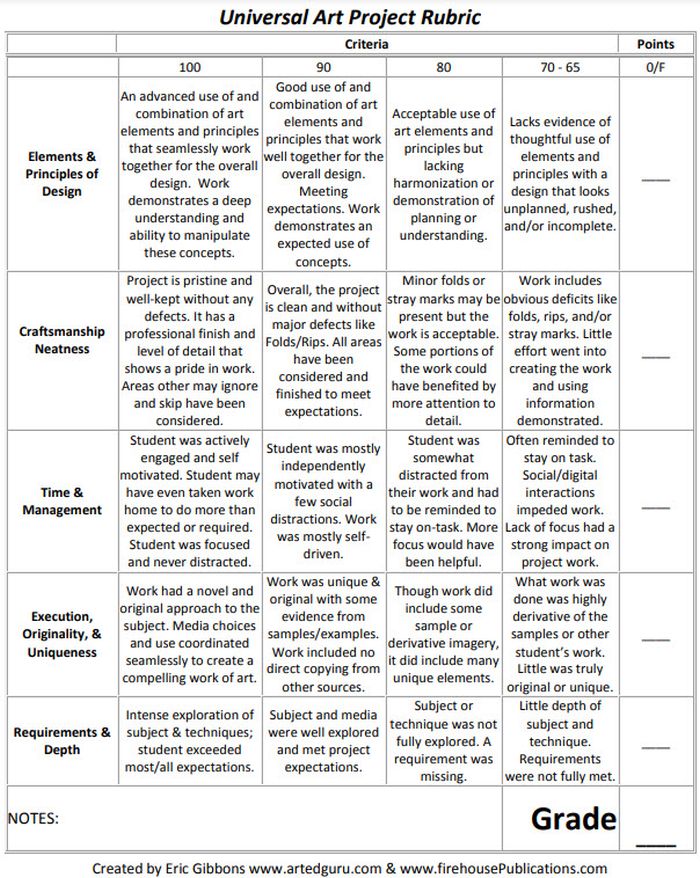

Art Project Rubric

Art is one of those subjects where grading can feel very subjective. Bring some objectivity to the process with a rubric like this.

Source: Art Ed Guru

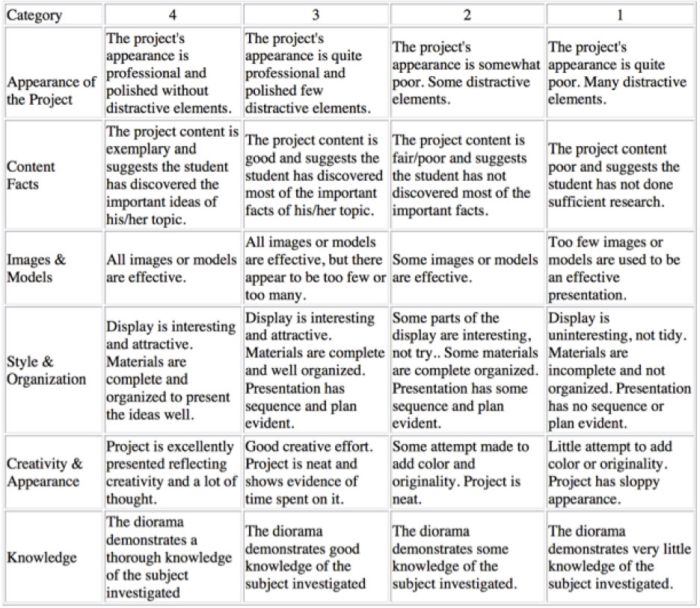

Diorama Project Rubric

You can use diorama projects in almost any subject, and they’re a great chance to encourage creativity. Simplify the grading process and help kids know how to make their projects shine with this scoring rubric.

Learn more: Historyourstory.com

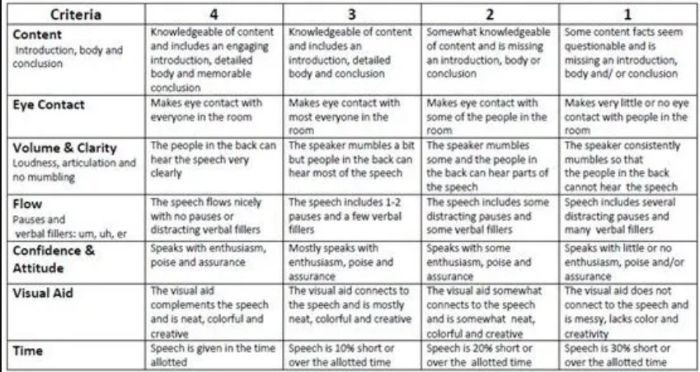

Oral Presentation Rubric

Rubrics are terrific for grading presentations, since you can include a variety of skills and other criteria. Consider letting students use a rubric like this to offer peer feedback too.

Learn more: Bright Hub Education

High School Rubric Examples

In high school, it’s important to include your grading rubrics when you give assignments like presentations, research projects, or essays. Kids who go on to college will definitely encounter rubrics, so helping them become familiar with them now will help in the future.

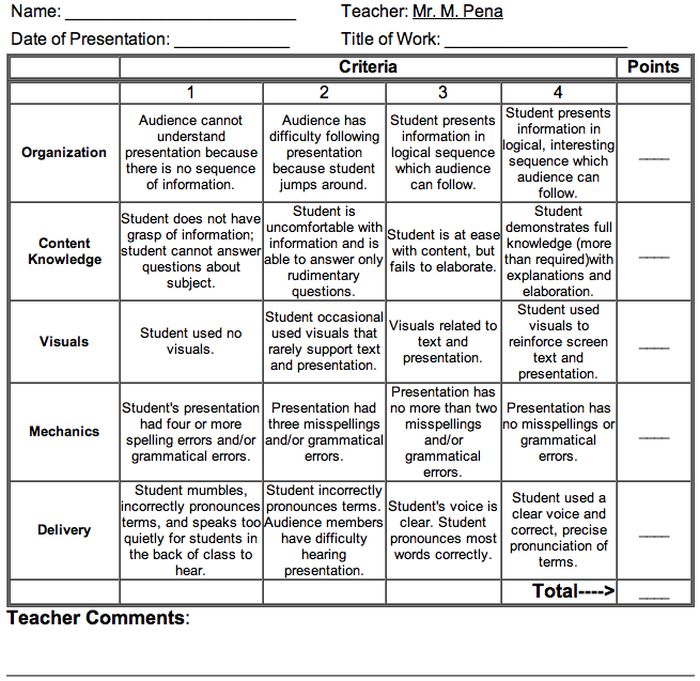

Presentation Rubric

Analyze a student’s presentation both for content and communication skills with a rubric like this one. If needed, create a separate one for content knowledge with even more criteria and indicators.

Learn more: Michael A. Pena Jr.

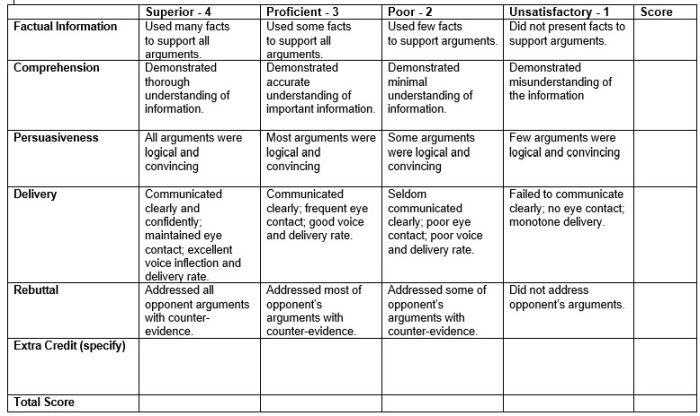

Debate Rubric

Debate is a valuable learning tool that encourages critical thinking and oral communication skills. This rubric can help you assess those skills objectively.

Learn more: Education World

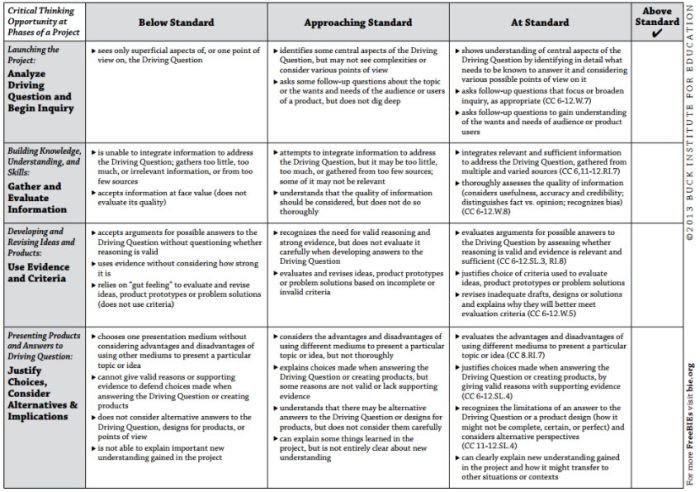

Project-Based Learning Rubric

Implementing project-based learning can be time-intensive, but the payoffs are worth it. Try this rubric to make student expectations clear and end-of-project assessment easier.

Learn more: Free Technology for Teachers

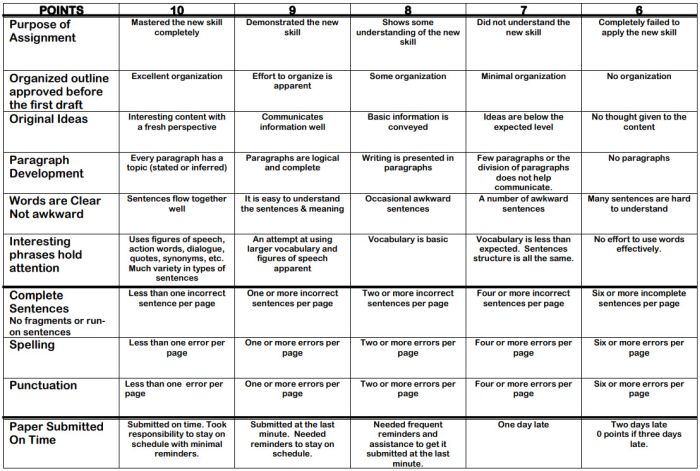

100-Point Essay Rubric

Need an easy way to convert a scoring rubric to a letter grade? This example for essay writing earns students a final score out of 100 points.

Learn more: Learn for Your Life
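Converting rubric points to a 100-point score generally takes two steps: scale the raw total, then apply a grading scale. This Python sketch assumes a rubric with a known maximum raw score and a common 90/80/70/60 letter-grade cutoff scale; both the cutoffs and the function names are illustrative assumptions, so substitute your own grading policy.

```python
# Sketch: scale raw rubric points to 100 and convert to a letter grade.
# Assumption: a 90/80/70/60 cutoff scale; replace with your own policy.
CUTOFFS = [(90, "A"), (80, "B"), (70, "C"), (60, "D")]


def to_percent(raw_points, max_points):
    """Scale a raw rubric total to a 0-100 score."""
    if max_points <= 0 or not 0 <= raw_points <= max_points:
        raise ValueError("need max_points > 0 and 0 <= raw_points <= max_points")
    return 100 * raw_points / max_points


def letter_grade(percent, cutoffs=CUTOFFS):
    """Return the first letter whose cutoff the score meets, else 'F'."""
    for minimum, letter in cutoffs:
        if percent >= minimum:
            return letter
    return "F"


# Example: five criteria scored 1-4 give a maximum of 20 raw points.
score = to_percent(17, 20)
print(score, letter_grade(score))  # 85.0 B
```

Keeping the cutoffs in a separate list makes it easy to swap in a different grading scale without touching the scaling step.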

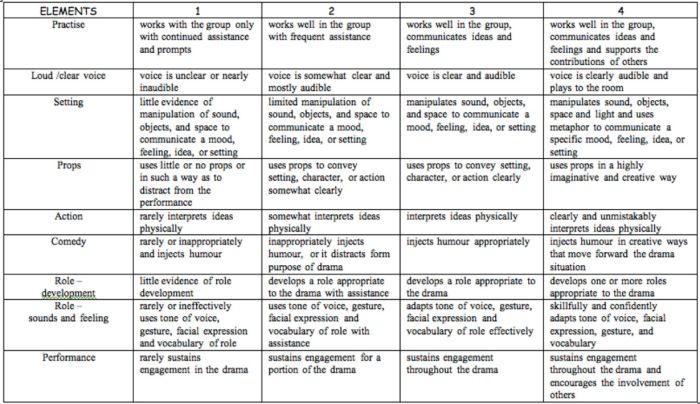

Drama Performance Rubric

If you’re unsure how to grade a student’s participation and performance in drama class, consider this example. It offers lots of objective criteria and indicators to evaluate.

Learn more: Chase March


Copyright © 2024. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256

Writing Rubrics [Examples, Best Practices, & Free Templates]

Writing rubrics are essential tools for teachers.

Rubrics can improve both teaching and learning. This guide will explain writing rubrics, their benefits, and how to create and use them effectively.

What Is a Writing Rubric?


A writing rubric is a scoring guide used to evaluate written work.

It lists criteria and describes levels of quality from excellent to poor. Rubrics provide a standardized way to assess writing.

They make expectations clear and grading consistent.

Key Components of a Writing Rubric

- Criteria: Specific aspects of writing being evaluated (e.g., grammar, organization).

- Descriptors: Detailed descriptions of what each level of performance looks like.

- Scoring Levels: Typically, a range (e.g., 1-4 or 1-6) showing levels of mastery.

Example Breakdown

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Grammar | No errors | Few minor errors | Several errors | Many errors |
| Organization | Clear and logical | Mostly clear | Somewhat clear | Not clear |
| Content | Thorough and insightful | Good, but not thorough | Basic, lacks insight | Incomplete or off-topic |

Benefits of Using Writing Rubrics

Writing rubrics offer many advantages:

- Clarity: Rubrics clarify expectations for students. They know what is required for each level of performance.

- Consistency: Rubrics standardize grading. This ensures fairness and consistency across different students and assignments.

- Feedback: Rubrics provide detailed feedback. Students understand their strengths and areas for improvement.

- Efficiency: Rubrics streamline the grading process. Teachers can evaluate work more quickly and systematically.

- Self-Assessment: Students can use rubrics to self-assess. This promotes reflection and responsibility for their learning.

Examples of Writing Rubrics

Here are some examples of writing rubrics.

Narrative Writing Rubric

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Story Elements | Well-developed | Developed, some details | Basic, missing details | Underdeveloped |
| Creativity | Highly creative | Creative | Some creativity | Lacks creativity |
| Grammar | No errors | Few minor errors | Several errors | Many errors |
| Organization | Clear and logical | Mostly clear | Somewhat clear | Not clear |
| Language Use | Rich and varied | Varied | Limited | Basic or inappropriate |

Persuasive Writing Rubric

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Argument | Strong and convincing | Convincing, some gaps | Basic, lacks support | Weak or unsupported |
| Evidence | Strong and relevant | Relevant, but not strong | Some relevant, weak | Irrelevant or missing |
| Grammar | No errors | Few minor errors | Several errors | Many errors |
| Organization | Clear and logical | Mostly clear | Somewhat clear | Not clear |
| Language Use | Persuasive and engaging | Engaging | Somewhat engaging | Not engaging |

Best Practices for Creating Writing Rubrics

Let’s look at some best practices for creating useful writing rubrics.

1. Define Clear Criteria

Identify specific aspects of writing to evaluate. Be clear and precise.

The criteria should reflect the key components of the writing task. For example, for a narrative essay, criteria might include plot development, character depth, and use of descriptive language.

Clear criteria help students understand what is expected and allow teachers to provide targeted feedback.

Insider Tip: Collaborate with colleagues to establish consistent criteria across grade levels. This ensures uniformity in expectations and assessments.

2. Use Detailed Descriptors

Describe what each level of performance looks like.

This ensures transparency and clarity. Avoid vague language. Instead of saying “good,” describe what “good” entails. For example, “Few minor grammatical errors that do not impede readability.”

Detailed descriptors help students gauge their performance accurately.

Insider Tip: Use student work samples to illustrate each performance level. This provides concrete examples and helps students visualize expectations.

3. Involve Students

Involve students in the rubric creation process. This increases their understanding and buy-in.

Ask for their input on what they think is important in their writing.

This collaborative approach not only demystifies the grading process but also fosters a sense of ownership and responsibility in students.

Insider Tip: Conduct a workshop where students help create a rubric for an upcoming assignment. This interactive session can clarify doubts and make students more invested in their work.

4. Align with Objectives

Ensure the rubric aligns with learning objectives. This ensures relevance and focus.

If the objective is to enhance persuasive writing skills, the rubric should emphasize argument strength, evidence quality, and persuasive techniques.

Alignment ensures that the assessment directly supports instructional goals.

Insider Tip: Regularly revisit and update rubrics to reflect changes in curriculum and instructional priorities. This keeps the rubrics relevant and effective.

5. Review and Revise

Regularly review and revise rubrics. Ensure they remain accurate and effective.

Solicit feedback from students and colleagues. Continuous improvement of rubrics ensures they remain a valuable tool for both assessment and instruction.

Insider Tip: After using a rubric, take notes on its effectiveness. Were students confused by any criteria? Did the rubric cover all necessary aspects of the assignment? Use these observations to make adjustments.

6. Be Consistent

Use the rubric consistently across all assignments.

This ensures fairness and reliability. Consistency in applying the rubric helps build trust with students and maintains the integrity of the assessment process.

Insider Tip: Develop a grading checklist to accompany the rubric. This can help ensure that all criteria are consistently applied and none are overlooked during the grading process.

7. Provide Examples

Provide examples of each performance level.

This helps students understand expectations. Use annotated examples to show why a particular piece of writing meets a specific level.

This visual and practical demonstration can be more effective than descriptions alone.

Insider Tip: Create a portfolio of exemplar works for different assignments. This can be a valuable resource for both new and experienced teachers to standardize grading.

How to Use Writing Rubrics Effectively

Here is how to use writing rubrics like the pros.

1. Introduce Rubrics Early

Introduce rubrics at the beginning of the assignment.

Explain each criterion and performance level. This upfront clarity helps students understand what is expected and guides their work from the start.

Insider Tip: Conduct a rubric walkthrough session where you discuss each part of the rubric in detail. Allow students to ask questions and provide examples to illustrate each criterion.

2. Use Rubrics as a Teaching Tool

Use rubrics to teach writing skills. Discuss what constitutes good writing and why.

This can be an opportunity to reinforce lessons on grammar, organization, and other writing components.

Insider Tip: Pair the rubric with writing workshops. Use the rubric to critique sample essays and show students how to apply the rubric to improve their own writing.

3. Provide Feedback

Use the rubric to give detailed feedback. Highlight strengths and areas for improvement.

This targeted feedback helps students understand their performance and learn how to improve.

Insider Tip: Instead of just marking scores, add comments next to each criterion on the rubric. This personalized feedback can be more impactful and instructive for students.

4. Encourage Self-Assessment

Encourage students to use rubrics to self-assess.

This promotes reflection and growth. Before submitting their work, ask students to evaluate their own writing against the rubric.

This practice fosters self-awareness and critical thinking.

Insider Tip: Incorporate self-assessment as a mandatory step in the assignment process. Provide a simplified version of the rubric for students to use during self-assessment.

5. Use Rubrics for Peer Assessment

Use rubrics for peer assessment. This allows students to learn from each other.

Peer assessments can provide new perspectives and reinforce learning.

Insider Tip: Conduct a peer assessment workshop. Train students on how to use the rubric to evaluate each other’s work constructively. This can improve the quality of peer feedback.

6. Reflect and Improve

Reflect on the effectiveness of the rubric. Make adjustments as needed for future assignments.

Continuous reflection ensures that rubrics remain relevant and effective tools for assessment and learning.

Insider Tip: After an assignment, hold a debrief session with students to gather their feedback on the rubric. Use their insights to make improvements.


Common Mistakes with Writing Rubrics

Creating and using writing rubrics can be incredibly effective, but there are common mistakes that can undermine their effectiveness.

Here are some pitfalls to avoid:

1. Vague Criteria

Vague criteria can confuse students and lead to inconsistent grading.

Ensure that each criterion is specific and clearly defined. Ambiguous terms like “good” or “satisfactory” should be replaced with concrete descriptions of what those levels of performance look like.

2. Overly Complex Rubrics

While detail is important, overly complex rubrics can be overwhelming for both students and teachers.

Too many criteria and performance levels can complicate the grading process and make it difficult for students to understand what is expected.

Keep rubrics concise and focused on the most important aspects of the assignment.

3. Inconsistent Application

Applying the rubric inconsistently can lead to unfair grading.

Ensure that you apply the rubric in the same way for all students and all assignments. Consistency builds trust and ensures that grades accurately reflect student performance.

4. Ignoring Student Input

Ignoring student input when creating rubrics can result in criteria that do not align with student understanding or priorities.

Involving students in the creation process can enhance their understanding and engagement with the rubric.

5. Failing to Update Rubrics

Rubrics should evolve to reflect changes in instructional goals and student needs.

Failing to update rubrics can result in outdated criteria that no longer align with current teaching objectives.

Regularly review and revise rubrics to keep them relevant and effective.

6. Lack of Examples

Without examples, students may struggle to understand the expectations for each performance level.

Providing annotated examples of work that meets each criterion can help students visualize what is required and guide their efforts more effectively.

7. Not Providing Feedback

Rubrics should be used as a tool for feedback, not just scoring.

Simply assigning a score without providing detailed feedback can leave students unclear about their strengths and areas for improvement.

Use the rubric to give comprehensive feedback that guides students’ growth.

8. Overlooking Self-Assessment and Peer Assessment

Self-assessment and peer assessment are valuable components of the learning process.

Overlooking these opportunities can limit students’ ability to reflect on their own work and learn from their peers.

Encourage students to use the rubric for self and peer assessment to deepen their understanding and enhance their skills.

What Is a Holistic Scoring Rubric for Writing?

A holistic scoring rubric for writing is a type of rubric that evaluates a piece of writing as a whole rather than breaking it down into separate criteria.

This approach provides a single overall score based on the general impression of the writing’s quality and effectiveness.

Here’s a closer look at holistic scoring rubrics.

Key Features of Holistic Scoring Rubrics

- Single Overall Score: Assigns one score based on the overall quality of the writing.

- General Criteria: Focuses on the overall effectiveness, coherence, and impact of the writing.

- Descriptors: Uses broad descriptors for each score level to capture the general characteristics of the writing.

Example Holistic Scoring Rubric

| Score | Description |
|---|---|
| 5 | Exceptionally clear, engaging, and well-organized writing. Demonstrates excellent control of language, grammar, and style. |
| 4 | Clear and well-organized writing. Minor errors do not detract from the overall quality. Demonstrates good control of language and style. |
| 3 | Satisfactory writing with some organizational issues. Contains a few errors that may distract but do not impede understanding. |
| 2 | Basic writing that lacks organization and contains several errors. Demonstrates limited control of language and style. |
| 1 | Unclear and poorly organized writing. Contains numerous errors that impede understanding. Demonstrates poor control of language and style. |

Advantages of Holistic Scoring Rubrics

- Efficiency: Faster to use because it involves a single overall judgment rather than multiple criteria.

- Flexibility: Allows for a more intuitive assessment of the writing’s overall impact and effectiveness.

- Comprehensiveness: Captures the overall quality of writing, considering all elements together.

Disadvantages of Holistic Scoring Rubrics

- Less Detailed Feedback: Provides a general score without specific feedback on individual aspects of writing.

- Subjectivity: Can be more subjective, as it relies on the assessor’s overall impression rather than specific criteria.

- Limited Diagnostic Use: Less useful for identifying specific areas of strength and weakness for instructional purposes.

When to Use Holistic Scoring Rubrics

- Quick Assessments: When a quick, overall evaluation is needed.

- Standardized Testing: Often used in standardized testing scenarios where consistency and efficiency are priorities.

- Initial Impressions: Useful for providing an initial overall impression before more detailed analysis.

Free Writing Rubric Templates

Feel free to use the following writing rubric templates.

You can easily copy and paste them into a Word document. Please do credit this website on any written, printed, or published use.

Otherwise, go wild.

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Story Elements | Well-developed, engaging, and clear plot, characters, and setting. | Developed plot, characters, and setting with some details missing. | Basic plot, characters, and setting; lacks details. | Underdeveloped plot, characters, and setting. |
| Creativity | Highly creative and original. | Creative with some originality. | Some creativity but lacks originality. | Lacks creativity and originality. |
| Grammar | No grammatical errors. | Few minor grammatical errors. | Several grammatical errors. | Numerous grammatical errors. |
| Organization | Clear and logical structure. | Mostly clear structure. | Somewhat clear structure. | Lacks clear structure. |
| Language Use | Rich, varied, and appropriate language. | Varied and appropriate language. | Limited language variety. | Basic or inappropriate language. |

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Argument | Strong, clear, and convincing argument. | Convincing argument with minor gaps. | Basic argument; lacks strong support. | Weak or unsupported argument. |
| Evidence | Strong, relevant, and well-integrated evidence. | Relevant evidence but not strong. | Some relevant evidence, but weak. | Irrelevant or missing evidence. |
| Grammar | No grammatical errors. | Few minor grammatical errors. | Several grammatical errors. | Numerous grammatical errors. |
| Organization | Clear and logical structure. | Mostly clear structure. | Somewhat clear structure. | Lacks clear structure. |
| Language Use | Persuasive and engaging language. | Engaging language. | Somewhat engaging language. | Not engaging language. |

Expository Writing Rubric

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Content | Thorough, accurate, and insightful content. | Accurate content with some details missing. | Basic content; lacks depth. | Incomplete or inaccurate content. |
| Clarity | Clear and concise explanations. | Mostly clear explanations. | Somewhat clear explanations. | Unclear explanations. |
| Grammar | No grammatical errors. | Few minor grammatical errors. | Several grammatical errors. | Numerous grammatical errors. |
| Organization | Clear and logical structure. | Mostly clear structure. | Somewhat clear structure. | Lacks clear structure. |
| Language Use | Precise and appropriate language. | Appropriate language. | Limited language variety. | Basic or inappropriate language. |

Descriptive Writing Rubric

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Imagery | Vivid and detailed imagery that engages the senses. | Detailed imagery with minor gaps. | Basic imagery; lacks vivid details. | Little to no imagery. |
| Creativity | Highly creative and original descriptions. | Creative with some originality. | Some creativity but lacks originality. | Lacks creativity and originality. |
| Grammar | No grammatical errors. | Few minor grammatical errors. | Several grammatical errors. | Numerous grammatical errors. |
| Organization | Clear and logical structure. | Mostly clear structure. | Somewhat clear structure. | Lacks clear structure. |
| Language Use | Rich, varied, and appropriate language. | Varied and appropriate language. | Limited language variety. | Basic or inappropriate language. |

Analytical Writing Rubric

| Criteria | 4 (Excellent) | 3 (Good) | 2 (Fair) | 1 (Poor) |
|---|---|---|---|---|
| Analysis | Insightful, thorough, and well-supported analysis. | Good analysis with some depth. | Basic analysis; lacks depth. | Weak or unsupported analysis. |
| Evidence | Strong, relevant, and well-integrated evidence. | Relevant evidence but not strong. | Some relevant evidence, but weak. | Irrelevant or missing evidence. |
| Grammar | No grammatical errors. | Few minor grammatical errors. | Several grammatical errors. | Numerous grammatical errors. |
| Organization | Clear and logical structure. | Mostly clear structure. | Somewhat clear structure. | Lacks clear structure. |
| Language Use | Precise and appropriate language. | Appropriate language. | Limited language variety. | Basic or inappropriate language. |


Center for Teaching Innovation


Using rubrics

A rubric is a type of scoring guide that assesses and articulates specific components and expectations for an assignment. Rubrics can be used for a variety of assignments: research papers, group projects, portfolios, and presentations.

Why use rubrics?

Rubrics help instructors:

- Assess assignments consistently from student to student.

- Save time in grading, both short-term and long-term.

- Give timely, effective feedback and promote student learning in a sustainable way.

- Clarify expectations and components of an assignment for both students and course teaching assistants (TAs).

- Refine teaching methods by evaluating rubric results.

Rubrics help students:

- Understand expectations and components of an assignment.

- Become more aware of their learning process and progress.

- Improve work through timely and detailed feedback.

Considerations for using rubrics

When developing rubrics consider the following:

- Although it takes time to build a rubric, time will be saved in the long run as grading and providing feedback on student work will become more streamlined.

- A rubric can be a fillable PDF that can easily be emailed to students.

- They can be used for oral presentations.

- They are a great tool to evaluate teamwork and individual contribution to group tasks.

- Rubrics facilitate peer-review by setting evaluation standards. Have students use the rubric to provide peer assessment on various drafts.

- Students can use them for self-assessment to improve personal performance and learning. Encourage students to use the rubrics to assess their own work.

- Motivate students to improve their work by letting them resubmit it with the rubric feedback incorporated.

Getting Started with Rubrics

- Start small by creating one rubric for one assignment in a semester.

- Ask colleagues if they have developed rubrics for similar assignments, or adapt rubrics that are available online. For example, the AAC&U has rubrics for topics such as written and oral communication, critical thinking, and creative thinking. RubiStar helps you develop your rubric based on templates.

- Examine an assignment for your course. Outline the elements or critical attributes to be evaluated (these attributes must be objectively measurable).

- Create an evaluative range for performance quality under each element; for instance, “excellent,” “good,” “unsatisfactory.”

- Avoid using subjective or vague criteria such as “interesting” or “creative.” Instead, outline objective indicators that would fall under these categories.

- The criteria must clearly differentiate one performance level from another.

- Assign a numerical scale to each level.

- Give a draft of the rubric to your colleagues and/or TAs for feedback.

- Train students to use your rubric and solicit feedback. This will help you judge whether the rubric is clear to them and will identify any weaknesses.

- Rework the rubric based on the feedback.


Rubrics are a set of criteria to evaluate performance on an assignment or assessment. Rubrics can communicate expectations regarding the quality of work to students and provide a standardized framework for instructors to assess work. Rubrics can be used for both formative and summative assessment. They are also crucial in encouraging self-assessment of work and structuring peer-assessments.

Why use rubrics?

Rubrics are an important tool to assess learning in an equitable and just manner. This is because they enable:

- A common set of standards and criteria to be uniformly applied, which can mitigate bias

- Transparency regarding the standards and criteria on which students are evaluated

- Efficient grading with timely and actionable feedback

- Identifying areas in which students need additional support and guidance

- The use of objective, criterion-referenced metrics for evaluation

Some instructors may be reluctant to provide a rubric for grading assessments, perceiving that it stifles student creativity (Haugnes & Russell, 2018). However, sharing the purpose of an assessment and the criteria for success in the form of a rubric, along with relevant examples, has been shown to improve the success of BIPOC, multiracial, and first-generation students in particular (Jonsson, 2014; Winkelmes, 2016). Improved success in assessments is generally associated with an increased sense of belonging, which in turn leads to higher student retention and more equitable outcomes in the classroom (Calkins & Winkelmes, 2018; Weisz et al., 2023). Without a rubric, students may have to guess the criteria on which they will be evaluated. When students have to guess what the expectations are, it may unfairly disadvantage students who are first-generation, BIPOC, international, or who otherwise have not been exposed to the cultural norms that have dominated higher-education institutions in the U.S. (Shapiro et al., 2023). Moreover, in such cases, criteria may be applied inconsistently across students, leading to biased grades.

Steps for Creating a Rubric

Clearly state the purpose of the assessment, which topic(s) learners are being tested on, the type of assessment (e.g., a presentation, essay, group project), the skills they are being tested on (e.g., writing, comprehension, presentation, collaboration), and the goal of the assessment for instructors (e.g., gauging formative or summative understanding of the topic).

Determine the specific criteria or dimensions to assess in the assessment. These criteria should align with the learning objectives or outcomes to be evaluated. These criteria typically form the rows in a rubric grid and describe the skills, knowledge, or behavior to be demonstrated. The set of criteria may include, for example, the idea/content, quality of arguments, organization, grammar, citations and/or creativity in writing. These criteria may form separate rows or be compiled in a single row depending on the type of rubric.

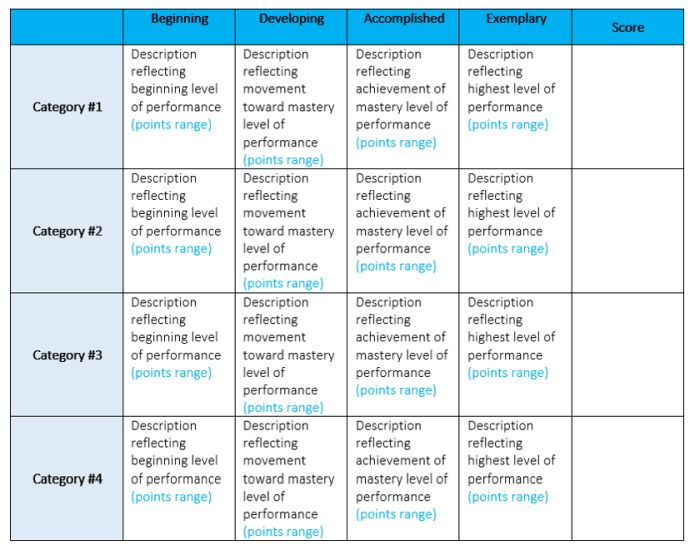

(See row headers of Figure 1.)

Create a scale of performance levels that describe the degree of proficiency attained for each criterion. The scale typically has 4 to 5 levels (although there may be fewer levels depending on the type of rubric used). The levels should also have meaningful labels (e.g., not meeting expectations, approaching expectations, meeting expectations, exceeding expectations). When labeling levels of performance, use inclusive language that can foster a growth mindset among students, especially when work may otherwise be deemed not to meet the mark. Some examples include “Does not yet meet expectations,” “Considerable room for improvement,” “Progressing,” “Approaching,” “Emerging,” and “Needs more work,” instead of terms like “Unacceptable,” “Fails,” “Poor,” or “Below Average.”

(See column headers of Figure 1.)

Develop a clear and concise descriptor for each combination of criterion and performance level. These descriptors should provide examples or explanations of what constitutes each level of performance for each criterion. Typically, instructors should start by describing the highest and lowest levels of performance for a criterion and then describe the intermediate levels. It is important to keep the language uniform across all columns, e.g., use aligned syntax and wording in each column for a given criterion.

(See cells of Figure 1.)
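One way to confirm that every combination of criterion and performance level has a descriptor is to store the rubric grid as a nested mapping and look for empty cells. This is a minimal sketch, assuming level labels like the growth-mindset ones suggested above; the criteria, the descriptors, and the `missing_cells` helper are hypothetical.

```python
# Minimal sketch of an analytic rubric grid: criteria are rows, performance
# levels are columns, and each cell holds a descriptor. The criteria,
# labels, and descriptors below are hypothetical placeholders.

LEVELS = [
    "Exceeds expectations",
    "Meets expectations",
    "Approaching expectations",
    "Does not yet meet expectations",
]

rubric = {
    "Content": {
        "Exceeds expectations": "Accurate, thorough, and insightful content.",
        "Meets expectations": "Accurate content with minor omissions.",
        "Approaching expectations": "Partly accurate content; lacks depth.",
        "Does not yet meet expectations": "Content is incomplete or inaccurate.",
    },
    "Organization": {
        "Exceeds expectations": "Clear, logical structure throughout.",
        "Meets expectations": "Mostly clear structure.",
        "Approaching expectations": "Structure is clear in places.",
        # The lowest-level descriptor has not been written yet.
    },
}


def missing_cells(grid: dict[str, dict[str, str]], levels: list[str]) -> list[tuple[str, str]]:
    """List every (criterion, level) cell that has no non-empty descriptor."""
    gaps = []
    for criterion, cells in grid.items():
        for level in levels:
            if not cells.get(level, "").strip():
                gaps.append((criterion, level))
    return gaps


if __name__ == "__main__":
    for criterion, level in missing_cells(rubric, LEVELS):
        print(f"Missing descriptor: {criterion} / {level}")
```

Keeping the level labels in a single list also makes it easy to reuse the same wording across every row, in line with the advice to keep language uniform across columns.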

It is important to consider how each criterion is weighted, so that each criterion's weight reflects the importance of the learning objectives being tested. For example, if the primary goal of a research proposal is to test mastery of content and application of knowledge, these criteria should be weighted more heavily than other criteria (e.g., grammar, style of presentation). This can be done by using a different scoring system for each criterion (e.g., a scale of 8-6-4-2 points across the levels of performance for higher-weight criteria and 4-3-2-1 points for lower-weight criteria). Further, the number of points awarded across levels of performance should be evenly spaced (e.g., 10-8-6-4 instead of 10-6-3-1). Finally, if there is a letter grade associated with a particular assessment, consider how it relates to scores. For example, instead of having students receive an A only if they received the highest level of performance on each criterion, consider assigning an A grade to a range of scores (e.g., 28-30 total points) or a combination of levels of performance (e.g., exceeds expectations on higher-weight criteria and meets expectations on other criteria).

(See the numerical values in the column headers of Figure 1.)
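The weighting scheme described above can be sketched in a few lines. This is a minimal illustration, assuming two higher-weight criteria on the 8-6-4-2 scale and two lower-weight criteria on the 4-3-2-1 scale (24 points maximum). The criterion names and letter-grade cutoffs are hypothetical, chosen so that “exceeds expectations” on the higher-weight criteria plus “meets expectations” elsewhere earns an A.

```python
# Minimal sketch of weighted analytic scoring. Assumptions (hypothetical):
# two higher-weight criteria use an 8-6-4-2 scale, two lower-weight criteria
# use a 4-3-2-1 scale, and the letter-grade cutoffs are illustrative only.

LEVELS = ["Exceeds", "Meets", "Approaching", "Does not yet meet"]  # highest first

HIGH_WEIGHT = [8, 6, 4, 2]  # points per level, aligned with LEVELS
LOW_WEIGHT = [4, 3, 2, 1]

SCALES = {
    "Content mastery": HIGH_WEIGHT,
    "Application of knowledge": HIGH_WEIGHT,
    "Grammar": LOW_WEIGHT,
    "Style of presentation": LOW_WEIGHT,
}

# (minimum total, letter), checked from the top down, so each letter
# covers a range of totals instead of requiring top marks everywhere.
GRADE_BANDS = [(22, "A"), (18, "B"), (14, "C"), (10, "D"), (0, "F")]


def evenly_spaced(scale: list[int]) -> bool:
    """True if every step between adjacent levels is the same size."""
    steps = {higher - lower for higher, lower in zip(scale, scale[1:])}
    return len(steps) == 1


def total_points(levels: dict[str, str]) -> int:
    """Sum the points for the level label awarded on each criterion."""
    return sum(
        scale[LEVELS.index(levels[criterion])]
        for criterion, scale in SCALES.items()
    )


def letter_grade(total: int) -> str:
    """Map a total to the first band whose minimum it reaches."""
    for minimum, letter in GRADE_BANDS:
        if total >= minimum:
            return letter
    raise ValueError(f"Total {total} is below every grade band")


if __name__ == "__main__":
    assert all(evenly_spaced(scale) for scale in SCALES.values())
    work = {
        "Content mastery": "Exceeds",
        "Application of knowledge": "Exceeds",
        "Grammar": "Meets",
        "Style of presentation": "Meets",
    }
    total = total_points(work)
    print(total, letter_grade(total))  # 22 A
```

Storing the points for each weight as one list keeps the even-spacing check trivial. Switching the higher-weight criteria to a 10-8-6-4 scale would only change `HIGH_WEIGHT` (and the hypothetical cutoffs).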

Figure 1: Graphic describing the five basic elements of a rubric

Note: Consider using a template rubric that can be used to evaluate similar activities in the classroom, to avoid the fatigue of developing multiple rubrics. Tools such as RubiStar or iRubric provide suggested wording for each criterion depending on the type of assessment. Additionally, the format above can be used to build rubrics directly in common grading tools, such as Canvas or the grid view of rubrics in Gradescope. Alternatively, tables in a word processor or spreadsheet may also be used to build a rubric. You may also adapt the example rubrics provided below to the specific learning goals for the assessment, using the blank rubric templates provided for each type of rubric. Watch the linked video for a quick introduction to designing a rubric. Word document (docx) files linked below will automatically download to your device, whereas PDF files will open in a new tab.

Types of Rubrics

In analytic rubrics, one specifies at least two criteria and provides a separate score for each criterion. The steps outlined above for creating a rubric are typical for an analytic rubric. Analytic rubrics are used to provide detailed feedback to students and help identify strengths as well as particular areas in need of improvement. These can be particularly useful when providing formative feedback to students, for student peer assessment and self-assessments, or for project-based summative assessments that evaluate student learning across multiple criteria. You may use a blank analytic rubric template (docx) or adapt an existing sample of an analytic rubric (pdf).

Fig. 2: Graphic describing a sample analytic rubric (adapted from George Mason University, 2013)

Developmental rubrics are a subset of analytic rubrics that are typically used to assess student performance and engagement during a learning period rather than the end product. Such rubrics are typically used to assess soft skills and behaviors that are less tangible (e.g., intercultural maturity, empathy, collaboration skills). These rubrics are useful in assessing the extent to which students develop a particular skill, ability, or value in experiential learning programs. They are grounded in the theory of development (King, 2005). Examples include an intercultural knowledge and competence rubric (docx) and a global learning rubric (docx).

Holistic rubrics evaluate all criteria on one scale, providing a single score that gives an overall impression of a student’s performance on an assessment. These rubrics also emphasize the overall quality of a student’s work rather than delineating its shortfalls. However, a limitation of holistic rubrics is that they are not useful for providing specific, nuanced feedback or for identifying areas of improvement. Thus, they might be useful when grading summative assessments for which students have previously received detailed feedback using analytic or single-point rubrics. They may also be used to provide quick formative feedback on smaller assignments that test no more than two or three criteria at once. Try using our blank holistic rubric template (docx) or adapt an existing sample of a holistic rubric (pdf).

Fig. 3: Graphic describing a sample holistic rubric (adapted from Teaching Commons, DePaul University)

Checklist rubrics contain only two levels of performance (e.g., yes/no, present/absent) across a longer list of criteria (typically more than five). Checklist rubrics have the advantage of providing a quick assessment, since each criterion is simply either met or not met. Consequently, they are preferable when initiating self- or peer-assessments of learning: they simplify evaluations and make them more objective, and because each criterion can elicit only one of two responses, grading is uniform and quick. For similar reasons, such rubrics are useful for faculty in providing quick formative feedback, since they immediately highlight the specific criteria to improve on. Such rubrics are also used in grading summative assessments in courses that use alternative grading systems such as specifications grading, contract grading, or credit/no credit grading, wherein a minimum threshold of performance has to be met for the assessment. Having said that, developing checklist rubrics from existing analytic rubrics may require considerable upfront investment, given that criteria have to be phrased in a way that can only elicit binary responses. Here is a link to the checklist rubric template (docx).
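A checklist rubric used for credit/no credit grading reduces to a set of met/not-met checks plus a threshold rule. The sketch below is a minimal illustration. The criteria, the `required` flag, and the threshold (every required item met and at least five of six items met overall) are hypothetical assumptions, not a standard.

```python
# Minimal sketch of a checklist rubric with a credit/no-credit threshold.
# The criteria and the threshold rule are hypothetical examples.
from dataclasses import dataclass


@dataclass(frozen=True)
class ChecklistItem:
    criterion: str
    required: bool = False  # assumed: some items must be met to earn credit


CHECKLIST = [
    ChecklistItem("States a clear research question", required=True),
    ChecklistItem("Cites at least three peer-reviewed sources", required=True),
    ChecklistItem("Includes a methods section"),
    ChecklistItem("Labels all figures and tables"),
    ChecklistItem("Stays within the page limit"),
    ChecklistItem("Uses the required citation style"),
]

MIN_ITEMS_MET = 5  # hypothetical minimum threshold


def evaluate(met: set[str]) -> tuple[bool, list[str]]:
    """Return (earns_credit, criteria not yet met) for one submission."""
    unmet = [item.criterion for item in CHECKLIST if item.criterion not in met]
    required_met = all(item.criterion in met for item in CHECKLIST if item.required)
    enough_met = len(CHECKLIST) - len(unmet) >= MIN_ITEMS_MET
    return required_met and enough_met, unmet


if __name__ == "__main__":
    submission = {item.criterion for item in CHECKLIST} - {"Stays within the page limit"}
    credit, to_improve = evaluate(submission)
    print("Credit" if credit else "No credit")
    for criterion in to_improve:
        print(f"Not yet met: {criterion}")
```

Returning the unmet criteria alongside the credit decision reflects the point above that a checklist immediately highlights the specific criteria to improve on.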

Fig. 4: Graphic describing a sample checklist rubric

A single point rubric is a modified version of a checklist style rubric, in that it specifies a single column of criteria. However, rather than only indicating whether expectations are met or not, as happens in a checklist rubric, a single point rubric allows instructors to specify ways in which the work exceeds or does not meet expectations. Here the criteria to be tested are laid out in a central column describing the average expectation for the assignment. Instructors indicate areas for improvement to the left of the criteria, whereas areas of strength in student performance are indicated to the right. These types of rubrics provide flexibility in scoring and are typically used in courses with alternative grading systems such as ungrading or contract grading. However, they do require instructors to provide detailed feedback for each student, which can be infeasible for assessments in large classes. Here is a link to the single point rubric template (docx).

Fig. 5: Graphic describing a single point rubric (adapted from Teaching Commons, DePaul University)

Best Practices for Designing and Implementing Rubrics

When designing the rubric format, descriptors and criteria should be presented in a way that is compatible with screen readers and reading assistive technology. For example, avoid using only color, jargon, or complex terminology to convey information. In case you do use color, pictures or graphics, try providing alternative formats for rubrics, such as plain text documents. Explore resources from the CU Digital Accessibility Office to learn more.

Co-creating rubrics can help students engage in higher-order thinking skills such as analysis and evaluation. Further, it allows students to take ownership of their own learning by determining the criteria they aspire to meet in their work. For graduate classes or upper-level students, one way of doing this may be to provide the learning outcomes of the project and let students develop the rubric on their own. However, students in introductory classes may need more scaffolding, such as being given a draft with room for modification (Stevens & Levi, 2013). Watch the linked video for tips on co-creating rubrics with students. Further, involving teaching assistants in designing a rubric can help in getting feedback on expectations for an assessment prior to implementing and norming a rubric.

When first designing a rubric, it is important to compare grades awarded for the same assessment by multiple graders to make sure the criteria are applied uniformly and reliably to the same level of performance. Further, ensure that the rubric can adequately distinguish between levels of performance in student work. Such a norming protocol is also particularly important at the start of any course in which multiple graders use the same rubric to grade an assessment (e.g., recitation sections, lab sections, teaching team). Here, instructors may select a subset of assignments that all graders evaluate using the same rubric, followed by a discussion to identify any discrepancies in how criteria were applied and ways to address them. Such strategies can make rubrics more reliable, effective, and clear.
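The norming step can be supported with a simple tally of how often graders agree on the shared subset of assignments. This is a minimal sketch, assuming each grader records one level (1-4) per submission on a single criterion. The grader names, the scores, and the rule that flags submissions whose levels differ by more than one are hypothetical. Percent agreement is used here for simplicity; chance-corrected statistics such as Cohen's kappa are a common alternative.

```python
# Minimal sketch of a norming check on a shared subset of submissions.
# Grader names, scores, and the flagging rule are hypothetical.
from itertools import combinations

# scores[grader][submission] = level awarded on one criterion (4 = highest)
scores = {
    "Grader A": {"S1": 4, "S2": 3, "S3": 2, "S4": 3},
    "Grader B": {"S1": 4, "S2": 2, "S3": 2, "S4": 3},
    "Grader C": {"S1": 3, "S2": 3, "S3": 4, "S4": 3},
}


def percent_agreement(a: dict[str, int], b: dict[str, int]) -> float:
    """Share of submissions graded by both where the levels match exactly."""
    shared = a.keys() & b.keys()
    if not shared:
        raise ValueError("The two graders have no submissions in common")
    matches = sum(a[s] == b[s] for s in shared)
    return 100 * matches / len(shared)


def flag_for_discussion(all_scores: dict[str, dict[str, int]], max_spread: int = 1) -> list[str]:
    """Submissions whose highest and lowest levels differ by more than max_spread."""
    submissions = sorted(set().union(*(s.keys() for s in all_scores.values())))
    flagged = []
    for sub in submissions:
        levels = [grades[sub] for grades in all_scores.values() if sub in grades]
        if max(levels) - min(levels) > max_spread:
            flagged.append(sub)
    return flagged


if __name__ == "__main__":
    # Pairwise exact agreement for every pair of graders.
    for (name_a, a), (name_b, b) in combinations(scores.items(), 2):
        print(f"{name_a} vs {name_b}: {percent_agreement(a, b):.0f}% exact agreement")
    print("Discuss:", flag_for_discussion(scores))
```

The flagged submissions (here, only S3) are natural starting points for the follow-up discussion of how each grader interpreted the criteria.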

Sharing the rubric with students prior to an assessment can help familiarize students with an instructor’s expectations. This can help students master their learning outcomes by guiding their work in the appropriate direction and increase student motivation. Further, providing the rubric to students can help encourage metacognition and ability to self-assess learning.

Sample Rubrics

Below are links to rubric templates designed by a team of experts assembled by the Association of American Colleges and Universities (AAC&U) to assess 16 major learning goals, which are part of the Valid Assessment of Learning in Undergraduate Education (VALUE) program. All of these examples are analytic rubrics with detailed criteria for specific skills. Because any given assessment typically tests multiple skills, instructors are encouraged to build their own rubric by combining criteria drawn from the rubrics linked below.

- Civic knowledge and engagement: local and global

- Creative thinking

- Critical thinking

- Ethical reasoning

- Foundations and skills for lifelong learning

- Information literacy

- Integrative and applied learning

- Intercultural knowledge and competence

- Inquiry and analysis

- Oral communication

- Problem solving

- Quantitative literacy

- Written communication

Note: Clicking the links above automatically downloads each rubric to your device in Microsoft Word format. These links were created and are hosted by Kansas State University. Additional information about the VALUE rubrics is available on the AAC&U homepage.

Below are links to sample rubrics developed for different types of assessments. These rubrics follow the analytic rubric template unless noted otherwise. They can be adapted, using the blank template provided, into other types of rubrics (e.g., checklist, holistic, or single-point rubrics) to suit the grading system and the goal of the assessment (e.g., formative or summative).

- Oral presentations

- Painting Portfolio (single-point rubric)

- Research Paper

- Video Storyboard

Additional information:

Office of Assessment and Curriculum Support. (n.d.). Creating and using rubrics. University of Hawai’i at Mānoa.

Calkins, C., & Winkelmes, M. A. (2018). A teaching method that boosts UNLV student retention. UNLV Best Teaching Practices Expo, 3.

Fraile, J., Panadero, E., & Pardo, R. (2017). Co-creating rubrics: The effects on self-regulated learning, self-efficacy and performance of establishing assessment criteria with students. Studies in Educational Evaluation, 53, 69–76.

Haugnes, N., & Russell, J. L. (2016). Don’t box me in: Rubrics for artists and designers. To Improve the Academy, 35(2), 249–283.

Jonsson, A. (2014). Rubrics as a way of providing transparency in assessment. Assessment & Evaluation in Higher Education, 39(7), 840–852.

McCartin, L. (2022, February 1). Rubrics! An equity-minded practice. University of Northern Colorado.

Shapiro, S., Farrelly, R., & Tomaš, Z. (2023). Chapter 4: Effective and equitable assignments and assessments. In Fostering international student success in higher education (2nd ed., pp. 61–87). TESOL Press.

Stevens, D. D., & Levi, A. J. (2013). Introduction to rubrics: An assessment tool to save grading time, convey effective feedback, and promote student learning (2nd ed.). Sterling, VA: Stylus.

Teaching Commons. (n.d.). Types of rubrics. DePaul University.

Teaching Resources. (n.d.). Rubric best practices, examples, and templates. NC State University.

Winkelmes, M., Bernacki, M., Butler, J., Zochowski, M., Golanics, J., & Weavil, K. H. (2016). A teaching intervention that increases underserved college students’ success. Peer Review, 18(1/2), 31–36.

Weisz, C., Richard, D., Oleson, K., Winkelmes, M. A., Powley, C., Sadik, A., & Stone, B. (in progress, 2023). Transparency, confidence, belonging and skill development among 400 community college students in the state of Washington.

Association of American Colleges and Universities. (2009). Valid Assessment of Learning in Undergraduate Education (VALUE).

Canvas Community. (2021, August 24). How do I add a rubric in a course? Canvas LMS Community.

Center for Teaching & Learning. (2021, March 3). Overview of rubrics. University of Colorado Boulder.

Center for Teaching & Learning. (2021, March 18). Best practices to co-create rubrics with students. University of Colorado Boulder.

Chase, D., Ferguson, J. L., & Hoey, J. J. (2014). Assessment in creative disciplines: Quantifying and qualifying the aesthetic. Common Ground Publishing.

Feldman, J. (2018). Grading for equity: What it is, why it matters, and how it can transform schools and classrooms. Corwin Press, CA.

Gradescope. (n.d.). Instructor: Assignment - Grade submissions. Gradescope Help Center.

Henning, G., Baker, G., Jankowski, N., Lundquist, A., & Montenegro, E. (Eds.). (2022). Reframing assessment to center equity. Stylus Publishing.

King, P. M., & Baxter Magolda, M. B. (2005). A developmental model of intercultural maturity. Journal of College Student Development, 46(2), 571–592.

Selke, M. J. G. (2013). Rubric assessment goes to college: Objective, comprehensive evaluation of student work. Lanham, MD: Rowman & Littlefield.

The Institute for Habits of Mind. (2023, January 9). Creativity rubrics. The Institute for Habits of Mind.

How to Use Rubrics

A rubric is a document that describes the criteria by which students’ assignments are graded. Rubrics can be helpful for:

- Making grading faster and more consistent (reducing potential bias).

- Communicating your expectations for an assignment to students before they begin.

Moreover, for assignments whose criteria are more subjective, the process of creating a rubric and articulating what it looks like to succeed at an assignment provides an opportunity to check for alignment with the intended learning outcomes and modify the assignment prompt, as needed.

Why rubrics?

Rubrics are best for assignments or projects that require evaluation on multiple dimensions. Creating a rubric makes the instructor’s standards explicit to both students and other teaching staff for the class, showing students how to meet expectations.

Additionally, the more comprehensive a rubric is, the more it streamlines grading: students get informative feedback about their performance from the rubric itself, even if they receive fewer individualized comments. Grading can also be more standardized and efficient across graders.

Finally, rubrics allow for reflection, as the instructor has to think about their standards and outcomes for the students. Using rubrics can help with self-directed learning in students as well, especially if rubrics are used to review students’ own work or their peers’, or if students are involved in creating the rubric.

How to design a rubric

1. Consider the desired learning outcomes

What learning outcomes is this assignment reinforcing and assessing? If the learning outcome seems “fuzzy,” iterate on the outcome by thinking about the expected student work product. This may help you more clearly articulate the learning outcome in a way that is measurable.

2. Define criteria

What does a successful assignment submission look like? As described by Allen and Tanner (2006), it can help to develop an initial list of categories in which students should demonstrate proficiency by completing the assignment. These categories should align with the intended learning outcomes you identified in Step 1, although they may be more granular in some cases. For example, if the task assesses students’ ability to formulate an effective communication strategy, what components of their communication strategy will you be looking for? Talking with colleagues or looking at existing rubrics for similar tasks may give you ideas for categories to evaluate.

If you have assigned this task before and have samples of student work, it can help to create a qualitative observation guide. Linda Suskie describes this approach in her book Assessing Student Learning, where she suggests thinking about what made you give one assignment an A and another a C, as well as taking notes while grading and looking for common patterns. Themes you comment on repeatedly may reveal your goals and expectations for students. An example of an observation guide used to take notes on predetermined areas of an assignment is shown here.

In summary, consider the following list of questions when defining criteria for a rubric (O’Reilly and Cyr, 2006):

- What do you want students to learn from the task?

- How will students demonstrate that they have learned?

- What knowledge, skills, and behaviors are required for the task?

- What steps are required for the task?

- What are the characteristics of the final product?

After developing an initial list of criteria, prioritize the most important skills you want to target, and either eliminate unessential criteria or combine similar skills into one group. Most rubrics have between 3 and 8 criteria. Overly long rubrics are difficult to grade with and make it hard for students to identify the key skills the assignment asks them to demonstrate.

3. Create the rating scale

According to Suskie, you will want at least 3 performance levels: at minimum, one for adequate and one for inadequate performance, plus an exemplary level to motivate students to strive for even better work. Rubrics often contain 5 levels, adding one level between adequate and exemplary and another between adequate and inadequate. Usually no more than 5 levels are needed, because too many rating levels make it hard to consistently decide which rating an assignment deserves (such as a 6 versus a 7 out of 10). Suskie also suggests giving each level a name to make clear which level represents the minimum acceptable performance. Labels will vary by assignment and subject, but some examples are:

- Exceeds standard, meets standard, approaching standard, below standard

- Complete evidence, partial evidence, minimal evidence, no evidence

4. Fill in descriptors

Fill in descriptors for each criterion at each performance level. Expand on the list of criteria you developed in Step 2. Begin to write full descriptions, thinking about what an exemplary example would look like for students to strive towards. Avoid vague terms like “good” and make sure to use explicit, concrete terms to describe what would make a criterion good. For instance, a criterion called “organization and structure” would be more descriptive than “writing quality.” Describe measurable behavior and use parallel language for clarity; the wording for each criterion should be very similar, except for the degree to which standards are met. For example, in a sample rubric from Chapter 9 of Suskie’s book, the criterion of “persuasiveness” has the following descriptors:

- Well Done (5): Motivating questions and advance organizers convey the main idea. Information is accurate.

- Satisfactory (3-4): Includes persuasive information.

- Needs Improvement (1-2): Includes persuasive information with few facts.

- Incomplete (0): Information is incomplete, out of date, or incorrect.

These sample descriptors generally have the same sentence structure that provides consistent language across performance levels and shows the degree to which each standard is met.
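Because the levels in this example also carry score ranges (5, 3–4, 1–2, and 0), it is worth checking that every possible score falls into exactly one level. The following is a minimal Python sketch, assuming a criterion's levels are stored as (low, high, label) tuples; that representation and the names `check_bands` and `level_for` are hypothetical choices for illustration, not something the source prescribes.

```python
from typing import List, Tuple

# Score bands for the "persuasiveness" criterion in the example above,
# stored as (lowest score, highest score, level label), inclusive.
PERSUASIVENESS_LEVELS: List[Tuple[int, int, str]] = [
    (5, 5, "Well Done"),
    (3, 4, "Satisfactory"),
    (1, 2, "Needs Improvement"),
    (0, 0, "Incomplete"),
]


def check_bands(levels: List[Tuple[int, int, str]]) -> None:
    """Raise ValueError if adjacent bands overlap or leave a gap, so that
    every score in the rubric's range maps to exactly one level."""
    ordered = sorted(levels)
    for (_, hi_a, name_a), (lo_b, _, name_b) in zip(ordered, ordered[1:]):
        if lo_b != hi_a + 1:
            raise ValueError(f"gap or overlap between {name_a!r} and {name_b!r}")


def level_for(score: int, levels: List[Tuple[int, int, str]]) -> str:
    """Return the label of the band that contains `score`."""
    for low, high, label in levels:
        if low <= score <= high:
            return label
    raise ValueError(f"score {score} is outside the rubric's range")


check_bands(PERSUASIVENESS_LEVELS)
print(level_for(4, PERSUASIVENESS_LEVELS))  # Satisfactory
```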

5. Test your rubric

Test your rubric on a range of student work to see whether it is realistic. You may also consider leaving room for aspects of the assignment such as effort, originality, and creativity, to encourage students to go beyond the rubric. If multiple instructors will be grading, it is important to calibrate the scoring by having all graders use the rubric to grade a selected set of student work and then discuss any differences in their scores. This process helps build consistency in grading and makes grading more valid and reliable.
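The calibration step does not prescribe a statistic, but one common way to summarize it is percent exact agreement (both graders chose the same level) and percent adjacent agreement (ratings within one level of each other). A minimal Python sketch, assuming two graders rated the same submissions on a hypothetical 4-level scale; the function name `agreement_rates` and the sample ratings are illustrative only:

```python
from typing import Sequence, Tuple


def agreement_rates(a: Sequence[int], b: Sequence[int]) -> Tuple[float, float]:
    """Return (exact, adjacent) agreement between two graders' level ratings
    for the same ordered list of submissions. 'Adjacent' counts ratings that
    differ by at most one level, so it includes exact matches."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty rating lists")
    exact = sum(x == y for x, y in zip(a, b)) / len(a)
    adjacent = sum(abs(x - y) <= 1 for x, y in zip(a, b)) / len(a)
    return exact, adjacent


# Hypothetical ratings: 1 = below standard, 4 = exceeds standard.
grader_1 = [4, 3, 2, 3, 1, 4, 2, 3]
grader_2 = [4, 2, 2, 3, 3, 4, 2, 4]
exact, adjacent = agreement_rates(grader_1, grader_2)
print(f"exact: {exact:.1%}, adjacent: {adjacent:.1%}")
```

Percent agreement does not correct for agreement expected by chance; statistics such as Cohen's kappa do, and may be worth using for larger calibration sets.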

Types of Rubrics