Data Analysis with Python: Zero to Pandas

"Data Analysis with Python: Zero to Pandas" is a practical and beginner-friendly introduction to data analysis covering the basics of Python, Numpy, Pandas, Data Visualization, and Exploratory Data Analysis.

- Watch hands-on coding-focused video tutorials

- Practice coding with cloud Jupyter notebooks

- Build an end-to-end real-world course project

- Earn a verified certificate of accomplishment

There are no prerequisites for this course.

Lesson 1 - Introduction to Programming with Python

- First steps with Python & Jupyter notebooks

- Arithmetic, conditional & logical operators in Python

- Quick tour with Variables and common data types

Lesson 2 - Next Steps with Python

- Branching with if, elif, and else

- Iteration with while and for loops

- Write reusable code with Functions

- Scope of variables and exceptions

Assignment 1 - Python Basics Practice

- Solve word problems using variables & arithmetic operations

- Manipulate data types using methods & operators

- Use branching and iterations to translate ideas into code

- Explore the documentation and get help from the community

Lesson 3 - Numerical Computing with Numpy

- Going from Python lists to Numpy arrays

- Working with multi-dimensional arrays

- Array operations, slicing and broadcasting

- Working with CSV data files

Assignment 2 - Numpy Array Operations

- Explore the Numpy documentation website

- Demonstrate the usage of 5 Numpy array operations

- Publish a Jupyter notebook with explanations

- Share your work with the course community

Lesson 4 - Analyzing Tabular Data with Pandas

- Reading and writing CSV data with Pandas

- Querying, filtering and sorting data frames

- Grouping and aggregation for data summarization

- Merging and joining data from multiple sources

Assignment 3 - Pandas Practice

- Create data frames from CSV files

- Query and index operations on data frames

- Group, merge and aggregate data frames

- Fix missing and invalid values in data

Lesson 5 - Visualization with Matplotlib and Seaborn

- Basic visualizations with Matplotlib

- Advanced visualizations with Seaborn

- Tips for customizing and styling charts

- Plotting images and grids of charts

Course Project - Exploratory Data Analysis

- Find a real-world dataset of your choice online

- Use Numpy & Pandas to parse, clean & analyze data

- Use Matplotlib & Seaborn to create visualizations

- Ask and answer interesting questions about the data

Lesson 6 - Exploratory Data Analysis - A Case Study

- Finding a good real-world dataset for EDA

- Data loading, cleaning and preprocessing

- Exploratory analysis and visualization

- Answering questions and making inferences

Certificate of Accomplishment

Earn a verified certificate of accomplishment (sample) by completing all weekly assignments and the course project. The certificate can be added to your LinkedIn profile, linked from your resume, and downloaded as a PDF.

Instructor - Aakash N S

Aakash N S is the co-founder and CEO of Jovian. Previously, Aakash worked as a software engineer (APIs & Data Platforms) at Twitter in Ireland & San Francisco. He graduated from the Indian Institute of Technology, Bombay, and is also an avid blogger, open-source contributor, and online educator.

Course FAQs

If you have general questions about the course, please browse through this list first. Click/tap on a question to expand it and view the answer. If there’s something that’s not answered here, please reply to this topic with your question. For lecture and assignment related queries, please ask your question in the respective threads.

Data Analysis with Python: Zero to Pandas is an online course intended to provide a coding-first introduction to data analysis.

The course takes a hands-on coding-focused approach and will be taught using live interactive Jupyter notebooks, allowing students to follow along and experiment. Theoretical concepts will be explained in simple terms using code. Participants will receive weekly assignments and work on a project with a real-world dataset to test their skills. Upon successful completion of the course, participants will receive a certificate of completion.

The following topics are covered:

- Python & Jupyter Fundamentals

- Numpy for data processing

- Pandas for working with tabular data

- Visualization with Matplotlib and Seaborn

- Exploratory Data Analysis: A Case Study

The course is called “Zero to Pandas” because it assumes no prior knowledge of Python (i.e. you can start from Zero), and by the end of the five weeks, you’ll be familiar with running data analysis with Python.

Access the Course Syllabus for more details.

This course runs for 6 weeks. You can enroll, watch the session recordings, and submit the assignments and course project during this period. We will evaluate your submissions and issue the certificate once you have successfully completed all assignments and the project.

This is a beginner-friendly course, and no prior knowledge of Data Science or Python is assumed. You DON’T require a college degree (B.Tech, Masters, PhD etc.) to participate in this course.

You do need to have a computer (laptop/desktop) with a good internet connection to watch the video lectures, run the code online, and participate in the forum discussions.

To become eligible for a “Certificate of Completion”, you need to satisfy all of the following criteria:

- Make valid submissions for all 3 weekly assignments in the course (the course team will evaluate & accept/reject submission)

- Make a valid submission towards the course project

- Do not violate the Code of Conduct

More details regarding the assignments and the course project will be shared during the course. Please note that we reserve the right to withhold/cancel any participant’s certificate if we are not satisfied with the quality of their submissions or find them in violation of the Code of Conduct and Academic Honesty Policy.

The Certificate of Completion will be issued by Jovian. Please note that Jovian is not a registered educational institution, and this certificate will not count towards your higher education/college credits. The certificate simply indicates that you have completed all the required coursework for this course. Moreover, Jovian reserves the right to withhold/cancel any participant’s certificate if we are not satisfied with the quality of their submissions or find them in violation of the Code of Conduct.

Video lectures are available on the course page. Go to zerotopandas.com and open the particular lesson. You will find the video inside the lesson page.

No, you do not need to install any additional software on your computer to participate in this course. You just need a computer (laptop/desktop) with a working internet connection and a modern web browser (like Google Chrome or Firefox) to watch the lectures, participate in forum discussions, and complete the assignments.

You will be able to do all the assignments using free online computing platforms that you can access from your web browser. More details about these will be shared during the video lectures and on the individual assignment threads.

No, this course does not require a Graphics Processing Unit (GPU). If you do need one, you don't have to buy it: online platforms like Google Colab provide free access to GPUs for a limited amount of time every week. The free tier should be sufficient for your needs.

The lectures will be taught using Jupyter notebooks, a browser-based interactive programming environment. The lecture notebooks and assignments will be shared using Jovian, a platform for sharing Jupyter notebooks and data science projects. You will be able to run the shared Jupyter notebooks directly from Jovian.

The coursework should not take up more than 8-10 hours per week. If you’re able to do it in less time, that’s great.

In general, even if you’re a full-time student or working professional, you should be able to follow along and complete the coursework comfortably, if you remain motivated.

Sure, you can audit the course by just viewing the video lectures, but we highly recommend that you try out the assignments and put in the work required to earn a certificate. Doing the assignments will help you apply the concepts and get hands-on experience with data analysis. Interactive Jupyter notebooks are a great way to learn and experiment with the code, and we’ve put in a lot of effort to prepare these resources for you. We hope you will find it worthwhile to do the assignments and exercises.

No, there is no textbook for this course. This course is taught entirely using Jupyter notebooks, which include a fair bit of explanation along with code, graphs, links to references, etc. We will provide links to reading material, blog posts and other free resources online.

The instructor for this course is Aakash N S. Aakash is the co-founder and CEO of Jovian, a platform to learn Data Science & Machine Learning. Jovian is also a project management and collaboration platform for Jupyter notebooks. Prior to starting Jovian, Aakash worked as a software engineer (APIs & Data Platforms) at Twitter in Ireland & San Francisco and graduated from IIT Bombay. He’s also a Competitions Expert on Kaggle, an avid blogger, open source contributor and online educator.

- Assignments will require completing tasks such as creating a Jovian notebook, writing a blog post etc.

- Assignments can be submitted on the assignment page using the Jovian notebook link.

- Some assignments are automated, which means they will be evaluated automatically. A few assignments will be evaluated by the course team.

- You will be graded as "PASS" or "FAIL" according to the assignment evaluation. If you get a "FAIL" grade, you will get a chance to work on the assignment again and resubmit it.

More details about the submission will be provided in the individual topics for each assignment.

Depending on the type of question, please choose one of the following:

If you have questions on any topic covered in a lecture/assignment, you can post them on the respective lesson's discussion page. Someone from the course team or the community will try to answer your question. Before asking, please scroll through the thread to check if your question has already been asked/answered.

If you have questions about the course itself, you can post your question on the discussion page of the course.

You can also ask your question in the zerotopandas channel of the Jovian community Slack group.

If you do not want to ask a question publicly or need more assistance, you can send an email to [email protected], and someone from the course team will respond to you over email.

We recommend asking questions on the discussion pages, since in many cases other members of the community will be able to answer questions faster than us, and your question will also be useful for others. Remember, no question is too simple to be asked.

Yes, please spread the word and invite your friends to join in.

We expect all participants to follow the Code of Conduct, and we take harassment and abuse very seriously. Please reach out to us at [email protected] if you are a victim of harassment/abuse by another user, and we’ll investigate the matter and take strict action immediately. Once verified, we will remove the participant from the course, and for more serious matters, report it to relevant authorities.


Python Programming

Practice Python Exercises and Challenges with Solutions

Free coding exercises for Python developers. The exercises range from Python basics and data structures to data analytics. As of now, this page contains 18 exercises.

What is included in these Python exercises?

Each exercise contains specific Python topic questions you need to practice and solve. These free exercises are nothing but Python assignments for the practice where you need to solve different programs and challenges.

- All exercises are tested on Python 3.

- Each exercise has 10-20 Questions.

- The solution is provided for every question.

- Practice each Exercise in Online Code Editor

These Python programming exercises are suitable for all Python developers. If you are a beginner, you will have a better understanding of Python after solving these exercises. Below is the list of exercises.

Select the exercise you want to solve.

Basic Exercise for Beginners

Practice and Quickly learn Python’s necessary skills by solving simple questions and problems.

Topics : Variables, Operators, Loops, String, Numbers, List

Python Input and Output Exercise

Solve input and output operations in Python. Also, we practice file handling.

Topics : print() and input() , File I/O

Python Loop Exercise

This Python loop exercise aims to help developers to practice branching and Looping techniques in Python.

Topics : If-else statements, for loop, and while loop.

Python Functions Exercise

Practice how to create a function, nested functions, and use the function arguments effectively in Python by solving different questions.

Topics : Functions arguments, built-in functions.

Python String Exercise

Solve Python String exercise to learn and practice String operations and manipulations.

Python Data Structure Exercise

Practice widely used Python types such as List, Set, Dictionary, and Tuple operations in Python

Python List Exercise

This Python list exercise aims to help Python developers to learn and practice list operations.

Python Dictionary Exercise

This Python dictionary exercise aims to help Python developers to learn and practice dictionary operations.

Python Set Exercise

This exercise aims to help Python developers to learn and practice set operations.

Python Tuple Exercise

This exercise aims to help Python developers to learn and practice tuple operations.

Python Date and Time Exercise

This exercise aims to help Python developers to learn and practice DateTime and timestamp questions and problems.

Topics : Date, time, DateTime, Calendar.

Python OOP Exercise

This Python Object-oriented programming (OOP) exercise aims to help Python developers to learn and practice OOP concepts.

Topics : Object, Classes, Inheritance

Python JSON Exercise

Practice and Learn JSON creation, manipulation, Encoding, Decoding, and parsing using Python

Python NumPy Exercise

Practice NumPy questions such as Array manipulations, numeric ranges, Slicing, indexing, Searching, Sorting, and splitting, and more.

Python Pandas Exercise

Practice Data Analysis using Python Pandas. Practice Data-frame, Data selection, group-by, Series, sorting, searching, and statistics.

Python Matplotlib Exercise

Practice Data visualization using Python Matplotlib. Line plot, Style properties, multi-line plot, scatter plot, bar chart, histogram, Pie chart, Subplot, stack plot.

Random Data Generation Exercise

Practice and Learn the various techniques to generate random data in Python.

Topics : random module, secrets module, UUID module

Python Database Exercise

Practice Python database programming skills by solving the questions step by step.

Use any of the MySQL, PostgreSQL, SQLite to solve the exercise

Exercises for Intermediate developers

The following practice questions are for intermediate Python developers.

If you have not solved the above exercises, please complete them to understand and practice each topic in detail. After that, you can solve the below questions quickly.

Exercise 1: Reverse each word of a string

Expected Output

- Use the split() method to split a string into a list of words.

- Reverse each word in the list

- Finally, use the join() method to convert the list back into a string

Steps to solve this question :

- Split the given string into a list of words using the split() method

- Use a list comprehension to create a new list by reversing each word from a list.

- Use the join() function to convert the new list into a string

- Display the resultant string
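The steps above can be sketched as follows. This is one possible solution, and the sample sentence is only an illustrative input, not necessarily the one the exercise intends.

```python
def reverse_words(sentence: str) -> str:
    """Reverse the characters of every word while keeping the word order."""
    # Step 1: split the sentence into a list of words.
    words = sentence.split()
    # Step 2: reverse each word with a slice inside a list comprehension.
    reversed_words = [word[::-1] for word in words]
    # Step 3: join the reversed words back into a single string.
    return " ".join(reversed_words)


# Step 4: display the result for an illustrative input.
print(reverse_words("My Name is Jessa"))  # yM emaN si asseJ
```

Note that split() without arguments also collapses repeated whitespace, so the original spacing is not preserved exactly.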

Exercise 2: Read text file into a variable and replace all newlines with space

Given : Assume you have the following text file (sample.txt).

Expected Output :

- First, read a text file.

- Next, use string replace() function to replace all newlines ( \n ) with space ( ' ' ).

Steps to solve this question : -

- First, open the file in a read mode

- Next, read all content from a file using the read() function and assign it to a variable.

- Display final string
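A minimal sketch of these steps is shown below. Because the contents of sample.txt are not reproduced here, the demo writes a throwaway file with made-up lines; the helper name `read_without_newlines` is hypothetical.

```python
import tempfile
from pathlib import Path


def read_without_newlines(path) -> str:
    """Read a whole text file and replace every newline with a space."""
    # Open in read mode and pull the full content into one variable.
    with open(path, "r", encoding="utf-8") as handle:
        content = handle.read()
    # replace() returns a new string; the file itself is not modified.
    return content.replace("\n", " ")


# Demo with a temporary stand-in for sample.txt (made-up content).
with tempfile.TemporaryDirectory() as tmp_dir:
    sample = Path(tmp_dir) / "sample.txt"
    sample.write_text("Line1\nline2\nline3", encoding="utf-8")
    print(read_without_newlines(sample))  # Line1 line2 line3
```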

Exercise 3: Remove items from a list while iterating

Description :

In this question, you need to remove items from a list while iterating over it, without creating a copy of the list.

Remove numbers greater than 50

Expected Output : -

- Get the list's size

- Iterate list using while loop

- Check if the number is greater than 50

- If yes, delete the item using a del keyword

- Reduce the list size

Solution 1: Using while loop

Solution 2: Using for loop and range()
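Both approaches might look like the sketch below. The function names and the sample list are illustrative assumptions. The second version walks the indexes backwards so that deleting an item never shifts an element that has not been visited yet.

```python
def remove_greater_than(numbers: list, limit: int) -> None:
    """Solution 1: delete items in place with a while loop."""
    index = 0
    size = len(numbers)
    while index < size:
        if numbers[index] > limit:
            del numbers[index]
            size -= 1   # the list shrank, so shrink the bound as well
        else:
            index += 1  # only advance when nothing was deleted


def remove_greater_than_reversed(numbers: list, limit: int) -> None:
    """Solution 2: delete items in place with a for loop over reversed indexes."""
    for index in range(len(numbers) - 1, -1, -1):
        if numbers[index] > limit:
            del numbers[index]


values = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
remove_greater_than(values, 50)
print(values)  # [10, 20, 30, 40, 50]
```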

Exercise 4: Reverse Dictionary mapping

Exercise 5: display all duplicate items from a list.

- Use the Counter class of the collections module.

- Create a dictionary that will maintain the count of each item of a list. Next, fetch all keys whose count is greater than 1

Solution 1 : - Using collections.Counter()

Solution 2 : -
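A sketch of both solutions follows, treating an item as a duplicate when it appears more than once. The sample list and function names are illustrative assumptions.

```python
from collections import Counter


def duplicates_with_counter(items: list) -> list:
    """Solution 1: let collections.Counter do the counting."""
    counts = Counter(items)
    return [item for item, count in counts.items() if count > 1]


def duplicates_with_dict(items: list) -> list:
    """Solution 2: maintain the counts in a plain dictionary."""
    counts = {}
    for item in items:
        counts[item] = counts.get(item, 0) + 1
    return [item for item, count in counts.items() if count > 1]


sample = [10, 20, 60, 30, 20, 40, 30, 60, 70, 80]
print(duplicates_with_counter(sample))  # [20, 60, 30]
print(duplicates_with_dict(sample))     # [20, 60, 30]
```

Both versions report duplicates in the order in which each item first appears, because dictionaries preserve insertion order.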

Exercise 6: Filter dictionary to contain keys present in the given list

Exercise 7: print the following number pattern.

Refer to Print patterns in Python to solve this question.

- Use two for loops

- The outer loop is a reverse for loop from 5 to 0

- Increment value of x by 1 in each iteration of an outer loop

- The inner loop will iterate from 0 to the value of i of the outer loop

- Print value of x in each iteration of an inner loop

- Print newline at the end of each outer loop
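The target pattern is not reproduced here, so the sketch below simply follows the hints literally: the outer loop counts down, `x` grows by one per row, and the inner loop repeats `x` as many times as the outer counter says. Treat the resulting shape as an inference from the hints.

```python
def number_pattern(rows: int = 5) -> str:
    """Build the pattern row by row and return it as one string."""
    lines = []
    x = 0
    # Outer loop: reverse loop from `rows` down to 1.
    for i in range(rows, 0, -1):
        x += 1  # the value printed on this row
        row = []
        # Inner loop: repeat x once for each step from 0 up to i.
        for _ in range(i):
            row.append(str(x))
        lines.append(" ".join(row))
    # A newline ends each outer iteration.
    return "\n".join(lines)


print(number_pattern())
```

With the default of five rows this prints five 1s on the first line, four 2s on the second, and so on down to a single 5.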

Exercise 8: Create an inner function

Question description : -

- Create an outer function that will accept two strings, x and y (x = 'Emma' and y = 'Kelly').

- Create an inner function inside the outer function that will concatenate x and y.

- Finally, the outer function will join the word 'developer' to the result.
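One way to read this description is sketched below. The function names and the exact separator before 'developer' are assumptions, since the expected output is not shown.

```python
def manipulate(x: str, y: str) -> str:
    """Outer function: combines x and y, then appends 'developer'."""

    def concatenate() -> str:
        # Inner function: it can read x and y from the enclosing scope.
        return x + y

    return concatenate() + " developer"


print(manipulate("Emma", "Kelly"))  # EmmaKelly developer
```

The inner function is only visible inside `manipulate`, which is the main point of the exercise.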

Exercise 9: Modify the element of a nested list inside the following list

Change the element 35 to 3500

Exercise 10: Access the nested key increment from the following dictionary


Copyright © 2018–2024 pynative.com

7 Datasets to Practice Data Analysis in Python


Data analysis is a skill that is becoming more essential in today's data-driven world. One effective way to practice with Python is to take on your own data analysis projects. In this article, we’ll show you 7 datasets you can start working on.

Python is a great tool for data analysis – in fact, it has become very popular, as we discuss in Python’s Role in Big Data and Analytics . For Python beginners to become proficient in data analysis, they need to develop their programming and analysis knowledge. And the best way to do this is by creating your own data analysis projects.

Doing projects gives you a deep understanding of Python as well as the entire data analysis process. We’ve discussed this process in our Python Exploratory Data Analysis Cheat Sheet . It’s important to learn how to effectively explore different kinds of datasets – numerical, image, text, and even audio data.

But the first step is getting your hands on data, and it isn’t always obvious how to go about this. In this article, we’ll provide you with 7 datasets that you can use to practice data analysis in Python. We’ll explain what the data is, what it can be used for, and show you some code examples to get you on your feet. The examples will range from beginner-friendly to more advanced datasets used for deep learning.

For those looking for some beginner friendly Python learning material, I recommend our Learn Programming with Python track. It bundles together 5 courses, all designed to teach you the fundamentals. For the aspiring data scientists, our Introduction to Python for Data Science course contains 141 interactive exercises. If you just want to try things out, our article 10 Python Practice Exercises for Beginners with Detailed Solutions contains exercises from some of our courses.

7 Free Python Datasets

Diabetes Dataset

The Diabetes dataset from scikit-learn is a collection of 442 patient medical records from a diabetes study. It contains 10 variables, including age, sex, body mass index, average blood pressure, and six blood serum measurements. The data was originally used by Efron et al. in the 2004 paper "Least Angle Regression".

Here’s how to load the dataset into a pandas DataFrame and print the first couple of rows of some of the variables:
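A minimal sketch of the loading step, assuming scikit-learn and pandas are installed. `load_diabetes(as_frame=True)` returns the bundled data with the features and the `target` column together in `.frame`; the choice of columns to print is only illustrative.

```python
from sklearn.datasets import load_diabetes

# The dataset ships with scikit-learn, so nothing is downloaded here.
diabetes = load_diabetes(as_frame=True)
df = diabetes.frame  # 10 feature columns plus the "target" column

# Print the first rows of a few of the variables.
print(df[["age", "sex", "bmi", "target"]].head())
```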

Here you can see the age, sex and body mass index. These variables have already been preprocessed: each is mean-centered and scaled so that the sum of squares of its column equals one. The target is a quantitative measure of disease progression one year after baseline. To get started with a correlation analysis of some of the features in the dataset, do the following:
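One way to run such a correlation analysis is sketched below, assuming scikit-learn and pandas are installed. The selection of `age`, `bmi` and `bp` is an illustrative choice.

```python
from sklearn.datasets import load_diabetes

df = load_diabetes(as_frame=True).frame

# Pairwise Pearson correlations between a few features and the target.
correlations = df[["age", "bmi", "bp", "target"]].corr()

# Rank the selected columns by how strongly they correlate with the target.
print(correlations["target"].sort_values(ascending=False))
```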

This shows that BMI is positively correlated with disease progression, meaning that patients with a higher BMI tended to show greater progression of the disease. What relationships can you find between other variables in the data?

Forest Cover Types

The Forest covertype dataset, also from scikit-learn, is a collection of data from the US Forest Service (USFS). It records the forest cover type of 30 x 30 meter cells together with cartographic variables describing each cell, for a total of 54 attributes.

This rich dataset can be used for a variety of projects, such as predicting the forest cover type of a given area, analyzing the relationship between different forest cover types and environmental factors, or creating a model to predict the probability of a certain type of forest cover in a given area. It can also be used to study the effects of human activities on forest cover.

Here’s how to read the data into a DataFrame and print the first 5 rows:

You can see the variables include things like elevation, slope, and soil type. The target variable is an integer and corresponds to a forest cover type. Here’s how to print the most common types:
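The sketch below shows one way to do both steps. `fetch_covtype(as_frame=True)` downloads the data on first use, so it is wrapped in a function that the demo does not call; the counting helper is exercised on a tiny made-up frame instead. The helper names are hypothetical, and `Cover_Type` is the target column name scikit-learn uses in the returned frame.

```python
import pandas as pd


def load_covertype_frame() -> pd.DataFrame:
    """Return the covertype data as a DataFrame (downloads on first call)."""
    from sklearn.datasets import fetch_covtype  # lazy import: needs network once
    return fetch_covtype(as_frame=True).frame


def most_common_types(frame: pd.DataFrame, column: str = "Cover_Type") -> pd.Series:
    """Count how often each cover type occurs, most frequent first."""
    return frame[column].value_counts()


# Made-up stand-in data so the example runs offline.
toy = pd.DataFrame({"Cover_Type": [2, 2, 1, 2, 1, 4]})
print(toy.head())              # first rows, as you would do with the real frame
print(most_common_types(toy))  # type 2 first, type 4 last
```

With the real data, `most_common_types(load_covertype_frame())` would produce the counts discussed in the text.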

The most commonly occurring type of forest in this dataset is type 2, with 283,301 occurrences. This corresponds to Lodgepole Pine. Type 4, the Cottonwood/Willow type, is the least frequently occurring type.

To get started in an analysis project, first start learning more about this data. Since this DataFrame is quite large with many different variables, check out How to Filter Rows and Select Columns in a Python DataFrame with pandas for some tips on manipulating the data.

Yahoo Finance

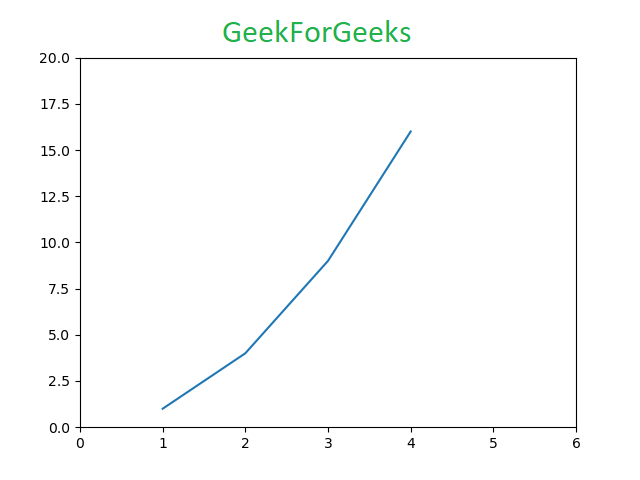

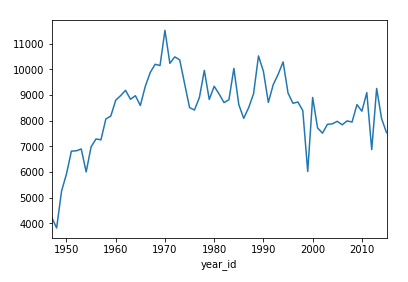

Python’s yfinance library is a powerful tool for downloading financial data from the Yahoo Finance website. You’ll need to install this library, which can be done with pip . It allows you to download data in a variety of formats; the data includes variables such as stock prices, dividends, splits, and more. To download data for Microsoft and plot the close price, do the following:
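A hedged sketch of the download-and-plot step: `yf.Ticker(symbol).history(period=...)` returns a DataFrame with a `Close` column, but it needs network access, so the demo plots a small made-up price series instead. The helper names and the one-year period are assumptions.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; drop this line inside a notebook
import pandas as pd


def download_history(symbol: str = "MSFT", period: str = "1y") -> pd.DataFrame:
    """Fetch price history from Yahoo Finance (requires network access)."""
    import yfinance as yf  # third-party package: pip install yfinance
    return yf.Ticker(symbol).history(period=period)


def plot_close(prices: pd.DataFrame, title: str):
    """Plot the Close column with the built-in pandas plotting method."""
    ax = prices["Close"].plot(title=title)
    ax.set_xlabel("Date")
    ax.set_ylabel("Close price")
    return ax


# Made-up stand-in data so the example runs offline.
toy = pd.DataFrame(
    {"Close": [100.0, 101.5, 99.8, 102.3]},
    index=pd.date_range("2024-01-01", periods=4, freq="D"),
)
ax = plot_close(toy, "Toy close prices")
```

For real data, pass `download_history("MSFT")` to `plot_close` in place of the toy frame.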

This uses the built-in pandas.DataFrame.plot() method. Running this code produces the following visualization:

There are many options open for the analysis at this stage. A regression analysis can be used to model the relationship between different financial variables. In the article Regression Analysis in Python , we show an example of how to implement this.

Atmospheric Soundings

Atmospheric sounding data is data collected from weather balloons. A comprehensive dataset is maintained on the University of Wyoming's Upper Air Sounding website . The data includes variables such as temperature, pressure, dew point, wind speed, and wind direction. This data can be used for a variety of projects, such as forecasting temperature and wind speed for your home town. Since it has decades of observations, you could use it to study the effects of climate change on the atmosphere.

Simply select an observation site and choose a time from the web interface. You can highlight the tabular data and copy-paste into a text document. Save it as ‘weather_data.txt’. Then you can read it into Python like this:
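A sketch of a line-by-line reader is given below. It assumes the copied table has pressure, height and temperature in its first three columns, with header and separator lines in between; the sample rows are made up to mimic that layout, and `parse_sounding` is a hypothetical helper name.

```python
def parse_sounding(lines):
    """Return (pressure, height, temperature) lists from sounding text lines."""
    pressure, height, temperature = [], [], []
    for line in lines:
        fields = line.split()
        if len(fields) < 3:
            continue  # blank line or dashed separator
        try:
            p, h, t = (float(value) for value in fields[:3])
        except ValueError:
            continue  # header or units line, not numeric data
        pressure.append(p)
        height.append(h)
        temperature.append(t)
    return pressure, height, temperature


# Made-up sample in the assumed layout.
sample = """\
-----------------------------
   PRES   HGHT   TEMP   DWPT
    hPa     m      C      C
-----------------------------
 1000.0    110   12.4    9.1
  925.0    766    8.2    5.0
  850.0   1457    3.6   -1.2
"""
pressure, height, temperature = parse_sounding(sample.splitlines())
print(height)       # [110.0, 766.0, 1457.0]
print(temperature)  # [12.4, 8.2, 3.6]
```

For the saved file, open ‘weather_data.txt’ with `open(...)` and pass the file object to `parse_sounding`, since iterating over a file yields its lines.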

This is a nice example of having to read the data in line by line. Using Matplotlib , you can plot the temperature as a function of height for your data as follows:
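A minimal plotting sketch with Matplotlib, using a few made-up height and temperature values in place of the parsed file. Height is placed on the vertical axis, which is a common convention for atmospheric profiles, although the opposite orientation would work just as well.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; drop this line inside a notebook
import matplotlib.pyplot as plt


def plot_temperature_profile(height_m, temperature_c):
    """Plot temperature against height, with height on the vertical axis."""
    fig, ax = plt.subplots()
    ax.plot(temperature_c, height_m, marker="o")
    ax.set_xlabel("Temperature (°C)")
    ax.set_ylabel("Height (m)")
    ax.set_title("Temperature profile")
    return ax


# Made-up values standing in for the parsed sounding data.
ax = plot_temperature_profile([110, 766, 1457, 3012], [12.4, 8.2, 3.6, -5.1])
```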

Note that your plot may look a little different depending on what site and date you chose to download. If you want to download a large amount of data, you’ll need to write a web scraper. See our article Web Scraping with Python Libraries for more details.

IMDB movie review

The IMDB Movie Review dataset is a collection of movie reviews from the Internet Movie Database (IMDB). It includes reviews from tens of thousands of movies, with each review consisting of a text review and a sentiment score. The sentiment score is a binary value, either positive or negative, that indicates the sentiment of the review.

This dataset can be used for a variety of projects, such as sentiment analysis – which aims to build models that can predict the sentiment of a review. It can also be used to identify the topics and themes of a movie. You can download the dataset from Kaggle . To read in the CSV data and start preprocessing, do the following:
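The preprocessing could be sketched as follows. The column names `review` and `sentiment`, the two sample reviews and the short stopword set are all assumptions for illustration; a real project would typically read the downloaded CSV with `pd.read_csv` and use a fuller stopword list from an NLP library.

```python
import pandas as pd

# Small illustrative stopword set (an assumption, not a standard list).
STOPWORDS = {"a", "an", "and", "is", "it", "of", "the", "this", "to", "was"}


def preprocess(review: str) -> list:
    """Lowercase a review, split it into tokens and drop stopwords."""
    tokens = review.lower().split()
    return [token for token in tokens if token not in STOPWORDS]


# Made-up stand-in for the downloaded CSV.
reviews = pd.DataFrame(
    {
        "review": ["This movie was a delight", "The plot is thin and the acting wooden"],
        "sentiment": ["positive", "negative"],
    }
)
reviews["tokens"] = reviews["review"].apply(preprocess)
print(reviews[["tokens", "sentiment"]])
```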

Text data is often quite messy, so cleaning and standardizing it as much as possible is important. See our article The Most Helpful Python Data Cleaning Modules for more information. Here, we have changed all characters to lowercase, removed stopwords (unimportant words), and tokenized the reviews (created a list of words from sentences). The article Null in Python: A Complete Guide has some more examples of working with text data.

There is more cleaning that could be done, for example removing punctuation. But this could be the starting point of a natural language processing project. Try seeing if there is a correlation between the most frequently occurring words and the sentiment.

Berlin Database of Emotional Speech

The Berlin Database of Emotional Speech (BDES) is a collection of German-language audio recordings of emotional speech. It was generated by having actors read out a set of sentences in different emotional states, such as anger, happiness, sadness, and fear. The data includes audio recordings of the actors' voices as well as annotations of the emotional states. This data can be used to study the acoustic features of emotional speech.

The data is available for download here. Metadata for the type of speech is recorded in the filename. For example, the ‘F’ in the filename ‘03a01Fa.wav’ means ‘Freude’ or happiness. To plot the spectrogram of a happy German utterance, do the following:
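A hedged sketch using only the standard-library `wave` module, NumPy and Matplotlib. It assumes the recording is a 16-bit mono WAV file; to stay runnable without the download, the demo writes a synthetic 440 Hz tone and plots that instead of a real recording. The helper names are hypothetical.

```python
import os
import tempfile
import wave

import matplotlib
matplotlib.use("Agg")  # headless backend; drop this line inside a notebook
import matplotlib.pyplot as plt
import numpy as np


def read_mono_wav(path):
    """Return (sample_rate, samples) for a 16-bit mono WAV file."""
    with wave.open(path, "rb") as wav_file:
        sample_rate = wav_file.getframerate()
        frames = wav_file.readframes(wav_file.getnframes())
    return sample_rate, np.frombuffer(frames, dtype="<i2")


def plot_spectrogram(samples, sample_rate):
    """Draw frequency against time and return the underlying arrays."""
    fig, ax = plt.subplots()
    spectrum, freqs, times, image = ax.specgram(samples, NFFT=512, Fs=sample_rate)
    ax.set_xlabel("Time (s)")
    ax.set_ylabel("Frequency (Hz)")
    return spectrum, freqs, times


# Synthetic one-second 440 Hz tone standing in for a real recording.
rate = 16000
tone = (0.5 * 32767 * np.sin(2 * np.pi * 440 * np.arange(rate) / rate)).astype("<i2")
path = os.path.join(tempfile.mkdtemp(), "tone.wav")
with wave.open(path, "wb") as wav_file:
    wav_file.setnchannels(1)
    wav_file.setsampwidth(2)  # 2 bytes per sample = 16 bit
    wav_file.setframerate(rate)
    wav_file.writeframes(tone.tobytes())

sample_rate, samples = read_mono_wav(path)
spectrum, freqs, times = plot_spectrogram(samples, sample_rate)
```

To analyse a real recording, pass its path to `read_mono_wav` in place of the synthetic file.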

This produces the following plot of frequency against time. The yellow colors indicate higher signal strength.

Try plotting the same for angry speech, and see how the frequency, speed, and intensity of the speech changes. For more details on working with audio data in Python, check out the article How to Visualize Sound in Python .

MNIST

The MNIST dataset is a collection of handwritten digits (ranging from 0 to 9) that is commonly used for training various image processing systems. It was assembled from handwriting samples collected by the National Institute of Standards and Technology (NIST) and is widely used in machine learning and computer vision. The dataset consists of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 pixel grayscale image associated with a label from 0 to 9.

To load and start working with this data, you’ll need to install Keras , which is a powerful Python library for deep learning. The easiest way to do this is with a quick pip install keras command from the terminal. You can import the MNIST data and plot some of the digit images like this:
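A sketch of the import-and-plot step is given below. `keras.datasets.mnist.load_data()` downloads the data on first use, so it is wrapped in a function that the demo does not call; the plotting helper is exercised on random 28x28 arrays instead. The helper names are hypothetical.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; drop this line inside a notebook
import matplotlib.pyplot as plt
import numpy as np


def load_mnist():
    """Return ((x_train, y_train), (x_test, y_test)); downloads on first call."""
    from keras.datasets import mnist  # lazy import: needs Keras and network once
    return mnist.load_data()


def plot_digits(images, labels, columns=5):
    """Show the first `columns` images in a row, each titled with its label.

    Assumes columns >= 2 so that plt.subplots returns an array of axes.
    """
    fig, axes = plt.subplots(1, columns, figsize=(2 * columns, 2.5))
    for ax, image, label in zip(axes, images, labels):
        ax.imshow(image, cmap="gray")
        ax.set_title(str(label))
        ax.axis("off")
    return axes


# Random stand-in images so the example runs offline.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
axes = plot_digits(fake_images, [0, 1, 2, 3, 4])
```

With the real data, unpack `(x_train, y_train), _ = load_mnist()` and call `plot_digits(x_train, y_train)`.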

You can see the images of the handwritten digits with their labels above them. This dataset can be used to train a supervised image recognition model. The pixel values are the input data, and the labels are the truth that the model uses to adjust the internal weights. You can see how this is implemented in the Keras code examples section.

Improve Your Analysis Skills with Python Datasets

Getting started is often the hardest part of any challenge. In this article, we shared 7 datasets that you can use to start your next analysis project. The code examples we provided should serve as a starting point and allow you to delve deep into the data. From analyzing financial data to predicting the weather, Python can be used to explore and understand data in a variety of ways.

These datasets were chosen to give you exposure to working with a variety of different data types – numbers, text, and even images and audio. Our article An Introduction to NumPy in Python has more examples of working with numerical data.

With the right resources and practice, you can become an expert in data analysis and use Python datasets to make sense of the world around you. So, take the time to learn Python and start exploring the world of data!


Data Analysis with Python

In this article, we will discuss how to do data analysis with Python. We will cover several kinds of data analysis: analyzing numerical data with NumPy, tabular data with Pandas, data visualization with Matplotlib, and exploratory data analysis.

Data Analysis With Python

Data Analysis is the technique of collecting, transforming, and organizing data to make future predictions and informed data-driven decisions. It also helps to find possible solutions for a business problem. There are six steps for Data Analysis. They are:

- Ask or Specify Data Requirements

- Prepare or Collect Data

- Clean and Process

- Analyze

- Share

- Act or Report

Note: To know more about these steps refer to our Six Steps of Data Analysis Process tutorial.

Analyzing Numerical Data with NumPy

NumPy is an array processing package in Python and provides a high-performance multidimensional array object and tools for working with these arrays. It is the fundamental package for scientific computing with Python.

Arrays in NumPy

A NumPy array is a table of elements (usually numbers), all of the same type, indexed by a tuple of non-negative integers. In NumPy, the number of dimensions of the array is called the rank of the array. A tuple of integers giving the size of the array along each dimension is known as the shape of the array.

Creating NumPy Array

NumPy arrays can be created in multiple ways, with various ranks. They can also be created from different Python sequences such as lists and tuples. The type of the resultant array is deduced from the type of the elements in the sequences. NumPy also offers several functions to create arrays with initial placeholder content. These minimize the need to grow arrays, which is an expensive operation.

Create Array using numpy.empty(shape, dtype=float, order='C')

Empty matrix using NumPy

Create Array using numpy.zeros(shape, dtype=float, order='C')
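The two constructors named above can be compared with a minimal sketch. The variable names are illustrative only; note that numpy.empty leaves the memory uninitialized, so its contents are arbitrary.

```python
import numpy as np

# np.empty allocates memory without initializing it, so the values are arbitrary.
uninitialized = np.empty((2, 3), dtype=float)

# np.zeros allocates the array and fills it with zeros; the default dtype is float64.
zeros = np.zeros((2, 3))

print(uninitialized.shape)
print(zeros)
```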

Operations on Numpy Arrays

Arithmetic operations.

- Subtraction:

- Multiplication:
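As a small sketch of the arithmetic operations listed above (the array values are made up for illustration), each operator works element by element on arrays of the same shape:

```python
import numpy as np

a = np.array([5, 72, 13, 100])
b = np.array([2, 5, 10, 30])

total = a + b        # element-wise addition
difference = a - b   # element-wise subtraction
product = a * b      # element-wise multiplication
quotient = a / b     # element-wise true division (float result)

print(total, difference, product, quotient, sep="\n")
```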

For more information, refer to our NumPy – Arithmetic Operations Tutorial

NumPy Array Indexing

Indexing can be done in NumPy by using an array as an index. A slice returns a view of the original array, whereas indexing with an index array returns a copy. NumPy arrays can be indexed with other arrays or with any other sequence except a tuple, which NumPy interprets as a multi-dimensional index instead. The last element is indexed by -1, the second to last by -2, and so on.

Python NumPy Array Indexing
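The following sketch illustrates the difference between index-array indexing (a copy) and slicing (a view), along with negative indices. The array contents are arbitrary.

```python
import numpy as np

arr = np.arange(10, 0, -1)      # [10, 9, 8, ..., 1]

picked = arr[[3, 1, 2]]         # indexing with a list of positions returns a copy
picked[0] = -1                  # so this does not change arr

window = arr[2:5]               # a slice returns a view
window[0] = 99                  # so this writes through to arr[2]

last, second_last = arr[-1], arr[-2]
print(arr, picked, last, second_last)
```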

Numpy array slicing.

Consider the syntax x[obj], where x is the array and obj is the index. In basic slicing, the index is a slice object. Basic slicing occurs when obj is:

- a slice object of the form start:stop:step

- or a tuple of slice objects and integers

Arrays produced by basic slicing are always views of the original array.

Ellipsis can also be used along with basic slicing. An Ellipsis (...) expands to the number of : objects needed to make a selection tuple of the same length as the number of dimensions of the array.
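A brief sketch of basic slicing and Ellipsis on a three-dimensional array follows. The array and variable names are illustrative; np.shares_memory is used only to confirm that the slice is a view.

```python
import numpy as np

grid = np.arange(24).reshape(2, 3, 4)

every_other = grid[0, 1, ::2]    # start:stop:step applied to the last axis
last_column = grid[..., -1]      # Ellipsis stands in for ':' on the leading axes
same_thing = grid[:, :, -1]      # the explicit equivalent

# Basic slicing returns a view that shares memory with the original array.
is_view = np.shares_memory(grid, last_column)
print(every_other, last_column, is_view, sep="\n")
```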

NumPy Array Broadcasting

The term broadcasting refers to how NumPy treats arrays with different dimensions during arithmetic operations. Subject to certain constraints, the smaller array is broadcast across the larger array so that they have compatible shapes.

Let’s assume that we have a large data set in which each datum is a list of parameters. In NumPy, we represent it as a 2-D array, where each row is a datum and the number of rows is the size of the data set. Suppose we want to apply some sort of scaling to all these data, so that every parameter is multiplied by its own scaling factor.

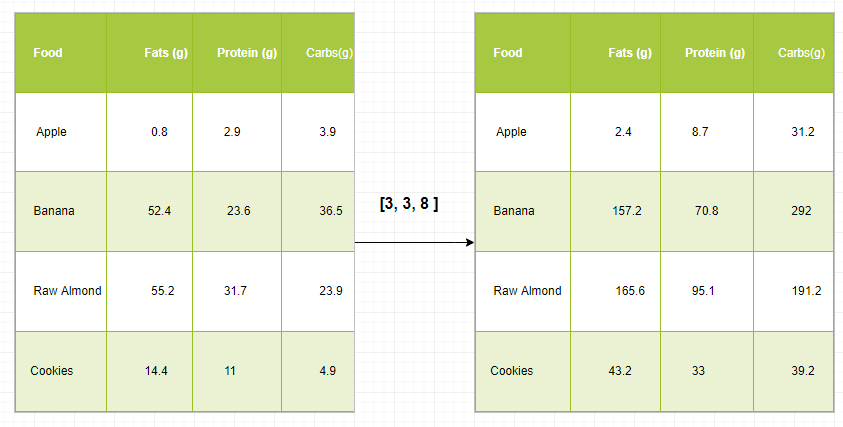

Just to have a clear understanding, let’s count calories in foods using a macro-nutrient breakdown. Roughly put, the caloric parts of food are made of fats (9 calories per gram), protein (4 CPG), and carbs (4 CPG). So if we list some foods (our data), and for each food list its macro-nutrient breakdown (parameters), we can then multiply each nutrient by its caloric value (apply scaling) to compute the caloric breakdown of every food item.

With this transformation, we can now compute all kinds of useful information. For example, we can find the total number of calories in some food or, given a breakdown of a dinner, how many calories came from protein.

Let’s see a naive way of producing this computation with Numpy:
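A minimal sketch of the calorie computation is shown here, first with an explicit loop over rows and then with broadcasting. The macro-nutrient numbers are made up for illustration; only the 9/4/4 calories-per-gram factors come from the text above.

```python
import numpy as np

# Rows are foods; columns are grams of fat, protein, and carbs (made-up values).
macros = np.array([
    [0.8, 2.9, 3.9],
    [52.4, 23.6, 36.5],
    [55.2, 31.7, 23.9],
    [14.4, 11.0, 4.9],
])
cal_per_gram = np.array([9, 4, 4])

# Naive version: scale one row at a time.
naive = np.zeros_like(macros)
for i in range(macros.shape[0]):
    naive[i, :] = macros[i, :] * cal_per_gram

# Broadcasting version: the (3,) factor array is stretched across all four rows.
broadcast = macros * cal_per_gram
total_per_food = broadcast.sum(axis=1)
print(total_per_food)
```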

Broadcasting Rules: Broadcasting two arrays together follows these rules:

- If the arrays don’t have the same rank then prepend the shape of the lower rank array with 1s until both shapes have the same length.

- The two arrays are compatible in a dimension if they have the same size in the dimension or if one of the arrays has size 1 in that dimension.

- The arrays can be broadcast together if they are compatible with all dimensions.

- After broadcasting, each array behaves as if it had a shape equal to the element-wise maximum of shapes of the two input arrays.

- In any dimension where one array had a size of 1 and the other array had a size greater than 1, the first array behaves as if it were copied along that dimension.
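The rules above can be checked on a few small shapes. This is only a sketch with arbitrary arrays; the incompatible case raises a ValueError, which is caught so the script keeps running.

```python
import numpy as np

a = np.ones((4, 3))
b = np.arange(3)     # shape (3,) is treated as (1, 3): compatible with (4, 3)
c = np.arange(4)     # shape (4,) is treated as (1, 4): incompatible with (4, 3)

ok_shape = (a + b).shape

try:
    a + c
    compatible = True
except ValueError:
    compatible = False

# Reshaping c into a column of shape (4, 1) makes it compatible again.
column_shape = (a + c.reshape(4, 1)).shape
print(ok_shape, compatible, column_shape)
```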

Note: For more information, refer to our Python NumPy Tutorial .

Analyzing Data Using Pandas

Pandas is used for relational or labeled data and provides various data structures for manipulating such data and time series. The library is built on top of NumPy. It is generally imported as:
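The conventional import, using the pd alias that the rest of this article assumes, looks like this:

```python
import pandas as pd

# The alias is only a convention; it saves typing "pandas" on every call.
print(pd.__version__)
```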

Here, pd is an alias for Pandas. Importing the library under an alias is not required; it simply means less code to write every time a method or property is called. Pandas provides two main data structures for manipulating data: the Series and the DataFrame.

Pandas Series is a one-dimensional labeled array capable of holding data of any type (integer, string, float, Python objects, etc.). The axis labels are collectively called the index. A Pandas Series can be thought of as a single column in an Excel sheet. Labels need not be unique but must be of a hashable type. The object supports both integer and label-based indexing and provides a host of methods for performing operations involving the index.

Pandas Series

A Series can be created with the Series() constructor, either from data loaded from existing storage such as a SQL database, CSV files, or Excel files, or from data structures like lists and dictionaries.

Python Pandas Creating Series
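As a minimal sketch, a Series can be built from a list with explicit labels or from a dictionary whose keys become the index. The values and labels here are made up.

```python
import pandas as pd

from_list = pd.Series([10, 20, 30], index=["a", "b", "c"])
from_dict = pd.Series({"x": 1.5, "y": 2.5})

by_label = from_list["b"]          # label-based access
by_position = from_list.iloc[1]    # position-based access
print(from_list, from_dict, sep="\n")
```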

Pandas Series

Pandas DataFrame is a two-dimensional, size-mutable, potentially heterogeneous tabular data structure with labeled axes (rows and columns). In other words, the data is aligned in a tabular fashion in rows and columns. A Pandas DataFrame consists of three principal components: the data, the rows, and the columns.

Pandas Dataframe

A DataFrame can be created with the DataFrame() constructor and, just like a Series, it can also be built from different file types and data structures.

Python Pandas Creating Dataframe

Creating Dataframe from python list
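A short sketch of building a DataFrame from a list of rows and from a dictionary of columns follows. The names and values are invented for illustration.

```python
import pandas as pd

rows = [["Avery", 25, "Boston"], ["Jae", 27, "Utah"]]
df_from_list = pd.DataFrame(rows, columns=["Name", "Age", "Team"])

df_from_dict = pd.DataFrame({
    "Name": ["Avery", "Jae"],
    "Age": [25, 27],
    "Team": ["Boston", "Utah"],
})

print(df_from_list)
```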

Creating Dataframe from CSV

We can create a dataframe from the CSV files using the read_csv() function.

Python Pandas read CSV
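To keep the sketch self-contained, the CSV below is read from an in-memory string rather than a file on disk; with a real file, the path would be passed to read_csv() instead. The rows are made up.

```python
import io

import pandas as pd

csv_text = """Name,Team,Age,Weight
Avery,Boston,25,180
Jae,Boston,27,235
John,Boston,22,185
Raul,Utah,26,231
Trey,Utah,24,203
Alec,Utah,25,205
"""

df = pd.read_csv(io.StringIO(csv_text))
first_rows = df.head()   # first five rows by default
print(first_rows)
```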

head of a dataframe

Filtering DataFrame

The Pandas dataframe.filter() function is used to subset the rows or columns of a dataframe according to labels in the specified index. Note that this routine does not filter a dataframe on its contents. The filter is applied to the labels of the index.

Python Pandas Filter Dataframe
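The sketch below shows the three label-matching modes of filter() on a small made-up frame, followed by a boolean mask for comparison, since filtering on contents is a different operation.

```python
import pandas as pd

df = pd.DataFrame(
    {"Name": ["Avery", "Jae", "John"],
     "Team": ["Boston", "Boston", "Utah"],
     "Age": [25, 27, 22]},
    index=["p1", "p2", "p3"],
)

by_items = df.filter(items=["Name", "Age"])   # keep columns by exact label
by_regex = df.filter(regex="e$", axis=1)      # keep columns whose label ends in "e"
by_like = df.filter(like="2", axis=0)         # keep rows whose index label contains "2"

# Filtering on the contents of a column uses a boolean mask instead.
older = df[df["Age"] > 23]
print(by_items, by_regex, by_like, older, sep="\n\n")
```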

Applying filter on dataset

Sorting DataFrame

In order to sort the data frame in pandas, the function sort_values() is used. Pandas sort_values() can sort the data frame in Ascending or Descending order.

Python Pandas Sorting Dataframe in Ascending Order
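A minimal sketch of sort_values() in ascending order, descending order, and on two columns at once follows. The data is invented for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Avery", "Jae", "John", "Raul"],
    "Team": ["Boston", "Boston", "Utah", "Utah"],
    "Age": [25, 27, 22, 26],
})

by_age = df.sort_values("Age")                        # ascending is the default
by_age_desc = df.sort_values("Age", ascending=False)
# Sort by team A-Z, then by age from oldest to youngest within each team.
by_team_then_age = df.sort_values(["Team", "Age"], ascending=[True, False])
print(by_team_then_age)
```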

Sorted dataset based on a column value

Pandas GroupBy

Groupby is a pretty simple concept. We can create a grouping of categories and apply a function to the categories. In real data science projects, you’ll be dealing with large amounts of data and trying things over and over, so for efficiency, we use the Groupby concept. Groupby refers to a process involving one or more of the following steps:

- Splitting: We split the data into groups by applying some conditions on the dataset.

- Applying: We apply a function to each group independently.

- Combining: We combine the results of applying the function to each group into a single data structure.

The following steps illustrate the process involved in the Groupby concept.

1. Group the unique values from the Team column

Pandas Groupby Method

2. Now there’s a bucket for each group

3. Toss the other data into the buckets

4. Apply a function on the weight column of each bucket.

Applying a function on the weight column of each bucket

Python Pandas GroupBy
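The bucket steps described above can be sketched as follows, assuming a small made-up table with a Team column and a Weight column. The mean is used as the example function.

```python
import pandas as pd

df = pd.DataFrame({
    "Team": ["Boston", "Utah", "Boston", "Utah", "Boston"],
    "Weight": [180, 235, 185, 231, 205],
})

grouped = df.groupby("Team")             # split: one bucket per unique team
mean_weight = grouped["Weight"].mean()   # apply a function per bucket, then combine
print(mean_weight)
```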

pandas groupby

Applying function to group:

After splitting the data into groups, we apply a function to each group. This usually takes one of the following forms:

- Aggregation: We compute a summary statistic (or statistics) about each group. For example, compute group sums or means.

- Transformation: We perform some group-specific computations and return a like-indexed object. For example, filling NAs within groups with a value derived from each group.

- Filtration: We discard some groups, according to a group-wise computation that evaluates to True or False. For example, filtering out data based on the group sum or mean.
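The three kinds of operation above can be sketched on the same kind of made-up Team/Weight table. The threshold of 200 in the filtration step is an arbitrary choice for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    "Team": ["Boston", "Utah", "Boston", "Utah", "Boston"],
    "Weight": [180, 235, 185, 231, 205],
})
by_team = df.groupby("Team")

# Aggregation: one summary value per group.
sums = by_team["Weight"].sum()

# Transformation: a result with the same index as the input rows.
centered = df["Weight"] - by_team["Weight"].transform("mean")

# Filtration: keep only the rows of groups that pass a group-wise test.
heavy_teams = by_team.filter(lambda g: g["Weight"].mean() > 200)
print(sums, centered, heavy_teams, sep="\n\n")
```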

Pandas Aggregation

Aggregation is a process in which we compute a summary statistic about each group. An aggregation function returns a single aggregated value for each group. After splitting data into groups using the groupby function, several aggregation operations can be performed on the grouped data.

Python Pandas Aggregation
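As a sketch, several aggregations can be requested at once with agg(), either as a list of function names or as named aggregations. The table and the output column names total_weight and players are hypothetical.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Avery", "Jae", "John", "Raul", "Trey"],
    "Team": ["Boston", "Utah", "Boston", "Utah", "Boston"],
    "Weight": [180, 235, 185, 231, 205],
})

# Several statistics for one column.
stats = df.groupby("Team")["Weight"].agg(["sum", "mean", "max"])

# Named aggregation: output column = (input column, function).
summary = df.groupby("Team").agg(
    total_weight=("Weight", "sum"),
    players=("Name", "count"),
)
print(stats, summary, sep="\n\n")
```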

Use of sum aggregate function on dataset

Concatenating DataFrame

To concatenate dataframes, we use the concat() function. This function does all the heavy lifting of performing concatenation operations along an axis of Pandas objects while performing optional set logic (union or intersection) of the indexes (if any) on the other axes.

Python Pandas Concatenate Dataframe
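A minimal sketch of concat() along rows, along columns, and with intersection logic on the columns follows. The frames are made up for illustration.

```python
import pandas as pd

df1 = pd.DataFrame({"key": ["K0", "K1"], "Name": ["Jai", "Princi"]})
df2 = pd.DataFrame({"key": ["K2", "K3"], "Name": ["Gaurav", "Anuj"]})
df3 = pd.DataFrame({"Name": ["Abhi"], "Age": [32]})

stacked = pd.concat([df1, df2], ignore_index=True)   # rows of df2 below df1
side_by_side = pd.concat([df1, df2], axis=1)         # columns of df2 next to df1

# join="inner" keeps only the columns that both frames share.
common = pd.concat([df1, df3], join="inner", ignore_index=True)
print(stacked, side_by_side, common, sep="\n\n")
```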

Merging DataFrame

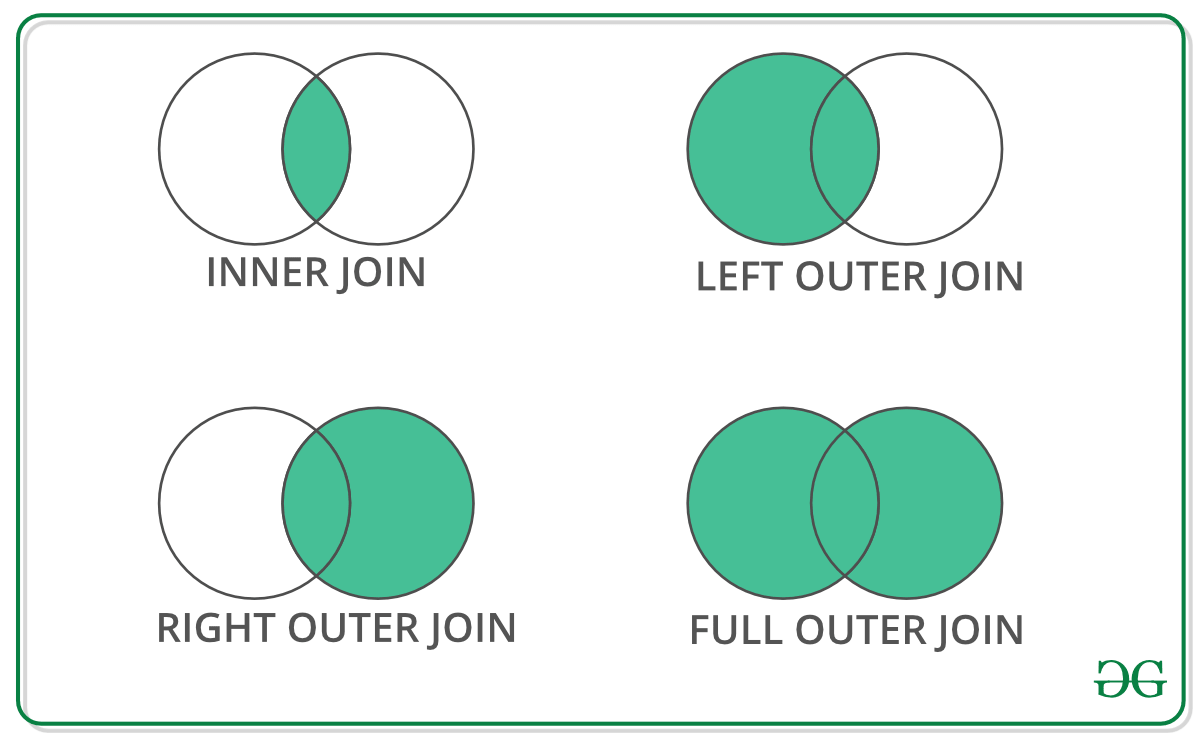

When we need to combine very large DataFrames, joins serve as a powerful way to perform these operations swiftly. A join operates on two DataFrames at a time, denoted as the left and right tables. The key is the common column that the two DataFrames will be joined on. It’s good practice to use keys that have unique values throughout the column to avoid unintended duplication of row values. Pandas provides a single function, merge(), as the entry point for all standard database join operations between DataFrame objects.

There are four basic ways to handle the join (inner, left, right, and outer), depending on which rows must retain their data.

Python Pandas Merge Dataframe
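The join types mentioned above can be compared on two small made-up tables that share a key column. The validate argument is optional; it is included here as one way to guard against duplicated keys.

```python
import pandas as pd

left = pd.DataFrame({"key": ["K0", "K1", "K2"], "Name": ["Jai", "Princi", "Gaurav"]})
right = pd.DataFrame({"key": ["K0", "K1", "K3"], "Age": [27, 24, 22]})

inner = pd.merge(left, right, on="key", how="inner")     # keys present in both
left_join = pd.merge(left, right, on="key", how="left")  # every key from left
outer = pd.merge(left, right, on="key", how="outer")     # keys from either side

# validate raises an error if the keys are not unique on both sides.
checked = pd.merge(left, right, on="key", validate="one_to_one")
print(inner, left_join, outer, sep="\n\n")
```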

Merging two datasets

Joining DataFrame

To join dataframes, we use the .join() function. It combines the columns of two potentially differently indexed DataFrames into a single result DataFrame.

Python Pandas Join Dataframe
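A brief sketch of .join() on two made-up frames with partly overlapping index labels follows. By default, the join is a left join on the index.

```python
import pandas as pd

left = pd.DataFrame({"Name": ["Jai", "Princi", "Gaurav"]}, index=["K0", "K1", "K2"])
right = pd.DataFrame({"Age": [27, 24, 22]}, index=["K0", "K2", "K3"])

joined = left.join(right)                   # keeps every index label from left
outer = left.join(right, how="outer")       # keeps index labels from both frames
print(joined, outer, sep="\n\n")
```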

Joining two datasets

For more information, refer to our Pandas Merging, Joining, and Concatenating tutorial

For a complete guide on Pandas refer to our Pandas Tutorial .

Visualization with Matplotlib

Matplotlib is an easy-to-use visualization library for Python. It is built on NumPy arrays, is designed to work with the broader SciPy stack, and offers several kinds of plots such as line, bar, scatter, and histogram.

Pyplot is a Matplotlib module that provides a MATLAB-like interface. Pyplot provides functions that interact with the figure i.e. creates a figure, decorates the plot with labels, and creates a plotting area in a figure.

A bar plot or bar chart is a graph that represents categories of data with rectangular bars whose lengths or heights are proportional to the values they represent. Bar plots can be drawn horizontally or vertically. A bar chart describes comparisons between discrete categories. It can be created using the bar() method.

Python Matplotlib Bar Chart

Here we will use the iris dataset only
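The Iris file itself is not bundled with this text, so the sketch below substitutes a tiny hand-made table with Iris-style column names (an assumption); swap in the real data where it is available.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line in Jupyter
import matplotlib.pyplot as plt
import pandas as pd

# Stand-in data with Iris-style column names (values are made up).
df = pd.DataFrame({
    "Species": ["setosa", "setosa", "versicolor", "versicolor", "virginica", "virginica"],
    "SepalLengthCm": [5.1, 4.9, 7.0, 6.4, 6.3, 5.8],
})
means = df.groupby("Species")["SepalLengthCm"].mean()

fig, ax = plt.subplots()
bars = ax.bar(list(means.index), means.to_numpy())  # one bar per category
ax.set_xlabel("Species")
ax.set_ylabel("Mean sepal length (cm)")
ax.set_title("Bar chart")
# plt.show() would display the figure in an interactive session.
```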

Bar chart using matplotlib library

A histogram represents the distribution of data by grouping values into ranges. It is a type of bar plot where the X-axis represents the bin ranges while the Y-axis gives information about frequency. To create a histogram, first divide the whole range of values into a series of intervals (bins), then count the values that fall into each interval. Bins are consecutive, non-overlapping intervals of a variable. The hist() function is used to compute and create a histogram of x.

Python Matplotlib Histogram
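As a sketch, hist() returns the counts per bin and the bin edges, which makes the binning described above easy to inspect. Randomly generated values stand in for a real column here.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line in Jupyter
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(0)
values = rng.normal(loc=3.0, scale=0.4, size=100)   # stand-in for a measured column

fig, ax = plt.subplots()
counts, bin_edges, _ = ax.hist(values, bins=10)     # 10 consecutive, equal-width bins
ax.set_xlabel("Value")
ax.set_ylabel("Frequency")
```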

Histplot using matplotlib library

Scatter Plot

Scatter plots are used to observe the relationship between variables, using dots to represent individual observations. The scatter() method in the matplotlib library is used to draw a scatter plot.

Python Matplotlib Scatter Plot
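A minimal scatter() sketch follows, using a handful of made-up length and width pairs in place of the dataset columns.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line in Jupyter
import matplotlib.pyplot as plt

# Made-up measurements standing in for two numeric columns.
sepal_length = [5.1, 4.9, 7.0, 6.4, 6.3, 5.8]
sepal_width = [3.5, 3.0, 3.2, 3.2, 3.3, 2.7]

fig, ax = plt.subplots()
points = ax.scatter(sepal_length, sepal_width)   # one dot per observation
ax.set_xlabel("Sepal length (cm)")
ax.set_ylabel("Sepal width (cm)")
```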

Scatter plot using matplotlib library

A boxplot, also known as a box-and-whisker plot, is a compact way to visualize the distribution of data. It clearly shows the median, the quartiles, and any outliers. Understanding the data distribution is an important step toward better model building, and if the data has outliers, a box plot is a recommended way to identify them before taking action. Five pieces of information are generally included in the chart (described here for a horizontal box plot):

- The minimum is shown at the far left of the chart, at the end of the left ‘whisker’

- First quartile, Q1, is the left edge of the box

- The median is shown as a line inside the box

- Third quartile, Q3, is the right edge of the box

- The maximum is shown at the far right of the chart, at the end of the right ‘whisker’

Representation of box plot

Inter quartile range

Illustrating box plot

Python Matplotlib Box Plot
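The sketch below draws a box plot of a few made-up values and also computes the quartiles directly, so the parts of the chart can be related to numbers. With Matplotlib's default settings, points farther than 1.5 times the interquartile range from the box are drawn as outliers rather than included in the whiskers.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line in Jupyter
import matplotlib.pyplot as plt
import numpy as np

values = np.array([2.0, 2.9, 3.0, 3.0, 3.1, 3.2, 3.4, 3.5, 4.4])  # made-up data

q1, median, q3 = np.percentile(values, [25, 50, 75])
iqr = q3 - q1   # interquartile range: the length of the box

fig, ax = plt.subplots()
result = ax.boxplot(values)                   # vertical by default
outliers = result["fliers"][0].get_ydata()    # points drawn beyond the whiskers
print(q1, median, q3, iqr, outliers)
```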

Boxplot using matplotlib library

Correlation Heatmaps

A 2-D heatmap is a data visualization tool that represents the magnitude of a phenomenon in the form of colors. A correlation heatmap shows a 2-D correlation matrix, using colored cells to encode each correlation coefficient, usually on a monochromatic or diverging scale. The same variables appear as both the rows and the columns of the table, and the color of each cell reflects the strength of the correlation between the corresponding pair of variables. This makes correlation heatmaps well suited to data analysis, since patterns become easy to read and differences within the same data stand out. A correlation heatmap, like a regular heatmap, is accompanied by a colorbar that makes the values easy to read.

Note: The data has to be passed through the corr() method to generate a correlation heatmap. Depending on the pandas version, corr() either silently drops non-numeric columns or raises an error for them; in recent versions, pass numeric_only=True to keep only the columns that can be used.

Python Matplotlib Correlation Heatmap
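A sketch of a correlation heatmap drawn with plain Matplotlib follows. The data is randomly generated with Iris-like column names (an assumption), and numeric_only=True is passed so that the text column is excluded regardless of the pandas default.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line in Jupyter
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
petal_length = rng.uniform(1.0, 7.0, size=60)
df = pd.DataFrame({
    "petal_length": petal_length,
    "petal_width": 0.4 * petal_length + rng.normal(0, 0.05, size=60),
    "sepal_width": rng.normal(3.0, 0.4, size=60),
    "species": ["a", "b", "c"] * 20,          # non-numeric column
})

corr = df.corr(numeric_only=True)             # pairwise correlation of numeric columns

fig, ax = plt.subplots()
im = ax.imshow(corr.to_numpy(), cmap="coolwarm", vmin=-1, vmax=1)
ax.set_xticks(range(len(corr.columns)))
ax.set_xticklabels(corr.columns, rotation=45)
ax.set_yticks(range(len(corr.columns)))
ax.set_yticklabels(corr.columns)
fig.colorbar(im, ax=ax)                       # the color scale for the coefficients
```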

Heatmap using matplotlib library

For more information on data visualization, refer to the tutorials below:

- Data Visualization using Matplotlib

- Data Visualization with Python Seaborn

- Data Visualisation in Python using Matplotlib and Seaborn

- Using Plotly for Interactive Data Visualization in Python

- Interactive Data Visualization with Bokeh

Exploratory Data Analysis

Exploratory Data Analysis (EDA) is a technique for analyzing data using visual techniques. With it, we can get detailed information about the statistical summary of the data. We will also be able to deal with duplicate values and outliers, and see trends or patterns present in the dataset.

Note: We will be using Iris Dataset.

Getting Information about the Dataset

We will use the shape attribute to get the shape of the dataset.

Shape of Dataframe

We can see that the dataframe contains 6 columns and 150 rows.

Now, let’s also look at the columns and their data types. For this, we will use the info() method.

Information about Dataset

information about the dataset

We can see that only one column has categorical data and all the other columns are of the numeric type with non-Null entries.

Let’s get a quick statistical summary of the dataset using the describe() method. The describe() function applies basic statistical computations on the dataset, such as extreme values, count of data points, and standard deviation. Any missing value or NaN value is automatically skipped. The describe() function gives a good picture of the distribution of the data.

Description of dataset

Description about the dataset

We can see the count of each column along with their mean value, standard deviation, minimum and maximum values.

Checking Missing Values

We will check if our data contains any missing values or not. Missing values can occur when no information is provided for one or more items or for a whole unit. We will use the isnull() method.

python code for missing value
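As a sketch, isnull() marks each missing cell and sum() then counts them per column. The tiny frame below deliberately contains missing values, unlike the Iris data, so that the counts are non-zero.

```python
import pandas as pd

# Made-up rows with Iris-style column names; None marks a missing value.
iris = pd.DataFrame({
    "SepalLengthCm": [5.1, None, 7.0],
    "Species": ["Iris-setosa", "Iris-setosa", None],
})

missing_per_column = iris.isnull().sum()
print(missing_per_column)
```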

Missing values in the dataset

We can see that no column has any missing value.

Checking Duplicates

Let’s see if our dataset contains any duplicates or not. Pandas drop_duplicates() method helps in removing duplicates from the data frame.

Pandas function for dropping duplicates

Dropping duplicate value in the dataset

We can see that there are only three unique species. Let’s see if the dataset is balanced or not i.e. all the species contain equal amounts of rows or not. We will use the Series.value_counts() function. This function returns a Series containing counts of unique values.

Python code for value counts in the column
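The two checks described above can be sketched on a small stand-in frame with Iris-style column names (the rows are made up): drop_duplicates() with subset keeps one row per species, and value_counts() shows how many rows each species has.

```python
import pandas as pd

iris = pd.DataFrame({
    "SepalLengthCm": [5.1, 4.9, 7.0, 6.4, 6.3, 5.8],
    "Species": ["Iris-setosa", "Iris-setosa", "Iris-versicolor",
                "Iris-versicolor", "Iris-virginica", "Iris-virginica"],
})

one_per_species = iris.drop_duplicates(subset="Species")   # first row of each species
counts = iris["Species"].value_counts()                    # rows per species
print(one_per_species, counts, sep="\n\n")
```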

value count in the dataset

We can see that all the species contain an equal amount of rows, so we should not delete any entries.

Relation between variables

We will see the relationship between the sepal length and sepal width and also between petal length and petal width.

Comparing Sepal Length and Sepal Width

From the above plot, we can infer that –

- Species Setosa has smaller sepal lengths but larger sepal widths.

- Versicolor Species lies in the middle of the other two species in terms of sepal length and width

- Species Virginica has larger sepal lengths but smaller sepal widths.

Comparing Petal Length and Petal Width

Scatter plot of petal length and petal width

- The species Setosa has smaller petal lengths and widths.

- Versicolor Species lies in the middle of the other two species in terms of petal length and width

- Species Virginica has the largest petal lengths and widths.

Let’s plot all the column’s relationships using a pairplot. It can be used for multivariate analysis.

Python code for pairplot

Pairplot for the dataset

We can see many types of relationships from this plot. For example, the species Setosa has the smallest petal widths and lengths. It also has the smallest sepal lengths but larger sepal widths. Similar information can be gathered about the other species.

Handling Correlation

Pandas dataframe.corr() is used to find the pairwise correlation of all columns in the dataframe. Any NA values are automatically excluded. Non-numeric columns cannot be correlated: older pandas versions ignore them silently, while recent versions require numeric_only=True to skip them.

correlation between columns in the dataset

The heatmap is a data visualization technique that is used to analyze the dataset as colors in two dimensions. Basically, it shows a correlation between all numerical variables in the dataset. In simpler terms, we can plot the above-found correlation using the heatmaps.

python code for heatmap

Heatmap for correlation in the dataset

From the above graph, we can see that –

- Petal width and petal length have high correlations.

- Petal length and sepal length have good correlations.

- Petal Width and Sepal length have good correlations.

Handling Outliers

An outlier is a data item or object that deviates significantly from the rest of the (so-called normal) objects. Outliers can be caused by measurement or execution errors. The analysis for outlier detection is referred to as outlier mining. There are many ways to detect outliers, and removing one is the same as removing any other data item from a Pandas dataframe.

Let’s consider the iris dataset and let’s plot the boxplot for the SepalWidthCm column.

python code for Boxplot

Boxplot for sepalwidth column

In the above graph, the values above 4 and below 2 are acting as outliers.

Removing Outliers

To remove outliers, follow the same process as removing any entry from the dataset by its position. Every detection method mentioned above ends with a list of the data items that satisfy that method’s definition of an outlier, and those are the items to drop.

We will detect the outliers using IQR and then we will remove them. We will also draw the boxplot to see if the outliers are removed or not.
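A minimal sketch of IQR-based removal follows, assuming the common 1.5 × IQR fences and a made-up SepalWidthCm column in place of the real data.

```python
import pandas as pd

df = pd.DataFrame({"SepalWidthCm": [2.0, 2.9, 3.0, 3.0, 3.1, 3.2, 3.4, 3.5, 4.4]})

q1 = df["SepalWidthCm"].quantile(0.25)
q3 = df["SepalWidthCm"].quantile(0.75)
iqr = q3 - q1

lower = q1 - 1.5 * iqr   # anything below this fence counts as an outlier
upper = q3 + 1.5 * iqr   # anything above this fence counts as an outlier

cleaned = df[df["SepalWidthCm"].between(lower, upper)]
print(len(df), "rows before,", len(cleaned), "rows after")
```

Drawing a box plot of cleaned afterwards is a quick way to confirm that the flagged points are gone.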

boxplot using seaborn library

For more information about EDA, refer to the tutorials below:

- What is Exploratory Data Analysis?

- Exploratory Data Analysis in Python | Set 1

- Exploratory Data Analysis in Python | Set 2

- Exploratory Data Analysis on Iris Dataset

Using pandas and Python to Explore Your Dataset

Table of Contents

- Setting Up Your Environment

- Using the pandas Python Library

- Displaying Data Types

- Showing Basics Statistics

- Exploring Your Dataset

- Understanding Series Objects

- Understanding DataFrame Objects

- Using the Indexing Operator

- Using .loc and .iloc

- Querying Your Dataset

- Grouping and Aggregating Your Data

- Manipulating Columns

- Specifying Data Types

- Missing Values

- Invalid Values

- Inconsistent Values

- Combining Multiple Datasets

- Visualizing Your pandas DataFrame

Do you have a large dataset that’s full of interesting insights, but you’re not sure where to start exploring it? Has your boss asked you to generate some statistics from it, but they’re not so easy to extract? These are precisely the use cases where pandas and Python can help you! With these tools, you’ll be able to slice a large dataset down into manageable parts and glean insight from that information.

In this tutorial, you’ll learn how to:

- Calculate metrics about your data

- Perform basic queries and aggregations

- Discover and handle incorrect data, inconsistencies, and missing values

- Visualize your data with plots

You’ll also learn about the differences between the main data structures that pandas and Python use. To follow along, you can get all of the example code in this tutorial at the link below:

Get Jupyter Notebook: Click here to get the Jupyter Notebook you’ll use to explore data with Pandas in this tutorial.

There are a few things you’ll need to get started with this tutorial. First is a familiarity with Python’s built-in data structures, especially lists and dictionaries . For more information, check out Lists and Tuples in Python and Dictionaries in Python .

The second thing you’ll need is a working Python environment . You can follow along in any terminal that has Python 3 installed. If you want to see nicer output, especially for the large NBA dataset you’ll be working with, then you might want to run the examples in a Jupyter notebook .

Note: If you don’t have Python installed at all, then check out Python 3 Installation & Setup Guide . You can also follow along online in a try-out Jupyter notebook .

The last thing you’ll need is pandas and other Python libraries, which you can install with pip :

You can also use the Conda package manager:

If you’re using the Anaconda distribution, then you’re good to go! Anaconda already comes with the pandas Python library installed.

Note: Have you heard that there are multiple package managers in the Python world and are somewhat confused about which one to pick? pip and conda are both excellent choices, and they each have their advantages.

If you’re going to use Python mainly for data science work, then conda is perhaps the better choice. In the conda ecosystem, you have two main alternatives:

- If you want to get a stable data science environment up and running quickly, and you don’t mind downloading 500 MB of data, then check out the Anaconda distribution .

- If you prefer a more minimalist setup, then check out the section on installing Miniconda in Setting Up Python for Machine Learning on Windows .

The examples in this tutorial have been tested with Python 3.7 and pandas 0.25.0, but they should also work in older versions. You can get all the code examples you’ll see in this tutorial in a Jupyter notebook by clicking the link below:

Let’s get started!

Now that you’ve installed pandas, it’s time to have a look at a dataset. In this tutorial, you’ll analyze NBA results provided by FiveThirtyEight in a 17MB CSV file . Create a script download_nba_all_elo.py to download the data:
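A download script along these lines might look like the sketch below. It uses only the standard library, and DATA_URL is a placeholder (an assumption): replace it with the actual location of the FiveThirtyEight CSV before running.

```python
import shutil
import urllib.request

# Hypothetical placeholder: substitute the real URL of the CSV file.
DATA_URL = "https://example.com/nba_all_elo.csv"
TARGET = "nba_all_elo.csv"


def download(url: str = DATA_URL, target: str = TARGET) -> str:
    """Stream the file at `url` into `target` and return the target path."""
    with urllib.request.urlopen(url) as response, open(target, "wb") as out:
        shutil.copyfileobj(response, out)
    return target


# Call download() once the placeholder URL has been replaced.
```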

When you execute the script, it will save the file nba_all_elo.csv in your current working directory.

Note: You could also use your web browser to download the CSV file.

However, having a download script has several advantages:

- You can tell where you got your data.

- You can repeat the download anytime! That’s especially handy if the data is often refreshed.

- You don’t need to share the 17MB CSV file with your co-workers. Usually, it’s enough to share the download script.

Now you can use the pandas Python library to take a look at your data:

Here, you follow the convention of importing pandas in Python with the pd alias. Then, you use .read_csv() to read in your dataset and store it as a DataFrame object in the variable nba .

Note: Is your data not in CSV format? No worries! The pandas Python library provides several similar functions like read_json() , read_html() , and read_sql_table() . To learn how to work with these file formats, check out Reading and Writing Files With pandas or consult the docs .

You can see how much data nba contains:

You use the Python built-in function len() to determine the number of rows. You also use the .shape attribute of the DataFrame to see its dimensionality . The result is a tuple containing the number of rows and columns.
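The same two checks can be tried on a tiny stand-in frame. The column names follow the dataset, but the rows are made up, so the numbers differ from the real file.

```python
import pandas as pd

# Stand-in for the real dataset (made-up rows).
nba = pd.DataFrame({
    "team_id": ["BOS", "LAL", "MNL"],
    "fran_id": ["Celtics", "Lakers", "Lakers"],
    "pts": [66, 81, 104],
})

n_rows = len(nba)      # number of rows
dims = nba.shape       # (rows, columns)
print(n_rows, dims)
```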

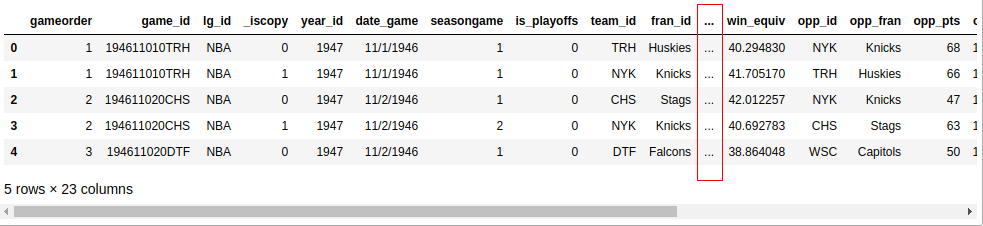

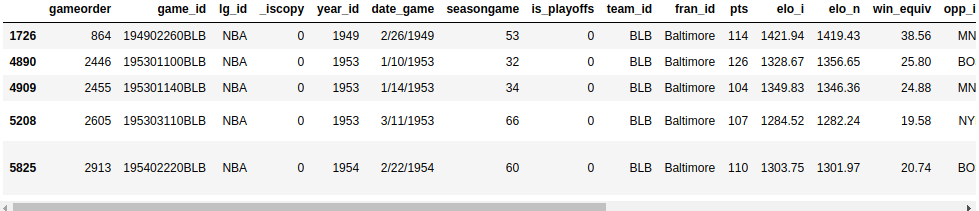

Now you know that there are 126,314 rows and 23 columns in your dataset. But how can you be sure the dataset really contains basketball stats? You can have a look at the first five rows with .head() :

If you’re following along with a Jupyter notebook, then you’ll see a result like this:

Unless your screen is quite large, your output probably won’t display all 23 columns. Somewhere in the middle, you’ll see a column of ellipses ( ... ) indicating the missing data. If you’re working in a terminal, then that’s probably more readable than wrapping long rows. However, Jupyter notebooks will allow you to scroll. You can configure pandas to display all 23 columns like this:

While it’s practical to see all the columns, you probably won’t need six decimal places! Change it to two:
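Both settings can be changed with pd.set_option(). The sketch below uses the option names display.max_columns and display.precision as documented by pandas.

```python
import pandas as pd

pd.set_option("display.max_columns", None)   # None means "no limit": show every column
pd.set_option("display.precision", 2)        # show floats with two decimal places

print(pd.get_option("display.max_columns"), pd.get_option("display.precision"))
```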

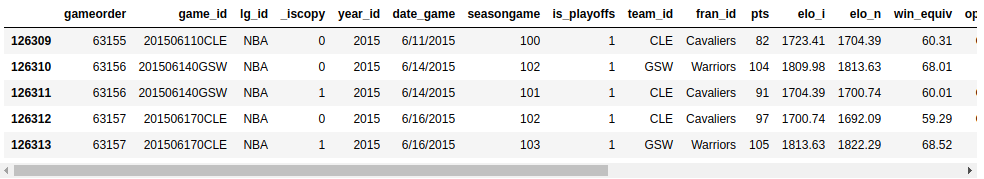

To verify that you’ve changed the options successfully, you can execute .head() again, or you can display the last five rows with .tail() instead:

Now, you should see all the columns, and your data should show two decimal places:

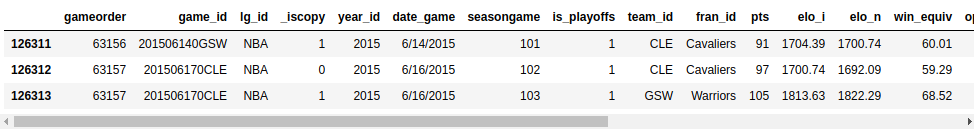

You can discover some further possibilities of .head() and .tail() with a small exercise. Can you print the last three lines of your DataFrame ? Expand the code block below to see the solution:

Solution: head & tail Show/Hide

Here’s how to print the last three lines of nba :
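Both .head() and .tail() accept the number of rows to return. A sketch on a made-up stand-in frame:

```python
import pandas as pd

# Stand-in for the real dataset (made-up rows).
nba = pd.DataFrame({
    "team_id": ["BOS", "LAL", "MNL", "BOS", "LAL"],
    "pts": [66, 81, 104, 92, 77],
})

last_three = nba.tail(3)   # the last three rows instead of the default five
print(last_three)
```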

Your output should look something like this:

You can see the last three lines of your dataset with the options you’ve set above.

Similar to the Python standard library, functions in pandas also come with several optional parameters. Whenever you bump into an example that looks relevant but is slightly different from your use case, check out the official documentation . The chances are good that you’ll find a solution by tweaking some optional parameters!

Getting to Know Your Data

You’ve imported a CSV file with the pandas Python library and had a first look at the contents of your dataset. So far, you’ve only seen the size of your dataset and its first and last few rows. Next, you’ll learn how to examine your data more systematically.

The first step in getting to know your data is to discover the different data types it contains. While you can put anything into a list, the columns of a DataFrame contain values of a specific data type. When you compare pandas and Python data structures, you’ll see that this behavior makes pandas much faster!

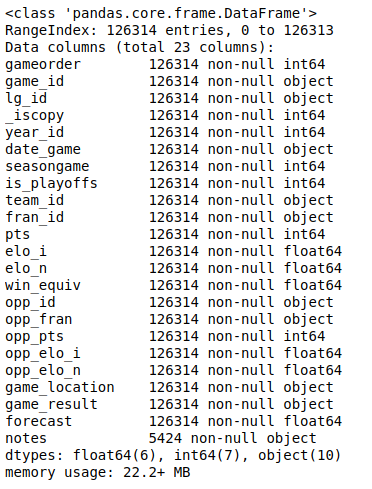

You can display all columns and their data types with .info() :

This will produce the following output:

You’ll see a list of all the columns in your dataset and the type of data each column contains. Here, you can see the data types int64 , float64 , and object . pandas uses the NumPy library to work with these types. Later, you’ll meet the more complex categorical data type, which the pandas Python library implements itself.

The object data type is a special one. According to the pandas Cookbook , the object data type is “a catch-all for columns that pandas doesn’t recognize as any other specific type.” In practice, it often means that all of the values in the column are strings.

Although you can store arbitrary Python objects in the object data type, you should be aware of the drawbacks to doing so. Strange values in an object column can harm pandas’ performance and its interoperability with other libraries. For more information, check out the official getting started guide .

Now that you’ve seen what data types are in your dataset, it’s time to get an overview of the values each column contains. You can do this with .describe() :

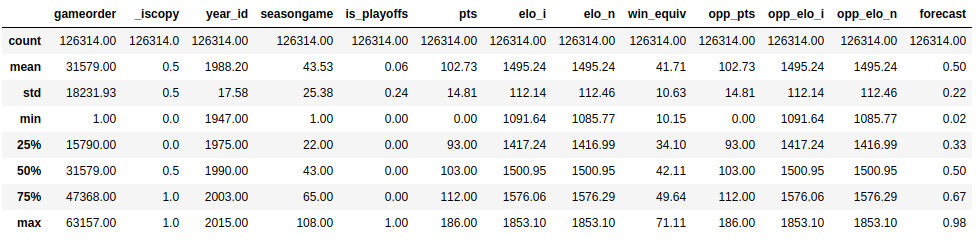

This function shows you some basic descriptive statistics for all numeric columns:

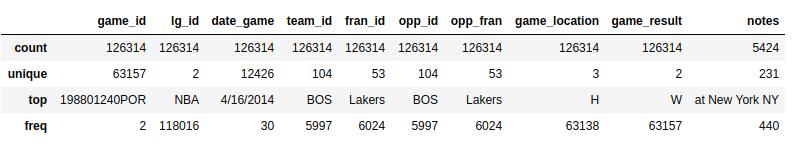

.describe() only analyzes numeric columns by default, but you can provide other data types if you use the include parameter:
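As a sketch on made-up stand-in data, the text columns below are created with the object data type explicitly so that the example behaves the same across pandas versions.

```python
import pandas as pd

# Stand-in for the real dataset (made-up rows); text columns stored as object.
nba = pd.DataFrame({
    "team_id": pd.Series(["BOS", "BOS", "LAL"], dtype=object),
    "fran_id": pd.Series(["Celtics", "Celtics", "Lakers"], dtype=object),
    "pts": [66, 81, 104],
})

numeric_summary = nba.describe()               # numeric columns only
text_summary = nba.describe(include=object)    # count, unique, top, freq
print(numeric_summary, text_summary, sep="\n\n")
```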

.describe() won’t try to calculate a mean or a standard deviation for the object columns, since they mostly include text strings. However, it will still display some descriptive statistics:

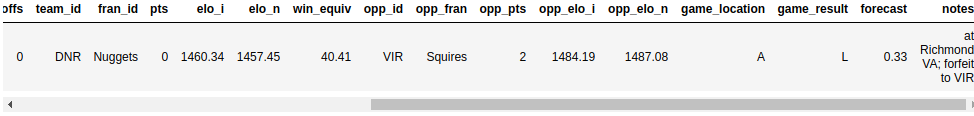

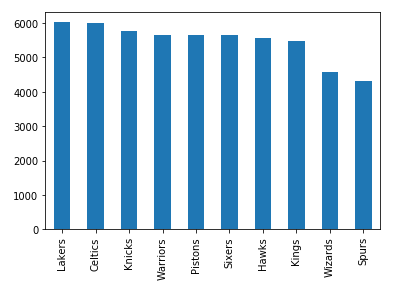

Take a look at the team_id and fran_id columns. Your dataset contains 104 different team IDs, but only 53 different franchise IDs. Furthermore, the most frequent team ID is BOS, but the most frequent franchise ID is Lakers. How is that possible? You’ll need to explore your dataset a bit more to answer this question.

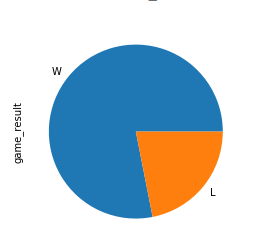

Exploratory data analysis can help you answer questions about your dataset. For example, you can examine how often specific values occur in a column:

It seems that a team named "Lakers" played 6024 games, but only 5078 of those were played by the Los Angeles Lakers. Find out who the other "Lakers" team is:

Indeed, the Minneapolis Lakers ( "MNL" ) played 946 games. You can even find out when they played those games. For that, you’ll first define a column that converts the value of date_game to the datetime data type. Then you can use the min and max aggregate functions, to find the first and last games of Minneapolis Lakers:
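The chain of steps described above can be sketched on a made-up stand-in frame. The column names follow the dataset, the new column name date_played and the date format are assumptions, and the counts and dates are not the real ones.

```python
import pandas as pd

# Stand-in for the real dataset (made-up rows).
nba = pd.DataFrame({
    "fran_id": ["Lakers", "Lakers", "Lakers", "Lakers", "Celtics"],
    "team_id": ["MNL", "MNL", "LAL", "LAL", "BOS"],
    "date_game": ["11/4/1948", "3/26/1960", "10/19/1960", "10/22/1960", "11/5/1946"],
})

franchise_counts = nba["fran_id"].value_counts()
lakers_teams = nba.loc[nba["fran_id"] == "Lakers", "team_id"].value_counts()

# Convert the text dates to datetime, then aggregate for one team.
nba["date_played"] = pd.to_datetime(nba["date_game"], format="%m/%d/%Y")
mnl_dates = nba.loc[nba["team_id"] == "MNL", "date_played"]
first_game, last_game = mnl_dates.min(), mnl_dates.max()
print(franchise_counts, lakers_teams, first_game, last_game, sep="\n")
```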

It looks like the Minneapolis Lakers played between the years of 1948 and 1960. That explains why you might not recognize this team!

You’ve also found out why the Boston Celtics team "BOS" played the most games in the dataset. Now analyze their history a little, too. Find out how many points the Boston Celtics have scored during all matches contained in this dataset, then check your answer against the solution below:

Solution: DataFrame intro

Similar to the .min() and .max() aggregate functions, you can also use .sum() :
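The same filter-then-aggregate pattern might look like this on a few invented rows (the point totals here are not the real ones):

```python
import pandas as pd

# Made-up rows standing in for the nba DataFrame.
games = pd.DataFrame(
    {
        "team_id": ["BOS", "LAL", "BOS", "MNL"],
        "pts": [101, 95, 110, 88],
    }
)

# Select the points of one team, then add them up.
boston_points = games.loc[games["team_id"] == "BOS", "pts"].sum()

print(boston_points)
```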

The Boston Celtics scored a total of 626,484 points.

You’ve got a taste for the capabilities of a pandas DataFrame . In the following sections, you’ll expand on the techniques you’ve just used, but first, you’ll zoom in and learn how this powerful data structure works.

Getting to Know pandas’ Data Structures

While a DataFrame provides functions that can feel quite intuitive, the underlying concepts are a bit trickier to understand. For this reason, you’ll set aside the vast NBA DataFrame and build some smaller pandas objects from scratch.

Python’s most basic data structure is the list , which is also a good starting point for getting to know pandas.Series objects. Create a new Series object based on a list:
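A minimal sketch, using the list of values that the following paragraph refers to:

```python
import pandas as pd

# Wrap a plain Python list in a Series.
revenues = pd.Series([5555, 7000, 1980])

print(revenues)
```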

You’ve used the list [5555, 7000, 1980] to create a Series object called revenues . A Series object wraps two components:

- A sequence of values

- A sequence of identifiers , which is the index

You can access these components with .values and .index , respectively:
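For example, assuming the revenues Series built from the list [5555, 7000, 1980]:

```python
import pandas as pd

revenues = pd.Series([5555, 7000, 1980])

values = revenues.values  # the data, as a NumPy array
index = revenues.index    # the identifiers, here a default RangeIndex

print(values)
print(index)
```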

revenues.values returns the values in the Series , whereas revenues.index returns the positional index.

Note: If you’re familiar with NumPy , then it might be interesting for you to note that the values of a Series object are actually n-dimensional arrays:
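A quick way to check this yourself, assuming the same revenues Series:

```python
import numpy as np
import pandas as pd

revenues = pd.Series([5555, 7000, 1980])

# .values hands back a NumPy ndarray (one-dimensional for a Series).
values_type = type(revenues.values)

print(values_type)
```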

If you’re not familiar with NumPy, then there’s no need to worry! You can explore the ins and outs of your dataset with the pandas Python library alone. However, if you’re curious about what pandas does behind the scenes, then check out Look Ma, No for Loops: Array Programming With NumPy .

While pandas builds on NumPy, a significant difference is in their indexing . Just like a NumPy array, a pandas Series also has an integer index that’s implicitly defined. This implicit index indicates the element’s position in the Series .

However, a Series can also have an arbitrary type of index. You can think of this explicit index as labels for a specific row:
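A sketch of such a Series follows. The name city_revenues comes from the text below, while the city order and the revenue figures are assumptions chosen for illustration:

```python
import pandas as pd

# Pass explicit labels through the index parameter.
city_revenues = pd.Series(
    [4200, 8000, 6500],
    index=["Amsterdam", "Toronto", "Tokyo"],
)

print(city_revenues)
```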

Here, the index is a list of city names represented by strings. You may have noticed that Python dictionaries use string indices as well, and this is a handy analogy to keep in mind! You can use the code blocks above to distinguish between two types of Series :

- revenues : This Series behaves like a Python list because it only has a positional index.

- city_revenues : This Series acts like a Python dictionary because it features both a positional and a label index.

Here’s how to construct a Series with a label index from a Python dictionary:
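For instance, with made-up employee counts (the name city_employee_count is used later in the text, the numbers are assumptions):

```python
import pandas as pd

# Keys become index labels, values become the Series values.
city_employee_count = pd.Series({"Amsterdam": 5, "Tokyo": 8})

print(city_employee_count)
```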

The dictionary keys become the index, and the dictionary values are the Series values.

Just like dictionaries, Series also support .keys() and the in keyword :
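A short sketch, again assuming a city_employee_count Series with invented values:

```python
import pandas as pd

city_employee_count = pd.Series({"Amsterdam": 5, "Tokyo": 8})

# .keys() returns the index labels.
keys = city_employee_count.keys()

# The in keyword tests membership in the index, not in the values.
has_tokyo = "Tokyo" in city_employee_count
has_new_york = "New York" in city_employee_count

print(keys, has_tokyo, has_new_york)
```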

You can use these methods to answer questions about your dataset quickly.

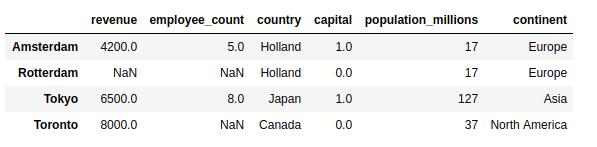

While a Series is a pretty powerful data structure, it has its limitations. For example, you can only store one attribute per key. As you’ve seen with the nba dataset, which features 23 columns, the pandas Python library has more to offer with its DataFrame . This data structure is a sequence of Series objects that share the same index.

If you’ve followed along with the Series examples, then you should already have two Series objects with cities as keys:

- city_revenues

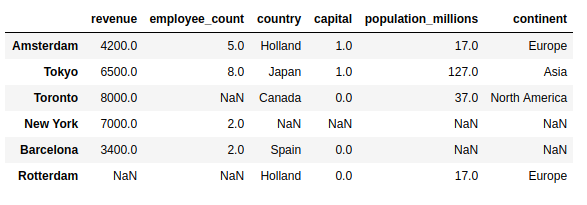

- city_employee_count

You can combine these objects into a DataFrame by providing a dictionary in the constructor. The dictionary keys will become the column names, and the values should contain the Series objects:
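A minimal sketch of that constructor call. The column names revenue and employee_count match the text, while the numbers in both Series are assumptions:

```python
import pandas as pd

# Two Series with overlapping, but not identical, city labels.
city_revenues = pd.Series(
    [4200, 8000, 6500],
    index=["Amsterdam", "Toronto", "Tokyo"],
)
city_employee_count = pd.Series({"Amsterdam": 5, "Tokyo": 8})

# Dictionary keys become column names; rows are aligned by label.
city_data = pd.DataFrame(
    {"revenue": city_revenues, "employee_count": city_employee_count}
)

print(city_data)
```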

Note how pandas replaced the missing employee_count value for Toronto with NaN .

The new DataFrame index is the union of the two Series indices:
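You can inspect it through .index. The sketch rebuilds city_data from two Series with assumed values:

```python
import pandas as pd

city_revenues = pd.Series(
    [4200, 8000, 6500],
    index=["Amsterdam", "Toronto", "Tokyo"],
)
city_employee_count = pd.Series({"Amsterdam": 5, "Tokyo": 8})
city_data = pd.DataFrame(
    {"revenue": city_revenues, "employee_count": city_employee_count}
)

# Every label from either Series appears exactly once.
row_labels = city_data.index

print(row_labels)
```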

Just like a Series , a DataFrame also stores its values in a NumPy array:

You can also refer to the two dimensions of a DataFrame as axes:
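The sketch below shows both .values and .axes on a small city_data frame built from assumed numbers:

```python
import pandas as pd

city_revenues = pd.Series(
    [4200, 8000, 6500],
    index=["Amsterdam", "Toronto", "Tokyo"],
)
city_employee_count = pd.Series({"Amsterdam": 5, "Tokyo": 8})
city_data = pd.DataFrame(
    {"revenue": city_revenues, "employee_count": city_employee_count}
)

values = city_data.values  # two-dimensional NumPy array
axes = city_data.axes      # list of two Index objects

print(values.shape)
print(axes[0])  # row labels
print(axes[1])  # column labels
```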

The axis marked with 0 is the row index , and the axis marked with 1 is the column index . This terminology is important to know because you’ll encounter several DataFrame methods that accept an axis parameter.

A DataFrame is also a dictionary-like data structure, so it also supports .keys() and the in keyword. However, for a DataFrame these don’t relate to the index, but to the columns:
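To illustrate, here is a sketch with a small city_data frame whose numbers are invented:

```python
import pandas as pd

city_data = pd.DataFrame(
    {"revenue": [4200, 6500, 8000], "employee_count": [5.0, 8.0, None]},
    index=["Amsterdam", "Tokyo", "Toronto"],
)

column_keys = city_data.keys()

# A row label is not a key of the DataFrame, but a column name is.
has_amsterdam = "Amsterdam" in city_data
has_revenue = "revenue" in city_data

print(column_keys, has_amsterdam, has_revenue)
```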

You can see these concepts in action with the bigger NBA dataset. Does it contain a column called "points", or was it called "pts"? To answer this question, display the index and the axes of the nba dataset, then check your answer against the solution below:

Solution: NBA index

Because you didn’t specify an index column when you read in the CSV file, pandas has assigned a RangeIndex to the DataFrame :

nba , like all DataFrame objects, has two axes:

You can check the existence of a column with .keys() :

The column is called "pts" , not "points" .
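The three steps of this solution can be sketched on a tiny CSV held in memory. The rows are invented, and io.StringIO stands in for the real file:

```python
import io

import pandas as pd

# A made-up CSV with a few of the column names used in this tutorial.
csv_text = "team_id,fran_id,pts\nBOS,Celtics,101\nLAL,Lakers,95\nMNL,Lakers,88\n"

# No index column is specified, so pandas assigns a RangeIndex.
games = pd.read_csv(io.StringIO(csv_text))

row_index = games.index
axes = games.axes

# Check for a column name through .keys().
has_points = "points" in games.keys()
has_pts = "pts" in games.keys()

print(row_index)
print(axes)
print(has_points, has_pts)
```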

As you use these methods to answer questions about your dataset, be sure to keep in mind whether you’re working with a Series or a DataFrame so that your interpretation is accurate.

Accessing Series Elements

In the section above, you’ve created a pandas Series based on a Python list and compared the two data structures. You’ve seen how a Series object is similar to lists and dictionaries in several ways. A further similarity is that you can use the indexing operator ( [] ) for Series as well.

You’ll also learn how to use two pandas-specific access methods:

- .loc

- .iloc

You’ll see that these data access methods can be much more readable than the indexing operator.

Recall that a Series has two indices:

- A positional or implicit index , which is always a RangeIndex

- A label or explicit index , which can contain any hashable objects

Next, revisit the city_revenues object:

You can conveniently access the values in a Series with both the label and positional indices:

You can also use negative indices and slices, just like you would for a list:
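A hedged sketch of these lookups, with assumed values for city_revenues. Newer pandas releases have deprecated passing integer positions to [] on a Series that has non-integer labels, so the positional lookups here go through .iloc, while the label lookups use the indexing operator directly:

```python
import pandas as pd

city_revenues = pd.Series(
    [4200, 8000, 6500],
    index=["Amsterdam", "Toronto", "Tokyo"],
)

by_label = city_revenues["Toronto"]       # label lookup
by_position = city_revenues.iloc[1]       # same element, by position

last = city_revenues.iloc[-1]             # negative position, like a list
from_second = city_revenues.iloc[1:]      # positional slice
from_toronto = city_revenues["Toronto":]  # label slice

print(by_label, by_position, last)
print(from_second)
print(from_toronto)
```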

If you want to learn more about the possibilities of the indexing operator, then check out Lists and Tuples in Python .

The indexing operator ( [] ) is convenient, but there’s a caveat. What if the labels are also numbers? Say you have to work with a Series object like this:
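A sketch of such a Series follows. The colors and the labels 1, 3, and 8 follow the discussion in this section, while the labels 2 and 5 are assumptions:

```python
import pandas as pd

# Integer labels that do not match the positions 0 to 4.
colors = pd.Series(
    ["red", "purple", "blue", "green", "yellow"],
    index=[1, 2, 3, 5, 8],
)

print(colors)
```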

What will colors[1] return? For a positional index, colors[1] is "purple" . However, if you go by the label index, then colors[1] is referring to "red" .

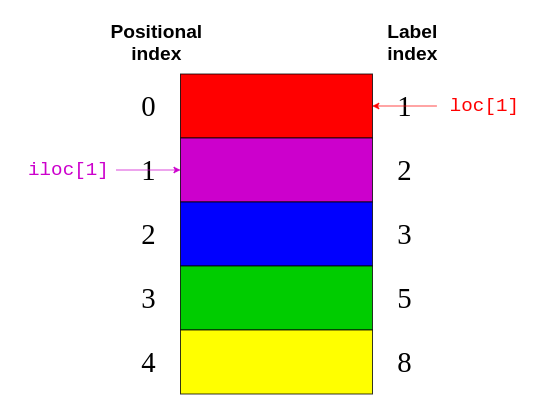

The good news is, you don’t have to figure it out! Instead, to avoid confusion, the pandas Python library provides two data access methods :

- .loc refers to the label index .

- .iloc refers to the positional index .

These data access methods are much more readable:
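For example, assuming a colors Series with integer labels (the labels 2 and 5 are assumptions):

```python
import pandas as pd

colors = pd.Series(
    ["red", "purple", "blue", "green", "yellow"],
    index=[1, 2, 3, 5, 8],
)

by_label = colors.loc[1]      # the element labeled 1
by_position = colors.iloc[1]  # the element at position 1

print(by_label, by_position)
```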

colors.loc[1] returned "red", the element with the label 1. colors.iloc[1] returned "purple", the element with the positional index 1.

The following figure shows which elements .loc and .iloc refer to:

Again, .loc points to the label index on the right-hand side of the image. Meanwhile, .iloc points to the positional index on the left-hand side of the picture.

It’s easier to keep in mind the distinction between .loc and .iloc than it is to figure out what the indexing operator will return. Even if you’re familiar with all the quirks of the indexing operator, it can be dangerous to assume that everybody who reads your code has internalized those rules as well!

Note: In addition to being confusing for Series with numeric labels, the Python indexing operator has some performance drawbacks. It’s perfectly okay to use it in interactive sessions for ad-hoc analysis, but for production code, the .loc and .iloc data access methods are preferable. For further details, check out the pandas User Guide section on indexing and selecting data.

.loc and .iloc also support the features you would expect from indexing operators, like slicing. However, these data access methods have an important difference. While .iloc excludes the closing element, .loc includes it. Take a look at this code block:
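A minimal sketch of positional slicing, assuming the colors Series with labels 1, 2, 3, 5, and 8:

```python
import pandas as pd

colors = pd.Series(
    ["red", "purple", "blue", "green", "yellow"],
    index=[1, 2, 3, 5, 8],
)

# Positions 1 and 2 are returned; position 3 is left out.
positional_slice = colors.iloc[1:3]

print(positional_slice)
```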

If you compare this code with the image above, then you can see that colors.iloc[1:3] returns the elements with the positional indices of 1 and 2 . The closing item "green" with a positional index of 3 is excluded.

On the other hand, .loc includes the closing element:
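The label-based counterpart, sketched on the same assumed colors Series:

```python
import pandas as pd

colors = pd.Series(
    ["red", "purple", "blue", "green", "yellow"],
    index=[1, 2, 3, 5, 8],
)

# Labels 3 through 8 are returned, including the closing label 8.
label_slice = colors.loc[3:8]

print(label_slice)
```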

This code block says to return all elements with a label index between 3 and 8 . Here, the closing item "yellow" has a label index of 8 and is included in the output.

You can also pass a negative positional index to .iloc :
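For instance, on the same assumed colors Series:

```python
import pandas as pd

colors = pd.Series(
    ["red", "purple", "blue", "green", "yellow"],
    index=[1, 2, 3, 5, 8],
)

# Negative positions count backward from the end.
second_to_last = colors.iloc[-2]

print(second_to_last)
```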

Counting from the end of the Series, this returns the second-to-last element.

Note: There used to be an .ix indexer, which tried to guess whether it should apply positional or label indexing depending on the data type of the index. Because it caused a lot of confusion, it was deprecated in pandas version 0.20.0 and removed in version 1.0.

Don’t use .ix for indexing. Instead, always use .loc for label indexing and .iloc for positional indexing. For further details, check out the pandas User Guide.

You can use the code blocks above to distinguish between two Series behaviors:

- You can use .iloc on a Series similar to using [] on a list .

- You can use .loc on a Series similar to using [] on a dictionary .

Be sure to keep these distinctions in mind as you access elements of your Series objects.

Accessing DataFrame Elements

Since a DataFrame consists of Series objects, you can use the very same tools to access its elements. The crucial difference is the additional dimension of the DataFrame . You’ll use the indexing operator for the columns and the access methods .loc and .iloc on the rows.

If you think of a DataFrame as a dictionary whose values are Series , then it makes sense that you can access its columns with the indexing operator:

Here, you use the indexing operator to select the column labeled "revenue" .

If the column name is a string, then you can use attribute-style accessing with dot notation as well:
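Both styles of column access can be sketched on a small city_data frame with invented numbers:

```python
import pandas as pd

city_data = pd.DataFrame(
    {"revenue": [4200, 6500, 8000], "employee_count": [5.0, 8.0, None]},
    index=["Amsterdam", "Tokyo", "Toronto"],
)

# Indexing operator: works for any column label.
revenue_by_key = city_data["revenue"]

# Dot notation: works when the name is a valid Python identifier
# and does not clash with an existing DataFrame attribute.
revenue_by_attribute = city_data.revenue

print(revenue_by_key)
```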

city_data["revenue"] and city_data.revenue return the same output.

There’s one situation where accessing DataFrame elements with dot notation may not work or may lead to surprises. This is when a column name coincides with a DataFrame attribute or method name:
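A sketch of that clash follows. The frame is called toys as in the next paragraph, and its rows are made up:

```python
import pandas as pd

# A column named "shape" collides with the DataFrame.shape attribute.
toys = pd.DataFrame(
    [
        {"name": "ball", "shape": "sphere"},
        {"name": "Rubik's cube", "shape": "cube"},
    ]
)

shape_column = toys["shape"]  # the column, as expected
shape_attribute = toys.shape  # the (rows, columns) tuple instead

print(shape_column)
print(shape_attribute)
```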

The indexing operation toys["shape"] returns the correct data, but the attribute-style operation toys.shape still returns the shape of the DataFrame . You should only use attribute-style accessing in interactive sessions or for read operations. You shouldn’t use it for production code or for manipulating data (such as defining new columns).

Similar to Series , a DataFrame also provides .loc and .iloc data access methods . Remember, .loc uses the label and .iloc the positional index:
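The three selections discussed next can be sketched as follows. The frame is built directly with the row order Amsterdam, Tokyo, Toronto, and its numbers are assumptions:

```python
import pandas as pd

city_data = pd.DataFrame(
    {"revenue": [4200, 6500, 8000], "employee_count": [5.0, 8.0, None]},
    index=["Amsterdam", "Tokyo", "Toronto"],
)

amsterdam = city_data.loc["Amsterdam"]               # one row, by label
tokyo_to_toronto = city_data.loc["Tokyo":"Toronto"]  # label slice, inclusive
second_row = city_data.iloc[1]                       # one row, by position

print(amsterdam)
print(tokyo_to_toronto)
print(second_row)
```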

Each line of code selects a different row from city_data :

- city_data.loc["Amsterdam"] selects the row with the label index "Amsterdam" .

- city_data.loc["Tokyo": "Toronto"] selects the rows with label indices from "Tokyo" to "Toronto" . Remember, .loc is inclusive.

- city_data.iloc[1] selects the row with the positional index 1 , which is "Tokyo" .

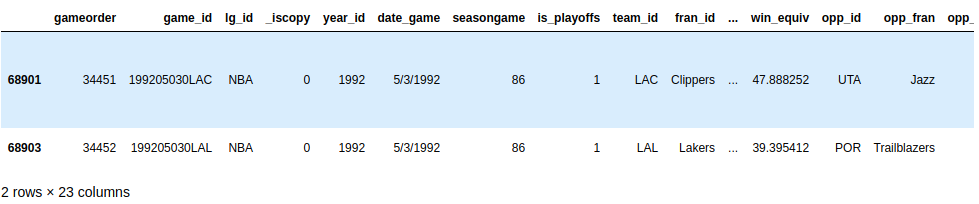

Alright, you’ve used .loc and .iloc on small data structures. Now, it’s time to practice with something bigger! Use a data access method to display the second-to-last row of the nba dataset. Then, check your answer against the solution below:

Solution: NBA accessing rows

The second-to-last row is the row with the positional index of -2 . You can display it with .iloc :
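Sketched on a three-row stand-in for nba with invented values:

```python
import pandas as pd

games = pd.DataFrame(
    {
        "team_id": ["BOS", "LAL", "MNL"],
        "pts": [101, 95, 88],
    }
)

# Position -2 is the second row counted from the end.
second_to_last = games.iloc[-2]

print(second_to_last)
```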

You’ll see the output as a Series object.

For a DataFrame , the data access methods .loc and .iloc also accept a second parameter. While the first parameter selects rows based on the indices, the second parameter selects the columns. You can use these parameters together to select a subset of rows and columns from your DataFrame :
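A minimal sketch with a row slice and a single column, using a city_data frame whose numbers are assumptions:

```python
import pandas as pd

city_data = pd.DataFrame(
    {"revenue": [4200, 6500, 8000], "employee_count": [5.0, 8.0, None]},
    index=["Amsterdam", "Tokyo", "Toronto"],
)

# First parameter: rows from "Amsterdam" through "Tokyo".
# Second parameter: only the "revenue" column.
revenue_subset = city_data.loc["Amsterdam":"Tokyo", "revenue"]

print(revenue_subset)
```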

Note that you separate the parameters with a comma (,). The first parameter, "Amsterdam" : "Tokyo", says to select all rows between those two labels, inclusive. The second parameter comes after the comma and says to select the "revenue" column.

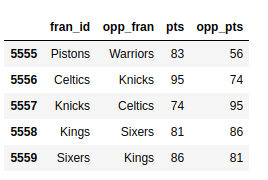

It’s time to see the same construct in action with the bigger nba dataset. Select all games between the labels 5555 and 5559. You’re only interested in the names of the teams and the scores, so select those elements as well. Then check your answer against the solution below:

Solution: NBA accessing a subset

First, define which rows you want to see, then list the relevant columns:
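One way this could look, on a made-up frame whose integer labels cover the requested range. The column names opp_fran, opp_pts, and game_location are assumptions about the dataset, as are all the values:

```python
import pandas as pd

# Made-up stand-in for nba with labels 5554 through 5560.
games = pd.DataFrame(
    {
        "fran_id": ["Celtics", "Lakers", "Knicks", "Celtics", "Lakers", "Knicks", "Celtics"],
        "opp_fran": ["Lakers", "Knicks", "Celtics", "Knicks", "Celtics", "Lakers", "Lakers"],
        "pts": [101, 95, 88, 110, 97, 102, 91],
        "opp_pts": [95, 90, 99, 104, 100, 98, 96],
        "game_location": ["H", "A", "H", "A", "H", "A", "H"],
    },
    index=range(5554, 5561),
)

# Rows by label (closing label included), columns by name.
subset = games.loc[5555:5559, ["fran_id", "opp_fran", "pts", "opp_pts"]]

print(subset)
```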

You use .loc for the label index and a comma ( , ) to separate your two parameters.

You should see a small part of your large dataset:

The output is much easier to read!