Is Using AI Tools the Same as Plagiarizing? A Legal Perspective

Is using AI to write plagiarism? Is AI-generated content original? Discover the truth about AI tools vs. plagiarism, and stay informed with legal and ethical insights.

Disclaimer: This article is for informational purposes only. Don’t use it in place of legal advice. If you have any concerns about using AI and plagiarism, consult an attorney.

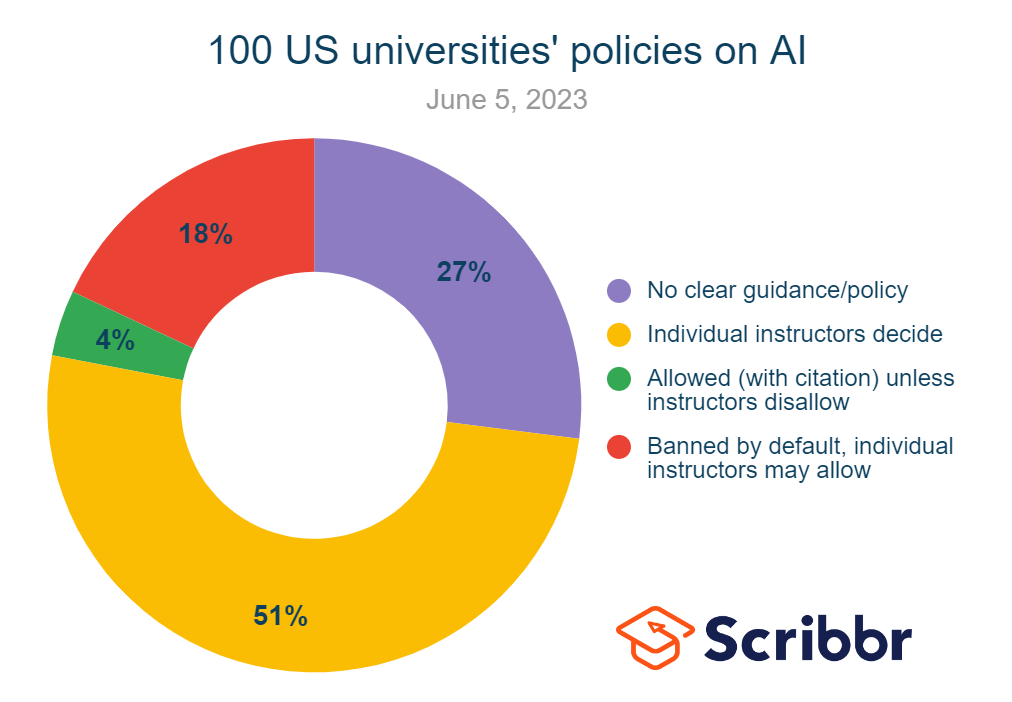

ChatGPT and other artificial intelligence (AI) writing tools have exploded onto the market, creating controversy around their use. So much so, in fact, that schools have been creating policies on navigating AI use in the classroom, content marketers are turning to AI detection tools to help ensure original, human-written content, and even authors are wondering if and how AI-generated content can have a place in their work.

While the exact reasons for AI-related concerns can vary by industry, there is one topic that comes up time and time again: plagiarism.

More specifically, these questions arise:

- Is using AI tools the same as plagiarizing content?

- Does AI show up as plagiarism?

- Can you count AI as original work?

Note: Since the widespread use of AI technology is still so new, the laws and ethics regarding AI plagiarism, and the question of ‘does AI plagiarize?’, are a little murky.

In this article, we’re going to explore what we know so far about the use of AI writing tools from a legal perspective to help you stay on the right side of the law.

What Is Plagiarism?

Simply put, plagiarism occurs when you try to pass off someone else’s work as your own. For example, a student copies another student’s essay (whether it’s just a section or the entire thing), puts their name on it, and then hands it in as their own original work. They don’t acknowledge the source material or author at all, as they want to keep the credit for themselves.

This is, of course, highly unethical, and the reason why schools and workplaces often have strict policies in place surrounding plagiarism. Here’s the thing: when determining if using AI is plagiarism, can you really apply this traditional definition of plagiarism to AI writing tools?

Is Using AI Tools Considered Plagiarism?

So, is using AI to write plagiarism? It’s a bit of a gray area for now, but generally speaking, no, the use of AI tools isn’t considered plagiarism. When you consider how AI tools work, the traditional definition of plagiarism doesn’t really apply.

AI doesn’t usually copy from other sources word-for-word when it’s generating content. Its goal isn’t even to paraphrase specific pieces of content. These machine learning programs do just what their name implies: learn.

Large language models like GPT-3 are trained on massive datasets that allow them to recognize patterns, structures, and styles of text. They then try to replicate these elements, without necessarily copying the sentences and phrases they were trained on, to generate what sounds like original, human-written content.

So does AI plagiarize? Since the intention of AI isn’t specifically to pass off someone else’s work as its own (and it’s difficult to prove whether AI is directly copying from other sources), it’s not considered plagiarism, at least not in the traditional sense.

So why do some people view using AI as plagiarism? They argue that it should count as plagiarism because AI output depends almost entirely on the original work of others. And this is where some of the legal issues come into play.

The Legal Perspective on AI Plagiarism

Let’s start off by clarifying something: in most cases, plagiarism isn’t illegal in the United States. A plagiarizer may face serious repercussions under a school or work policy, sure, but it’s more of an ethical issue than a legal one. Copyright infringement, on the other hand, is a different story.

Plagiarism vs. copyright infringement

While plagiarism is about using someone else’s work without giving credit where credit is due, copyright infringement is a little different. It involves using an original, copyrighted work without obtaining permission from the copyright holder in the first place.

You can often fix a plagiarism issue by simply citing the original source, but copyright infringement is more complicated. If you use the work without getting permission from the copyright holder, you can face legal action, even if you credited the source.

And in the world of artificial intelligence, no one knows this better than the AI companies themselves.

Examples of legal challenges to AI

While the US Copyright Office (part of the Library of Congress) has issued a statement of policy on AI-generated content, there are still some major legal battles going on over AI and copyrighted works. And they don’t just involve big companies; authors are getting in on the action too.

For example, OpenAI, the creator of ChatGPT, and Microsoft are being sued by nonfiction book authors and by the New York Times in similar copyright infringement cases. Both sets of plaintiffs allege that the companies used their copyrighted work without permission to train their large language models. These suits follow an earlier copyright infringement lawsuit against OpenAI brought by the Authors Guild and a group of authors that includes George R.R. Martin.

These are just a few examples of the current legal challenges to AI, so it will be interesting to see how they affect the definition of AI plagiarism and copyright infringement in the future. But in the meantime, if you’d like to use AI in your own content creation process, it’s important to do so responsibly.

Best Practices for Using AI Tools Responsibly

The conversation and laws surrounding AI plagiarism and copyright infringement may be murky, but that doesn’t mean you should avoid using this technology entirely. After all, there are some real benefits to using AI in the content creation process — you just need to do so responsibly.

Here are some best practices for responsible AI use in the content creation process.

- Cite sources: AI doesn’t always cite its sources, so it’s up to you to make sure you’re giving credit to the appropriate parties. And if you can’t find high-quality, reputable sources for any AI-generated facts, then it may be best to leave them out of the final product. AI hallucinations can cause some serious problems, so be sure to review all of its output for accuracy before using it.

- Use AI to enhance the content creation process, not replace it: AI can help you save time by taking care of some of your less creative tasks, like creating outlines, proofreading, and brainstorming content ideas. If you stick with using AI as more of a writing assistant than an author, then you’re less likely to run into plagiarism issues.

- Use a plagiarism detector: It’s always a good idea to run any AI-generated articles through a plagiarism detector tool before publishing. This can help you catch any duplicate content (or, at least, what sounds like duplicate content) that AI may have accidentally included in its output.

Final Thoughts

So, is using AI plagiarism? Well, from a legal perspective, the answer is no, at least not yet. While several cases involving AI and plagiarism’s cousin, copyright infringement, are currently working their way through the courts, the law in this area remains murky at best.

In the meantime, you can help avoid any plagiarism-related issues by following the best practices for using AI tools responsibly in the content creation process. By citing your sources, using AI as an assistant instead of a primary author, and taking advantage of plagiarism checkers, you can ensure that you’re reaping the benefits of AI tools in an ethical way.

Jess Sawyer


© 0000 Originality.ai

Is Using AI Content Plagiarism?

- Posted on January 10, 2024

Artificial intelligence, AI for short, is a booming industry. The global AI market is expected to reach $1,597.1 billion by 2030, with a compound annual growth rate of over 38% in the coming years. Artificial intelligence has been transforming many industries, such as healthcare, where it helps doctors identify disease patterns, streamline the patient experience, and improve care.

Recently, the topic of AI-generated content has been gaining steam with the introduction of ChatGPT. This powerful AI tool can write essays, compose poems, spit out novels, and even write computer code.

Like spun content, AI-generated content can pass many plagiarism detectors, such as Grammarly’s, without the writer doing any work. Many people have used ChatGPT and other AI tools to generate content.

Students use AI copywriting tools to write essays instead of submitting original work, and businesses and bloggers use them for their content marketing campaigns. However, is AI content plagiarism? Is it ethical to use these tools?

Some people mistakenly believe that AI writing tools can replace human content writers in the future. However, AI writing has significant pitfalls and risks, and relying on it instead of investing in human content writing can lead to significant consequences, whether you’re a student or a small business owner.

How Is AI Content Created?

AI content creation is usually quick and easy. You’ll start by creating an account with an artificial intelligence company offering AI technology that can write content from scratch. While ChatGPT is popular, various other AI copywriting tools exist, such as Jasper.ai.

These writing tools respond to specific prompts. For example, you can tell the content generator which format you want, such as a blog post, social media post, email newsletter, written essay, poem, song lyrics, or even a computer program (in the case of ChatGPT).

You will also inform the copywriting tool which topics you want it to cover. For example, you can tell it to write about healthcare or a historical figure such as Benjamin Franklin. Depending on the tool, you can specify the tone of voice, whether professional and cold or emotional and heartfelt.

Then, the tool will produce seemingly unique content. These tools use complex algorithms to create content. They analyze existing content, whether published on the web or generated by previous users, and use paraphrasing and machine learning to come up with content that seems unique.

The goal of these tools is to generate content that seems human-written. The tool may use synonyms to ensure a diverse text, similar to how rewriting tools use synonyms to avoid plagiarism detection.

The result, however, isn’t always quality content. The quality of AI-generated content varies significantly from one tool to another, and mistakes often creep in.

An AI writer is no match for a human writer, and it is common for AI-generated content to sound robotic and use awkward phrasing that makes it seem unnatural. While a plagiarism detector may not detect it, your professor or website visitors may notice something is off.

Besides, the market is reacting to the introduction of AI content writing tools. Various developers have created tools to detect AI content, using complex algorithms that differentiate between human and machine writing patterns.

AI Content and Plagiarism

Technically, AI-generated content is not plagiarism. Plagiarism, by definition, refers to copying other people’s content, whether you copy an article word for word or paraphrase it to avoid detection.

Merriam-Webster defines plagiarism as taking someone else’s ideas or words and presenting them as one’s own. Since artificial intelligence tools generate unique content from scratch instead of stealing someone’s ideas, using an AI tool isn’t technically plagiarism, unlike copy-paste plagiarism.

However, that holds only if the content creation tool creates the content from scratch using machine learning.

If the AI writer instead finds existing content on the web and paraphrases it just enough that a plagiarism detector won’t flag it as duplicate content, the result would be considered plagiarized content.

It can be challenging to know if a random AI tool generates content from scratch or paraphrases existing content without further research into how the tool works.

If the latter, you would be held responsible for the plagiarism, not the tool creators, as they simply provide a rewriting tool for you. Whenever you use information from any source, citing that source when you paraphrase it is essential. Thus, random AI writing tools carry an inherent risk of AI plagiarism.

It’s okay to cite sources and draw inspiration from other texts, but make sure you properly quote and cite any texts you paraphrase or reference to avoid accusations of plagiarism.

Is AI Content Ethical?

While artificial intelligence content creation might not fall under the technical definition of plagiarism, is it ethical? There is a strong argument that, no, it is not ethical. Many people consider AI writing unethical because you are presenting an idea or text as your own when, in fact, you used AI technology to create it. You are taking credit for a creation you did not create.

According to the Oxford Learner’s Dictionary, to plagiarize is “to copy another person’s ideas, words or work and pretend that they are your own.” While an AI writer isn’t “another person,” and you’re not using a rewriting tool to rewrite an existing text, passing its work off as your own still comes dangerously close to plagiarism.

Using AI writing and falsely pretending the content is your own can cause you to get credit when you shouldn’t. You may pass your grade with your AI-generated essay, even if you know barely anything about the subject and haven’t put in any work. You can get content for your blogs without any original ideas.

Using AI to generate content primarily to manipulate search rankings goes against Google’s spam policies and is grounds for SEO penalties. Whether Google can detect AI content is debatable, although it is likely improving its algorithms to better differentiate between human and machine writing.

Ultimately, though, the ethics of using an AI tool depend on the specifics of the case and the purpose for which you use the content. If you use AI content on your blog and specify that it is AI content, you are at least informing your readers that the content is not your own.

However, if you pass AI content off as your own, most people would not consider it ethical, regardless of the situation.

You should never use AI content to mislead or manipulate people; properly labeling it as AI content will help you avoid that. If you do that, you can use AI content to supplement your existing content marketing strategy. For example, you can use it to help you write sales copy for your emails when promoting a product.

If you are a student, be aware that most professors and institutions consider AI content on par with plagiarized content; they don’t tend to differentiate between the two.

Your professor may or may not use a tool to detect AI content. However, if they discover that you were using an AI tool to write your essays, it may be grounds for a penalty.

That penalty may be an automatic failing grade, depending on the institution and circumstances. Some institutions will consider it grounds for expulsion. You must carefully consider the consequences of using AI content creation tools before deciding whether it’s worth it.

There are other ethical problems with using AI content. Since AI tools use machine learning and large datasets to get information and create articles, those datasets may be biased in one direction or another.

Furthermore, AI tools can be used to create false news stories based on carefully selected information that supports an argument and presents misleading proof. As AI tools get more advanced, they can respond to prompts that tell them which stances to take on various issues.

Utilizing a Plagiarism Checker

Is AI content plagiarism? While AI content is not usually considered plagiarism in the technical sense of the word, that doesn’t mean that using an AI tool guarantees your content to be plagiarism-free even if it passes a plagiarism check. If the content is based on someone else’s work, and the tool used paraphrasing to avoid plagiarism detection, it is no different from copy-paste plagiarism.

When quoting or paraphrasing articles, citing your sources properly is crucial to avoid plagiarism. A tool like Quetext can help you apply citation styles, such as MLA, APA, or Chicago, and correctly insert citations into your text. This helps you avoid accusations of duplicate content when rewriting or paraphrasing sources.

Using citations in your essays can also help you get a better grade by demonstrating to your professor that you have strong research skills.

If you want to create unique content, it’s best to use plagiarism detection software like Quetext to make sure your articles are free of plagiarism. Pricing varies from one tool to another, but you can try Quetext’s online plagiarism checker for free.


Microsoft 365 Life Hacks > Writing > Is using AI the same as plagiarism?

Is using AI the same as plagiarism?

Generative AI tools such as ChatGPT have become extremely popular, with the potential to disrupt nearly every industry and academic field. With a simple prompt, AI tools can generate written content of any length, related to any subject. However, is using AI plagiarism? Discover the relationship between AI and plagiarism, when they overlap, and how to use AI as a writing assistant to avoid serious issues of credibility.

What is plagiarism?

Stemming from the Latin word plagiarius, which means “kidnapper,” plagiarism became a metaphor during the Roman Empire for “kidnapping” another person’s words. It is a form of literary theft, in which one person’s ideas are directly copied and passed off by someone else as their own. It is often considered a violation of integrity and ethics and is frowned upon in nearly every field, especially academia.


Plagiarism can lead to serious consequences: for example, journalists at publications around the world have lost their jobs after being caught plagiarizing. This is because the act of plagiarism leads to serious issues of credibility, damaging the reputations of both the journalists in question and their publications. In education, students who are caught plagiarizing can fail their assignments, exams, or classes, as well as face suspension and other disciplinary measures. Financial consequences can also stem from accusations of copyright infringement.

While plagiarism isn’t always done maliciously (for example, when describing a simple idea or concept, one can inadvertently use language similar to another writer’s), it can still lead to the same consequences. Fortunately, there are ways to avoid plagiarism: tools that detect whether a text is too similar to existing sources show students where to focus their rewriting and help teachers alert students to instances of plagiarism.

How AI relates to plagiarism

Since AI draws on and paraphrases many different sources, it can be tricky to determine how much of its output is plagiarized from a specific source. AI-generated content draws from existing, real-world sources: the algorithms that form the foundation of AI tools analyze existing content from across the internet, ranging from academic and scientific journals to blog posts, or even previously generated AI content.

The goal of AI tools is to generate content that sounds natural and human-written. However, their reliability can vary depending on the subject, the sources they draw from, and the ever-evolving capabilities of their algorithms. In addition, AI writing can feel clunky, with outdated or awkward phrasing that draws unwanted attention to itself. Creating their own text allows writers to develop their own voice, as well as add depth and create interest in their work.

AI detectors can analyze text of any length to identify which parts match patterns typical of AI-generated writing. Just as with plagiarism detection tools, a flagged result can undermine the credibility of your work.

How to use AI to avoid plagiarism

Ultimately, using AI to generate content and passing it off as your own is plagiarism. Since it is not your own original work, it falls squarely into that category: submitting a final product generated by any AI software can lead to the same academic misconduct charges as plagiarism.

Instead, AI is best thought of as a writing assistant rather than a generator. Use AI to brainstorm ideas and find inspiration for what you should research, and ask it to cite sources that you can reference in your own work. Learn how to cite your research and use tools like ChatGPT for source citation. By analyzing and rewriting any AI-generated content, you can understand your subject from top to bottom, all while reinterpreting your newfound knowledge in your own voice and personality.

This is how AI can empower students to develop and foster their own critical thinking skills. Learn how to write a research paper or make a persuasive argument, find credible sources, or harness the power of AI to create better content.


Artificial intelligence is getting better at writing, and universities should worry about plagiarism

Assistant Professor, Faculty of Education, Brock University

Educational Leader in Residence, Academic Integrity and Assistant Professor, University of Calgary

Disclosure statement

The authors do not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and have disclosed no relevant affiliations beyond their academic appointment.

University of Calgary provides funding as a founding partner of The Conversation CA.

Brock University and University of Calgary provide funding as members of The Conversation CA-FR.

Brock University provides funding as a member of The Conversation CA.


The dramatic rise of online learning during the COVID-19 pandemic has spotlit concerns about the role of technology in exam surveillance, and also in student cheating.

Some universities have reported more cheating during the pandemic, and such concerns are unfolding in a climate where technologies that allow for the automation of writing continue to improve.

Over the past two years, the ability of artificial intelligence to generate writing has leapt forward significantly, particularly with the development of the language generator known as GPT-3. With this, companies such as Google, Microsoft and NVIDIA can now produce “human-like” text.

AI-generated writing has raised the stakes of how universities and schools will gauge what constitutes academic misconduct, such as plagiarism. As scholars with an interest in academic integrity and the intersections of work, society and educators’ labour, we believe that educators and parents should be, at the very least, paying close attention to these significant developments.

AI & academic writing

The use of technology in academic writing is already widespread. For example, many universities already use text-based plagiarism detectors like Turnitin, while students might use Grammarly, a cloud-based writing assistant. Examples of writing support include automatic text generation, extraction, prediction, mining, form-filling, paraphrasing, translation and transcription.


Advancements in AI technology have led to new tools, products and services being offered to writers to improve content and efficiency. As these improve, entire articles or essays might soon be generated and written entirely by artificial intelligence. In schools, the implications of such developments will undoubtedly shape the future of learning, writing and teaching.

Misconduct concerns already widespread

Research has revealed that concerns over academic misconduct are already widespread across institutions of higher education in Canada and internationally.

In Canada, there is little data on rates of misconduct. Research published in 2006, based on data from mostly undergraduate students at 11 higher education institutions, found that 53 per cent reported having engaged in one or more instances of serious cheating on written work. Serious cheating was defined as copying material without footnoting, copying material almost word for word, submitting work done by someone else, fabricating or falsifying a bibliography, or submitting a paper they either bought or got from someone else for free.

Academic misconduct is in all likelihood under-reported across Canadian higher education institutions.

There are different types of violations of academic integrity, including plagiarism, contract cheating (where students hire other people to write their papers) and exam cheating, among others.

Unfortunately, with technology, students can use their ingenuity and entrepreneurialism to cheat. These concerns are also applicable to faculty members, academics and writers in other fields, bringing new concerns surrounding academic integrity and AI such as:

- If a piece of writing was 49 per cent written by AI, with the remaining 51 per cent written by a human, is this considered original work?

- What if an essay was 100 per cent written by AI, but a student did some of the coding themselves?

- What qualifies as “AI assistance” as opposed to “academic cheating”?

- Do the same rules apply to students as they would to academics and researchers?

We are asking these questions in our own research, and we know that in the face of all this, educators will be required to consider how writing can be effectively assessed or evaluated as these technologies improve.

Augmenting or diminishing integrity?

At the moment, little guidance, policy or oversight is available regarding technology, AI and academic integrity for teachers and educational leaders.

Over the past year, COVID-19 has pushed more students towards online learning — a sphere where teachers may become less familiar with their own students and thus, potentially, their writing.

While it remains impossible to predict the future of these technologies and their implications in education, we can attempt to discern some of the larger trends and trajectories that will impact teaching, learning and research.

Technology & automation in education

A key concern moving forward is the apparent movement towards the increased automation of education where educational technology companies offer commodities such as writing tools as proposed solutions for the various “problems” within education.

An example of this is automated assessment of student work, such as automated grading of student writing. Numerous commercial products already exist for automated grading, though the ethics of these technologies are yet to be fully explored by scholars and educators.


Overall, the traditional landscape surrounding academic integrity and authorship is being rapidly reshaped by technological developments. Such developments also spark concerns about a shift of professional control away from educators and ever-increasing new expectations of digital literacy in precarious working environments.


These complexities, concerns and questions will require further thought and discussion. Educational stakeholders at all levels will be required to respond and rethink definitions as well as values surrounding plagiarism, originality, academic ethics and academic labour in the very near future.

The authors would like to sincerely thank Ryan Morrison, from George Brown College, who provided significant expertise, advice and assistance with the development of this article.


- NEWS FEATURE

- 30 July 2024

AI is complicating plagiarism. How should scientists respond?

- Diana Kwon

Diana Kwon is a freelance science journalist based in Berlin.


Illustration by Piotr Kowalczyk

From accusations that led Harvard University’s president to resign in January, to revelations in February of plagiarized text in peer-review reports, the academic world has been roiled by cases of plagiarism this year.

But a bigger problem looms in scholarly writing. The rapid uptake of generative artificial intelligence (AI) tools — which create text in response to prompts — has raised questions about whether this constitutes plagiarism and under what circumstances it should be allowed. “There’s a whole spectrum of AI use, from completely human-written to completely AI-written — and in the middle, there’s this vast wasteland of confusion,” says Jonathan Bailey, a copyright and plagiarism consultant based in New Orleans, Louisiana.

Generative AI tools such as ChatGPT, which are based on algorithms known as large language models (LLMs), can save time, improve clarity and reduce language barriers. Many researchers now argue that they are permissible in some circumstances and that their use should be fully disclosed.

But such tools complicate an already fraught debate around the improper use of others’ work. LLMs are trained to generate text by digesting vast amounts of previously published writing. As a result, their use could result in something akin to plagiarism — if a researcher passes off the work of a machine as their own, for instance, or if a machine generates text that is very close to a person’s work without attributing the source. The tools can also be used to disguise deliberately plagiarized text, and any use of them is hard to spot. “Defining what we actually mean by academic dishonesty or plagiarism, and where the boundaries are, is going to be very, very difficult,” says Pete Cotton, an ecologist at the University of Plymouth, UK.

In a 2023 survey of 1,600 researchers, 68% of respondents said that AI will make plagiarism easier to commit and harder to detect. “Everybody’s worried about everybody else using these systems, and they’re worried about themselves not using them when they should,” says Debora Weber-Wulff, a plagiarism specialist at the University of Applied Sciences Berlin. “Everybody’s kind of in a tizzy about this.”

Plagiarism meets AI

Plagiarism, which the US Office of Research Integrity defines as “the appropriation of another person’s ideas, processes, results, or words without giving appropriate credit”, is a familiar problem. A 2015 study estimated that 1.7% of scientists had admitted to plagiarism and that 30% knew colleagues who had committed it¹.

LLMs could make things worse. Intentional plagiarism of human-written text can easily be disguised if someone asks an LLM to paraphrase the wording first. The tools can be prompted to paraphrase in sophisticated ways, such as in the style of an academic journal, says Muhammad Abdul-Mageed, a computer scientist and linguist at the University of British Columbia in Vancouver, Canada.

A central question is whether using unattributed content written entirely by a machine, rather than by a human, counts as plagiarism. Not necessarily, say many researchers. For example, the European Network for Academic Integrity, which includes universities and individuals, defines the prohibited or undeclared use of AI tools for writing as “unauthorized content generation” rather than as plagiarism as such². “Plagiarism, for me, would have things that are attributable to another, identifiable person,” says Weber-Wulff. Although there have been instances of generative AI producing text that looks almost identical to existing, human-written content, it is usually not close enough to be considered plagiarism, she adds.


However, some people argue that generative AI tools are infringing copyright. Both plagiarism and copyright infringement are the improper use of someone else’s work, and whereas plagiarism is a breach of academic ethics, unauthorized use of copyrighted work can be a breach of the law. “These AI systems are built on the work of millions or hundreds of millions of people,” says Rada Mihalcea, a computer scientist at the University of Michigan in Ann Arbor.

Some media companies and authors have protested against what they see as copyright breaches by AI. In December 2023, The New York Times launched a copyright lawsuit against the tech giant Microsoft and OpenAI, the US company behind the LLM GPT-4, which powers the chatbot ChatGPT. The lawsuit claims that the two firms copied and used millions of the newspaper’s articles to train LLMs, which now compete with the publication’s content. The lawsuit includes instances in which prompts caused GPT-4 to reproduce several paragraphs of the newspaper’s articles almost word for word.

In February, OpenAI filed a motion in federal court to dismiss parts of the lawsuit, arguing that “ChatGPT is not in any way a substitute for a subscription” to The New York Times. A spokesperson for Microsoft says that “lawfully developed AI-powered tools should be allowed to advance responsibly”, and “they are also not a substitute for the vital role that journalists play”.

If a court rules that training an AI on text without permission is indeed copyright infringement, “that’s going to be a huge shake up for AI companies”, says Bailey. Without extensive training sets, tools such as ChatGPT “can’t exist”, he says.

AI explosion

Whether it’s called plagiarism or not, the use of AI in academic writing has exploded since ChatGPT was released in November 2022.

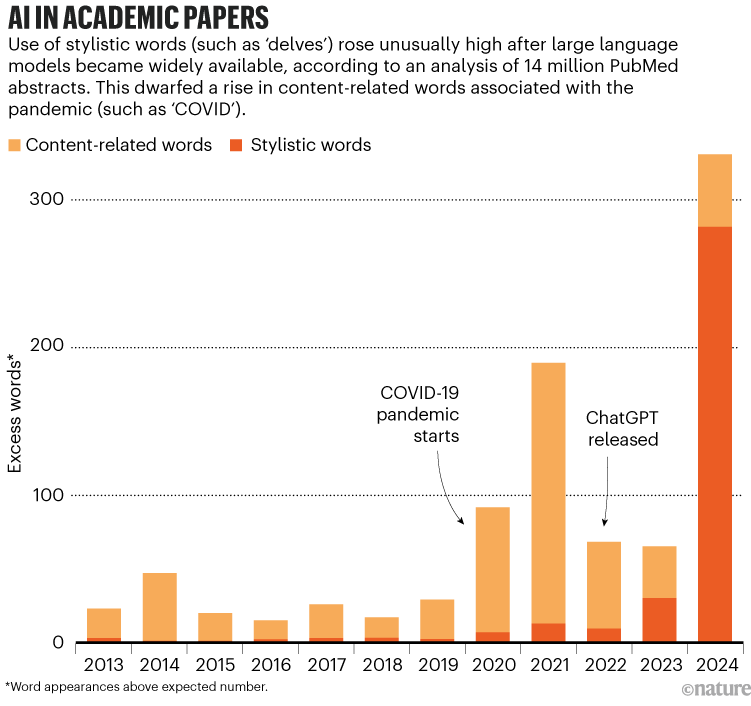

In a preprint updated in July³, researchers estimated that at least 10% of abstracts in biomedical papers in the first six months of 2024 had used LLMs for writing, equivalent to 150,000 papers per year. The authors, led by data scientist Dmitry Kobak at the University of Tübingen in Germany, analysed 14 million abstracts in the academic database PubMed that had been published between 2010 and June 2024. They showed that the arrival of LLMs was associated with the increased use of stylistic words (such as ‘delves’, ‘showcasing’ and ‘underscores’), and then used these unusual word patterns to estimate the proportion of abstracts that had been processed using AI (see ‘AI in academic papers’). “The appearance of LLM-based writing assistants has had an unprecedented impact in the scientific literature,” they wrote.

Source: Ref. 3
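One simple way to turn such word frequencies into an “at least” figure can be sketched as follows. This is an illustrative sketch under our own assumptions, not the preprint’s code: we assume that abstracts not processed by an LLM contain a marker word at the rate predicted from pre-ChatGPT trends (q), and that LLM-processed abstracts contain it with some probability s no greater than 1. If a fraction f of abstracts were processed, the observed rate is p = (1 − f)q + fs, which gives f ≥ (p − q)/(1 − q). The frequencies used below are hypothetical, not values from the preprint.

```python
def excess_gap(observed: float, expected: float) -> float:
    """How much more often a marker word appears than pre-LLM trends predict."""
    return observed - expected


def lower_bound_llm_fraction(observed: float, expected: float) -> float:
    """Lower bound on the fraction of LLM-processed abstracts (illustrative model).

    Assumption: unprocessed abstracts use the marker word at the expected rate q,
    processed ones at some rate s <= 1, so p = (1 - f) * q + f * s and
    therefore f >= (p - q) / (1 - q). Negative gaps give no evidence, so clamp to 0.
    """
    if not 0.0 <= expected < 1.0:
        raise ValueError("expected frequency must be in [0, 1)")
    return max(0.0, (observed - expected) / (1.0 - expected))


# Hypothetical shares of abstracts containing at least one marker word.
p_observed = 0.20  # observed share in the period of interest
q_expected = 0.10  # share extrapolated from pre-ChatGPT years

print(f"excess gap: {excess_gap(p_observed, q_expected):.3f}")
print(f"LLM-processed fraction is at least {lower_bound_llm_fraction(p_observed, q_expected):.3f}")
```

The bound is deliberately conservative: because s is assumed to be at most 1, the true fraction can only be larger, which matches the “at least” framing of the estimate.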

Kobak and his colleagues found that papers from countries including China and South Korea showed signs of heavier LLM use than did those from countries where English is the dominant language. However, says Kobak, authors in this latter group of countries might be using these tools just as often, but in ways that are more difficult to spot. Use of LLMs “will certainly continue to increase”, Kobak predicts, and will “probably get harder to detect”.

The undisclosed use of software in academic writing is not new. Since 2015, Guillaume Cabanac, a computer scientist at the University of Toulouse, France, and his colleagues have been uncovering gibberish papers made by software called SCIgen, and ones containing ‘tortured phrases’ that were created by automated software that translates or paraphrases text. “Even before generative AI, people had tools to fly under the radar,” Cabanac says.

And some use of AI in academic writing has value. Researchers say that it can make text and concepts clearer, reduce language barriers and free up time for experiments and thought. Hend Al-Khalifa, an information-technology researcher at King Saud University in Riyadh, says that before generative AI tools became available, many of her colleagues for whom English is a second language would struggle to write papers. “Now, they are focusing on the research and removing the hassle of writing with these tools,” she says.

But confusion reigns about when the use of AI constitutes plagiarism or contravenes ethics. Soheil Feizi, a computer scientist at the University of Maryland, College Park, says that using LLMs to paraphrase content from existing papers is clearly plagiarism. But using an LLM to help express ideas — either by generating text based on a detailed prompt, or by editing a draft — should not be penalized if it is done transparently. “We should allow people to leverage large language models to be able to express their ideas effortlessly and in a clearer manner,” Feizi says.

Many journals now have policies that allow some level of LLM use. After originally banning text generated by ChatGPT, Science updated its policy in November 2023 to say that use of AI technologies in writing a manuscript should be disclosed in full, including the system and prompts used. Authors are accountable for accuracy and “ensuring that there is no plagiarism”, it says. Nature, too, says authors of research manuscripts should use the methods section to document any LLM use. (Nature’s news and features team is editorially independent of its journals team.)


An analysis of 100 large academic publishers and 100 highly ranked journals found that by October 2023, 24% of publishers and 87% of journals had guidelines on the use of generative AI⁴. Almost all of those that provided guidance said that an AI tool could not be included as an author, but policies differed on the types of AI use allowed and the level of disclosure required. Clearer guidelines on AI use in academic writing are urgently needed, says Weber-Wulff.

For now, the rampant use of LLMs for writing scientific papers is curbed by their limitations, says Abdul-Mageed. Users need to create detailed prompts describing the audience, the style of language and the subfield of research. “It’s actually very difficult for a language model to give you exactly what you want,” he says.

But developers are building applications that will make it easier for researchers to generate specialized scientific content, says Abdul-Mageed. Rather than having to write a detailed prompt, a user could in future simply pick from a drop-down menu of options and push a button to produce an entire paper from scratch, he says.

Detective work

The rapid adoption of LLMs to write text has been accompanied by a flurry of tools that aim to detect it. Although many boast high rates of accuracy (more than 90%, in some cases), research has suggested that most do not live up to their claims. In a study published last December⁵, Weber-Wulff and her colleagues assessed 14 AI-detection tools that are widely used in academia. Only 5 accurately identified 70% or more of texts as AI- or human-written, and none scored above 80%.

The detectors’ accuracy dropped below 50%, on average, when spotting AI-generated text that someone had lightly edited by replacing synonyms and reordering sentences. Such text is “almost undetectable by current tools”, the authors wrote. Other studies have shown that asking an AI to paraphrase text multiple times drastically reduces the accuracy of the detectors⁶.


There are other problems with AI detectors. One study showed that they are more likely to misclassify English writing as AI-generated if it was penned by people for whom English is not a first language⁷. Feizi says the detectors cannot reliably distinguish between text written entirely by AI and cases in which an author used AI-based services that polish text by helping with grammar and sentence clarity. “Differentiating between these cases would be quite difficult and unreliable, and could lead to a huge rate of false positives,” he says. Being falsely accused of using AI, he adds, can be “quite damaging to the reputation of those scholars or students”.

The boundary between legitimate and illegitimate use of AI is likely to blur further. In March 2023, Microsoft started to incorporate generative AI tools into its applications, including Word, PowerPoint and Outlook. Some versions of its AI assistant, called Copilot, can draft or edit content. In June, Google also began integrating its generative AI model, Gemini, into tools such as Docs and Gmail.

“AI is becoming so embedded in everything we use, I think it’ll become increasingly difficult to know whether something you’ve done has been influenced by AI,” says Debby Cotton, a specialist in higher education at Plymouth Marjon University, UK. “I think it’ll carry on evolving more rapidly than we can keep pace with.”

doi: https://doi.org/10.1038/d41586-024-02371-z

1. Pupovac, V. & Fanelli, D. Sci. Eng. Ethics 21, 1331–1352 (2015).

2. Foltynek, T. et al. Int. J. Educ. Integr. 19, 12 (2023).

3. Kobak, D., González-Márquez, R., Horvát, E.-Á. & Lause, J. Preprint at arXiv https://doi.org/10.48550/arXiv.2406.07016 (2024).

4. Ganjavi, C. et al. BMJ 384, e077192 (2024).

5. Weber-Wulff, D. et al. Int. J. Educ. Integr. 19, 26 (2023).

6. Sadasivan, V. S., Kumar, A., Balasubramanian, S., Wang, W. & Feizi, S. Preprint at arXiv https://doi.org/10.48550/arXiv.2303.11156 (2023).

7. Liang, W., Yuksekgonul, M., Mao, Y., Wu, E. & Zou, J. Patterns 4, 100779 (2023).


Plagiarism and AI

Last Updated Winter/Spring 2024

Introduction

Most writers already use some forms of AI writing tools without even thinking about it; consider, for example, how often you use grammar checks or predictive text algorithms on an average day. The newest generation of AI tools, however, can perform extremely sophisticated writing tasks with very little input or effort from the human user, and this raises some difficult issues for students and instructors.

At what point does a student’s use of artificial intelligence in their writing stop being the legitimate use of a tool and become plagiarism or academic misconduct? There is no single answer to this question, and there are few, if any, hard rules around AI that all instructors would agree on. Given the newness of this technology, there’s a lot we all need to learn about what AI writing tools can do and how students can, should and shouldn’t use them in their academic work.

For students, the choice to use AI tools adds a new and somewhat complicated twist to the academic integrity issues discussed throughout this site. As with any issue related to academic integrity, your first response should be to make sure you understand your instructor’s expectations for a given class and assignment. However, because AI is so new and the range of things it can do is still relatively uncharted, instructors don’t always know how to articulate what they consider appropriate or inappropriate use of AI. This places a heavier responsibility on students to think through the ethical implications of their use of AI.

This is not just a matter of avoiding the consequences of accidentally or deliberately plagiarizing; it is also about making sure that you actually receive the benefits of the education you’re spending your time and resources pursuing. AI can unquestionably make many writing tasks easier, but as a student you should consider the degree to which making a given assignment easier is better or more beneficial for you in the long run. This page is intended to help you navigate these issues and make informed decisions about how to use AI writing tools ethically in your academic work.

Things AI Can Do:

For our purposes here, it’s easiest to think of AI writing tools in two broad categories:

AI Editing tools help writers improve text that they have written themselves. This category includes a number of tools that nearly all writers use routinely, such as spell checkers and bibliography generators like Zotero or EasyBib. In their most sophisticated form, though, AI editors can proofread and correct the grammar in whole texts or even revise them entirely to make the writing more “formal,” more “academic,” or otherwise more appropriate for the intended audience (at least to the degree the AI understands that audience).

Generative AI Tools actually create new text (or, in some cases, images, slides, charts, music, or video) for their users. Again, this category includes tools that many of us use regularly, like the predictive text algorithm integrated into most messaging apps. At the far end of this category, though, are tools like ChatGPT and Gemini, which can theoretically write entire essays if they’re simply fed the prompt.

Some of the simpler and more common ways to use these tools don’t present much of an ethical quandary. Very few instructors would object to students using the spelling and grammar checks built into their word processors.* Conversely, most instructors would consider it plagiarism if a student fed their assignment prompt into ChatGPT and submitted the essay that the AI generated as their own work.

In between these two extremes, though, lies a whole range of more complex uses for AI tools that aren’t so easy to label as acceptable or unacceptable in academic contexts. We give several examples of this in the Sample Scenarios below, but before we get to that, we should consider some general principles you and your instructor might use to decide what uses for AI are acceptable:

*A major exception here is introductory language classes (e.g. Spanish 101-104). Since learning basic spelling, grammar, and phrasing is a major part of the curriculum in these courses, many instructors in these courses would object to the use of spell check or predictive text.

How to Evaluate Your Use of AI

First and foremost, it’s important to reiterate that your instructor has the final say on what does and does not constitute plagiarism or academic misconduct on a given assignment. So, if your instructor explicitly says that a given use of AI is acceptable or unacceptable, then there’s nothing more you need to consider. If your instructor’s expectations are unclear, though, or if you’re considering using an AI tool that seems to fall outside the guidelines your instructor has given, you’ll also need to evaluate the ethics yourself.

The good news is that, while the uses of artificial intelligence in academic writing may be new, the same five basic principles around plagiarism that we discussed elsewhere on this site still apply here. So, to help you consider whether a given use of AI tools is ethical or not, you can start by considering…

Education: What am I supposed to learn from this assignment? How is it intended to help me develop my writing or thinking skills, or to better understand the course material? Will a particular use of AI undermine or defeat the purpose of this assignment?

Attribution of Credit: Could a particular use of AI tools cause me to take credit for ideas that aren’t my own? How might an AI obscure my use of sources or draw on sources that I’m not aware of and therefore can’t document properly?

Maintaining a Scholarly Discourse: Will a particular use of AI help me to build on the ideas of others and express new ideas of my own? Or will it cause me to simply restate ideas that have already been articulated elsewhere?

Academic Integrity: What aspects of this assignment does the instructor expect to be the result of my effort alone? Would a particular use of AI cause me to deceive my instructor into thinking I put intellectual labor into my writing that I did not? Is a particular use of AI likely to produce false data or misinformation, which I would take responsibility for by submitting it under my name?

Intellectual Property: Could a particular use of AI cause me to appropriate text or ideas that are owned by other people?

Finally, if considering these questions still leaves you unsure about a given use of AI, ask yourself this: If I asked a human being to do the work that this AI is doing for me, would it still be okay? If the answer is “no,” or even “I’m not sure,” then your best bet would be to avoid that use of AI.

Sample Scenarios:

Unlike the scenarios described elsewhere on this site, we’re not providing analysis to go with these examples. This is because the applications for AI in academic writing are so new that there isn’t a clear consensus or general practice that we can provide. Instead, we’re providing these scenarios to help you and your instructors open a dialogue about what uses for AI are acceptable or unacceptable in their classes. We encourage you to think about each of these situations using the questions outlined above and to ask your instructors what they think.

Scenario A:

A student is extremely insecure about their grammar or writing style. So, for an essay in their History class, they feed their draft into an AI tool that promises to “tune” their language to make it more formal and academic. The tool makes several dozen changes to the punctuation, sentence structure, and phrasing throughout the draft, returning a new version of the essay that reads to the student as a more polished version of the essay they wrote. The student then submits the AI-polished version of the essay to their instructor with no additional changes on their part.

Scenario B:

A student in a Psychology class is writing a literature review that discusses the existing scholarship around violence in video games. To get started, they go to an AI text generator and ask “do psychologists believe that video games cause violent behavior?” The AI writes three or four paragraphs in response that summarize the various ways that psychologists have answered that question, though it cites no sources and provides no details about particular experiments, dates, or psychologists. The student then uses the AI’s response as a kind of outline: they write their literature review following the main ideas expressed by the AI, plugging in references to specific articles and sources they’ve found through their own research. The final draft that the student submits to their instructor is much more detailed than the AI’s version, and the actual text is almost entirely written by the student, but it makes basically the same points in the same order as the draft written by the AI.

Scenario C:

A student in a Philosophy class is struggling to keep up with the reading, which they find dense and confusing. To make things easier, they ask an AI chatbot to summarize the readings for them. For each reading, the AI creates a summary that seems to cover the major ideas, but in much simpler language. At first, the student uses these summaries as a guide to help them read the assigned texts, but as the term goes on the student finds that they only read the summaries, and that seems to be enough to participate in class discussion and keep up with the written work in the course.

Scenario D:

A student in an Economics class is having difficulty getting started on an essay assignment with a fairly open prompt, so they enter the prompt into a generative AI tool multiple times to see a range of different topics and approaches. Ultimately, the student decides to write on one of the topics the AI came up with, but as soon as they do, they delete the AI’s essay and write the paper themselves.

Scenario E:

A student in a Political Science class is writing a position paper in response to the argument in one of their readings. They create a detailed outline that includes multiple quotes from the reading and long bullet points laying out the student’s response. They paste this outline into a text generator and ask it to “write a position paper that follows this outline.” The resulting essay is roughly 90% text written by the student, but the AI has added connecting words, punctuation, and transition sentences at the beginning of each paragraph. The student revises this essay, making various changes to both their language and the AI’s, before turning in the final draft.

Scenario F:

A student in a Religious Studies class is assigned to write an annotated bibliography. The assignment calls for the student to find six academic sources on their topic and write a one-paragraph description of each source. The student will later use these sources in a formal research essay. The student asks an AI to list “the six most important academic sources” on their topic, and the AI gives them a list of six books. The student then asks the AI to write a one-paragraph summary of each book. The AI can’t manage to write citations, so the student writes those themselves, using information on Amazon.com (this also allows the student to confirm that all six books really exist, and weren’t just made up by the AI). The student then turns in the annotated bibliography, which contains their citations of the six sources found by the AI and the descriptions written by the AI.


Artificial Intelligence (AI) & Plagiarism

Photo by Markus Winkler on Unsplash

A growing concern is the use of artificial intelligence (AI) chatbots to write entire essays and articles. While it may be tempting to use AI in this way, please be aware that this is also considered a form of plagiarism.

To see why, take a look at section 1.2 from SJSU’s Academic Integrity Policy:

San José State University defines plagiarism as the act of representing the work of another as one’s own without giving appropriate credit, regardless of how that work was obtained, and submitting it to fulfill academic requirements.

Notice the parts of the policy that have been italicized for emphasis. These highlight a couple of important things:

- Doing academic work requires that the work you turn in is your own. A paper that is written by AI is not considered your own original work.

- It doesn’t matter which AI program/software you use. Using any of these to write your papers is considered a form of plagiarism.

It is important to note that tools that check your writing are okay to use. Examples include the autocorrect feature in Google Docs and the app Grammarly. These tools, which scan pieces of writing for errors and/or make suggestions for edits, are very different from AI programs that write entire papers. The key difference is that these tools scan your own original writing for possible mistakes, whereas an AI chatbot does all the writing for you.


- Last Updated: May 30, 2024 11:27 AM

- URL: https://libguides.sjsu.edu/plagiarism


Students Are Likely Writing Millions of Papers With AI

Students have submitted more than 22 million papers that may have used generative AI in the past year, new data released by plagiarism detection company Turnitin shows.

A year ago, Turnitin rolled out an AI writing detection tool that was trained on its trove of papers written by students as well as other AI-generated texts. Since then, more than 200 million papers, predominantly written by high school and college students, have been reviewed by the detector. Turnitin found that 11 percent of them may contain AI-written language in at least 20 percent of their content, with 3 percent of the total papers reviewed getting flagged for having 80 percent or more AI writing. (Turnitin is owned by Advance, which also owns Condé Nast, publisher of WIRED.) Turnitin says its detector has a false positive rate of less than 1 percent when analyzing full documents.
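A quick arithmetic check shows how these figures fit together: 11 percent of roughly 200 million papers is the “more than 22 million” in the headline figure, and 3 percent corresponds to about 6 million papers. The sketch below treats “more than 200 million” as exactly 200 million, so its results are approximate lower figures; the helper name is our own.

```python
papers_reviewed = 200_000_000  # "more than 200 million", rounded down for illustration


def share(fraction: float, total: int) -> int:
    """Number of papers corresponding to a reported fraction of the total."""
    return round(fraction * total)


at_least_20_percent_ai = share(0.11, papers_reviewed)  # 11% of papers
at_least_80_percent_ai = share(0.03, papers_reviewed)  # 3% of papers

print(f"{at_least_20_percent_ai:,} papers with at least 20% AI-written content")
print(f"{at_least_80_percent_ai:,} papers with 80% or more AI-written content")
```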

ChatGPT’s launch was met with knee-jerk fears that the English class essay would die. The chatbot can synthesize information and distill it near-instantly, but that doesn’t mean it always gets it right. Generative AI has been known to hallucinate, creating its own facts and citing academic references that don’t actually exist. Generative AI chatbots have also been caught spitting out biased text on gender and race. Despite those flaws, students have used chatbots for research, organizing ideas, and as a ghostwriter. Traces of chatbots have even been found in peer-reviewed, published academic writing.

Teachers understandably want to hold students accountable for using generative AI without permission or disclosure. But that requires a reliable way to prove AI was used in a given assignment. Instructors have at times tried to devise their own ways of detecting AI in writing, using messy, untested methods to enforce rules and distressing students in the process. Further complicating the issue, some teachers are even using generative AI in their grading processes.

Detecting the use of gen AI is tricky. It’s not as easy as flagging plagiarism, because generated text is still original text. Plus, there’s nuance to how students use gen AI; some may ask chatbots to write their papers for them in large chunks or in full, while others may use the tools as an aid or a brainstorm partner.

ChatGPT and similar large language models aren’t the only tools tempting students, either. So-called word spinners, another type of AI software, rewrite text and may make it less obvious to a teacher that work was plagiarized or generated by AI. Turnitin’s AI detector has also been updated to detect word spinners, says Annie Chechitelli, the company’s chief product officer. It can also flag work that was rewritten by services like spell checker Grammarly, which now has its own generative AI tool. As familiar software increasingly adds generative AI components, what students can and can’t use becomes more muddled.

Detection tools themselves carry a risk of bias. English language learners may be more likely to set them off: a 2023 study that ran Test of English as a Foreign Language (TOEFL) essays through seven different AI detectors found a 61.3 percent false positive rate, meaning the human-written essays were wrongly flagged as AI-generated 61.3 percent of the time. The study did not examine Turnitin’s version. The company says it has trained its detector on writing from English language learners as well as native English speakers. A study published in October found that Turnitin was among the most accurate of 16 AI-text detectors in a test that had the detectors examine both undergraduate papers and AI-generated papers.

Schools that use Turnitin had access to the AI detection software for a free pilot period, which ended at the start of this year. Chechitelli says a majority of the service’s clients have opted to purchase the AI detection. But the risks of false positives and bias against English learners have led some universities to ditch the tools for now. Montclair State University in New Jersey announced in November that it would pause use of Turnitin’s AI detector. Vanderbilt University and Northwestern University did the same last summer.

“This is hard. I understand why people want a tool,” says Emily Isaacs, executive director of the Office of Faculty Excellence at Montclair State. But Isaacs says the university is concerned about potentially biased results from AI detectors, as well as the fact that, unlike plagiarism checkers, the tools can’t point to a matching source that confirms their result. Montclair State also doesn’t want to put a blanket ban on AI, which will have some place in academia. With time and more trust in the tools, the policies could change. “It’s not a forever decision, it’s a now decision,” Isaacs says.

Chechitelli says the Turnitin tool shouldn’t be the only consideration in passing or failing a student. Instead, it’s a chance for teachers to start conversations with students that touch on all of the nuance in using generative AI. “People don’t really know where that line should be,” she says.

Should I Use ChatGPT to Write My Essays?

Everything high school and college students need to know about using — and not using — ChatGPT for writing essays.

Jessica A. Kent

ChatGPT is one of the most buzzworthy technologies today.

Along with other generative artificial intelligence (AI) models, it is expected to change the world. In academia, students and professors are preparing for the ways that ChatGPT will shape education, and especially how it will affect a fundamental element of any course: the academic essay.

Students can use ChatGPT to generate full essays based on a few simple prompts. But can AI actually produce high quality work, or is the technology just not there yet to deliver on its promise? Students may also be asking themselves if they should use AI to write their essays for them and what they might be losing out on if they did.

AI is here to stay, and it can either be a help or a hindrance depending on how you use it. Read on to become better informed about what ChatGPT can and can’t do, how to use it responsibly to support your academic assignments, and the benefits of writing your own essays.

What is Generative AI?

Artificial intelligence isn’t a twenty-first-century invention. Beginning in the 1950s, computer scientists started programming computers to solve problems and understand spoken language. AI’s capabilities grew as computer speeds increased, and today we use AI to analyze data, find patterns, and provide insights on the data it collects.

But why the sudden popularity of recent applications like ChatGPT? This new generation of AI goes further than data analysis: generative AI creates new content. It does this by analyzing large amounts of data (GPT-3 was trained on 45 terabytes of data, or a quarter of the Library of Congress) and then generating new content based on the patterns it sees in the original data.

It’s like the predictive text feature on your phone; as you start typing a new message, predictive text makes suggestions of what should come next based on data from past conversations. Similarly, ChatGPT creates new text based on past data. With the right prompts, ChatGPT can write marketing content, code, business forecasts, and even entire academic essays on any subject within seconds.
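To make the predictive-text comparison a little more concrete, here is a toy sketch in Python. It is a simplifying assumption, not how ChatGPT actually works: it counts which word tends to follow which in a small, made-up message history (a "bigram" model) and suggests the most frequent followers. ChatGPT uses a large neural network trained on vastly more text, but the underlying idea of predicting a likely next word from patterns in past data is similar. The function names `train_bigrams` and `suggest_next` are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    # Pair each word with the word that comes right after it.
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def suggest_next(followers, word, k=3):
    """Return up to k of the words most often seen right after `word`."""
    counts = followers.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# A tiny, made-up "message history" standing in for training data (hypothetical).
history = "see you later . see you soon . see you tomorrow . talk to you later"
model = train_bigrams(history)
print(suggest_next(model, "you"))  # prints ['later', 'soon', 'tomorrow']
```

Nothing in this sketch checks whether a suggestion is true or appropriate; it only reflects what was common in the data it saw.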

But is generative AI as revolutionary as people think it is, or is it lacking in real intelligence?

The Drawbacks of Generative AI

It seems simple. You’ve been assigned an essay to write for class. You go to ChatGPT and ask it to write a five-paragraph academic essay on the topic you’ve been assigned. You wait a few seconds and it generates the essay for you!

But ChatGPT is still in its early stages of development, and that essay is likely not as accurate or well-written as you’d expect it to be. Be aware of the drawbacks of having ChatGPT complete your assignments.

It’s not intelligence, it’s statistics

One of the misconceptions about AI is that it has a degree of human intelligence. However, its intelligence is actually statistical analysis, as it can only generate “original” content based on the patterns it sees in already existing data and work.

It “hallucinates”

Generative AI models often provide false information, so much so that there’s a term for it: “AI hallucination.” OpenAI even has a warning on its home screen saying that “ChatGPT may produce inaccurate information about people, places, or facts.” This may be due to gaps in its data, or because it lacks the ability to verify what it’s generating.

It doesn’t do research

If you ask ChatGPT to find and cite sources for you, it will do so, but they could be inaccurate or even made up.

This is because AI doesn’t know how to look for relevant research that can be applied to your thesis. Instead, it generates content based on past content, so if a number of papers cite certain sources, it will generate new content that sounds like a credible source, even though it may not be one.

There are data privacy concerns

When you input your data into a public generative AI model like ChatGPT, where does that data go and who has access to it?

Prompting ChatGPT with original research should be a cause for concern — especially if you’re inputting study participants’ personal information into the third-party, public application.

JPMorgan has restricted use of ChatGPT due to privacy concerns, Italy temporarily blocked ChatGPT in March 2023 after a data breach, and Security Intelligence advises that “if [a user’s] notes include sensitive data … it enters the chatbot library. The user no longer has control over the information.”

It is important to be aware of these issues and take steps to ensure that you’re using the technology responsibly and ethically.

It skirts the plagiarism issue

AI creates content by drawing on a large library of information that’s already been created, but is it plagiarizing? Could there be instances where ChatGPT “borrows” from previous work and places it into your work without citing it? Schools and universities today are wrestling with this question of what’s plagiarism and what’s not when it comes to AI-generated work.

To demonstrate the limits of AI-generated work, one Elon University professor gave his class an assignment: Ask ChatGPT to write an essay for you, and then grade it yourself.

“Many students expressed shock and dismay upon learning the AI could fabricate bogus information,” he writes, adding that he expected some essays to contain errors, but all of them did.

His students were disappointed that “major tech companies had pushed out AI technology without ensuring that the general population understands its drawbacks” and were concerned about how many embraced such a flawed tool.

How to Use AI as a Tool to Support Your Work

As more students are discovering, generative AI models like ChatGPT just aren’t as advanced or intelligent as they may believe. While AI may be a poor option for writing your essay, it can be a great tool to support your work.

Generate ideas for essays

Have ChatGPT help you come up with ideas for essays. For example, input specific prompts, such as, “Please give me five ideas for essays I can write on topics related to WWII,” or “Please give me five ideas for essays I can write comparing characters in twentieth-century novels.” Then, use what it provides as a starting point for your original research.

Generate outlines

You can also use ChatGPT to help you create an outline for an essay. Ask it, “Can you create an outline for a five-paragraph essay on [topic]?” and it will create an outline with an introduction, body paragraphs, a conclusion, and a suggested thesis statement. Then, you can expand upon the outline with your own research and original thought.

Generate titles for your essays

Titles should draw a reader into your essay, yet they’re often hard to get right. Have ChatGPT help you by prompting it with, “Can you suggest five titles that would be good for a college essay about [topic]?”

The Benefits of Writing Your Essays Yourself

Asking a robot to write your essays for you may seem like an easy way to get ahead in your studies or save some time on assignments. But outsourcing your work to ChatGPT can hurt not just your grades, but also your ability to communicate and think critically. Writing your essays yourself is always the best approach.

Create your own ideas

Writing an essay yourself means that you’re developing your own thoughts, opinions, and questions about the subject matter, then testing, proving, and defending those thoughts.

When you complete school and start your career, projects aren’t simply about getting a good grade or checking a box, but can instead affect the company you’re working for — or even impact society. Being able to think for yourself is necessary to create change and not just cross work off your to-do list.

Building a foundation of original thinking and ideas now will help you carve your unique career path in the future.

Develop your critical thinking and analysis skills

In order to test or examine your opinions or questions about a subject matter, you need to analyze a problem or text, and then use your critical thinking skills to determine the argument you want to make to support your thesis. Critical thinking and analysis skills aren’t just necessary in school — they’re skills you’ll apply throughout your career and your life.

Improve your research skills

Writing your own essays will train you in how to conduct research, including where to find sources, how to determine whether they’re credible, and how relevant they are to supporting or refuting your argument. Knowing how to do research is another key skill required in a wide variety of professional fields.

Learn to be a great communicator

Writing an essay involves communicating an idea clearly to your audience, structuring an argument that a reader can follow, and making a conclusion that challenges them to think differently about a subject. Effective and clear communication is necessary in every industry.

Be impacted by what you’re learning about

Engaging with the topic, conducting your own research, and developing original arguments allows you to really learn about a subject you may not have encountered before. Maybe a simple essay assignment around a work of literature, historical time period, or scientific study will spark a passion that can lead you to a new major or career.

Resources to Improve Your Essay Writing Skills

While there are many rewards to writing your essays yourself, the act of writing an essay can still be challenging, and the process may come easier for some students than others. But essay writing is a skill that you can hone, and students at Harvard Summer School have access to a number of on-campus and online resources to assist them.

Students can start with the Harvard Summer School Writing Center, where writing tutors can offer you help and guidance on any writing assignment in one-on-one meetings. Tutors can help you strengthen your argument, clarify your ideas, improve the essay’s structure, and lead you through revisions.

The Harvard libraries are a great place to conduct your research, and their librarians can help you define your essay topic, plan and execute a research strategy, and locate sources.

Finally, review “The Harvard Guide to Using Sources,” which can guide you on what to cite in your essay and how to do it. Be sure to review the “Tips For Avoiding Plagiarism” on the “Resources to Support Academic Integrity” webpage as well to help ensure your success.

The Future of AI in the Classroom

ChatGPT and other generative AI models are here to stay, so it’s worthwhile to learn how you can leverage the technology responsibly and wisely so that it can be a tool to support your academic pursuits. However, nothing can replace the experience and achievement gained from communicating your own ideas and research in your own academic essays.

About the Author